The Nom Profit-Maximizing Operating System

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Microkernel Mechanisms for Improving the Trustworthiness of Commodity Hardware

Microkernel Mechanisms for Improving the Trustworthiness of Commodity Hardware. Yanyan Shen. Submitted in fulfilment of the requirements for the degree of Doctor of Philosophy, School of Computer Science and Engineering, Faculty of Engineering, March 2019. Thesis/Dissertation Sheet. Surname/Family Name: Shen. Given Name/s: Yanyan. Abbreviation for degree as given in the University calendar: PhD. Faculty: Faculty of Engineering. School: School of Computer Science and Engineering. Thesis Title: Microkernel Mechanisms for Improving the Trustworthiness of Commodity Hardware. Abstract: The thesis presents microkernel-based software-implemented mechanisms for improving the trustworthiness of computer systems based on commercial off-the-shelf (COTS) hardware that can malfunction when the hardware is impacted by transient hardware faults. The hardware anomalies, if undetected, can cause data corruptions, system crashes, and security vulnerabilities, significantly undermining system dependability. Specifically, we adopt the single event upset (SEU) fault model and address transient CPU or memory faults. We take advantage of the functional correctness and isolation guarantee provided by the formally verified seL4 microkernel and hardware redundancy provided by multicore processors, design the redundant co-execution (RCoE) architecture that replicates a whole software system (including the microkernel) onto different CPU cores, and implement two variants, loosely-coupled redundant co-execution (LC-RCoE) and closely-coupled redundant co-execution (CC-RCoE), for the ARM and x86 architectures. RCoE treats each replica of the software system as a state machine and ensures that the replicas start from the same initial state, observe consistent inputs, perform equivalent state transitions, and thus produce consistent outputs during error-free executions.

The Linux Command Line

The Linux Command Line, Fifth Internet Edition. William Shotts. A LinuxCommand.org Book. Copyright ©2008-2019, William E. Shotts, Jr. This work is licensed under the Creative Commons Attribution-Noncommercial-No Derivative Works 3.0 United States License. To view a copy of this license, visit the link above or send a letter to Creative Commons, PO Box 1866, Mountain View, CA 94042. A version of this book is also available in printed form, published by No Starch Press. Copies may be purchased wherever fine books are sold. No Starch Press also offers electronic formats for popular e-readers. They can be reached at: https://www.nostarch.com. Linux® is the registered trademark of Linus Torvalds. All other trademarks belong to their respective owners. This book is part of the LinuxCommand.org project, a site for Linux education and advocacy devoted to helping users of legacy operating systems migrate into the future. You may contact the LinuxCommand.org project at http://linuxcommand.org. Release History (version, date, description): 19.01A, January 28, 2019, Fifth Internet Edition (Corrected TOC); 19.01, January 17, 2019, Fifth Internet Edition; 17.10, October 19, 2017, Fourth Internet Edition; 16.07, July 28, 2016, Third Internet Edition; 13.07, July 6, 2013, Second Internet Edition; 09.12, December 14, 2009, First Internet Edition. Table of Contents: Introduction; Why Use the Command Line?

Chapter 1: Linux Operating System

CHAPTER 1: LINUX OPERATING SYSTEM. A bit of history. What is Linux? A short answer to this question is "a computer operating system based on the Linux kernel and GNU tools and libraries". To understand what Linux is, we first need to distinguish three concepts: Linux, kernel, and GNU. Formally, Linux is not an operating system; it is a kernel, a software component that works as a bridge between applications and the data processing done by the hardware. The kernel is therefore the core component of an operating system. The first Linux kernel was written by Linus Torvalds in 1991. The term Linux is usually used to refer to a whole operating system based on that kernel, but an operating system needs more components to be complete. Today there are a number of operating systems that combine the Linux kernel with a set of tools provided by the GNU project. What are GNU tools? First we should learn about the GNU project: it is a free software project started by Richard Stallman with the goal of building a set of software components and tools that avoid the use of any software that is not free. Now that we've learned about Linux, the kernel, and GNU, we can define a distribution as a composition of the Linux kernel, GNU tools, and other useful software. We've just mentioned a new concept: distribution. Have you heard about Ubuntu, Fedora, or Debian? These three are examples of Linux distributions. (Figure: the Linux mascot, Tux the penguin.)

Official User's Guide

Official User Guide, Linux Mint 18 Cinnamon Edition (52 pages). Table of Contents: Introduction to Linux Mint (History; Purpose; Version Numbers and Codenames; Editions; Where to Find Help); Installation of Linux Mint (Download the ISO; Via Torrent: Install a Torrent client, Download the Torrent file).

English 17.2.Pdf

Official User Guide, Linux Mint 17.2 MATE Edition (48 pages). Table of Contents: Introduction to Linux Mint (History; Purpose; Version Numbers and Codenames; Editions; Where to Find Help); Installation of Linux Mint (Download the ISO; Via Torrent: Install a Torrent client; Via a Download Mirror).

Cterm & Mint Utilities for Windows &

cTERM and MINT™ Utilities for Windows and DOS. Issue: 3.0. Ref: MN00XXX-000;m:\user manuals\eurosystem family\utilities\cterm\i3_0 latest release\ctmuwd.doc/ZZ/XX97. Copyright Baldor Optimised Control Ltd 1997. All rights reserved. This manual is copyrighted and all rights are reserved. This document may not, in whole or in part, be copied or reproduced in any form without the prior written consent of Baldor Optimised Control. Baldor Optimised Control makes no representations or warranties with respect to the contents hereof and specifically disclaims any implied warranties of fitness for any particular purpose. The information in this document is subject to change without notice. Baldor Optimised Control assumes no responsibility for any errors that may appear in this document. MINT™ is a registered trademark of Baldor Optimised Control Ltd. Baldor Optimised Control Ltd., 178-180 Hotwell Road, Bristol BS8 4RP, U.K. Tel: (+44) (117) 987 3100. Fax: (+44) (117) 987 3101. BBS: (+44) (117) 987 3102. Technical Support E-mail: [email protected] Manual Revision History (issue, date, reference, comments): 1.0, Mar 92, MN00106-001, first release; 1.01, n/a, MN00106-002; 2.0, Mar 96, MN00106-003, re-formatted for clarity, added section for cTERM for Windows; 2.01, Apr 96, MN00106-004, added section for Macro keys in cTERM for DOS; 3.0, Aug 97, MN00106-005, correct for cTERM for Windows v3.0. Contents: 1. Introduction; 1.1 cTERM.

Linux? POSIX? GNU/Linux? What Are They? a Short History of POSIX (Unix-Like) Operating Systems

Unix? GNU? Linux? POSIX? GNU/Linux? What are they? A short history of POSIX (Unix-like) operating systems. Mohammad Akhlaghi, Instituto de Astrofísica de Canarias (IAC), Tenerife, Spain (founder of GNU Astronomy Utilities). Most recent slides available at the link below (this PDF is built from Git commit d658621): http://akhlaghi.org/pdf/posix-family.pdf Understanding the relation between the POSIX/Unix family can be confusing. In the beginning there was ... the big bang! Fast forward to the 20th century: early computer hardware came with its custom OS (shown here: PDP-7, announced in 1964). Fast forward to the 20th century (∼1970s): AT&T had a monopoly on USA telecommunications, so it had a lot of money for exciting research: the laser, the CCD, the transistor, radio astronomy (Jansky@Bell Labs), the cosmic microwave background (Penzias@Bell Labs), etc. One of them was the Unix operating system. It was designed to run on different hardware, and the C programming language was designed for writing Unix. To keep the monopoly, AT&T wasn't allowed to profit from its other research products, so it gave out Unix for free (including source). Unix was designed to be modular (image from an AT&T promotional video in 1982: https://www.youtube.com/watch?v=tc4ROCJYbm0). The user interface was only on the command line (image from the late 80s, from stevenrosenberg.net). AT&T lost its monopoly in 1982. Bell Labs started to ask for a license from Unix users.

Installation Guide

Installation Guide, Linux Mint, Apr 09, 2019. Contents: 1 Choose the right edition; 2 Verify your ISO image; 3 Create the bootable media; 4 Boot Linux Mint; 5 Install Linux Mint; 6 Hardware drivers; 7 Multimedia codecs; 8 Language support; 9 System snapshots; 10 EFI; 11 Boot options; 12 Multi-boot; 13 Partitioning; 14 Pre-installing Linux Mint (OEM Installation); 15 Where to find help. Linux Mint comes in the form of an ISO image (an .iso file) which can be used to make a bootable DVD or a bootable USB stick. This guide will help you download the right ISO image, create your bootable media and install Linux Mint on your computer. Chapter 1: Choose the right edition. You can download Linux Mint from the Linux Mint website. Read below to choose which edition and architecture are right for you. 1.1 Cinnamon, MATE or Xfce? Linux Mint comes in 3 different flavours, each featuring a different desktop environment. Cinnamon: the most modern, innovative and full-featured desktop. MATE: a more stable and faster desktop. Xfce: the most lightweight and the most stable. The most popular version of Linux Mint is the Cinnamon edition. Cinnamon is primarily developed for and by Linux Mint. It is slick, beautiful, and full of new features. Linux Mint is also involved in the development of MATE, a classic desktop environment which is the continuation of GNOME 2, Linux Mint’s default desktop between 2006 and 2011.

Linux Mint / Ubuntu Cheat Sheet

Linux Mint / Ubuntu Cheat Sheet (www.ihaveapc.com)
Navigation:
pwd - print working directory
ls -la - list contents of directory
cd - change directory
cp -avr <path to source directory/file> <path to destination directory/file> - copy directory / file
mv <path to source directory/file> <path to destination directory/file> - move directory / file
mkdir <directory name> - create directory
touch <filename> - create / update file
rm -rf <directory / file name> - delete directory / file
Viewing:
cat <path to file> - view contents of a file
more <path to file> - view contents of a file (one screen at a time)
less <path to file> - view contents of a file (one screen at a time, with the option to scroll backwards)
nano <path to file> - create and open / open file for editing in the nano text editor
vi <path to file> - create and open / open file for editing in the vi text editor
head <path to file> - view first 10 lines of the file
tail <path to file> - view last 10 lines of the file
tail -f <path to file> - view last 10 lines of a changing file (usually a log file) in real time
watch <linux command> - watch the output of a command (refreshes every 2 seconds by default)
File Permissions:
File permissions representation: - r w x (owner) r w x (group) r w x (others)
r (read): allows the user to view the file (numerical value = 4)
w (write): allows the user to edit the file (numerical value = 2)
x (execute): allows the user to run the file as an executable (numerical value = 1)
chmod 777 <path to file> - owner, group users and other users can read, write and execute the file.
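The numeric values in the file-permissions part of the cheat sheet add up to the digits passed to chmod: each of owner, group and others gets one digit, the sum of read = 4, write = 2 and execute = 1. The short Python sketch below only illustrates that arithmetic; the names `PERMISSION_VALUES`, `octal_digit` and `chmod_mode` are made up for this example and are not part of the cheat sheet or of any tool.

```python
# Numeric values as given in the cheat sheet: read = 4, write = 2, execute = 1.
PERMISSION_VALUES = {"r": 4, "w": 2, "x": 1}


def octal_digit(perms: str) -> int:
    """Sum the values of the permissions present in a string such as 'rwx' or 'r-x'."""
    return sum(PERMISSION_VALUES[p] for p in perms if p != "-")


def chmod_mode(owner: str, group: str, others: str) -> str:
    """Build the three-digit mode string used with chmod (hypothetical helper)."""
    return f"{octal_digit(owner)}{octal_digit(group)}{octal_digit(others)}"


# rwx for everyone: 4 + 2 + 1 = 7 for each of owner, group and others.
print(chmod_mode("rwx", "rwx", "rwx"))  # 777
# Owner may read and write, everyone else may only read.
print(chmod_mode("rw-", "r--", "r--"))  # 644
```

Read this way, 777 is simply rwx three times, which is why the cheat sheet describes it as read, write and execute for owner, group users and other users.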

Popcorn Linux: Enabling Efficient Inter-Core Communication in a Linux-Based Multikernel Operating System

Popcorn Linux: enabling efficient inter-core communication in a Linux-based multikernel operating system. Benjamin H. Shelton. Thesis submitted to the Faculty of the Virginia Polytechnic Institute and State University in partial fulfillment of the requirements for the degree of Master of Science in Computer Engineering. Binoy Ravindran, Christopher Jules White, Paul E. Plassman. May 2, 2013, Blacksburg, Virginia. Keywords: operating systems, multikernel, high-performance computing, heterogeneous computing, multicore, scalability, message passing. Copyright 2013, Benjamin H. Shelton. (ABSTRACT) As manufacturers introduce new machines with more cores, more NUMA-like architectures, and more tightly integrated heterogeneous processors, the traditional abstraction of a monolithic OS running on an SMP system is encountering new challenges. One proposed path forward is the multikernel operating system. Previous efforts have shown promising results both in scalability and in support for heterogeneity. However, one effort’s source code is not freely available (FOS), and the other effort is not self-hosting and does not support a majority of existing applications (Barrelfish). In this thesis, we present Popcorn, a Linux-based multikernel operating system. While Popcorn was a group effort, the boot layer code and the memory partitioning code are the author's work, and we present them in detail here. To our knowledge, we are the first to support multiple instances of the Linux kernel on a 64-bit x86 machine and to support more than 4 kernels running simultaneously. We demonstrate that existing subsystems within Linux can be leveraged to meet the design goals of a multikernel OS.

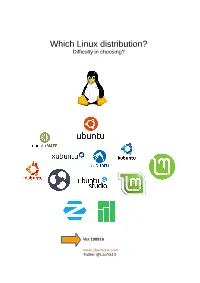

Which Linux Distribution? Difficulty in Choosing?

Which Linux distribution? Difficulty in choosing? Ver 190916. www.ubuntutor.com, Twitter @LaoYa14. Contents: That's enough; At first; At first little about Linux world; Quick start guide for choosing the right distro for beginners; Basic information; "Linux tree"; Basic information; Questions on the web site; Distros; App store; Ubuntu 16.04 and 18.04; Ubuntu MATE; Lubuntu; Ubuntu Budgie; Kubuntu; Xubuntu; Linux Mint; Zorin; MX Linux; Peppermint; Deepin; Arch Linux; Manjaro; Ubuntu Kylin; Ubuntu Studio; Kali Linux; Edubuntu; Desktop environments for Linux; File manager NEMO; File manager NAUTILUS; Installing Ubuntu live USB (test drive). That's enough. When your laptop is old and runs Windows XP, what can you do? You can install Ubuntu MATE on the old laptop and keep Windows XP alongside it, if you like XP. Or you can buy a small new laptop for about 200-300 €/$ and replace Windows 10 with Ubuntu. It works! I did both about three years ago, and I haven't used Windows since then. My own laptop is a cheap HP Stream 4 MB/32 GB. When I was studying Ubuntu, I noticed that simple beginner's guide books were not available. So I wrote a guide book. I also created a website and named it www.ubuntutor.com. It currently includes Ubuntu 16.04 and 18.04 tutorials. This guide is the third one.

Baldor Mint Software and Applications

Mint® Automation Software and Applications. Courtesy of Steven Engineering, Inc., 230 Ryan Way, South San Francisco, CA 94080-6370. Main Office: (650) 588-9200. Outside Local Area: (800) 258-9200. www.stevenengineering.com
Mint®: The Real-Time Automation Language
> High speed compiled BASIC programming language for motion and machine control
> Multitasking capability for motion, I/O, HMI and communication tasks. Allows complex applications to be broken down into simpler, more manageable sub-tasks
> Modular programming capability, including functions and subroutines, allows for code re-use and ease of debugging
> Common programming interface for both Baldor’s NextMove and intelligent drives reduces the learning curve
> Comprehensive library of motion types including interpolated moves, CAM profiling, flying shears, gearing and more
> Comprehensive Windows® tools including color keyword highlighting in the editor, software oscilloscope, online help, drive configuration wizard and auto-tuning
> ActiveX® components, supplied free of charge, aid in the development of Windows® front end applications
BASIC: the de facto Programming Language
With nearly two decades of history, Mint® was designed from the outset around the BASIC programming language. It was understood then, and it is still true today, that BASIC is the de facto programming language around the world. Now in its fifth generation, Mint fully embraces all the modern BASIC programming functionality including features such as multitasking, functions and procedures, data types and local data. This functionality makes it simple to write and develop modular programs that are easily understood by others, easily maintained and easily re-used across different applications. Mint’s new Code Library makes code re-use even simpler by allowing snippets of code to be stored and used for other projects.