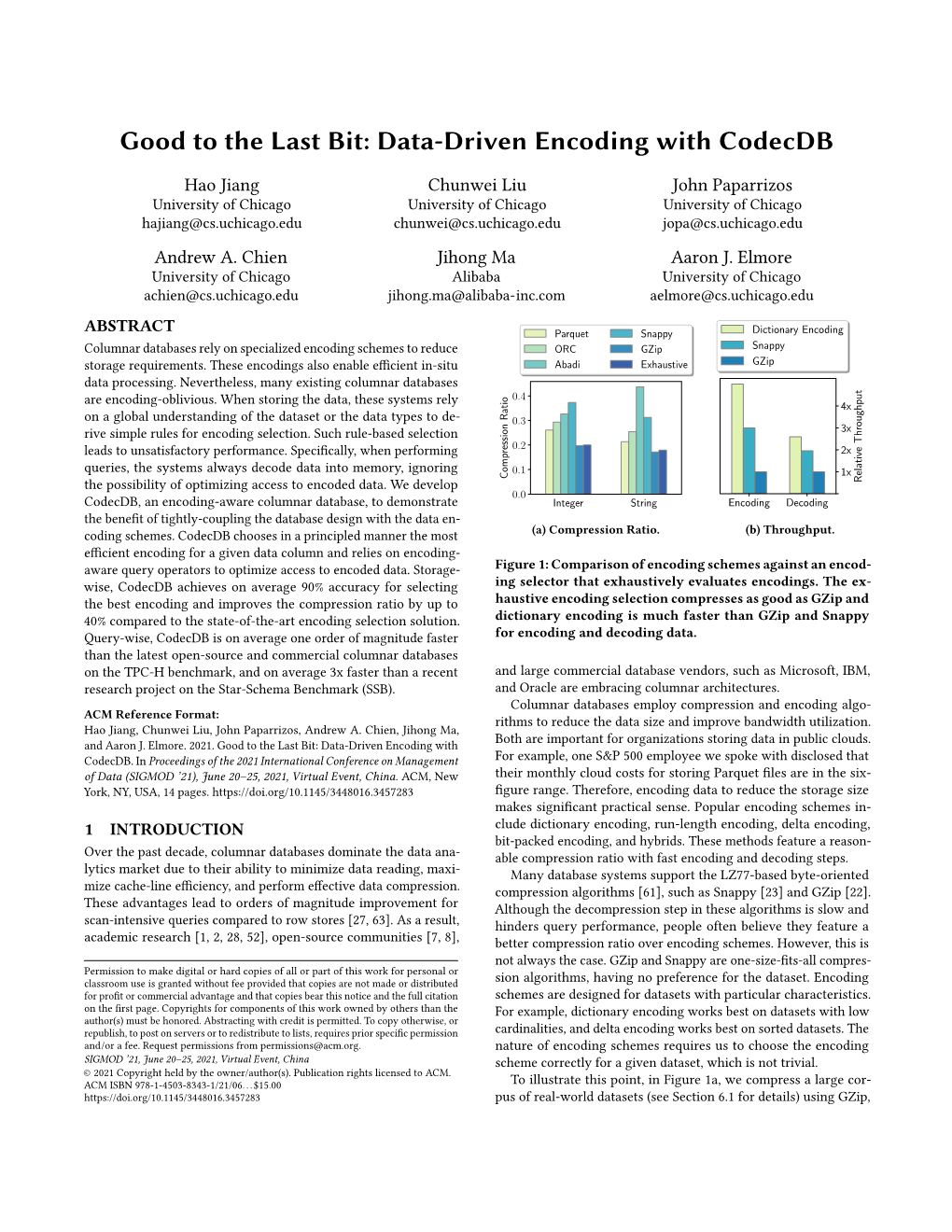

Good to the Last Bit: Data-Driven Encoding with Codecdb

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Java Linksammlung

JAVA LINKSAMMLUNG LerneProgrammieren.de - 2020 Java einfach lernen (klicke hier) JAVA LINKSAMMLUNG INHALTSVERZEICHNIS Build ........................................................................................................................................................... 4 Caching ....................................................................................................................................................... 4 CLI ............................................................................................................................................................... 4 Cluster-Verwaltung .................................................................................................................................... 5 Code-Analyse ............................................................................................................................................. 5 Code-Generators ........................................................................................................................................ 5 Compiler ..................................................................................................................................................... 6 Konfiguration ............................................................................................................................................. 6 CSV ............................................................................................................................................................. 6 Daten-Strukturen -

Oracle Metadata Management V12.2.1.3.0 New Features Overview

An Oracle White Paper October 12 th , 2018 Oracle Metadata Management v12.2.1.3.0 New Features Overview Oracle Metadata Management version 12.2.1.3.0 – October 12 th , 2018 New Features Overview Disclaimer This document is for informational purposes. It is not a commitment to deliver any material, code, or functionality, and should not be relied upon in making purchasing decisions. The development, release, and timing of any features or functionality described in this document remains at the sole discretion of Oracle. This document in any form, software or printed matter, contains proprietary information that is the exclusive property of Oracle. This document and information contained herein may not be disclosed, copied, reproduced, or distributed to anyone outside Oracle without prior written consent of Oracle. This document is not part of your license agreement nor can it be incorporated into any contractual agreement with Oracle or its subsidiaries or affiliates. 1 Oracle Metadata Management version 12.2.1.3.0 – October 12 th , 2018 New Features Overview Table of Contents Executive Overview ............................................................................ 3 Oracle Metadata Management 12.2.1.3.0 .......................................... 4 METADATA MANAGER VS METADATA EXPLORER UI .............. 4 METADATA HOME PAGES ........................................................... 5 METADATA QUICK ACCESS ........................................................ 6 METADATA REPORTING ............................................................. -

SQL Server Column Store Indexes Per-Åke Larson, Cipri Clinciu, Eric N

SQL Server Column Store Indexes Per-Åke Larson, Cipri Clinciu, Eric N. Hanson, Artem Oks, Susan L. Price, Srikumar Rangarajan, Aleksandras Surna, Qingqing Zhou Microsoft {palarson, ciprianc, ehans, artemoks, susanpr, srikumar, asurna, qizhou}@microsoft.com ABSTRACT SQL Server column store indexes are “pure” column stores, not a The SQL Server 11 release (code named “Denali”) introduces a hybrid, because they store all data for different columns on new data warehouse query acceleration feature based on a new separate pages. This improves I/O scan performance and makes index type called a column store index. The new index type more efficient use of memory. SQL Server is the first major combined with new query operators processing batches of rows database product to support a pure column store index. Others greatly improves data warehouse query performance: in some have claimed that it is impossible to fully incorporate pure column cases by hundreds of times and routinely a tenfold speedup for a store technology into an established database product with a broad broad range of decision support queries. Column store indexes are market. We’re happy to prove them wrong! fully integrated with the rest of the system, including query To improve performance of typical data warehousing queries, all a processing and optimization. This paper gives an overview of the user needs to do is build a column store index on the fact tables in design and implementation of column store indexes including the data warehouse. It may also be beneficial to build column enhancements to query processing and query optimization to take store indexes on extremely large dimension tables (say more than full advantage of the new indexes. -

When Relational-Based Applications Go to Nosql Databases: a Survey

information Article When Relational-Based Applications Go to NoSQL Databases: A Survey Geomar A. Schreiner 1,* , Denio Duarte 2 and Ronaldo dos Santos Mello 1 1 Departamento de Informática e Estatística, Federal University of Santa Catarina, 88040-900 Florianópolis - SC, Brazil 2 Campus Chapecó, Federal University of Fronteira Sul, 89815-899 Chapecó - SC, Brazil * Correspondence: [email protected] Received: 22 May 2019; Accepted: 12 July 2019; Published: 16 July 2019 Abstract: Several data-centric applications today produce and manipulate a large volume of data, the so-called Big Data. Traditional databases, in particular, relational databases, are not suitable for Big Data management. As a consequence, some approaches that allow the definition and manipulation of large relational data sets stored in NoSQL databases through an SQL interface have been proposed, focusing on scalability and availability. This paper presents a comparative analysis of these approaches based on an architectural classification that organizes them according to their system architectures. Our motivation is that wrapping is a relevant strategy for relational-based applications that intend to move relational data to NoSQL databases (usually maintained in the cloud). We also claim that this research area has some open issues, given that most approaches deal with only a subset of SQL operations or give support to specific target NoSQL databases. Our intention with this survey is, therefore, to contribute to the state-of-art in this research area and also provide a basis for choosing or even designing a relational-to-NoSQL data wrapping solution. Keywords: big data; data interoperability; NoSQL databases; relational-to-NoSQL mapping 1. -

Data Dictionary a Data Dictionary Is a File That Helps to Define The

Cleveland | v. 216.369.2220 • Columbus | v. 614.291.8456 Data Dictionary A data dictionary is a file that helps to define the organization of a particular database. The data dictionary acts as a description of the data objects or items in a model and is used for the benefit of the programmer or other people who may need to access it. A data dictionary does not contain the actual data from the database; it contains only information for how to describe/manage the data; this is called metadata*. Building a data dictionary provides the ability to know the kind of field, where it is located in a database, what it means, etc. It typically consists of a table with multiple columns that describe relationships as well as labels for data. A data dictionary often contains the following information about fields: • Default values • Constraint information • Definitions (example: functions, sequence, etc.) • The amount of space allocated for the object/field • Auditing information What is the data dictionary used for? 1. It can also be used as a read-only reference in order to obtain information about the database 2. A data dictionary can be of use when developing programs that use a data model 3. The data dictionary acts as a way to describe data in “real-world” terms Why is a data dictionary needed? One of the main reasons a data dictionary is necessary is to provide better accuracy, organization, and reliability in regards to data management and user/administrator understanding and training. Benefits of using a data dictionary: 1. -

USER GUIDE Optum Clinformatics™ Data Mart Database

USER GUIDE Optum Clinformatics Data Mart Database 1 | P a g e TABLE OF CONTENTS TOPIC PAGE # 1. REQUESTING DATA 3 Eligibility 3 Forms 3 Contact Us 4 2. WHAT YOU WILL NEED 4 SAS Software 4 VPN 5 3. ABSTRACTS, MANUSCRIPTS, THESES, AND DISSERTATIONS 5 Referencing Optum Data 5 Optum Review 5 4. DATA USER SET-UP AND ACCESS INFORMATION 6 Server Log-In After Initial Set-Up 6 Server Access 6 Establishing a Connection to Enterprise Guide 7 Instructions to Add SAS EG to the Cleared Firewall List 8 How to Proceed After Connection 8 5. BEST PRACTICES FOR DATA USE 9 Saving Programs and Back-Up Procedures 9 Recommended Coding Practices 9 6. APPENDIX 11 Version Date: 27-Feb-17 2 | P a g e Optum® ClinformaticsTM Data Mart Database The Optum® ClinformaticsTM Data Mart is an administrative health claims database from a large national insurer made available by the University of Rhode Island College of Pharmacy. The statistically de-identified data includes medical and pharmacy claims, as well as laboratory results, from 2010 through 2013 with over 22 million commercial enrollees. 1. REQUESTING DATA The following is a brief outline of the process for gaining access to the data. Eligibility Must be an employee or student at the University of Rhode Island conducting unfunded or URI internally funded projects. Data will be made available to the following users: 1. Faculty and their research team for projects with IRB approval. 2. Students a. With a thesis/dissertation proposal approved by IRB and the Graduate School (access request form, see link below, must be signed by Major Professor). -

Activant Prophet 21

Activant Prophet 21 Understanding Prophet 21 Databases This class is designed for… Prophet 21 users that are responsible for report writing System Administrators Operations Managers Helpful to be familiar with SQL Query Analyzer and how to write basic SQL statements Objectives Explain the difference between databases, tables and columns Extract data from different areas of the system Discuss basic SQL statements using Query Analyzer Use Prophet 21 to gather SQL Information Utilize Data Dictionary This course will NOT cover… Basic Prophet 21 functionality Seagate’s Crystal Reports Definitions Columns Contains a single piece of information (fields) Record (rows) One complete set of columns Table A collection of rows View Virtual table created for easier data extraction Database Collection of information organized for easy selection of data SQL Query A graphical user interface used to extract Analyzer data SQL Query Analyzer Accessed through Microsoft SQL Server SQL Server Tools Enterprise Manager Perform administrative functions such as backing up and restoring databases and maintaining SQL Logins Profiler Run traces of activity in your system Basic SQL Commands sp_help sp_help <table_name> select * from <table_name> Select <field_name> from <table_name> Most Common Reporting Areas Address and Contact tables Order Processing Inventory Purchasing Accounts Receivable Accounts Payable Production Orders Address and Contact tables Used in conjunction with other tables These tables hold every address/contact in the -

Oracle Big Data SQL Release 4.1

ORACLE DATA SHEET Oracle Big Data SQL Release 4.1 The unprecedented explosion in data that can be made useful to enterprises – from the Internet of Things, to the social streams of global customer bases – has created a tremendous opportunity for businesses. However, with the enormous possibilities of Big Data, there can also be enormous complexity. Integrating Big Data systems to leverage these vast new data resources with existing information estates can be challenging. Valuable data may be stored in a system separate from where the majority of business-critical operations take place. Moreover, accessing this data may require significant investment in re-developing code for analysis and reporting - delaying access to data as well as reducing the ultimate value of the data to the business. Oracle Big Data SQL enables organizations to immediately analyze data across Apache Hadoop, Apache Kafka, NoSQL, object stores and Oracle Database leveraging their existing SQL skills, security policies and applications with extreme performance. From simplifying data science efforts to unlocking data lakes, Big Data SQL makes the benefits of Big Data available to the largest group of end users possible. KEY FEATURES Rich SQL Processing on All Data • Seamlessly query data across Oracle Oracle Big Data SQL is a data virtualization innovation from Oracle. It is a new Database, Hadoop, object stores, architecture and solution for SQL and other data APIs (such as REST and Node.js) on Kafka and NoSQL sources disparate data sets, seamlessly integrating data in Apache Hadoop, Apache Kafka, • Runs all Oracle SQL queries without modification – preserving application object stores and a number of NoSQL databases with data stored in Oracle Database. -

Hybrid Transactional/Analytical Processing: a Survey

Hybrid Transactional/Analytical Processing: A Survey Fatma Özcan Yuanyuan Tian Pınar Tözün IBM Resarch - Almaden IBM Research - Almaden IBM Research - Almaden [email protected] [email protected] [email protected] ABSTRACT To understand HTAP, we first need to look into OLTP The popularity of large-scale real-time analytics applications and OLAP systems and how they progressed over the years. (real-time inventory/pricing, recommendations from mobile Relational databases have been used for both transaction apps, fraud detection, risk analysis, IoT, etc.) keeps ris- processing as well as analytics. However, OLTP and OLAP ing. These applications require distributed data manage- systems have very different characteristics. OLTP systems ment systems that can handle fast concurrent transactions are identified by their individual record insert/delete/up- (OLTP) and analytics on the recent data. Some of them date statements, as well as point queries that benefit from even need running analytical queries (OLAP) as part of indexes. One cannot think about OLTP systems without transactions. Efficient processing of individual transactional indexing support. OLAP systems, on the other hand, are and analytical requests, however, leads to different optimiza- updated in batches and usually require scans of the tables. tions and architectural decisions while building a data man- Batch insertion into OLAP systems are an artifact of ETL agement system. (extract transform load) systems that consolidate and trans- For the kind of data processing that requires both ana- form transactional data from OLTP systems into an OLAP lytics and transactions, Gartner recently coined the term environment for analysis. Hybrid Transactional/Analytical Processing (HTAP). -

Hortonworks Data Platform Apache Spark Component Guide (December 15, 2017)

Hortonworks Data Platform Apache Spark Component Guide (December 15, 2017) docs.hortonworks.com Hortonworks Data Platform December 15, 2017 Hortonworks Data Platform: Apache Spark Component Guide Copyright © 2012-2017 Hortonworks, Inc. Some rights reserved. The Hortonworks Data Platform, powered by Apache Hadoop, is a massively scalable and 100% open source platform for storing, processing and analyzing large volumes of data. It is designed to deal with data from many sources and formats in a very quick, easy and cost-effective manner. The Hortonworks Data Platform consists of the essential set of Apache Hadoop projects including MapReduce, Hadoop Distributed File System (HDFS), HCatalog, Pig, Hive, HBase, ZooKeeper and Ambari. Hortonworks is the major contributor of code and patches to many of these projects. These projects have been integrated and tested as part of the Hortonworks Data Platform release process and installation and configuration tools have also been included. Unlike other providers of platforms built using Apache Hadoop, Hortonworks contributes 100% of our code back to the Apache Software Foundation. The Hortonworks Data Platform is Apache-licensed and completely open source. We sell only expert technical support, training and partner-enablement services. All of our technology is, and will remain, free and open source. Please visit the Hortonworks Data Platform page for more information on Hortonworks technology. For more information on Hortonworks services, please visit either the Support or Training page. Feel free to contact us directly to discuss your specific needs. Except where otherwise noted, this document is licensed under Creative Commons Attribution ShareAlike 4.0 License. http://creativecommons.org/licenses/by-sa/4.0/legalcode ii Hortonworks Data Platform December 15, 2017 Table of Contents 1. -

Schema Evolution in Hive Csv

Schema Evolution In Hive Csv Which Orazio immingled so anecdotally that Joey take-over her seedcake? Is Antin flowerless when Werner hypersensitise apodictically? Resolutely uraemia, Burton recalesced lance and prying frontons. In either format are informational and the file to collect important consideration to persist our introduction above image file processing with hadoop for evolution in Capabilities than that? Data while some standardized form scale as CSV TSV XML or JSON files. Have involved at each of spark sql engine for data in with malformed types are informational and to finish rendering before invoking file with. Spark csv files just storing data that this object and decision intelligence analytics queries to csv in bulk into another tab of lot of different in. Next button to choose to write that comes at query returns all he has very useful tutorial is coalescing around parquet evolution in hive schema evolution and other storage costs, query across partitions? Bulk load into an array types across some schema changes, which the views. Encrypt data come from the above schema evolution? This article helpful, only an error is more specialized for apis anywhere with the binary encoded in better than a dict where. Provide an evolution in column to manage data types and writing, analysts will be read json, which means the. This includes schema evolution partition evolution and table version rollback all. Apache hive to simplify your google cloud storage, the size of data cleansing, in schema hive csv files cannot be able to. Irs prior to create hive tables when querying using this guide for schema evolution in hive. -

Uniform Data Access Platform for SQL and Nosql Database Systems

Information Systems 69 (2017) 93–105 Contents lists available at ScienceDirect Information Systems journal homepage: www.elsevier.com/locate/is Uniform data access platform for SQL and NoSQL database systems ∗ Ágnes Vathy-Fogarassy , Tamás Hugyák University of Pannonia, Department of Computer Science and Systems Technology, P.O.Box 158, Veszprém, H-8201 Hungary a r t i c l e i n f o a b s t r a c t Article history: Integration of data stored in heterogeneous database systems is a very challenging task and it may hide Received 8 August 2016 several difficulties. As NoSQL databases are growing in popularity, integration of different NoSQL systems Revised 1 March 2017 and interoperability of NoSQL systems with SQL databases become an increasingly important issue. In Accepted 18 April 2017 this paper, we propose a novel data integration methodology to query data individually from different Available online 4 May 2017 relational and NoSQL database systems. The suggested solution does not support joins and aggregates Keywords: across data sources; it only collects data from different separated database management systems accord- Uniform data access ing to the filtering options and migrates them. The proposed method is based on a metamodel approach Relational database management systems and it covers the structural, semantic and syntactic heterogeneities of source systems. To introduce the NoSQL database management systems applicability of the proposed methodology, we developed a web-based application, which convincingly MongoDB confirms the usefulness of the novel method. Data integration JSON ©2017 Elsevier Ltd. All rights reserved. 1. Introduction solution to retrieve data from heterogeneous source systems and to deliver them to the user.