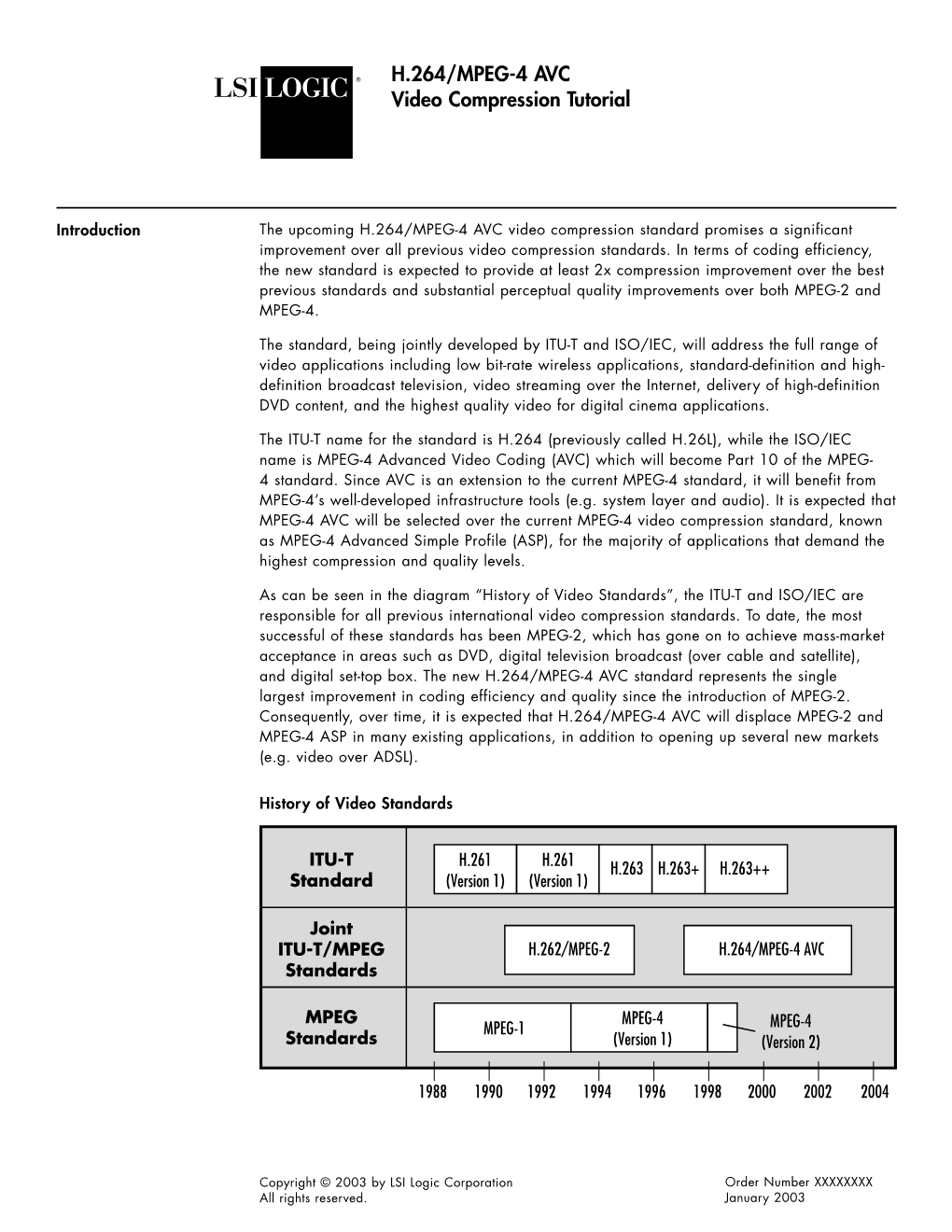

H.264/MPEG-4 AVC Video Compression Tutorial

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

The Microsoft Office Open XML Formats New File Formats for “Office 12”

The Microsoft Office Open XML Formats New File Formats for “Office 12” White Paper Published: June 2005 For the latest information, please see http://www.microsoft.com/office/wave12 Contents: Introduction; From .doc to .docx: a brief history of the Office file formats; Benefits of the Microsoft Office Open XML Formats; Integration with Business Data; Openness and Transparency; Robustness; Description of the Microsoft Office Open XML Format; Document Parts; Microsoft Office Open XML Format specifications; Compatibility with new file formats; For more information -

ARCHIVE ONLY: DCI Digital Cinema System Specification, Version 1.2

Digital Cinema Initiatives, LLC Digital Cinema System Specification Version 1.2 March 07, 2008 Approval March 07, 2008 Digital Cinema Initiatives, LLC, Member Representatives Committee Copyright © 2005-2008 Digital Cinema Initiatives, LLC DCI Digital Cinema System Specification v.1.2 Page 1 NOTICE Digital Cinema Initiatives, LLC (DCI) is the author and creator of this specification for the purpose of copyright and other laws in all countries throughout the world. The DCI copyright notice must be included in all reproductions, whether in whole or in part, and may not be deleted or attributed to others. DCI hereby grants to its members and their suppliers a limited license to reproduce this specification for their own use, provided it is not sold. Others should obtain permission to reproduce this specification from Digital Cinema Initiatives, LLC. This document is a specification developed and adopted by Digital Cinema Initiatives, LLC. This document may be revised by DCI. It is intended solely as a guide for companies interested in developing products, which can be compatible with other products, developed using this document. Each DCI member company shall decide independently the extent to which it will utilize, or require adherence to, these specifications. DCI shall not be liable for any exemplary, incidental, proximate or consequential damages or expenses arising from the use of this document. This document defines only one approach to compatibility, and other approaches may be available to the industry. This document is an authorized and approved publication of DCI. Only DCI has the right and authority to revise or change the material contained in this document, and any revisions by any party other than DCI are unauthorized and prohibited. -

“Why ODF?” - The Importance of OpenDocument Format for Governments

“Why ODF?” - The Importance of OpenDocument Format for Governments Documents are the life blood of modern governments and their citizens. Governments use documents to capture knowledge, store critical information, coordinate activities, measure results, and communicate across departments and with businesses and citizens. Increasingly documents are moving from paper to electronic form. To adapt to ever-changing technology and business processes, governments need assurance that they can access, retrieve and use critical records, now and in the future. OpenDocument Format (ODF) addresses these issues by standardizing file formats to give governments true control over their documents. Governments using applications that support ODF gain increased efficiencies, more flexibility and greater technology choice, leading to enhanced capability to communicate with and serve the public. ODF is the ISO Approved International Open Standard for File Formats: ODF is the only open standard for office applications, and it is completely vendor neutral. Developed through a transparent, multi-vendor/multi-stakeholder process at OASIS (Organization for the Advancement of Structured Information Standards), it is an open, XML-based document file format for displaying, storing and editing office documents, such as spreadsheets, charts, and presentations. It is available for implementation and use free from any licensing, royalty payments, or other restrictions. In May 2006, it was approved unanimously as an International Organization for Standardization (ISO) and International Electrotechnical Commission (IEC) standard. Governments and Businesses are Embracing ODF: The promotion and usage of ODF is growing rapidly, demonstrating the global need for control and choice in document applications. For example, many enlightened governments across the globe are making policy decisions to move to ODF. -

MPEG-21 Overview

MPEG-21 Overview Xin Wang Dept. Computer Science, University of Southern California Workshop on New Multimedia Technologies and Applications, Xi’An, China October 31, 2009 Agenda ● What is MPEG-21 ● MPEG-21 Standards ● Benefits ● An Example MPEG Standards ● MPEG develops standards for digital representation of audio and visual information ● So far ● MPEG-1: low resolution video/stereo audio ● E.g., Video CD (VCD) and Personal music use (MP3) ● MPEG-2: digital television/multichannel audio ● E.g., Digital recording (DVD) ● MPEG-4: generic video and audio coding ● E.g., MP4, AVC (H.264) ● MPEG-7: visual, audio and multimedia descriptors ● MPEG-21: multimedia framework ● MPEG-A: multimedia application format ● MPEG-B, -C, -D: systems, video and audio standards ● MPEG-M: Multimedia Extensible Middleware ● MPEG-V: virtual worlds ● MPEG-U: UI ● (29116): Supplemental Media Technologies ● (Much) more to come … What is MPEG-21? ● An open framework for multimedia delivery and consumption ● History: conceived in 1999, first few parts ready early 2002, most parts done by now, some amendment and profiling works ongoing ● Purpose: enable all-electronic creation, trade, delivery, and consumption of digital multimedia content ● Goals: ● “Transparent” usage ● Interoperable systems ● Provides normative methods for: ● Content identification and description ● Rights management and protection ● Adaptation of content ● Processing on and for the various elements of the content ● Evaluation methods for determining the appropriateness of possible persistent association of information ● etc. -

CP750 Digital Cinema Processor the Latest in Sound Processing—From Dolby Digital Cinema

CP750 Digital Cinema Processor The Latest in Sound Processing—from Dolby Digital Cinema The CP750 is Dolby’s latest cinema processor specifically designed to work within the new digital cinema environment. The CP750 receives and processes audio from multiple digital audio sources and can be monitored and controlled from anywhere in the network. Plus, Dolby reliability ensures a great moviegoing experience every time. The Dolby® CP750 provides easy-to-operate audio control in digital cinema environments equipped with the latest technology while integrating seamlessly with existing technologies. The CP750 supports the innovative Dolby Surround 7.1 premium surround sound format, and will receive and process audio from multiple digital audio sources, including a digital cinema server, preshow servers, and alternative content sources. The CP750 is NOC (network operations center) ready and can be monitored and controlled anywhere on the network for status and function. The CP750 integrates with Dolby Show Manager software, enabling it to process digital input selection and volume cues within a show, real-time volume control from any Dolby Show Manager client, and ASCII commands from third-party controllers. Moreover, Dolby Surround 7.1 (D-cinema audio), 5.1 digital PCM (D-cinema audio), Dolby Digital Surround EX™ (bitstream), Dolby Digital (bitstream), Dolby Pro Logic® II, and Dolby Pro Logic decoding are all included to deliver the best in surround sound from all content sources. CP750 Digital Cinema Processor Audio Inputs Other Inputs/Outputs -

Dolby Surround 7.1 for Theatres

Dolby Surround 7.1 Technical Information for Theatres Dolby® has partnered with Walt Disney Pictures and Pixar Animation Studios to deliver Toy Story 3 in Dolby Surround 7.1 audio format to suitably equipped 3D cinemas in selected countries. Dolby Surround 7.1 is a new audio format for cinema, supported in Dolby CP650 and CP750 Digital Cinema Processors, that increases the number of discrete surround channels to add more definition to the existing 5.1 surround array. This document provides an overview of the Dolby Surround 7.1 format, and the effect that it may have on theatre equipment and content. Full technical details of cabling requirements and software versions are provided in appropriate Dolby field bulletins. Table 1 lists the channel names and abbreviations used in this document. Table 1 Channel Abbreviations (Channel Name: Abbreviation): Left: L; Center: C; Right: R; Left Surround: Ls; Right Surround: Rs; Low-Frequency Effects: LFE; Back Surround Left: Bsl; Back Surround Right: Bsr; Hearing Impaired: HI; Visually Impaired-Narrative (Audio Description): VI-N. 1 Theatre Channel Configuration Two new discrete channels are added in the theatre, Back Surround Left (Bsl) and Back Surround Right (Bsr) as shown in Figure 1. Use of these additional surround channels provides greater flexibility in audio placement to tie in with 3D visuals, and can also enhance the surround definition with 2D content. Dolby and the double-D symbol are registered trademarks of Dolby Laboratories. Surround EX is a trademark of Dolby Laboratories. © 2010 Dolby Laboratories. All rights reserved. S10/22805 Dolby Laboratories, Inc. 100 Potrero Avenue San Francisco, CA 94103-4813 USA 415-558-0200 415-645-4175 dolby.com Figure 1 Dolby Surround 7.1 (Surround Channel Layout) Existing theatres that are wired for Dolby Digital Surround EX™ will already have the appropriate wiring and amplification for these channels. -

Versatile Video Coding – the Next-Generation Video Standard of the Joint Video Experts Team

Versatile Video Coding – The Next-Generation Video Standard of the Joint Video Experts Team Mile High Video Workshop, Denver July 31, 2018 Gary J. Sullivan, JVET co-chair Acknowledgement: Presentation prepared with Jens-Rainer Ohm and Mathias Wien, Institute of Communication Engineering, RWTH Aachen University 1. Introduction Video coding standardization organisations • ISO/IEC MPEG = “Moving Picture Experts Group” (ISO/IEC JTC 1/SC 29/WG 11 = International Standardization Organization and International Electrotechnical Commission, Joint Technical Committee 1, Subcommittee 29, Working Group 11) • ITU-T VCEG = “Video Coding Experts Group” (ITU-T SG16/Q6 = International Telecommunications Union – Telecommunications Standardization Sector (ITU-T, a United Nations Organization, formerly CCITT), Study Group 16, Working Party 3, Question 6) • JVT = “Joint Video Team” collaborative team of MPEG & VCEG, responsible for developing AVC (discontinued in 2009) • JCT-VC = “Joint Collaborative Team on Video Coding” team of MPEG & VCEG, responsible for developing HEVC (established January 2010) • JVET = “Joint Video Experts Team” responsible for developing VVC (established Oct. 2015) – previously called “Joint Video Exploration Team” History of international video coding standardization -

Paramount Theatre Sherry Lansing Theatre Screening Room #5 Marathon Theatre Gower Theatre

PARAMOUNT THEATRE SHERRY LANSING THEATRE SCREENING ROOM #5 MARATHON THEATRE GOWER THEATRE With rooms that seat from 33 to 516 people, The Studios at Paramount has a screening room to accommodate an intimate screening with your production team, a full premiere gala, or anything in between. We also offer a complete range of projection and audio equipment to handle any feature, including 2K, 4K DLP projection in 2D and 3D, as well as 35mm and 70mm film projection. On top of that, all our theaters are staffed with skilled projectionists and exceptional engineering teams, to give you a perfect presentation every time. PARAMOUNT THEATRE CUTTING-EDGE FEATURES, LAVISH DESIGN, PERFECT FOR PREMIERES FEATURES • VIP Green Room • Multimedia Capabilities • Huge Rotunda Lobby • Performance Stage in front of Screen • Reception Area • Ample Parking and Valet Service SPECIFICATIONS • 4K – Barco DP4K-60L • 2K – Christie CP2230 • 35mm and 70mm Norelco AA II Film Projection • Dolby Surround 7.1 • 16-Channel Mackie Mixer 1604-VLZ4 • Screen: 51’ x 24’ - Stewart White Ultra Matt 150-SP CAPACITY • Seats 516 DIGITAL CINEMA PROJECTION • DCP - Barco Alchemy ICMP • DCP – Doremi DCP-2K4 • XpanD Active 3D System • Barco Passive 3D System • Avid Media Composer • HDCAM SR and D5 • Blu-ray and DVD • 8 Sennheiser Wireless Microphones – Hand-held and Lavalier • 10 Clear-Com Tempest 2400 RF PL • PIX ADDITIONAL SERVICES AVAILABLE • Catering • Event Planning • Security SCREENING ROOMS SHERRY LANSING THEATRE THE ULTIMATE REFERENCE -

Quadtree Based JBIG Compression

Quadtree Based JBIG Compression B. Fowler R. Arps A. El Gamal D. Yang ISL, Stanford University, Stanford, CA 94305-4055 ffowler,arps,abbas,[email protected] Abstract A JBIG compliant, quadtree based, lossless image compression algorithm is described. In terms of the number of arithmetic coding operations required to code an image, this algorithm is significantly faster than previous JBIG algorithm variations. Based on this criterion, our algorithm achieves an average speed increase of more than 9 times with only a 5% decrease in compression when tested on the eight CCITT bi-level test images and compared against the basic non-progressive JBIG algorithm. The fastest JBIG variation that we know of, using “PRES” resolution reduction and progressive buildup, achieved an average speed increase of less than 6 times with a 7% decrease in compression, under the same conditions. 1 Introduction In facsimile applications it is desirable to integrate a bilevel image sensor with lossless compression on the same chip. Such integration would lower power consumption, improve reliability, and reduce system cost. To reap these benefits, however, the selection of the compression algorithm must take into consideration the implementation tradeoffs introduced by integration. On the one hand, integration enhances the possibility of parallelism which, if properly exploited, can speed up compression. On the other hand, the compression circuitry cannot be too complex because of limitations on the available chip area. Moreover, most of the chip area on a bilevel image sensor must be occupied by photodetectors, leaving only the edges for digital logic. -

OASIS CGM Open WebCGM V2.1

WebCGM Version 2.1 OASIS Standard 01 March 2010 Specification URIs: This Version: XHTML multi-file: http://docs.oasis-open.org/webcgm/v2.1/os/webcgm-v2.1-index.html (AUTHORITATIVE) PDF: http://docs.oasis-open.org/webcgm/v2.1/os/webcgm-v2.1.pdf XHTML ZIP archive: http://docs.oasis-open.org/webcgm/v2.1/os/webcgm-v2.1.zip Previous Version: XHTML multi-file: http://docs.oasis-open.org/webcgm/v2.1/cs02/webcgm-v2.1-index.html (AUTHORITATIVE) PDF: http://docs.oasis-open.org/webcgm/v2.1/cs02/webcgm-v2.1.pdf XHTML ZIP archive: http://docs.oasis-open.org/webcgm/v2.1/cs02/webcgm-v2.1.zip Latest Version: XHTML multi-file: http://docs.oasis-open.org/webcgm/v2.1/latest/webcgm-v2.1-index.html PDF: http://docs.oasis-open.org/webcgm/v2.1/latest/webcgm-v2.1.pdf XHTML ZIP archive: http://docs.oasis-open.org/webcgm/v2.1/latest/webcgm-v2.1.zip Declared XML namespaces: http://www.cgmopen.org/schema/webcgm/ System Identifier: http://docs.oasis-open.org/webcgm/v2.1/webcgm21.dtd Technical Committee: OASIS CGM Open WebCGM TC Chair(s): Stuart Galt, The Boeing Company Editor(s): Benoit Bezaire, PTC Lofton Henderson, Individual Related Work: This specification updates: WebCGM 2.0 OASIS Standard (and W3C Recommendation) This specification, when completed, will be identical in technical content to: WebCGM 2.1 W3C Recommendation, available at http://www.w3.org/TR/webcgm21/. Abstract: Computer Graphics Metafile (CGM) is an ISO standard, defined by ISO/IEC 8632:1999, for the interchange of 2D vector and mixed vector/raster graphics. -

Digital Cinema System Specification Version 1.3

Digital Cinema System Specification Version 1.3 Approved 27 June 2018 Digital Cinema Initiatives, LLC, Member Representatives Committee Copyright © 2005-2018 Digital Cinema Initiatives, LLC DCI Digital Cinema System Specification v. 1.3 Page | 1 NOTICE Digital Cinema Initiatives, LLC (DCI) is the author and creator of this specification for the purpose of copyright and other laws in all countries throughout the world. The DCI copyright notice must be included in all reproductions, whether in whole or in part, and may not be deleted or attributed to others. DCI hereby grants to its members and their suppliers a limited license to reproduce this specification for their own use, provided it is not sold. Others should obtain permission to reproduce this specification from Digital Cinema Initiatives, LLC. This document is a specification developed and adopted by Digital Cinema Initiatives, LLC. This document may be revised by DCI. It is intended solely as a guide for companies interested in developing products, which can be compatible with other products, developed using this document. Each DCI member company shall decide independently the extent to which it will utilize, or require adherence to, these specifications. DCI shall not be liable for any exemplary, incidental, proximate or consequential damages or expenses arising from the use of this document. This document defines only one approach to compatibility, and other approaches may be available to the industry. This document is an authorized and approved publication of DCI. Only DCI has the right and authority to revise or change the material contained in this document, and any revisions by any party other than DCI are unauthorized and prohibited. -

Security Solutions in MPEG Family of Standards

Security solutions in MPEG family of standards Touradj Ebrahimi [email protected] NET working? Broadcast Networks and their security 16-17 June 2004, Geneva, Switzerland MPEG: Moving Picture Experts Group • MPEG-1 (1992): MP3, Video CD, first generation set-top box, … • MPEG-2 (1994): Digital TV, HDTV, DVD, DVB, Professional, … • MPEG-4 (1998, 99, ongoing): Coding of Audiovisual Objects • MPEG-7 (2001, ongoing): Description of Multimedia Content • MPEG-21 (2002, ongoing): Multimedia Framework MPEG-1 - ISO/IEC 11172:1992 • Coding of moving pictures and associated audio for digital storage media at up to about 1,5 Mbit/s – Part 1 Systems - Program Stream – Part 2 Video – Part 3 Audio – Part 4 Conformance – Part 5 Reference software MPEG-2 - ISO/IEC 13818:1994 • Generic coding of moving pictures and associated audio – Part 1 Systems - joint with ITU – Part 2 Video - joint with ITU – Part 3 Audio – Part 4 Conformance – Part 5 Reference software – Part 6 DSM CC – Part 7 AAC - Advanced Audio Coding – Part 9 RTI - Real Time Interface – Part 10 Conformance extension - DSM-CC – Part 11 IPMP on MPEG-2 Systems MPEG-4 - ISO/IEC 14496:1998 • Coding of audio-visual objects – Part 1 Systems – Part 2 Visual – Part 3 Audio – Part 4 Conformance – Part 5 Reference