A Robust Group Communication Library for Grid Environments

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Live Distributed Objects

LIVE DISTRIBUTED OBJECTS A Dissertation Presented to the Faculty of the Graduate School of Cornell University in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy by Krzysztof Jan Ostrowski August 2008 © 2008 Krzysztof Jan Ostrowski ALL RIGHTS RESERVED LIVE DISTRIBUTED OBJECTS Krzysztof Jan Ostrowski, Ph.D. Cornell University 2008 Distributed multiparty protocols such as multicast, atomic commit, or gossip are currently underutilized, but we envision that they could be used pervasively, and that developers could work with such protocols similarly to how they work with CORBA/COM/.NET/Java objects. We have created a new programming model and a platform in which protocol instances are represented as objects of a new type called live distributed objects: strongly-typed building blocks that can be composed in a type-safe manner through a drag and drop interface. Unlike most prior object-oriented distributed protocol embeddings, our model appears to be flexible enough to accommodate most popular protocols, and to be applied uniformly to any part of a distributed system, to build not only front-end, but also back-end components, such as multicast channels, naming, or membership services. While the platform is not limited to applications based on multicast, it is replication-centric, and reliable multicast protocols are important building blocks that can be used to create a variety of scalable components, from shared documents to fault-tolerant storage or scalable role delegation. We propose a new multicast architecture compatible with the model and designed in accordance with object-oriented principles such as modularity and encapsulation. -

Ultra-Large-Scale Sites

Ultra-large-scale Sites <working title> – Scalability, Availability and Performance in Social Media Sites (picture from social network visualization?) Walter Kriha With a foreword by << >> Walter Kriha, Scalability and Availability Aspects…, V.1.9.1 page 1 03/12/2010 Copyright <<ISBN Number, Copyright, open access>> ©2010 Walter Kriha This selection and arrangement of content is licensed under the Creative Commons Attribution License: http://creativecommons.org/licenses/by/3.0/ online: www.kriha.de/krihaorg/ ... Building Scalable Social Media Sites by Walter Kriha (wwww.kriha.de/krihaorg/books/ultra.pdf) is licensed under a Creative Commons Attribution 3.0 Germany License (http://creativecommons.org/licenses/by/3.0/de/). Permissions beyond the scope of this license may be available at www.kriha.org. Acknowledgements <<master course, Todd Hoff/highscalability.com..>> ToDo’s - The role of ultra fast networks (Infiniband) on distributed algorithms and behaviour with respect to failure models - more on group behaviour from Clay Shirky etc. -

Database Replication in Large Scale Systems

Universidade do Minho Escola de Engenharia Departamento de Informática Master's Dissertation Mestrado em Engenharia Informática Database Replication in Large Scale Systems Miguel Gonçalves de Araújo Work carried out under the supervision of Professor Doutor José Orlando Pereira June 2011 Partially funded by project ReD – Resilient Database Clusters (PDTC / EIA-EIA / 109044 / 2008). ii Declaration Name: Miguel Gonçalves de Araújo E-mail: [email protected] Telephone: 963049012 Identity Card: 12749319 Thesis Title: Database Replication in Large Scale Systems Advisor: Professor Doutor José Orlando Pereira Year of completion: 2011 Degree: Mestrado em Engenharia Informática FULL REPRODUCTION OF THIS THESIS IS AUTHORIZED FOR RESEARCH PURPOSES ONLY, UPON A WRITTEN DECLARATION BY THE INTERESTED PARTY, WHO COMMITS TO THIS. Universidade do Minho, 30 June 2011 Miguel Gonçalves de Araújo iii iv Experience is what you get when you didn’t get what you wanted. Randy Pausch (The Last Lecture) vi Acknowledgments First of all, I want to thank Prof. Dr. José Orlando Pereira for agreeing to be my adviser and for encouraging me to work in the Distributed Systems Group at University of Minho. His availability, patience and support were crucial to this work. I would also like to thank Prof. Dr. Rui Oliveira for equal encouragement on joining the group. A special thanks to my parents for all their support throughout my journey and particularly for all the incentives to keep on with my studies. I thank my friends in the group who joined me at this important stage of my studies. A special thanks to Ricardo Coelho, Pedro Gomes and Ricardo Gonçalves for all the discussions and exchange of ideas, always encouraging my work. -

First Assignment

Second Assignment – Tutorial lecture INF5040 (Open Distributed Systems) Faraz German ([email protected]) Department of Informatics University of Oslo October 17, 2016 Group Communication System Services provided by group communication systems: o Abstraction of a Group o Multicast of messages to a Group o Membership of a Group o Reliable messages to a Group o Ordering of messages sent to a Group o Failure detection of members of the Group o A strong semantic model of how messages are handled when changes to the Group membership occur 2 Spread An open source toolkit that provides a high performance messaging service that is resilient to faults across local and wide area networks. Does not support very large groups, but does provide a strong model of reliability and ordering Integrates a membership notification service into the stream of messages Supports multiple link protocols and multiple client interfaces The client interfaces provided with Spread include native interfaces for Java and C. o Also non-native Perl, Python and Ruby interfaces Spread Provides different types of messaging services to applications o Messages to entire groups of recipients o Membership information about who is currently alive and reachable Provides both ordering and reliability guarantees Level of service When an application sends a Spread message, it chooses a level of service for that message. The level of service selected controls what kind of ordering and reliability are provided to that message. Spread Service Type Ordering Reliability UNRELIABLE -

Best Practice Assessment of Software Technologies for Robotics

Best Practice Assessment of Software Technologies for Robotics Azamat Shakhimardanov Jan Paulus Nico Hochgeschwender Michael Reckhaus Gerhard K. Kraetzschmar February 28, 2010 Abstract The development of a complex service robot application is a very difficult, time-consuming, and error-prone exercise. The need to interface to highly heterogeneous hardware, to run the final distributed software application on an ensemble of often heterogeneous computational devices, and to integrate a large variety of different computational approaches for solving particular functional aspects of the application are major contributing factors to the overall complexity of the task. While robotics researchers have focused on developing new methods and techniques for solving many difficult functional problems, the software industry outside of robotics has developed a tremendous arsenal of new software technologies to cope with the ever-increasing requirements of state-of-the-art and innovative software applications. Quite a few of these techniques have the potential to solve problems in robotics software development, but uptake in the robotics community has often been slow or the new technologies were almost neglected altogether. This paper identifies, reviews, and assesses software technologies relevant to robotics. For the current document, the assessment is scientifically sound, but neither claimed to be complete nor claimed to be a full-fledged quantitative and empirical evaluation and comparison. This first version of the report focuses on an assessment of technologies -

Database Management Systems Ebooks for All Edition (

Database Management Systems eBooks For All Edition (www.ebooks-for-all.com) PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Sun, 20 Oct 2013 01:48:50 UTC Contents Articles: Database; Database model; Database normalization; Database storage structures; Distributed database; Federated database system; Referential integrity; Relational algebra; Relational calculus; Relational database; Relational database management system; Relational model; Object-relational database; Transaction processing. Concepts: ACID; Create, read, update and delete; Null (SQL); Candidate key; Foreign key; Unique key; Superkey; Surrogate key; Armstrong's axioms. Objects: Relation (database); Table (database); Column (database); Row (database); View (SQL); Database transaction; Transaction log; Database trigger; Database index; Stored procedure; Cursor (databases); Partition (database). Components: Concurrency control; Data dictionary; Java Database Connectivity; XQuery API for Java; ODBC; Query language; Query optimization; Query plan. Functions: Database administration and automation; Replication (computing). Database Products: Comparison of object database management systems; Comparison of object-relational database management systems; List of relational database management systems; Comparison of relational database management systems; Document-oriented database; Graph database; NoSQL; NewSQL. References: Article Sources and Contributors; Image Sources, Licenses and Contributors. Article Licenses: License. Database A database is an organized collection of data. -

An Information-Driven Architecture for Cognitive Systems Research

An Information-Driven Architecture for Cognitive Systems Research Sebastian Wrede Dipl.-Inform. Sebastian Wrede AG Angewandte Informatik Technische Fakultät Universität Bielefeld email: [email protected] Print of the approved dissertation for the attainment of the academic degree Doktor-Ingenieur (Dr.-Ing.). Submitted to the Technische Fakultät of Universität Bielefeld on 09.07.2008 by Sebastian Wrede, defended and approved on 27.11.2008. Reviewers: Prof. Dr. Gerhard Sagerer, Universität Bielefeld Assoc. Prof. Bruce A. Draper, Colorado State University Prof. Dr. Christian Bauckhage, Universität Bonn Examination committee: Prof. Dr. Helge Ritter, Universität Bielefeld Dr. Robert Haschke, Universität Bielefeld Printed on ageing-resistant paper according to ISO 9706 An Information-Driven Architecture for Cognitive Systems Research Submitted to the Technische Fakultät of Universität Bielefeld for the attainment of the degree Doktor-Ingenieur by Sebastian Wrede Bielefeld – July 2008 Acknowledgments Conducting research on a computer science topic and writing a thesis in a collaborative research project where the thesis results are at the same time the basis for scientific innovation in the very same project is a truly challenging experience - both for the author as well as his colleagues. Even so, working in the VAMPIRE EU project was always fun. Consequently, I would first like to thank all the people involved in this particular project for their commitment and cooperation. Among all the people supporting me over my PhD years, I need to single out two persons that were of particular importance. First of all, I would like to thank my advisor Christian Bauckhage for his inspiration and his ongoing support from the early days in the VAMPIRE project up to his final comments on this thesis manuscript. -

Architecture of the Focus 3D Telemodeling Tool

NISTIR 7322 Architecture of the Focus 3D Telemodeling Tool Arthur F. Griesser, Ph.D. Semiconductor Electronics Division Electronics and Electrical Engineering Laboratory August 2005 U.S. DEPARTMENT OF COMMERCE Carlos M. Gutierrez, Secretary TECHNOLOGY ADMINISTRATION Michelle O’Neill, Acting Under Secretary of Commerce for Technology NATIONAL INSTITUTE OF STANDARDS AND TECHNOLOGY William A. Jeffrey, Director Architecture of the Focus 3D Telemodeling Tool Abstract A high level design of the Focus 3D modeling tool is presented. Infrastructure components are selected in the light of the functional and non functional requirements of the Focus project. A description of how these components will fit together is provided, and architectural alternatives are considered. Table of Contents: Overview; Frameworks; Change Distribution; GUI Toolkit -

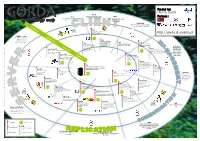

Replication Suite Er Op Jmanage E D4.3 Ore He Pr D6.4C In-C E T

GORDA technology map. Funded by: FP6 IST2 004758. http://gorda.di.uminho.pt TPC-W: industry standard benchmark simulating a web application. TPC-B: simple benchmark used for loading implementations while testing. TPC-C: industry standard benchmark simulating a write-intensive OLTP application. PolePosition: micro-benchmark collection used for measuring overhead of the GAPI. Carob (http://carob.continuent.org): C/C++ binding to the middleware wrapper. RDBMS and SQL: the GORDA project targets relational database management systems exposing a SQL interface. ODBC: the standard database access interface in the Windows platform is supported natively or through Carob. JDBC: standard database access interface in the Java platform. Other: proprietary database access interfaces (DBMS specific or Perl, PHP, Python, etc) are supported natively or through Carob. Jade (http://jade.objectweb.org): autonomic management environment. Fractal (http://fractal.objectweb.org): modular, extensible and programming language agnostic component model. Centralized Management: observation and control of the replicated database system from a central location. Oracle Berkley DB (http://oss.oracle.com/berkeley-db.html): work-in-progress native implementation. Apache Derby (http://db.apache.org/derby): reference implementation of GORDA protocols in a flexible [...] GORDA Management Interfaces: interfaces for deploying and managing replicated database management systems -

Formation Flying Test Bed Data Analysis Software Programming Project

Project Number: SJB-GPS3 Worcester Polytechnic Institute 100 Institute Road, Worcester, MA 01609 Formation Flying Test Bed Data Analysis Software Programming Project A Preliminary Qualifying Project Proposal: Submitted to the Faculty of the WORCESTER POLYTECHNIC INSTITUTE In partial fulfillment of the requirements for the Degree of Bachelor of Science By Ye Wang Cody Holemo Date: October 14, 2004 NASA Mentors WPI Advisors Richard Burns Stephen Bitar [email protected] [email protected] Dave Gaylor Fred Looft [email protected] [email protected] This document represents the work of WPI students. The opinions expressed in this report are not necessarily those of the Goddard Space Flight Center or the National Aeronautics and Space Administration Abstract Our senior design project related to software development for the Formation Flying Test Bed (FFTB) at the National Aeronautics and Space Administration’s (NASA) Goddard Space Flight Center (GSFC). Our project consisted of enhancing existing software tools that provide real-time data plotting and data communication for the FFTB. The existing software tools consisted of a simulation plotting package and the software created by the 2003 WPI project team. We improved the data communications of the FFTB by implementing a middleware communications system to replace point-to-point connections. We also developed a real-time plotting application that utilized -

Jason W. Mitchell‡ and David M. Zakar§ Richard D. Burns¶ And

AIAA-2006-6127 A MESSAGE ORIENTED MIDDLEWARE FOR A SOFT REAL-TIME HARDWARE-IN-THE-LOOP SPACECRAFT FORMATION FLYING TESTBED∗† Jason W. Mitchell‡ and David M. Zakar§ Emergent Space Technologies, Inc., Greenbelt, MD 20770-6334 Richard D. Burns¶ and Richard J. Luquette‖ NASA Goddard Space Flight Center, Greenbelt, MD 20771 The Formation Flying Test-Bed (FFTB) at NASA Goddard Space Flight Center (GSFC) provides a hardware-in-the-loop test environment for formation navigation and control. The facility is evolving as a modular, hybrid, dynamic simulation facility for end-to-end guidance, navigation, and control (GN&C) design and analysis of formation flying spacecraft. The core capabilities of the FFTB, as a platform for testing critical hardware and software algorithms in-the-loop, are presented with a focus on the implementation and use of a message-oriented middleware (MOM) architecture. The MOM architecture provides a common messaging bus for software agents, easing integration, and interfaces with the GSFC Mission Services Evolution Center (GMSEC) architecture via software bridge. The performance of the overall messaging service in the context of a soft real-time simulation environment is discussed. Keywords: distributed computing, message oriented middleware, spacecraft formation flying, hardware-in-the-loop, real-time. I. Introduction Spacecraft formation flying is a concept that continues to attract significant attention; Fig. 1. The advantages of formation flying are manifold. The President’s Commission on Implementation of United States Space Exploration Policy1 identifies formation flying as one of seventeen enabling technologies needed to meet exploration objectives. Both NASA and ESA are evaluating formation flying concepts for numerous planned missions. -

State of the Art in Database Replication

Project no. 004758 GORDA Open Replication Of Databases Specific Targeted Research Project Software and Services State of the Art in Database Replication GORDA Deliverable D1.1 Due date of deliverable: 2005/01/31 Actual submission date: 2005/09/15 Revision date: 2006/12/30 Start date of project: 1 October 2004 Duration: 36 Months Universidade do Minho Revision 1.2 Project co-funded by the European Commission within the Sixth Framework Programme (2002-2006) Dissemination Level: PU Public (X); PP Restricted to other programme participants (including the Commission Services); RE Restricted to a group specified by the consortium (including the Commission Services); CO Confidential, only for members of the consortium (including the Commission Services) Contributors Alfrânio Correia Júnior António Sousa Emmanuel Cecchet Fernando Pedone José Pereira Luís Rodrigues Nuno Miguel Carvalho Ricardo Vilaça Rui Oliveira Sara Bouchenak Vaide Zuikeviciute (C) 2006 GORDA Consortium. Some rights reserved. This work is licensed under the Attribution-NonCommercial-NoDerivs 2.5 Creative Commons License. See http://creativecommons.org/licenses/by-nc-nd/2.5/legalcode for details. Contents: 1 Introduction; 2 Lazy Protocols; 2.1 Model; 2.1.1 Capture; 2.1.2 Distribution; 2.1.3 Apply; 2.2 Management; 2.3 Implementations; 2.3.1 MS SQL Server 2000; 2.3.2 Oracle 10g; 2.3.3 Sybase; 2.3.4 IBM DB2; 2.3.5 PostgreSQL; 2.3.6 MySQL; 2.4 Algorithms; 3 Eager Protocols; 3.1 Volume Replication Protocols; 3.2 Distributed Database Protocols