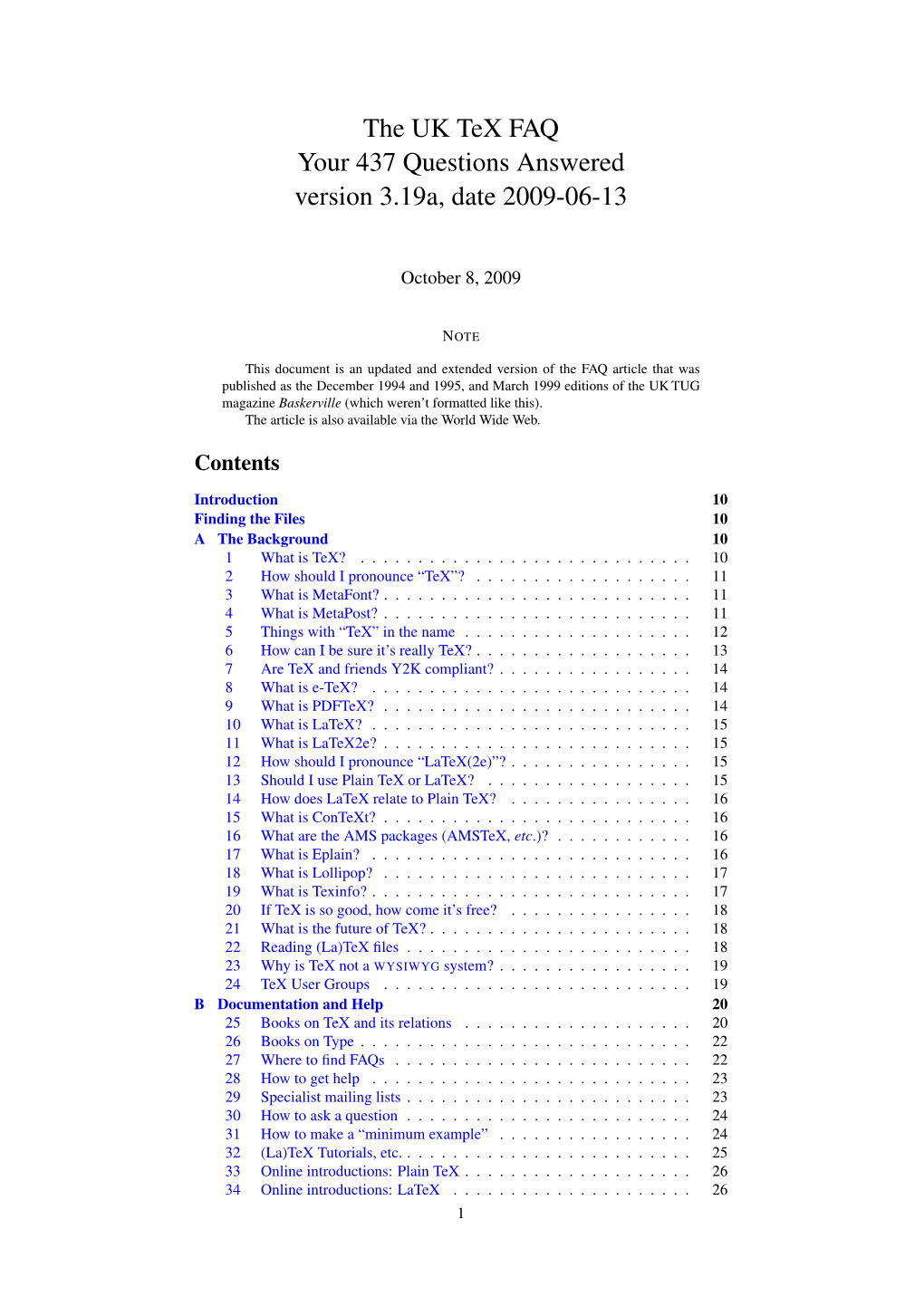

The UK TeX FAQ: Your 437 Questions Answered. Version 3.19a, Date 2009-06-13

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Donald Knuth Fletcher Jones Professor of Computer Science, Emeritus Curriculum Vitae Available Online

Bio: Donald Ervin Knuth is an American computer scientist, mathematician, and Professor Emeritus at Stanford University. He is the author of the multi-volume work The Art of Computer Programming and has been called the "father" of the analysis of algorithms. He contributed to the development of the rigorous analysis of the computational complexity of algorithms and systematized formal mathematical techniques for it. In the process he also popularized the asymptotic notation. In addition to fundamental contributions in several branches of theoretical computer science, Knuth is the creator of the TeX computer typesetting system, the related METAFONT font definition language and rendering system, and the Computer Modern family of typefaces. As a writer and scholar, Knuth created the WEB and CWEB computer programming systems designed to encourage and facilitate literate programming, and designed the MIX/MMIX instruction set architectures. As a member of the academic and scientific community, Knuth is strongly opposed to the policy of granting software patents. He has expressed his disagreement directly to the patent offices of the United States and Europe. (via Wikipedia)

ACADEMIC APPOINTMENTS
• Professor Emeritus, Computer Science

HONORS AND AWARDS
• Grace Murray Hopper Award, ACM (1971)
• Member, American Academy of Arts and Sciences (1973)
• Turing Award, ACM (1974)
• Lester R Ford Award, Mathematical Association of America (1975)
• Member, National Academy of Sciences (1975)

PROFESSIONAL EDUCATION
• PhD, California Institute of Technology, Mathematics (1963)

PATENTS
• Donald Knuth, Stephen N Schiller. "United States Patent 5,305,118 Methods of controlling dot size in digital half toning with multi-cell threshold arrays", Adobe Systems, Apr 19, 1994
• Donald Knuth, LeRoy R Guck, Lawrence G Hanson.

Developments in Technical Typesetting: TeX at the End of the 20th Century

A Century of Science Publishing, E.H. Fredriksson (Ed.), IOS Press. Chapter: Developments in Technical Typesetting: TeX at the End of the 20th Century. Barbara Beeton, Editor, TUGboat, the Communications of the TeX Users Group.

Introduction. The composition of mathematical and technical material has traditionally been considered “penalty copy”, because of both the large number of special symbols required, and the complicated arrangement of these symbols on the page. While careful exposition has always been a valued skill for the mathematician or scientist, the tools were not available to allow authors to complete the presentation until relatively recently. The machinery has now come together: a desktop or portable computer; a text editor; an input language that is reasonably intuitive to a scientific author; a composition program that “understands” what mathematical notation is supposed to look like; a laser printer with a resolution high enough to print even very small symbols clearly. The composition engine is TeX, by Donald Knuth of Stanford University. This tool, originally developed specifically to produce the second edition of Knuth’s The Art of Computer Programming, Volume 2, has been adopted by mathematicians, physicists, and other scientists for their own use. A number of scientific and technical publishers accept electronic manuscripts prepared with TeX and insert them directly into their production flow, while other publishers use TeX as a back end, continuing to accept and process copy in the traditional manner. TeX manuscripts are circulated in electronic form by their authors in e-mail and posted into preprint archives on the World Wide Web; they are portable and durable: a new edition of a work need not be typed from scratch, but can be modified from the file of the previous edition.

The File cmfonts.fdd for Use with LaTeX2ε

The file cmfonts.fdd for use with LaTeX2ε. Frank Mittelbach, Rainer Schöpf. 2019/12/16. This file is maintained by the LaTeX Project team. Bug reports can be opened (category latex) at https://latex-project.org/bugs.html.

1 Introduction. This file contains the external font information needed to load the Computer Modern fonts designed by Don Knuth and distributed with TeX. From this file all .fd files (font definition files) for the Computer Modern fonts, both with old encoding (OT1) and Cork encoding (T1), are generated. The Cork encoded fonts are known under the name ec fonts.

2 Customization. If you plan to install the AMS font package or if you have it already installed, please note that within this package there are additional sizes of the Computer Modern symbol and math italic fonts. With the release of LaTeX2ε, these AMS ‘extracm’ fonts have been included in the LaTeX font set. Therefore, the math .fd files produced here assume the presence of these AMS extensions. For text fonts in T1 encoding, the directive new selects the new (version 1.2) DC fonts. For the text fonts in OT1 and U encoding, the optional docstrip directive ori selects a conservatively generated set of font definition files, which means that only the basic font sizes coming with an old LaTeX 2.09 installation are included in the \DeclareFontShape commands. However, on many installations, people have added missing sizes by scaling up or down available Metafont sources. For example, the Computer Modern Roman italic font cmti is only available in the sizes 7, 8, 9, and 10pt.
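To make the role of \DeclareFontShape in a font definition file concrete, the fragment below is a minimal sketch of what an OT1 entry for the Computer Modern Roman italic shape might look like. It is an illustration only: the size lists are assumptions chosen to match the sizes named in the excerpt (7, 8, 9, and 10pt) and are not copied from the distributed .fd files.

```latex
% Hypothetical sketch of an OT1 font definition fragment for Computer Modern Roman.
% The size lists are illustrative and are NOT taken from the distributed .fd files.
\DeclareFontFamily{OT1}{cmr}{\hyphenchar\font45 }

% Conservative variant: only the four design sizes named in the text.
\DeclareFontShape{OT1}{cmr}{m}{it}{<7>cmti7<8>cmti8<9>cmti9<10>cmti10}{}

% Extended variant (commented out): sizes with no font file of their own
% reuse a neighbouring design size, loaded scaled to the requested size.
% \DeclareFontShape{OT1}{cmr}{m}{it}{<5><6><7>cmti7<8>cmti8<9>cmti9<10><10.95><12>cmti10}{}
```

In the size specification, a run such as `<5><6><7>cmti7` means that requests for 5, 6, or 7pt are all served by cmti7 at the requested size, which is one way an installation can cover sizes for which no separate Metafont source exists.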

Surviving the TeX Font Encoding Mess

Surviving the TeX font encoding mess: Understanding the world of TeX fonts and mastering the basics of fontinst. Ulrik Vieth, Taco Hoekwater. EuroTeX ’99, Heidelberg, 24 September 1999.

FAMOUS QUOTE: English is useful because it is a mess. Since English is a mess, it maps well onto the problem space, which is also a mess, which we call reality. Similarly, Perl was designed to be a mess, though in the nicest of all possible ways. (LARRY WALL)

COROLLARY: TeX fonts are a mess, as they are a product of reality. Similarly, fontinst is a mess, not necessarily by design, but because it has to cope with the mess we call reality.

Contents
I Overview of TeX font technology
II Installation of TeX fonts with fontinst
III Overview of math fonts

I Overview of TeX font technology
• What is a font? What is a virtual font?
• Font file formats and conversion utilities
• Font attributes and classifications
• Font selection schemes
• Font naming schemes
• Font encodings
• What’s in a standard font? What’s in an expert font?
• Font installation considerations
• Why the need for reencoding?
• Which raw font encoding to use?
• What’s needed to set up fonts for use with TeX?

What is a font?
• in technical terms:
– fonts have many different representations depending on the point of view
– TeX typesetter: font metrics (TFM) and nothing else
– DVI driver: virtual fonts (VF), bitmap fonts (PK), outline fonts (PFA/PFB or TTF)
– PostScript: Type 1 (outlines), Type 3 (anything), Type 42 fonts (embedded TTF)
• in general terms:
– fonts are collections of glyphs (characters, symbols) of a particular design
– fonts are organized into families, series and individual shapes
– glyphs may be accessed either by character code or by symbolic names
– encoding of glyphs may be fixed or controllable by encoding vectors
• font information consists of:
– metric information (glyph metrics and global parameters)
– some representation of glyph shapes (bitmaps or outlines)

CM-Super: Automatic Creation of Efficient Type 1 Fonts from Metafont

CM-Super: Automatic creation of efficient Type 1 fonts from METAFONT fonts. Vladimir Volovich, Voronezh State University, Moskovsky prosp. 109/1, kv. 75, Voronezh 304077 Russia. [email protected]

Abstract. In this article I describe making the CM-Super fonts: Type 1 fonts converted from METAFONT sources of various Computer Modern font families. The fonts contain a large number of glyphs covering writing in dozens of languages (Latin-based, Cyrillic-based, etc.) and provide outline replacements for the original METAFONT fonts. The CM-Super fonts were produced by tracing the high resolution bitmaps generated by METAFONT with the help of TeXtrace, optimizing and hinting the fonts with FontLab, and applying cleanups and optimizations with Perl scripts.

1 The idea behind the CM-Super fonts. The Computer Modern (CM) fonts are the default and most commonly used text fonts with TeX. Originally, CM fonts contained only basic Latin letters, and thus covered only the English language. There are however a number of Computer Modern look-alike METAFONT fonts developed which cover other languages and scripts. Just to name a few:
• EC and TC fonts, developed by Jörg Knappen,

There exist free Type 1 versions of the original CM fonts, provided by Blue Sky Research, Elsevier Science, IBM Corporation, the Society for Industrial and Applied Mathematics (SIAM), Springer-Verlag, Y&Y and the American Mathematical Society, but until not long ago there were no free Type 1 versions of other “CM look-alike” fonts available, which limited their usage in PDF and PostScript target document formats. The CM-Super fonts were developed to cover this gap.

DE-TeX-FAQ (Vers. 72)

Questions and Answers (FAQ) about the TeX typesetting system and DANTE, Deutschsprachige Anwendervereinigung TeX e.V. Bernd Raichle, Rolf Niepraschk and Thomas Hafner. Version 72, September 2003.

This text contains frequently asked questions and matching answers about the TeX typesetting system and DANTE e.V. It may be freely distributed via any medium as long as it remains unchanged (including this notice). For distribution via printed media or via data carriers such as CD-ROM, the authors ask to be sent at least three specimen copies. Please send suggestions, additions, comments and remarks on the FAQ by e-mail to [email protected]

Contents
1 General
1.1 About this FAQ
1.2 CTAN, the ‘Comprehensive TeX Archive Network’
1.3 Newsgroups and discussion lists
2 User groups, conferences, literature
2.1 DANTE e.V.
2.2 User groups
2.3 Conferences »changed«
2.4 Literature »changed«
3 The TeX typesetting system: overview
3.1 Basics
3.2 Which TeX formats exist? What is LaTeX?
3.3 Which further developments of TeX exist?
4 The TeX typesetting system: where to get it
4.1 How do I get a TeX system?
4.2 TeX implementations »changed«
4.3 Editors, front-end/GUI programs »changed«
5 TeX, LaTeX, macros etc. (I)
5.1 LaTeX: basics
5.2 LaTeX: problems when migrating from LaTeX 2.09
5.3 Hyphenation, paragraph and page breaking
5.4 Page layout, layout in general, headers and footers »changed«
6 TeX, LaTeX, macros etc. (II)
6.1 Figures and tables

The New Font Project: TeX Gyre

Hans Hagen, Jerzy Ludwichowski, Volker Schaa. NAJAAR 2006, 47. The New Font Project: TeX Gyre

Abstract. In this short presentation, we will introduce a new project: the “lm-ization” of the free fonts that come with TeX distributions. We will discuss the project objectives, timeline and cross-lug funding aspects.

Introduction. The New Font Project is a brainchild of Hans Hagen, triggered mainly by the very good reception of the Latin Modern (LM) font project by the TeX community. After consulting other LUG leaders, Bogusław Jackowski and Janusz M. Nowacki, aka “GUST type.foundry”, were asked to formulate the project. The next section contains its outline, as prepared by Bogusław Jackowski and Janusz M. Nowacki. The remaining sections were written by us.

Project outline. [...] metric and encoding files for each font. We look forward to an extended TFM format which will lift this restriction and, in conjunction with OpenType, simplify delivery and usage of fonts in TeX. We especially look forward to assistance from pdfTeX users, because the pdfTeX team is working on the implementation of support for OpenType fonts. An important consideration from Hans Hagen: “In the end, even Ghostscript will benefit, so I can even imagine those fonts ending up in the Ghostscript distribution.” The idea of preparing such font families was suggested by the pdfTeX development team. Their proposal triggered a lively discussion by an informal group of representatives of several TeX user groups (notably Karl Berry (TUG), Hans Hagen (NTG), Jerzy Ludwichowski (GUST), Volker RW Schaa (DANTE)) who suggested that we should approach this project as a research, technical and implementation team, and promised their help in taking care of promotion, integration, supervising and financing.

Gnuplot Programming Interview Questions and Answers Guide

Gnuplot Programming Interview Questions And Answers Guide. Global Guideline. https://www.globalguideline.com/ Gnuplot Programming Job Interview Preparation Guide.

Question # 1 What is Gnuplot? Answer:- Gnuplot is a command-driven interactive function plotting program. It can be used to plot functions and data points in both two- and three-dimensional plots in many different formats. It is designed primarily for the visual display of scientific data. Gnuplot is copyrighted, but freely distributable; you don't have to pay for it.

Question # 2 How to run gnuplot on your computer? Answer:- Gnuplot is in widespread use on many platforms, including MS Windows, Linux, Unix, and OSX. The current source code retains support for older systems as well, including VMS, Ultrix, OS/2, MS-DOS, Amiga, OS-9/68k, Atari ST, BeOS, and Macintosh. Versions since 4.0 have not been extensively tested on legacy platforms. Please notify the FAQ-maintainer of any further ports you might be aware of. You should be able to compile the gnuplot source more or less out of the box on any reasonable standard (ANSI/ISO C, POSIX) environment.

Question # 3 How to edit or post-process a gnuplot graph? Answer:- This depends on the terminal type you use. * X11 toolkits: You can use the terminal type fig and use the xfig drawing program to edit the plot afterwards. You can obtain the xfig program from its web site http://www.xfig.org. More information about the text-format used for fig can be found in the fig-package.

About BasicTeX-2021

About BasicTeX-2021. Richard Koch. January 2, 2021.

1 Introduction. Most TeX distributions for Mac OS X are based on TeX Live, the reference edition of TeX produced by TeX User Groups across the world. Among these is MacTeX, which installs the full TeX Live as well as front ends, Ghostscript, and other utilities: everything needed to use TeX on the Mac. To obtain it, go to http://tug.org/mactex.

2 Basic TeX. BasicTeX (92 MB) is an installation package for Mac OS X based on TeX Live 2021. Unlike MacTeX, this package is deliberately small. Yet it contains all of the standard tools needed to write TeX documents, including TeX, LaTeX, pdfTeX, MetaFont, dvips, MetaPost, and XeTeX. It would be dangerous to construct a new distribution by going directly to CTAN or the Web and collecting useful style files, fonts and so forth. Such a distribution would run into support issues as the creators move on to other projects. Luckily, the TeX Live install script has its own notion of “installation packages” and collections of such packages to make “installation schemes.” BasicTeX is constructed by running the TeX Live install script and choosing the “small” scheme. Thus it is a subset of the full TeX Live with exactly the TeX Live directory structure and configuration scripts. Moreover, BasicTeX contains tlmgr, the TeX Live Manager software introduced in TeX Live 2008, which can install additional packages over the network. So it will be easy for users to add missing packages if needed. Since it is important that the install package come directly from the standard TeX Live distribution, I'm going to explain exactly how I installed TeX to produce the install package.

AMS-LaTeX Version 1.0 User's Guide

AMS-LaTeX Version 1.0 User’s Guide. American Mathematical Society. August 1990.

Contents
I General
1 Introduction
1.1 Notes
2 The AMS-LaTeX project
3 Major components of the AMS-LaTeX package
II Font considerations
4 The font selection scheme of Mittelbach and Schöpf
5 Basic concepts
5.1 Shape
5.2 Series
5.3 Size
5.4 Family
5.5 Using other font families
5.6 The oldlfont option
5.7 Warnings
6 Names of math font commands
7 The command \newsymbol
8 The amssymb option
III Features of the amstex option
9 Math spacing commands
10 Multiple integral signs
11 Over and under arrows
12 Dots
13 Accents in math
14 Roots
15 Boxed formulas
16 Extensible arrows
17 \overset, \underset and \sideset
18 The \text command
19 Operator names
20 \mod and its relatives
21 Fractions and related constructions
22 Continued fractions
23 Smash options
24 New LaTeX environments
24.1 The “cases” environment
24.2 Matrix
24.3 The Sb and Sp environments
24.4 Commutative diagrams
25 Alignment structures for equations
25.1 The align environment
25.2 The gather environment
25.3 The

The LaTeX Graphics Companion / Michel Goossens

The LaTeX Graphics Companion, Second Edition. Michel Goossens, Frank Mittelbach, Sebastian Rahtz, Denis Roegel, Herbert Voß. Upper Saddle River, NJ • Boston • Indianapolis • San Francisco • New York • Toronto • Montreal • London • Munich • Paris • Madrid • Capetown • Sydney • Tokyo • Singapore • Mexico City

Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and Addison-Wesley was aware of a trademark claim, the designations have been printed with initial capital letters or in all capitals. The authors and publisher have taken care in the preparation of this book, but make no expressed or implied warranty of any kind and assume no responsibility for errors or omissions. No liability is assumed for incidental or consequential damages in connection with or arising out of the use of the information or programs contained herein. The publisher offers discounts on this book when ordered in quantity for bulk purchases and special sales. For more information, please contact: U.S. Corporate and Government Sales (800) 382-3419 [email protected] For sales outside of the United States, please contact: International Sales [email protected] Visit Addison-Wesley on the Web: www.awprofessional.com

Library of Congress Cataloging-in-Publication Data. The LaTeX Graphics companion / Michel Goossens ... [et al.]. -- 2nd ed. p. cm. Includes bibliographical references and index. ISBN 978-0-321-50892-8 (pbk. : alk. paper) 1.

Installation

APPENDIX A Installation

In case you do not already have a LaTeX installation, in Sections A.1 and A.2, we describe how to install LaTeX on your computer, a PC or a Mac. The installation is much easier if you obtain TeX Live 2007 (or later) from the TeX Users Group, TUG (see Section E.2). It contains both the TeX implementations we discuss. No installation is given for UNIX computers. The attraction of UNIX to its users is the incredibly large number of options, from the UNIX dialect, to the shell, the editor, and so on. A typical UNIX user downloads the code and compiles the system. This is obviously beyond the scope of this book. Nevertheless, TeX Live 2007 (or later) from the TeX Users Group supplies compiled binaries of LaTeX for a number of UNIX variants. First read Chapter 1, so that in this Appendix you recognize the terminology we introduce there. I will assume that you become sufficiently familiar with your LaTeX distribution to be able to perform the editing cycle with the sample documents.

A.1 LaTeX on a PC

On a PC, most mathematicians use MiKTeX and the editor WinEdt. So it seems appropriate that we start there.

A.1.1 Installing MiKTeX

If you made a donation to MiKTeX or if you have the TeX Live 2007 (or later) from the TeX Users Group, then you have a CD or DVD with the MiKTeX installer. Installation then is in one step and very fast. In case you do not have this CD or DVD, we show how to install from the Internet.