How to Configure Amazon Machine Image as Global-Active Device iSCSI Quorum Target

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Security in Cloud Computing: A Security Assessment of Cloud Computing Providers for an Online Receipt Storage

Security in Cloud Computing: A Security Assessment of Cloud Computing Providers for an Online Receipt Storage. Mats Andreassen, Kåre Marius Blakstad. Master of Science in Computer Science. Submission date: June 2010. Supervisor: Lillian Røstad, IDI. Norwegian University of Science and Technology, Department of Computer and Information Science. Problem Description: We will survey some current cloud computing vendors and compare them to find patterns in how their feature sets are evolving. The start-up firm dSafe intends to exploit the promises of cloud computing in order to launch their business idea with only marginal hardware and licensing costs. We must define the criteria for how dSafe's application can be sufficiently secure in the cloud as well as how dSafe can get there. Assignment given: 14 January 2010. Supervisor: Lillian Røstad, IDI. Abstract: Considerations with regard to security issues and demands must be addressed before migrating an application into a cloud computing environment. Different vendors, Microsoft Azure, Amazon Web Services and Google AppEngine, provide different capabilities and solutions to the individual areas of concern presented by each application. Through a case study of an online receipt storage application from the company dSafe, a basis is formed for the evaluation. The three cloud computing vendors are assessed with regard to a security assessment framework provided by the Cloud Security Alliance and the application of this on the case study. Finally, the study is concluded with a set of general recommendations and the recommendation of a cloud vendor. This is based on a number of security aspects related to the case study’s existence in the cloud.

Storage Administration Guide: SUSE Linux Enterprise Server 12 SP4

SUSE Linux Enterprise Server 12 SP4 Storage Administration Guide. Provides information about how to manage storage devices on a SUSE Linux Enterprise Server. Publication Date: September 24, 2021. SUSE LLC, 1800 South Novell Place, Provo, UT 84606, USA. https://documentation.suse.com Copyright © 2006–2021 SUSE LLC and contributors. All rights reserved. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or (at your option) version 1.3; with the Invariant Section being this copyright notice and license. A copy of the license version 1.2 is included in the section entitled “GNU Free Documentation License”. For SUSE trademarks, see https://www.suse.com/company/legal/ . All other third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its affiliates. Asterisks (*) denote third-party trademarks. All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its affiliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof. Contents: About This Guide (1 Available Documentation; 2 Giving Feedback; 3 Documentation Conventions; 4 Product Life Cycle and Support: Support Statement for SUSE Linux Enterprise Server, Technology Previews). Part I, File Systems and Mounting: 1 Overview

AWS Certified Developer – Associate (DVA-C01) Sample Exam Questions

AWS Certified Developer – Associate (DVA-C01) Sample Exam Questions 1) A company is migrating a legacy application to Amazon EC2. The application uses a user name and password stored in the source code to connect to a MySQL database. The database will be migrated to an Amazon RDS for MySQL DB instance. As part of the migration, the company wants to implement a secure way to store and automatically rotate the database credentials. Which approach meets these requirements? A) Store the database credentials in environment variables in an Amazon Machine Image (AMI). Rotate the credentials by replacing the AMI. B) Store the database credentials in AWS Systems Manager Parameter Store. Configure Parameter Store to automatically rotate the credentials. C) Store the database credentials in environment variables on the EC2 instances. Rotate the credentials by relaunching the EC2 instances. D) Store the database credentials in AWS Secrets Manager. Configure Secrets Manager to automatically rotate the credentials. 2) A Developer is designing a web application that allows the users to post comments and receive near-real-time feedback. Which architectures meet these requirements? (Select TWO.) A) Create an AWS AppSync schema and corresponding APIs. Use an Amazon DynamoDB table as the data store. B) Create a WebSocket API in Amazon API Gateway. Use an AWS Lambda function as the backend and an Amazon DynamoDB table as the data store. C) Create an AWS Elastic Beanstalk application backed by an Amazon RDS database. Configure the application to allow long-lived TCP/IP sockets. D) Create a GraphQL endpoint in Amazon API Gateway. Use an Amazon DynamoDB table as the data store.

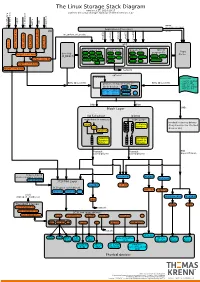

The Linux Storage Stack Diagram

The Linux Storage Stack Diagram, version 3.17 (2014-10-17), outlines the Linux storage stack as of kernel version 3.17. [Diagram: applications (processes) issue system calls into the VFS; below it sit the block-based, network, pseudo, and special-purpose file systems and the page cache; BIOs pass through optional stackable devices (drbd, LVM, device mapper, mdraid, bcache) into the block layer with its I/O schedulers and blk-mq queues; request-based and BIO-based drivers, the SCSI mid layer, and the transport classes lead down to the devices. The LIO target stack (target_core_mod and its fabric modules) is shown alongside.]

Introduction to Amazon Web Services

Introduction to Amazon Web Services. Jeff Barr, Senior AWS Evangelist, @jeffbarr. What Does It Take to be a Global Online Retailer? The Obvious Part… And the Not-So Obvious Part. How Did Amazon Get into Cloud Computing? • We’d been working on it for over a decade • Development of a platform to enable sellers on the Amazon global infrastructure • Internal need for centralized, scalable deployment environment for applications • Early forays into web services proved developers were hungry for more. This Led to a Broader Mission • Enable businesses and developers to use web services (what people now call “the cloud”) to build scalable, sophisticated applications. “It's not the customers' job to invent for themselves. It's your job to invent on their behalf. You need to listen to customers. You need to invent on their behalf. Kindle, EC2 would not have been developed if we did not have an inventive culture.” - Jeff Bezos, Founder & CEO, Amazon.com. Attributes of Cloud Computing: No Up-Front Capital Expense; Low Cost; Pay Only for What You Use; Self-Service Infrastructure; Easily Scale Up and Down; Improve Agility & Time-to-Market. Deploy: Last-Generation IT Services, Cloud-Generation IT Services. What’s the Difference? Last-Generation: IT department; manual setup; hours/days/weeks; error-prone; small scale. Cloud-Generation: empowered users; automated setup; seconds/minutes; scripted & repeatable; any scale. AWS PLATFORM: Cloud-Powered Applications; Management & Administration; Administration; Identity &

Implementación De Los Algoritmos De Factorización 201

Universidad Complutense de Madrid, Facultad de Informática. Sistemas Informáticos 2010/2011. RSA@Cloud: Efficient Cryptanalysis in the Cloud. Authors: Alberto Megía Negrillo, Antonio Molinera Lamas, José Antonio Rueda Sánchez. Project supervisors: José Luis Vázquez-Poletti, Ignacio Martín Llorente (Dpto. Arquitectura de Computadores). Facultad de Informática UCM, RSA@Cloud, Sistemas Informáticos – FDI. The Universidad Complutense is authorized to disseminate and use, for academic and non-commercial purposes and with explicit mention of its authors, the report itself as well as the code, the documentation, and/or the prototype developed. Alberto Megía Negrillo, Antonio Molinera Lamas, José Antonio Rueda Sánchez. Acknowledgments: A work like this would not have been possible without the cooperation of many people. First, we want to thank our families, who have trusted us and given us so much. We thank our supervisors, José Luis Vázquez-Poletti and Ignacio Martín Llorente, for carrying this project forward. Finally, we thank each and every person who is part of the Facultad de Informática of the Universidad Complutense de Madrid, our home during these years. Abstract: This document describes a system that takes advantage of the strengths of cloud computing and parallelism to factor large numbers, the basis of the security of the RSA cryptosystem, using different mathematical algorithms such as trial division and the quadratic sieve.

Unbreakable Enterprise Kernel Release Notes for Unbreakable Enterprise Kernel Release 3

Unbreakable Enterprise Kernel Release Notes for Unbreakable Enterprise Kernel Release 3 E48380-10 June 2020 Oracle Legal Notices Copyright © 2013, 2020, Oracle and/or its affiliates. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs (including any operating system, integrated software, any programs embedded, installed or activated on delivered hardware, and modifications of such programs) and Oracle computer documentation or other Oracle data delivered to or accessed by U.S. Government end users are "commercial computer software" or "commercial computer software documentation" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. 
As such, the use, reproduction, duplication, release, display, disclosure, modification, preparation of derivative works, and/or adaptation of i) Oracle programs (including any operating system, integrated software, any programs embedded, installed or activated on delivered hardware, and modifications of such programs), ii) Oracle computer documentation and/or iii) other Oracle data, is subject to the rights and limitations specified in the license contained in the applicable contract.

Parallel NFS (pNFS)

Red Hat Enterprise 7 Beta File Systems: New Scale, Speed & Features. Ric Wheeler, Director, Red Hat Kernel File & Storage Team, Red Hat Storage Engineering. Agenda: • Red Hat Enterprise Linux 7 Storage Features • Red Hat Enterprise Linux 7 Storage Management Features • Red Hat Enterprise Linux 7 File Systems • What is Parallel NFS? • Red Hat Enterprise Linux 7 NFS. Red Hat Enterprise Linux 7 Storage Foundations. Red Hat Enterprise Linux 6 File & Storage Foundations: • Red Hat Enterprise Linux 6 provides key foundations for Red Hat Enterprise Linux 7 (LVM support for scalable snapshots; device mapper thin provisioned storage; expanded options for file systems) • Large investment in performance enhancements • First in industry support for Parallel NFS (pNFS). LVM Thinp and Snapshot Redesign: • LVM thin provisioned LV (logical volume): eliminates the need to pre-allocate space; logical volume space is allocated from a shared pool as needed; typically a high-end, enterprise storage array feature; makes re-sizing a file system almost obsolete! • New snapshot design, based on thinp: space efficient and much more scalable; blocks are allocated from the shared pool for COW operations; multiple snapshots can have references to the same COW data; scales to many snapshots and snapshots of snapshots. RHEL Storage Provisioning: Improved Resource Utilization & Ease of Use. Avoids wasting space; less administration; lower costs. [Figure: Traditional Provisioning compared with RHEL Thin Provisioning; labels include free, allocated, and unused space, available allocation, storage pool, data, Volume 1, and Volume 2.]

Cisco Workload Automation Amazon EC2 Adapter Guide

Cisco Workload Automation Amazon EC2 Adapter Guide Version 6.3 First Published: August, 2015 Last Updated: September 6, 2016 Cisco Systems, Inc. www.cisco.com THE SPECIFICATIONS AND INFORMATION REGARDING THE PRODUCTS IN THIS MANUAL ARE SUBJECT TO CHANGE WITHOUT NOTICE. ALL STATEMENTS, INFORMATION, AND RECOMMENDATIONS IN THIS MANUAL ARE BELIEVED TO BE ACCURATE BUT ARE PRESENTED WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED. USERS MUST TAKE FULL RESPONSIBILITY FOR THEIR APPLICATION OF ANY PRODUCTS. THE SOFTWARE LICENSE AND LIMITED WARRANTY FOR THE ACCOMPANYING PRODUCT ARE SET FORTH IN THE INFORMATION PACKET THAT SHIPPED WITH THE PRODUCT AND ARE INCORPORATED HEREIN BY THIS REFERENCE. IF YOU ARE UNABLE TO LOCATE THE SOFTWARE LICENSE OR LIMITED WARRANTY, CONTACT YOUR CISCO REPRESENTATIVE FOR A COPY. The Cisco implementation of TCP header compression is an adaptation of a program developed by the University of California, Berkeley (UCB) as part of UCB’s public domain version of the UNIX operating system. All rights reserved. Copyright © 1981, Regents of the University of California. NOTWITHSTANDING ANY OTHER WARRANTY HEREIN, ALL DOCUMENT FILES AND SOFTWARE OF THESE SUPPLIERS ARE PROVIDED “AS IS” WITH ALL FAULTS. CISCO AND THE ABOVE-NAMED SUPPLIERS DISCLAIM ALL WARRANTIES, EXPRESSED OR IMPLIED, INCLUDING, WITHOUT LIMITATION, THOSE OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THIS MANUAL, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

Opinion, Sysadmin, Networking, Security, Columns

October 2010, Volume 35, Number 5. THE USENIX MAGAZINE. OPINION: Musings, Rik Farrow. SYSADMIN: Teaching System Administration in the Cloud, Jan Schaumann; A System Administration Parable: The Waitress and the Water Glass, Thomas A. Limoncelli; Migrating from Hosted Exchange Service to In-House Exchange Solution, Troy McKee. NETWORKING: IPv6 Transit: What You Need to Know, Matt Ryanczak. SECURITY: Secure Email for Mobile Devices, Brian Kirouac. COLUMNS: Practical Perl Tools: Perhaps Size Really Does Matter, David N. Blank-Edelman; Pete’s All Things Sun: The “Problem” with NAS, Peter Baer Galvin; iVoyeur: Pockets-o-Packets, Part 3, Dave Josephsen; /dev/random: Airport Security and Other Myths, Robert G. Ferrell. BOOK REVIEWS: Book Reviews, Elizabeth Zwicky, with Brandon Ching and Sam Stover. USENIX NOTES: USA Wins World High School Programming Championships, Snaps Extended China Winning Streak, Rob Kolstad. CONFERENCES: 2010 USENIX Federated Conferences Week: 2010 USENIX Annual Technical Conference Reports; USENIX Conference on Web Application Development (WebApps ’10) Reports; 3rd Workshop on Online Social Networks (WOSN 2010) Reports; 2nd USENIX Workshop on Hot Topics in Cloud Computing (HotCloud ’10) Reports; 2nd Workshop on Hot Topics in Storage and File Systems (HotStorage ’10) Reports; Configuration Management Summit Reports; 2nd USENIX Workshop on Hot Topics in Parallelism (HotPar ’10) Reports. The Advanced Computing Systems Association. Upcoming Events: 24th Large Installation System Administration Conference (LISA ’10), sponsored by USENIX in cooperation with LOPSA and SNIA, November 7–12, 2010, San Jose, CA, USA, http://www.usenix.org/lisa10; ACM/IFIP/USENIX 11th International Middleware Conference (Middleware 2010), Nov.

Oracle® Linux 7 Release Notes for Oracle Linux 7

Oracle® Linux 7 Release Notes for Oracle Linux 7 E53499-20 March 2021 Oracle Legal Notices Copyright © 2011,2021 Oracle and/or its affiliates. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs (including any operating system, integrated software, any programs embedded, installed or activated on delivered hardware, and modifications of such programs) and Oracle computer documentation or other Oracle data delivered to or accessed by U.S. Government end users are "commercial computer software" or "commercial computer software documentation" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. 
As such, the use, reproduction, duplication, release, display, disclosure, modification, preparation of derivative works, and/or adaptation of i) Oracle programs (including any operating system, integrated software, any programs embedded, installed or activated on delivered hardware, and modifications of such programs), ii) Oracle computer documentation and/or iii) other Oracle data, is subject to the rights and limitations specified in the license contained in the applicable contract.

Reliable Storage for HA, DR, Clouds and Containers Philipp Reisner, CEO LINBIT LINBIT - the Company Behind It

Reliable Storage for HA, DR, Clouds and Containers. Philipp Reisner, CEO LINBIT. LINBIT - the company behind it. COMPANY OVERVIEW: • Developer of DRBD • 100% founder owned • Offices in Europe and US • Team of 30 highly experienced Linux experts • Partner in Japan. TECHNOLOGY OVERVIEW. REFERENCES. Linux Storage Gems: LVM, RAID, SSD cache tiers, deduplication, targets & initiators. Linux's LVM [diagram: logical volumes and a snapshot in a volume group on top of three physical volumes]: • based on device mapper • original objects: PVs, VGs, LVs, snapshots • LVs can scatter over PVs in multiple segments • thinlv: thinpools = LVs; thin LVs live in thinpools; multiple snapshots became efficient! [diagram: thin LVs, a thin snapshot LV, an LV, and a snapshot, over a thinpool in a VG on three PVs]. Linux's RAID [diagram: RAID1 mirroring blocks A1 to A4 on two disks]: • original MD code • mdadm command • RAID levels: 0, 1, 4, 5, 6, 10 • Now available in LVM as well • device mapper interface for MD code • do not call it ‘dmraid’; that is software for hardware fake-raid • lvcreate --type raid6 --size 100G VG_name. SSD cache for HDD: • dm-cache: device mapper module; accessible via LVM tools • bcache: generic Linux block device; slightly ahead in the performance game. Linux’s DeDupe: • Virtual Data Optimizer (VDO) since RHEL 7.5 • Red Hat acquired Permabit and is GPLing VDO • Linux upstreaming is in preparation • in-line data deduplication • kernel part is a device mapper module • indexing service runs in user-space • async or synchronous writeback • Recommended to be used below LVM. Linux’s targets & initiators [diagram: the initiator sends IO requests to the target; the target returns data/completion]: • Open-iSCSI initiator • Ietd, STGT, SCST: mostly historical • LIO: iSCSI, iSER, SRP, FC, FCoE; SCSI pass through, block IO, file IO, user-specific-IO • NVMe-OF: target & initiator. ZFS on Linux: • Ubuntu eco-system only • has its own logical volume manager (zVols), thin provisioning, RAID (RAIDz), caching for SSDs (ZIL, SLOG), and a file system!
Put in simplest form: DRBD – think of it as ..