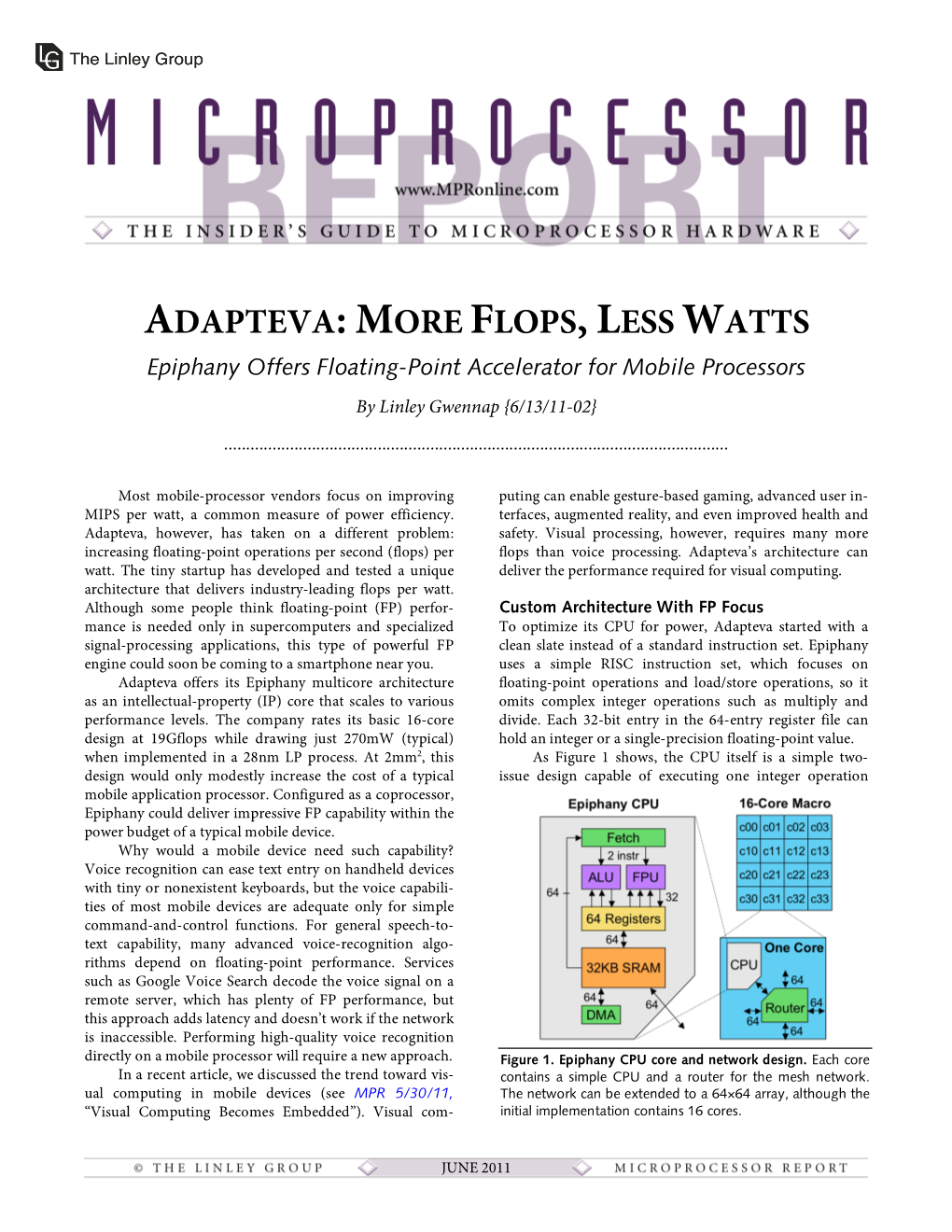

Flops, Less Watts. Epiphany Offers Floating-Point

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

A 1024-Core 70GFLOPS/W Floating Point Manycore Microprocessor

A 1024-core 70GFLOPS/W Floating Point Manycore Microprocessor Andreas Olofsson, Roman Trogan, Oleg Raikhman Adapteva, Lexington, MA The Past, Present, & Future of Computing SIMD MIMD PE PE PE PE MINI MINI MINI CPU CPU CPU PE PE PE PE MINI MINI MINI CPU CPU CPU PE PE PE PE MINI MINI MINI CPU CPU CPU MINI MINI MINI BIG BIG CPU CPU CPU CPU CPU BIG BIG BIG BIG CPU CPU CPU CPU PAST PRESENT FUTURE 2 Adapteva’s Manycore Architecture C/C++ Programmable Incredibly Scalable 70 GFLOPS/W 3 Routing Architecture 4 E64G400 Specifications (Jan-2012) • 64-Core Microprocessor • 100 GFLOPS performance • 800 MHz Operation • 8GB/sec IO bandwidth • 1.6 TB/sec on chip memory BW • 0.8 TB/sec network on chip BW • 64 Billion Messages/sec IO Pads Core • 2 Watt total chip power • 2MB on chip memory Link Logic • 10 mm2 total silicon area • 324 ball 15x15mm plastic BGA 5 Lab Measurements 80 Energy Efficiency 70 60 50 GFLOPS/W 40 30 20 10 0 0 200 400 600 800 1000 1200 MHz ENERGY EFFICIENCY ENERGY EFFICIENCY (28nm) 6 Epiphany Performance Scaling 16,384 G 4,096 F 1,024 L 256 O 64 4096 P 1024 S 16 256 64 4 16 1 # Cores On‐demand scaling from 0.25W to 64 Watt 7 Hold on...the title said 1024 cores! • We can build it any time! • Waiting for customer • LEGO approach to design • No global timinga paths • Guaranteed by design • Generate any array in 1 day • ~130 mm2 silicon area 1024 Cores 1Core 8 What about 64-bit Floating Point? Single Precision Double Precision 2 FLOPS/CYCLE 2 FLOPS/CYCLE 64KB SRAM 64KB SRAM 0.215mm^2 0.237mm^2 700MHz 600MHz 9 Epiphany Latency Specifications -

Comparison of 116 Open Spec, Hacker Friendly Single Board Computers -- June 2018

Comparison of 116 Open Spec, Hacker Friendly Single Board Computers -- June 2018 Click on the product names to get more product information. In most cases these links go to LinuxGizmos.com articles with detailed product descriptions plus market analysis. HDMI or DP- USB Product Price ($) Vendor Processor Cores 3D GPU MCU RAM Storage LAN Wireless out ports Expansion OSes 86Duino Zero / Zero Plus 39, 54 DMP Vortex86EX 1x x86 @ 300MHz no no2 128MB no3 Fast no4 no5 1 headers Linux Opt. 4GB eMMC; A20-OLinuXino-Lime2 53 or 65 Olimex Allwinner A20 2x A7 @ 1GHz Mali-400 no 1GB Fast no yes 3 other Linux, Android SATA A20-OLinuXino-Micro 65 or 77 Olimex Allwinner A20 2x A7 @ 1GHz Mali-400 no 1GB opt. 4GB NAND Fast no yes 3 other Linux, Android Debian Linux A33-OLinuXino 42 or 52 Olimex Allwinner A33 4x A7 @ 1.2GHz Mali-400 no 1GB opt. 4GB NAND no no no 1 dual 40-pin 3.4.39, Android 4.4 4GB (opt. 16GB A64-OLinuXino 47 to 88 Olimex Allwinner A64 4x A53 @ 1.2GHz Mali-400 MP2 no 1GB GbE WiFi, BT yes 1 40-pin custom Linux eMMC) Banana Pi BPI-M2 Berry 36 SinoVoip Allwinner V40 4x A7 Mali-400 MP2 no 1GB SATA GbE WiFi, BT yes 4 Pi 40 Linux, Android 8GB eMMC (opt. up Banana Pi BPI-M2 Magic 21 SinoVoip Allwinner A33 4x A7 Mali-400 MP2 no 512MB no Wifi, BT no 2 Pi 40 Linux, Android to 64GB) 8GB to 64GB eMMC; Banana Pi BPI-M2 Ultra 56 SinoVoip Allwinner R40 4x A7 Mali-400 MP2 no 2GB GbE WiFi, BT yes 4 Pi 40 Linux, Android SATA Banana Pi BPI-M2 Zero 21 SinoVoip Allwinner H2+ 4x A7 @ 1.2GHz Mali-400 MP2 no 512MB no no WiFi, BT yes 1 Pi 40 Linux, Android Banana -

Webcore: Architectural Support for Mobile Web Browsing

WebCore: Architectural Support for Mobile Web Browsing Yuhao Zhu Vijay Janapa Reddi Department of Electrical and Computer Engineering The University of Texas at Austin [email protected], [email protected] Abstract The Web browser is undoubtedly the single most impor- Browser Browser tant application in the mobile ecosystem. An average user 63% 54% spends 72 minutes each day using the mobile Web browser. Web browser internal engines (e.g., WebKit) are also growing 23% 8% 32% Media 6% in importance because they provide a common substrate for 7% 7% Others developing various mobile Web applications. In a user-driven, Media Games Others interactive, and latency-sensitive environment, the browser’s Email performance is crucial. However, the battery-constrained na- (a) Time dist. of window focus. (b) Time dist. of CPU processing. ture of mobile devices limits the performance that we can de- Fig. 1: Mobile Web browser share study conducted by our industry liver for mobile Web browsing. As traditional general-purpose research partner on their employees’ devices [2]. Similar observa- techniques to improve performance and energy efficiency fall tions were reported by NVIDIA on Tegra-based mobile handsets [3,4]. short, we must employ domain-specific knowledge while still maintaining general-purpose flexibility. network limited. However, this trend is changing. With about In this paper, we first perform design-space exploration 10X improvement in round-trip time from 3G to LTE, network to identify appropriate general-purpose architectures that latency is no longer the only performance bottleneck [51]. uniquely fit the characteristics of a popular Web browsing Prior work has shown that over the past decade, network engine. -

UNIT 8B a Full Adder

UNIT 8B Computer Organization: Levels of Abstraction 15110 Principles of Computing, 1 Carnegie Mellon University - CORTINA A Full Adder C ABCin Cout S in 0 0 0 A 0 0 1 0 1 0 B 0 1 1 1 0 0 1 0 1 C S out 1 1 0 1 1 1 15110 Principles of Computing, 2 Carnegie Mellon University - CORTINA 1 A Full Adder C ABCin Cout S in 0 0 0 0 0 A 0 0 1 0 1 0 1 0 0 1 B 0 1 1 1 0 1 0 0 0 1 1 0 1 1 0 C S out 1 1 0 1 0 1 1 1 1 1 ⊕ ⊕ S = A B Cin ⊕ ∧ ∨ ∧ Cout = ((A B) C) (A B) 15110 Principles of Computing, 3 Carnegie Mellon University - CORTINA Full Adder (FA) AB 1-bit Cout Full Cin Adder S 15110 Principles of Computing, 4 Carnegie Mellon University - CORTINA 2 Another Full Adder (FA) http://students.cs.tamu.edu/wanglei/csce350/handout/lab6.html AB 1-bit Cout Full Cin Adder S 15110 Principles of Computing, 5 Carnegie Mellon University - CORTINA 8-bit Full Adder A7 B7 A2 B2 A1 B1 A0 B0 1-bit 1-bit 1-bit 1-bit ... Cout Full Full Full Full Cin Adder Adder Adder Adder S7 S2 S1 S0 AB 8 ⁄ ⁄ 8 C 8-bit C out FA in ⁄ 8 S 15110 Principles of Computing, 6 Carnegie Mellon University - CORTINA 3 Multiplexer (MUX) • A multiplexer chooses between a set of inputs. D1 D 2 MUX F D3 D ABF 4 0 0 D1 AB 0 1 D2 1 0 D3 1 1 D4 http://www.cise.ufl.edu/~mssz/CompOrg/CDAintro.html 15110 Principles of Computing, 7 Carnegie Mellon University - CORTINA Arithmetic Logic Unit (ALU) OP 1OP 0 Carry In & OP OP 0 OP 1 F 0 0 A ∧ B 0 1 A ∨ B 1 0 A 1 1 A + B http://cs-alb-pc3.massey.ac.nz/notes/59304/l4.html 15110 Principles of Computing, 8 Carnegie Mellon University - CORTINA 4 Flip Flop • A flip flop is a sequential circuit that is able to maintain (save) a state. -

EVA: an Efficient Vision Architecture for Mobile Systems

EVA: An Efficient Vision Architecture for Mobile Systems Jason Clemons, Andrea Pellegrini, Silvio Savarese, and Todd Austin Department of Electrical Engineering and Computer Science University of Michigan Ann Arbor, Michigan 48109 fjclemons, apellegrini, silvio, [email protected] Abstract The capabilities of mobile devices have been increasing at a momen- tous rate. As better processors have merged with capable cameras in mobile systems, the number of computer vision applications has grown rapidly. However, the computational and energy constraints of mobile devices have forced computer vision application devel- opers to sacrifice accuracy for the sake of meeting timing demands. To increase the computational performance of mobile systems we Figure 1: Computer Vision Example The figure shows a sock present EVA. EVA is an application-specific heterogeneous multi- monkey where a computer vision application has recognized its face. core having a mix of computationally powerful cores with energy The algorithm would utilize features such as corners and use their efficient cores. Each core of EVA has computation and memory ar- geometric relationship to accomplish this. chitectural enhancements tailored to the application traits of vision Watts over 250 mm2 of silicon, typical mobile processors are limited codes. Using a computer vision benchmarking suite, we evaluate 2 the efficiency and performance of a wide range of EVA designs. We to a few Watts with typically 5 mm of silicon [4] [22]. show that EVA can provide speedups of over 9x that of an embedded To meet the limited computation capability of mobile proces- processor while reducing energy demands by as much as 3x. sors, computer vision application developers reluctantly sacrifice image resolution, computational precision or application capabili- Categories and Subject Descriptors C.1.4 [Parallel Architec- ties for lower quality versions of vision algorithms. -

6Th Generation Intel® Core™ Processors Based on the Mobile U-Processor for Iot Solutions (Intel® Core™ I7-6600U, I5-6300U, and I3-6100U Processors)

PLATFORM BRIEF 6th Generation Intel® Core™ Mobile Processor Family Internet of Things 6th Generation Intel® Core™ Processors Based on the Mobile U-Processor for IoT Solutions (Intel® Core™ i7-6600U, i5-6300U, and i3-6100U Processors) Harness the Performance, Features, and Edge-to-Cloud Scalability to Build Tomorrow’s IoT Solutions Today Product Overview Stunning Visual Performance Intel is proud to announce its 6th The 6th generation Intel Core generation Intel® Core™ processor processors utilize the new Gen9 family featuring ultra low-power, graphics engine, which improves 64-bit, multicore processors built on graphic performance by up to the latest 14 nm technology. Designed 34 percent.1 The improvements are for small form-factor applications, this demonstrated through faster 3-D multichip package (MCP) integrates graphics performance and rendering a low-power CPU and platform applications at low power. Video controller hub (PCH) onto a common playback is also faster and smoother package substrate. thanks to the new multiplane overlay capability. The new generation offers The 6th generation Intel Core processor up to three independent audio streams family offers dramatically higher CPU and displays, Ultra HD 4K support, and and graphics performance, a broad workload consolidation for lower BOM range of power and features scaling costs and energy output. the entire Intel product line, and new, advanced features that boost edge-to- Users will also enjoy enhanced cloud Internet of Things (IoT) designs high-density streaming applications in a wide variety of markets. These and optimized 4K videoconferencing processors run at 15W thermal design with accelerated 4K hardware media power (TDP) and are ideal for small, codecs HEVC (8-bit), VP8, VP9, and energy-efficient, form-factor designs, VDENC encoding, decoding, and including digital signage, point-of-sale transcoding. -

PART I ITEM 1. BUSINESS Industry We Are

PART I ITEM 1. BUSINESS Industry We are the world’s largest semiconductor chip maker, based on revenue. We develop advanced integrated digital technology products, primarily integrated circuits, for industries such as computing and communications. Integrated circuits are semiconductor chips etched with interconnected electronic switches. We also develop platforms, which we define as integrated suites of digital computing technologies that are designed and configured to work together to provide an optimized user computing solution compared to ingredients that are used separately. Our goal is to be the preeminent provider of semiconductor chips and platforms for the worldwide digital economy. We offer products at various levels of integration, allowing our customers flexibility to create advanced computing and communications systems and products. We were incorporated in California in 1968 and reincorporated in Delaware in 1989. Our Internet address is www.intel.com. On this web site, we publish voluntary reports, which we update annually, outlining our performance with respect to corporate responsibility, including environmental, health, and safety compliance. On our Investor Relations web site, located at www.intc.com, we post the following filings as soon as reasonably practicable after they are electronically filed with, or furnished to, the U.S. Securities and Exchange Commission (SEC): our annual, quarterly, and current reports on Forms 10-K, 10-Q, and 8-K; our proxy statements; and any amendments to those reports or statements. All such filings are available on our Investor Relations web site free of charge. The SEC also maintains a web site (www.sec.gov) that contains reports, proxy and information statements, and other information regarding issuers that file electronically with the SEC. -

Survey and Benchmarking of Machine Learning Accelerators

1 Survey and Benchmarking of Machine Learning Accelerators Albert Reuther, Peter Michaleas, Michael Jones, Vijay Gadepally, Siddharth Samsi, and Jeremy Kepner MIT Lincoln Laboratory Supercomputing Center Lexington, MA, USA freuther,pmichaleas,michael.jones,vijayg,sid,[email protected] Abstract—Advances in multicore processors and accelerators components play a major role in the success or failure of an have opened the flood gates to greater exploration and application AI system. of machine learning techniques to a variety of applications. These advances, along with breakdowns of several trends including Moore’s Law, have prompted an explosion of processors and accelerators that promise even greater computational and ma- chine learning capabilities. These processors and accelerators are coming in many forms, from CPUs and GPUs to ASICs, FPGAs, and dataflow accelerators. This paper surveys the current state of these processors and accelerators that have been publicly announced with performance and power consumption numbers. The performance and power values are plotted on a scatter graph and a number of dimensions and observations from the trends on this plot are discussed and analyzed. For instance, there are interesting trends in the plot regarding power consumption, numerical precision, and inference versus training. We then select and benchmark two commercially- available low size, weight, and power (SWaP) accelerators as these processors are the most interesting for embedded and Fig. 1. Canonical AI architecture consists of sensors, data conditioning, mobile machine learning inference applications that are most algorithms, modern computing, robust AI, human-machine teaming, and users (missions). Each step is critical in developing end-to-end AI applications and applicable to the DoD and other SWaP constrained users. -

With Extreme Scale Computing the Rules Have Changed

With Extreme Scale Computing the Rules Have Changed Jack Dongarra University of Tennessee Oak Ridge National Laboratory University of Manchester 11/17/15 1 • Overview of High Performance Computing • With Extreme Computing the “rules” for computing have changed 2 3 • Create systems that can apply exaflops of computing power to exabytes of data. • Keep the United States at the forefront of HPC capabilities. • Improve HPC application developer productivity • Make HPC readily available • Establish hardware technology for future HPC systems. 4 11E+09 Eflop/s 362 PFlop/s 100000000100 Pflop/s 10000000 10 Pflop/s 33.9 PFlop/s 1000000 1 Pflop/s SUM 100000100 Tflop/s 166 TFlop/s 1000010 Tflop /s N=1 1 Tflop1000/s 1.17 TFlop/s 100 Gflop/s100 My Laptop 70 Gflop/s N=500 10 59.7 GFlop/s 10 Gflop/s My iPhone 4 Gflop/s 1 1 Gflop/s 0.1 100 Mflop/s 400 MFlop/s 1994 1996 1998 2000 2002 2004 2006 2008 2010 2012 2014 2015 1 Eflop/s 1E+09 420 PFlop/s 100000000100 Pflop/s 10000000 10 Pflop/s 33.9 PFlop/s 1000000 1 Pflop/s SUM 100000100 Tflop/s 206 TFlop/s 1000010 Tflop /s N=1 1 Tflop1000/s 1.17 TFlop/s 100 Gflop/s100 My Laptop 70 Gflop/s N=500 10 59.7 GFlop/s 10 Gflop/s My iPhone 4 Gflop/s 1 1 Gflop/s 0.1 100 Mflop/s 400 MFlop/s 1994 1996 1998 2000 2002 2004 2006 2008 2010 2012 2014 2015 1E+10 1 Eflop/s 1E+09 100 Pflop/s 100000000 10 Pflop/s 10000000 1 Pflop/s 1000000 SUM 100 Tflop/s 100000 10 Tflop/s N=1 10000 1 Tflop/s 1000 100 Gflop/s N=500 100 10 Gflop/s 10 1 Gflop/s 1 100 Mflop/s 0.1 1996 2002 2020 2008 2014 1E+10 1 Eflop/s 1E+09 100 Pflop/s 100000000 10 Pflop/s 10000000 1 Pflop/s 1000000 SUM 100 Tflop/s 100000 10 Tflop/s N=1 10000 1 Tflop/s 1000 100 Gflop/s N=500 100 10 Gflop/s 10 1 Gflop/s 1 100 Mflop/s 0.1 1996 2002 2020 2008 2014 • Pflops (> 1015 Flop/s) computing fully established with 81 systems. -

SPORK: a Summarization Pipeline for Online Repositories of Knowledge

SPORK: A SUMMARIZATION PIPELINE FOR ONLINE REPOSITORIES OF KNOWLEDGE A Thesis presented to the Faculty of California Polytechnic State University San Luis Obispo In Partial Fulfillment of the Requirements for the Degree Master of Science in Computer Science by Steffen Lyngbaek June 2013 c 2013 Steffen Lyngbaek ALL RIGHTS RESERVED ii COMMITTEE MEMBERSHIP TITLE: SPORK: A Summarization Pipeline for Online Repositories of Knowledge AUTHOR: Steffen Lyngbaek DATE SUBMITTED: June 2013 COMMITTEE CHAIR: Professor Alexander Dekhtyar, Ph.D., De- parment of Computer Science COMMITTEE MEMBER: Professor Franz Kurfess, Ph.D., Depar- ment of Computer Science COMMITTEE MEMBER: Professor Foaad Khosmood, Ph.D., Depar- ment of Computer Science iii Abstract SPORK: A Summarization Pipeline for Online Repositories of Knowledge Steffen Lyngbaek The web 2.0 era has ushered an unprecedented amount of interactivity on the Internet resulting in a flood of user-generated content. This content is of- ten unstructured and comes in the form of blog posts and comment discussions. Users can no longer keep up with the amount of content available, which causes developers to start relying on natural language techniques to help mitigate the problem. Although many natural language processing techniques have been em- ployed for years, automatic text summarization, in particular, has recently gained traction. This research proposes a graph-based, extractive text summarization system called SPORK (Summarization Pipeline for Online Repositories of Knowl- edge). The goal of SPORK is to be able to identify important key topics presented in multi-document texts, such as online comment threads. While most other automatic summarization systems simply focus on finding the top sentences rep- resented in the text, SPORK separates the text into clusters, and identifies dif- ferent topics and opinions presented in the text. -

Theoretical Peak FLOPS Per Instruction Set on Modern Intel Cpus

Theoretical Peak FLOPS per instruction set on modern Intel CPUs Romain Dolbeau Bull – Center for Excellence in Parallel Programming Email: [email protected] Abstract—It used to be that evaluating the theoretical and potentially multiple threads per core. Vector of peak performance of a CPU in FLOPS (floating point varying sizes. And more sophisticated instructions. operations per seconds) was merely a matter of multiplying Equation2 describes a more realistic view, that we the frequency by the number of floating-point instructions will explain in details in the rest of the paper, first per cycles. Today however, CPUs have features such as vectorization, fused multiply-add, hyper-threading or in general in sectionII and then for the specific “turbo” mode. In this paper, we look into this theoretical cases of Intel CPUs: first a simple one from the peak for recent full-featured Intel CPUs., taking into Nehalem/Westmere era in section III and then the account not only the simple absolute peak, but also the full complexity of the Haswell family in sectionIV. relevant instruction sets and encoding and the frequency A complement to this paper titled “Theoretical Peak scaling behavior of current Intel CPUs. FLOPS per instruction set on less conventional Revision 1.41, 2016/10/04 08:49:16 Index Terms—FLOPS hardware” [1] covers other computing devices. flop 9 I. INTRODUCTION > operation> High performance computing thrives on fast com- > > putations and high memory bandwidth. But before > operations => any code or even benchmark is run, the very first × micro − architecture instruction number to evaluate a system is the theoretical peak > > - how many floating-point operations the system > can theoretically execute in a given time. -

NVIDIA Tegra 4 Family CPU Architecture 4-PLUS-1 Quad Core

Whitepaper NVIDIA Tegra 4 Family CPU Architecture 4-PLUS-1 Quad core 1 Table of Contents ...................................................................................................................................................................... 1 Introduction .............................................................................................................................................. 3 NVIDIA Tegra 4 Family of Mobile Processors ............................................................................................ 3 Benchmarking CPU Performance .............................................................................................................. 4 Tegra 4 Family CPUs Architected for High Performance and Power Efficiency ......................................... 6 Wider Issue Execution Units for Higher Throughput ............................................................................ 6 Better Memory Level Parallelism from a Larger Instruction Window for Out-of-Order Execution ...... 7 Fast Load-To-Use Logic allows larger L1 Data Cache ............................................................................. 8 Enhanced branch prediction for higher efficiency .............................................................................. 10 Advanced Prefetcher for higher MLP and lower latency .................................................................... 10 Large Unified L2 Cache .......................................................................................................................