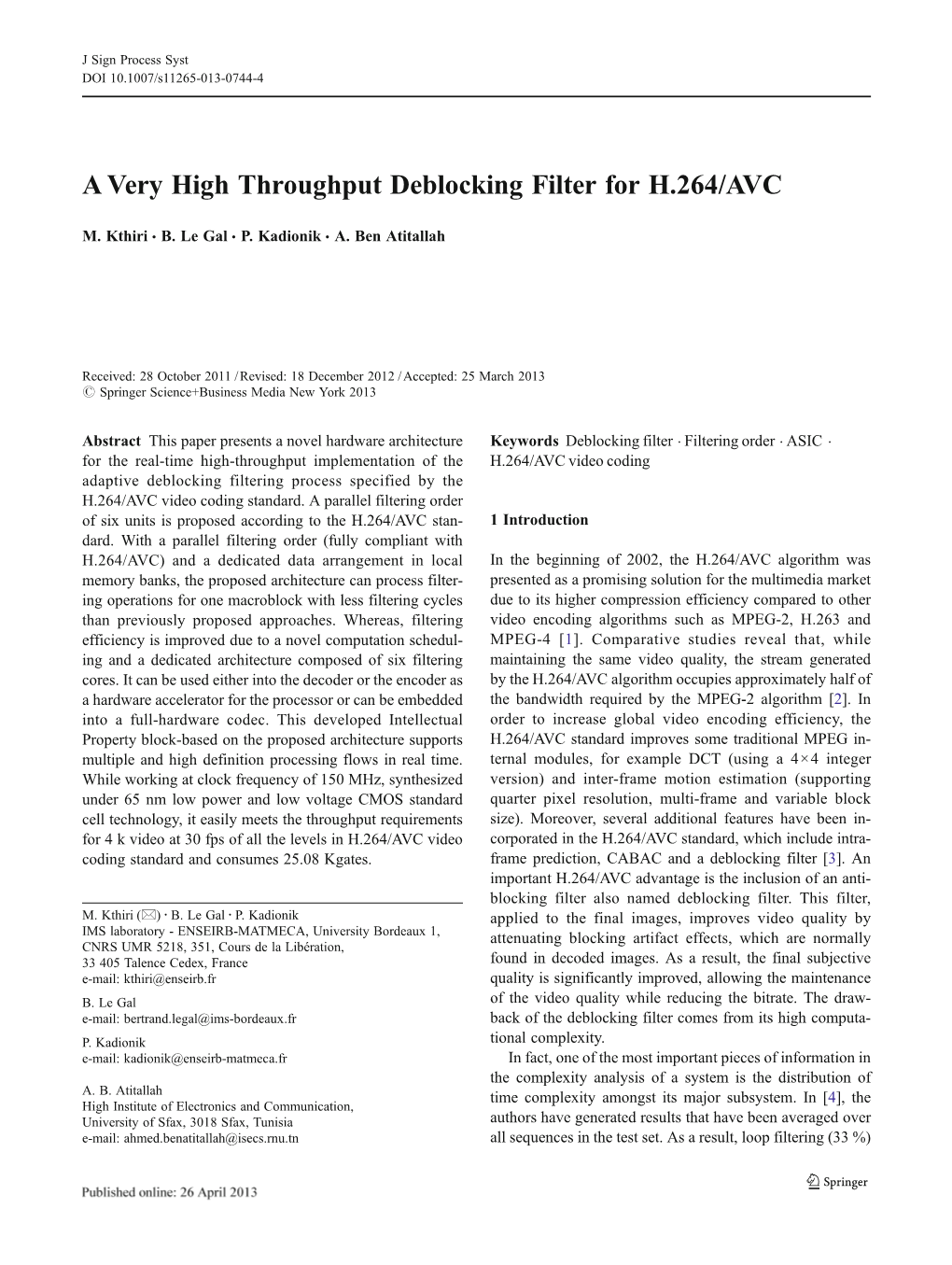

A Very High Throughput Deblocking Filter for H.264/AVC

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

A Deblocking Filter Hardware Architecture for the High Efficiency

A Deblocking Filter Hardware Architecture for the High Efficiency Video Coding Standard Cláudio Machado Diniz1, Muhammad Shafique2, Felipe Vogel Dalcin1, Sergio Bampi1, Jörg Henkel2 1Informatics Institute, PPGC, Federal University of Rio Grande do Sul (UFRGS), Porto Alegre, Brazil 2Chair for Embedded Systems (CES), Karlsruhe Institute of Technology (KIT), Germany {cmdiniz, fvdalcin, bampi}@inf.ufrgs.br; {muhammad.shafique, henkel}@kit.edu Abstract—The new deblocking filter (DF) tool of the next generation High Efficiency Video Coding (HEVC) standard is one of the most time consuming algorithms in video decoding. In order to achieve real-time performance at low-power consumption, we developed a hardware accelerator for this filter. This paper proposes a high throughput hardware architecture for HEVC deblocking filter employing hardware reuse to accelerate filtering decision units with a low area cost. Our architecture achieves either higher or equivalent throughput (4096x2048 @ 60 fps) with 5X-6X lower area compared to state-of-the-art deblocking filter architectures. Keywords—HEVC coding; Deblocking Filter; Hardware [...] encoder configuration: (i) Random Access (RA) configuration1 with Group of Pictures (GOP) equal to 8 (ii) Intra period2 for each video sequence is defined as in [8] depending upon the specific frame rate of the video sequence, e.g. 24, 30, 50 or 60 frames per second (fps); (iii) each sequence is encoded with four different Quantization Parameter (QP) values QP={22,27,32,37} as defined in the HEVC Common Test Conditions [8]. Fig. 1 shows the accumulated execution time (in % of total decoding time) of all functions included in C++ class TComLoopFilter that implement the DF in HEVC decoder software. DF contributes to up to 5%-18% to the total decoding time, depending on video sequence and QP.

An Efficient Pipeline Architecture for Deblocking Filter in H.264/AVC

IEICE TRANS. INF. & SYST., VOL.E90–D, NO.1 JANUARY 2007 99 PAPER Special Section on Advanced Image Technology An Efficient Pipeline Architecture for Deblocking Filter in H.264/AVC Chung-Ming CHEN†a), Member and Chung-Ho CHEN†b), Nonmember SUMMARY In this paper, we study and analyze the computational complexity of deblocking filter in H.264/AVC baseline decoder based on SimpleScalar/ARM simulator. The simulation result shows that the memory reference, content activity check operations, and filter operations are known to be very time consuming in the decoder of this new video coding standard. In order to improve overall system performance, we propose a novel processing order with efficient VLSI architecture which simultaneously processes the horizontal filtering of vertical edge and vertical filtering of horizontal edge. As a result, the memory performance of the proposed architecture is improved by four times when compared to the software implementation. Moreover, the system performance of our design significantly outperforms the previous proposals. key words: deblocking filter, H.264/AVC, video coding 1. Introduction Video compression is a critical component in today’s multimedia systems. The limited transmission bandwidth or storage capacity for applications such as DVD, digital television, or internet video streaming emphasizes the demand for higher video compression rates. To achieve this demand, the new video coding standard Recommendation H.264 of [...] Fig. 1 Block diagram of H.264/AVC. As our experiment result indicates, the operation of the deblocking filter is the most time consuming part of the H.264/AVC video decoder. The block-based structure of the H.264/AVC architecture produces artifacts known as blocking artifacts.

Variable Block-Based Deblocking Filter for H.264/AVC

VARIABLE BLOCK-BASED DEBLOCKING FILTER FOR H.264/AVC Seung-Ho Shin, Young-Joon Chai, Kyu-Sik Jang, Tae-Yong Kim GSAIM, Chung-Ang University, Seoul, Korea ABSTRACT The deblocking filter in H.264/AVC is used on low-end terminals to a limited extent due to computational complexity. In this paper, Variable block-based deblocking filter that efficiently eliminates the blocking artifacts occurred during the compression of low-bit rates digital motion pictures is suggested. Blocking artifacts are plaid images appear on the block boundaries due to DCT and quantization. In the method proposed in this paper, the image's spatial correlational characteristics are extracted by using the variable block information of motion compensation; the filtering is divided into 4 modes according to the characteristics, and adaptive filtering is executed in the divided regions. The proposed deblocking method eliminates the blocking artifacts, prevents excessive [...] macroblocks up to 4x4 blocks, it is possible to decrease artifacts on the boundaries between 4x4 blocks. In the process of decreasing the blocking artifacts, however, the actual images’ edges may erroneously be blurred. And it may not be used on low-end terminals due to complex computation and large memory capacity. Despite such shortcomings, however, the deblocking filter can be said to be the most essential technology in enhancing the subjective image quality. The general opinion of the subjective image quality test proves that there is distinguishing difference in the image qualities with and without the deblocking filter used [6]. In this paper, we suggest a block-based deblocking filter by using the variable block information of the motion compensation.

Deblocking Scheme for JPEG-Coded Images Using Sparse Representation and All Phase Biorthogonal Transform

Journal of Communications Vol. 11, No. 12, December 2016 Deblocking Scheme for JPEG-Coded Images Using Sparse Representation and All Phase Biorthogonal Transform Liping Wang, Chengyou Wang, and Xiao Zhou School of Mechanical, Electrical and Information Engineering, Shandong University, Weihai 264209, China Email: [email protected]; {wangchengyou, zhouxiao}@sdu.edu.cn Abstract—For compressed images, a major drawback is that images will exhibit severe blocking artifacts at very low bit rates due to adopting Block-Based Discrete Cosine Transform (BDCT). In this paper, a novel deblocking scheme using sparse representation is proposed. A new transform called All Phase Biorthogonal Transform (APBT) was proposed in recent years. APBT has the similar energy compaction property with Discrete Cosine Transform (DCT). It has very good column properties, high frequency attenuation characteristics, low frequency energy aggregation, and so on. In this paper, we use it to generate the over-completed dictionary for sparse coding. For Orthogonal Matching Pursuit (OMP), we select an adaptive residual threshold by combining blind image blocking assessment. Experimental results show that this new scheme is effective in image deblocking and can avoid over-blurring of edges and textures. [...] The image deblocking algorithm aims to alleviate those blocking artifacts and improve visual quality of compressed images. Deblocking methods can be mainly divided into two categories: in-loop processing methods and post-processing methods. The in-loop deblocking processing methods not only avoid the propagation of blocking artifacts between adjacent frames, but also can enhance coding efficiency. For example, H.264/AVC [2] adopts the in-loop deblocking processing. Some researchers have designed the in-loop deblocking filter [4]. Numerous experimental results manifest that the in-loop deblocking filter can provide both objective and subjective improvement compared with the compressed

A Deblocking Filter Architecture for High Efficiency Video Coding Standard (HEVC)

UNIVERSIDADE FEDERAL DO RIO GRANDE DO SUL INSTITUTO DE INFORMÁTICA CURSO DE ENGENHARIA DE COMPUTAÇÃO FELIPE VOGEL DALCIN A Deblocking Filter Architecture for High Efficiency Video Coding Standard (HEVC) Final report presented in partial fulfillment of the Requirements for the degree in Computer Engineering. Advisor: Prof. Dr. Sergio Bampi Co-advisor: MSc. Cláudio Machado Diniz Porto Alegre 2014 UNIVERSIDADE FEDERAL DO RIO GRANDE DO SUL Reitor: Prof. Carlos Alexandre Netto Vice-Reitor: Prof. Rui Vicente Oppermann Pró-Reitor de Graduação: Prof. Sérgio Roberto Kieling Diretor do Instituto de Informática: Prof. Luís da Cunha Lamb Coordenador do Curso de Engenharia de Computação: Prof. Marcelo Götz Bibliotecária-Chefe do Instituto de Informática: Beatriz Regina Bastos Haro “Docendo discimus.” Seneca ACKNOWLEDGEMENTS I would like to express my gratitude to my advisor, Prof. Dr. Sergio Bampi, who accepted me as lab assistant in 2010 to work for the research group under his supervision, bringing me the opportunity to stay in touch with a high-level academic environment. A very special thanks goes out to my co-advisor, MSc. Cláudio Machado Diniz, whose expertise, understanding, patience and unconditional support were vital to the accomplishment of this work, especially taking into consideration the reduced amount of time he had to review this work. Last but not least, I would like to thank my parents and friends for their unconditional support throughout my degree. A special remark goes to my girlfriend, who kept me motivated during the last weeks of work to get this report done. In conclusion, I recognize that this research would not have been possible without the opportunities offered by both the Universidade Federal do Rio Grande do Sul, in Brazil, and the Technische Universität Kaiserslautern, in Germany.

Optimization Methods for Data Compression

OPTIMIZATION METHODS FOR DATA COMPRESSION A Dissertation Presented to The Faculty of the Graduate School of Arts and Sciences Brandeis University Computer Science James A. Storer Advisor In Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy by Giovanni Motta May 2002 This dissertation, directed and approved by Giovanni Motta's Committee, has been accepted and approved by the Graduate Faculty of Brandeis University in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY Dean of Arts and Sciences Dissertation Committee James A. Storer, Martin Cohn, Jordan Pollack, Bruno Carpentieri To My Parents. ACKNOWLEDGEMENTS I wish to thank: Bruno Carpentieri, Martin Cohn, Antonella Di Lillo, Jordan Pollack, Francesco Rizzo, James Storer for their support and collaboration. I also thank Jeanne DeBaie, Myrna Fox, Julio Santana for making my life at Brandeis easier and enjoyable. ABSTRACT Optimization Methods for Data Compression A dissertation presented to the Faculty of the Graduate School of Arts and Sciences of Brandeis University, Waltham, Massachusetts by Giovanni Motta Many data compression algorithms use ad-hoc techniques to compress data efficiently. Only in very few cases, can data compressors be proved to achieve optimality on a specific information source, and even in these cases, algorithms often use sub-optimal procedures in their execution. It is appropriate to ask whether the replacement of a sub-optimal strategy by an optimal one in the execution of a given algorithm results in a substantial improvement of its performance. Because of the differences between algorithms the answer to this question is domain dependent and our investigation is based on a case-by-case analysis of the effects of using an optimization procedure in a data compression algorithm.

Low Complexity Deblocking Filter Perceptual Optimization for the HEVC Codec

2011 18th IEEE International Conference on Image Processing LOW COMPLEXITY DEBLOCKING FILTER PERCEPTUAL OPTIMIZATION FOR THE HEVC CODEC Matteo Naccari 1, Catarina Brites 1,2, João Ascenso 1,3 and Fernando Pereira 1,2 Instituto de Telecomunicações 1 – Instituto Superior Técnico 2, Instituto Superior de Engenharia de Lisboa 3 {matteo.naccari, catarina.brites, joao.ascenso, fernando.pereira}@lx.it.pt ABSTRACT The compression efficiency of the state-of-art H.264/AVC video coding standard must be improved to accommodate the compression needs of high definition videos. To this end, ITU and MPEG started a new standardization project called High Efficiency Video Coding. The video codec under development still relies on transform domain quantization and includes the same in-loop deblocking filter adopted in the H.264/AVC standard to reduce quantization blocking artifacts. This deblocking filter provides two offsets to vary the amount of filtering for each image area. This paper proposes a perceptual optimization of these offsets based on a [...] codec. The perceptual optimization is performed by varying the two aforementioned offsets to minimize a Generalized Block-edge Impairment Metric (GBIM) [7], taken as good quality metric to quantify the blocking artifacts visibility. The proposed novelty may be summarized in two main contributions: first, the GBIM is extended to consider the new block sizes considered in the TMuC codec and, second, a low complexity deblocking filter offsets perceptual optimization is proposed to improve the GBIM quality while significantly reducing the computational resources that would be required by a brute force approach where all possible offset values would be exhaustively tested.

Multimedia Compression and Communication

UNIT I – MULTIMEDIA COMPONENTS DSEC/ECE/QB DHANALAKSHMI SRINIVASAN ENGINEERING COLLEGE, PERAMBALUR DEPARTMENT OF ELECTRONICS & COMMUNICATION ENGINEERING EC6018 MULTIMEDIA COMPRESSION & COMMUNICATION UNIT I MULTIMEDIA COMPONENTS PART – A 1. What are the responsibilities of interface and information designers in the development of a multimedia project? (A/M 15) An interface designer is responsible for: i) creating software device that organizes content, allows users to access or modify content and present that content on the screen, ii) building a user friendly interface. Information designers, who structure content, determine user pathways and feedback and select presentation media. 2. List the features of multimedia. (A/M 14) A Multimedia system has four basic characteristics: Multimedia systems must be computer controlled. Multimedia systems are integrated. The information they handle must be represented digitally. The interface to the final presentation of media is usually interactive. 3. What are the multimedia components? (A/M 17) Text, Audio, Images, Animations, Video and interactive content are the multimedia components. The first multimedia element is text. Text is the most common multimedia element. 4. Differentiate Serif and Sans serif fonts. (N/D 16, A/M 16, N/D 15) Answer:

| S.No. | Serif fonts | Sans serif fonts |
|---|---|---|
| 1. | A font that has decorative corners or stands at the corners is called Serif. | The ones without such decorative corners are called Sans Serif (No Serif) fonts. |
| 2. | Serif stands for stroke or line | Sans means “without”. So Sans Serif font means font without strokes or lines. |
| 3. | Serif fonts have the extra stroke or [...] | Sans-Serif doesn’t have any such [...] |

Introduction to Data Compression

THIRD EDITION Introduction to Data Compression Khalid Sayood University of Nebraska AMSTERDAM • BOSTON • HEIDELBERG • LONDON • NEW YORK • OXFORD • PARIS • SAN DIEGO • SAN FRANCISCO • SINGAPORE • SYDNEY • TOKYO ELSEVIER Morgan Kaufmann is an imprint of Elsevier Contents Preface 1 Introduction 1.1 Compression Techniques 1.1.1 Lossless Compression 1.1.2 Lossy Compression 1.1.3 Measures of Performance 1.2 Modeling and Coding 1.3 Summary 1.4 Projects and Problems 2 Mathematical Preliminaries for Lossless Compression 2.1 Overview 2.2 A Brief Introduction to Information Theory 2.2.1 Derivation of Average Information * 2.3 Models 2.3.1 Physical Models 2.3.2 Probability Models 2.3.3 Markov Models 2.3.4 Composite Source Model 2.4 Coding 2.4.1 Uniquely Decodable Codes 2.4.2 Prefix Codes 2.4.3 The Kraft-McMillan Inequality * 2.5 Algorithmic Information Theory 2.6 Minimum Description Length Principle 2.7 Summary 2.8 Projects and Problems 3 Huffman Coding 3.1 Overview 3.2 The Huffman Coding Algorithm 3.2.1 Minimum Variance Huffman Codes 3.2.2 Optimality of Huffman Codes * 3.2.3 Length of Huffman Codes * 3.2.4 Extended Huffman Codes * 3.3 Nonbinary Huffman Codes * 3.4 Adaptive Huffman Coding 3.4.1 Update Procedure 3.4.2 Encoding Procedure 3.4.3 Decoding Procedure 3.5 Golomb Codes 3.6 Rice Codes 3.6.1 CCSDS Recommendation for Lossless Compression 3.7 Tunstall Codes 3.8 Applications of Huffman Coding 3.8.1 Lossless Image Compression 3.8.2 Text Compression 3.8.3 Audio Compression

Source and Channel Coding for Audiovisual Communication Systems

Thesis for the degree of Doctor of Philosophy Source and Channel Coding for Audiovisual Communication Systems Moo Young Kim Sound and Image Processing Laboratory Department of Signals, Sensors, and Systems Kungliga Tekniska Högskolan Stockholm 2004 Kim, Moo Young Source and Channel Coding for Audiovisual Communication Systems Copyright © 2004 Moo Young Kim except where otherwise stated. All rights reserved. ISBN 91-628-6198-0 TRITA-S3-SIP 2004:3 ISSN 1652-4500 ISRN KTH/S3/SIP-04:03-SE Sound and Image Processing Laboratory Department of Signals, Sensors, and Systems Kungliga Tekniska Högskolan SE-100 44 Stockholm, Sweden Telephone + 46 (0)8-790 7790 To my family Abstract Topics in source and channel coding for audiovisual communication systems are studied. The goal of source coding is to represent a source with the lowest possible rate to achieve a particular distortion, or with the lowest possible distortion at a given rate. Channel coding adds redundancy to quantized source information to recover channel errors. This thesis consists of four topics. Firstly, based on high-rate theory, we propose Karhunen-Loève transform (KLT)-based classified vector quantization (VQ) to efficiently utilize optimal VQ advantages over scalar quantization (SQ). Compared with code-excited linear predictive (CELP) speech coding, KLT-based classified VQ provides not only a higher SNR and perceptual quality, but also lower computational complexity. Further improvement is obtained by companding. Secondly, we compare various transmitter-based packet-loss recovery techniques from a rate-distortion viewpoint for real-time audiovisual communication systems over the Internet. We conclude that, in most circumstances, multiple description coding (MDC) is the best packet-loss recovery technique.

FPGA Implementation of Deblocking Filter Custom Instruction Hardware on NIOS-II Based SoC

International Journal of VLSI design & Communication Systems (VLSICS) Vol.2, No.4, December 2011 FPGA IMPLEMENTATION OF DEBLOCKING FILTER CUSTOM INSTRUCTION HARDWARE ON NIOS-II BASED SOC Bolla Leela Naresh 1, N.V.Narayana Rao 2 and Addanki Purna Ramesh 3 Department of ECE, Sri Vasavi Engg College, Tadepalligudem, West Godavari (dt), Andhra Pradesh, India [email protected] [email protected] [email protected] ABSTRACT This paper presents a framework for hardware acceleration for post video processing system implemented on FPGA. The deblocking filter algorithms ported on SOC having Altera NIOS-II soft core processor. SOC designed with the help of SOPC builder. Custom instructions are chosen by identifying the most frequently used tasks in the algorithm and the instruction set of NIOS-II processor has been extended. Deblocking filter new instruction added to the processor that are implemented in hardware and interfaced to the NIOS-II processor. New instruction added to the processor to boost the performance of the deblocking filter algorithm. Use of custom instructions the implemented tasks have been accelerated by 5.88%. The benefit of the speed is obtained at the cost of very small hardware resources. KEYWORDS Deblocking filter, SOC, NIOS-II soft processor, FPGA 1. INTRODUCTION Video broadcasting over the internet to handheld devices and mobile phones is becoming increasingly popular in the recent years. Also High Definition TVs are becoming common. But due to the limitation in the transmission bandwidth the videos will generally be encoded using video coding techniques which uses DCT and quantization that brings blockiness in the decoded video.

Bit-Rate Aware Reconfigurable Architecture for H.264/AVC Deblocking Filter

University of Central Florida STARS Electronic Theses and Dissertations, 2004-2019 2010 Bit-rate Aware Reconfigurable Architecture For H.264/avc Deblocking Filter Rakan Khraisha University of Central Florida Part of the Electrical and Electronics Commons Find similar works at: https://stars.library.ucf.edu/etd University of Central Florida Libraries http://library.ucf.edu This Masters Thesis (Open Access) is brought to you for free and open access by STARS. It has been accepted for inclusion in Electronic Theses and Dissertations, 2004-2019 by an authorized administrator of STARS. For more information, please contact [email protected]. STARS Citation Khraisha, Rakan, "Bit-rate Aware Reconfigurable Architecture For H.264/avc Deblocking Filter" (2010). Electronic Theses and Dissertations, 2004-2019. 1571. https://stars.library.ucf.edu/etd/1571 BIT-RATE AWARE RECONFIGURABLE ARCHITECTURE FOR H.264/AVC DEBLOCKING FILTER by RAKAN KHRAISHA B.S. University of Jordan, 2007 A thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in the School of Electrical Engineering and Computer Science in the College of Engineering and Computer Science at the University of Central Florida Orlando, Florida Summer Term 2010 ©2010 Rakan Khraisha ABSTRACT In H.264/AVC, DeBlocking Filter (DBF) achieves bit rate savings and it is used to improve visual quality by reducing the presence of blocking artifacts. However, these advantages come at the expense of increasing computational complexity of the DBF due to highly adaptive mode decision and small 4x4 block size. The DBF easily accounts for one third of the computational complexity of the decoder.