Reputation Systems for Anonymous Networks

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

QUICK GUIDE How to Search for the Existing Award ID (Including Legacy

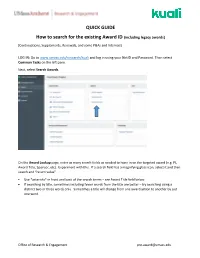

QUICK GUIDE How to search for the existing Award ID (including legacy awards) (Continuations, Supplements, Renewals, and some P&As and Internals) LOG IN: Go to www.umass.edu/research/kuali and log in using your NetID and Password. Then select Common Tasks on the left pane. Next, select Search Awards. On the Award Lookup page, enter as many search fields as needed to hone in on the targeted award (e.g. PI, Award Title, Sponsor, etc). Experiment with this. If a search field has a magnifying glass icon, select it and then search and “return value”. • Use *asterisks* in front and back of the search terms – see Award Title field below. • If searching by title, sometimes including fewer words from the title are better – try searching using a distinct two or three words only. Sometimes a title will change from one award action to another by just one word. Office of Research & Engagement [email protected] • After searching, run a report by selecting spreadsheet (seen below this chart on the left). Suggestions: Save as an Excel Workbook when multiple award records appear in order to hone in on the correct version. Delete columns as needed and add a filter to help with the sort and search process. Office of Research & Engagement [email protected] Identify the correct award Always select the so-called “Parent” award – the suffix is always “-00001” Note: To confirm linkage with the correct legacy award record: • In Kuali, select Medusa in the “-00001” record. • In the list that appears, select any award record except for the “-00001” Parent (the legacy data does not reside in the “Parent” record). -

Diptico Quiropractica Copia

VIII QUEEN Award Guidelines María CHIROPRACTIC Cristina Sponsored by the Banco Santander Award Call for Papers: VIII Queen María Cristina Award. The Real Centro Universitario Escorial-María Cristina invites authors from all nations to submit papers for this award sponsored by the Santander Bank. Category: CHIROPRACTIC AWARD GUIDELINES 1 In regard to the award, an author may submit one or more papers. The paper must be unpublished and authored by one or more people. This is an international award and the papers may be presented in English or Spanish. The research paper must be original, neither published in any journal nor presented to other award contests or conferences. 2 The research papers may be on Basic Sciences (experimental models, Anatomy, Physiology, Biomechanics, Immunology, etc), Clinical Sciences (Analytical and Diagnostic Methods, including intra- and inter-examiner reliability), Clinical Trials, Retrospective Cohort Studies, and areas of specific interest (Anthropology, Epidemiology, and Education) related to Chiropractic. 3 Papers need to be submitted using Times New Roman font in 12 pt with 1.5 line spacing, in a Word-format (.doc or .docx) to the email address [email protected] from an account that ought not to revel the identity of the author. On the first page, the category should be indicated (CHIROPRACTIC), title of the paper, and the pseudonym (pen name) of the author. The real name(s) of the author(s) may not appear on the front page or any page throughout the paper. If the real name is found in any part of the paper or the sending address displays the real identity of the sender, it will be considered disqualified for the award. -

Program Book

PROGRAM BOOK U.S. Congress’ Award for Youth The official guide to earning The Congressional Award, complete with program requirements, best practices, and Record Book. YOUR JOURNEY STARTS HERE The United States Congress established Public Law 96-114: The Congressional Award Act on November 16, 1979 to recognize initiative, service, and achievement in young people. Today, The Congressional Award remains Congress’ only charity and the highest honor a member of the U.S. Senate or House of Representatives may bestow upon a youth civilian. We hope that through your pursuit of this coveted honor, you will not only serve your community and sharpen your own skills, but discover your passions, equip yourself for your future, and see humanity through a new perspective. Your journey awaits. 2 PROGRAM BOOK - V.19 TABLE OF CONTENTS GETTING STARTED 04 PROGRAM REQUIREMENTS 06 PROGRAM AREAS 08 GENERAL ELIGIBILTY 14 AWARD PRESENTATIONS 18 ADVISORS & VALIDATORS 19 RECORD BOOK 21 OUR IMPACT 27 PROGRAM BOOK - V.19 3 GETTING STARTED Earning The Congressional Award is a proactive and enriching way to get involved. This is not an award for past accomplishments. Instead, youth are honored for setting personally challenging goals and meeting the needs of their community. The program is non-partisan, voluntary, and non-competitive. Young people may register when they turn 13 1/2 years old and must complete their activities by their 24th birthday. Participants earn Bronze, Silver, and Gold Congressional Award Certificates and Bronze, Silver, and Gold Congressional Award Medals. Each level involves setting goals in four program areas: Voluntary Public Service, Personal Development, Physical Fitness, and Expedition/Exploration. -

I Did It! I've Achieved My Award! How Do I Add My Award to Linkedin?

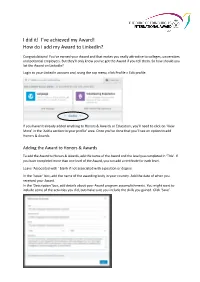

I did it! I’ve achieved my Award! How do I add my Award to LinkedIn? Congratulations! You’ve earned your Award and that makes you really attractive to colleges, universities and potential employers. But they’ll only know you’ve got the Award if you tell them. So how should you list the Award on LinkedIn? Login to your LinkedIn account and, using the top menu, click Profile > Edit profile. If you haven’t already added anything to Honors & Awards or Education, you’ll need to click on ‘View More’ in the ‘Add a section to your profile’ area. Once you’ve done that you’ll see an option to add Honors & Awards. Adding the Award to Honors & Awards To add the Award to Honors & Awards, add the name of the Award and the level you completed in ‘Title’. If you have completed more than one level of the Award, you can add a certificate for each level. Leave ‘Associated with ’ blank if not associated with a position or degree. In the ‘Issuer’ box, add the name of the awarding body in your country. Add the date of when you received your Award. In the ‘Description’ box, add details about your Award program accomplishments. You might want to include some of the activities you did, but make sure you include the skills you gained. Click ‘Save’. Adding the Award to Education You can add the Award to Education if you completed your Award through your school, college or university. Once you have added the name of your school and the dates you attended, you can add the name of the Award to ‘Activities and Societies’. -

Form Phs-6342-2

DEPARTMENT OF HEALTH AND HUMAN SERVICES Public Health Service Commissioned Corps INDIVIDUAL HONOR AWARD NOMINATION RECORD PART I OFFICER’S NAME (Last, First, MI) ENTRY ON DUTY DATE PHS RANK (O - 1 through O - 10) PHS PROFESSIONAL CATEGORY SERNO CURRENT ORGANIZATION ORGANIZATIONAL TITLE OR POSITION PROPOSED AWARD PERIOD COVERED (mm/dd/yyyy) From To NOTE: (Synopsis of specific achievement for which the individual is being nominated must be limited to 150 characters.) CITED FOR The nominator certifies that the officer is deserving of the proposed award, and that the accompanying documentation accurately and completely reflects the relevant information. Additionally, the nominator certifies that the officer has not received nor is being nominated for another award for which the basis overlaps this nomination (except as specifically cited). Fill-in Name/Title and Date before Digitally Signing as these and all fields above will lock. NOMINATOR (SIGNATURE) NAME AND TITLE (TYPED) DATE ENDORSEMENTS SUPERVISORY / LINE AUTHORITY SIGNATURE NAME AND TITLE (TYPED) AWARD ENDORSED* DATE SIGNATURE NAME AND TITLE (TYPED) AWARD ENDORSED* DATE SIGNATURE NAME AND TITLE (TYPED) AWARD ENDORSED* DATE OPERATING DIVISION (OPDIV) OR NON-HHS ORGANIZATION AWARDS BOARD CHAIRPERSON SIGNATURE NAME AND TITLE (TYPED) AWARD ENDORSED* DATE APPROVING AUTHORITY SIGNATURE NAME AND TITLE (TYPED) AWARD ENDORSED* DATE OPDIV OR NON-HHS ORGANIZATION AWARDS COORDINATOR SIGNATURE NAME AND TITLE (TYPED) AWARD ENDORSED* DATE *NOTE: If a lower level award is endorsed, give reason in "comment" section below. Also, use the section below to document external agency concurrence as needed. COMMENT DATE ACTION COMMENTS CCIAB Recommended Not Recommended DATE ACTION COMMENTS PHS-CCAB Recommended Not Recommended DATE ACTION COMMENTS SURGEON GENERAL Approved Not Approved PHS-6342-2 (Rev. -

Nomination Form

2020 Bikeability Awards Programme – NOMINATION FORM Please carefully read the Guidance Notes (click here) for the Bikeability Awards before submitting and completing this nomination. (also available on https://bikeabilitytrust.org/bikeability-awards/ ) Please send your completed form (and any attachments) by email attachment to [email protected] before noon on Friday February 28th 2020. TO NOTE: Only one nomination can be submitted per form. 1. Your Details First name: Millie Surname: Webb Organisation/Group (if applicable): Cycle Confident Address and postcode: LH.LG.04 Lincoln House, 1-3 Brixton Road, SW9 6DE Email address: [email protected] Telephone: 020 3031 6730 Mobile: 07889213908 If not a self-nomination, briefly describe your relationship with the nominee (e.g. work colleague, employer, pupil, other): Pupil at a client school 2. Nominee’s Details (main contact) If submitting a nomination for Trainee of the Year, please provide their name and/or their parent/teacher/carer contact details if more appropriate. First name: Aisha Surname: Jesani Organisation/Group (if applicable): Address and postcode: Holland House Primary School Email address: [email protected] Telephone: 020 3031 6730 Mobile: N/A Name of award for which he/she/they/the organisation is being nominated (please highlight/underline/select one): A. Instructor of the Year B. Bikeability Provider of the Year C. Local Authority/SGO Host School Partner of the Year (outsourced delivery) D. School Employee or Governor of the Year E. Trainee Rider of the Year (Pete Rollings award) F. Most Proactive Primary School Registered address: Salisbury House, Station Road, Cambridge CB1 2LA Charity registered in England and Wales no: 1171111 VAT registration number: 268 0103 23 1. -

JSP 761, Honours and Awards in the Armed Forces. Part 1

JSP 761 Honours and Awards in the Armed Forces Part 1: Directive JSP 761 Pt 1 (V5.0 Oct 16) Foreword People lie at the heart of operational capability; attracting and retaining the right numbers of capable, motivated individuals to deliver Defence outputs is critical. This is dependent upon maintaining a credible and realistic offer that earns and retains the trust of people in Defence. Part of earning and retaining that trust, and being treated fairly, is a confidence that the rules and regulations that govern our activity are relevant, current, fair and transparent. Please understand, know and use this JSP, to provide that foundation of rules and regulations that will allow that confidence to be built. JSP 761 is the authoritative guide for Honours and Awards in the Armed Services. It gives instructions on the award of Orders, Decorations and Medals and sets out the list of Honours and Awards that may be granted; detailing the nomination and recommendation procedures for each. It also provides information on the qualifying criteria for and permission to wear campaign medals, foreign medals and medals awarded by international organisations. It should be read in conjunction with Queen’s Regulations and DINs which further articulate detailed direction and specific criteria agreed by the Committee on the Grant of Honours, Decorations and Medals [Orders, Decorations and Medals (both gallantry and campaign)] or Foreign and Commonwealth Office [foreign medals and medals awarded by international organisations]. Lieutenant General Richard Nugee Chief of Defence People Defence Authority for People i JSP 761 Pt 1 (V5.0 Oct 16) Preface How to use this JSP 1. -

PETITIONING to AWARD TITLE to a MOTOR VEHICLE an Informational Guide to a North Dakota State Civil Court Process

PETITIONING TO AWARD TITLE TO A MOTOR VEHICLE An Informational Guide to a North Dakota State Civil Court Process The North Dakota Legal Self Help Center provides resources to people who represent themselves in civil matters in the North Dakota state courts. The information provided in this informational guide is not intended for legal advice but only as a general guide to a civil court process. If you decide to represent yourself, you will need to do additional research to prepare. When you represent yourself, you must abide by the following: • State or federal laws that apply to your case; • Case law, also called court opinions, that applies to your case; and • Court rules that apply to your case, which may include: o North Dakota Rules of Civil Procedure; o North Dakota Rules of Court; o North Dakota Rules of Evidence; o North Dakota Administrative Rules and Orders; o Any local court rules. Links to the laws, case law, and court rules can be found at www.ndcourts.gov. A glossary with definitions of legal terms is available at www.ndcourts.gov/legal-self-help. When you represent yourself, you are held to same requirements and responsibilities as a lawyer, even if you don’t understand the rules or procedures. If you are unsure if this information suits your circumstances, consult a lawyer. This information is not a complete statement of the law. This covers basic information about the process of petitioning a North Dakota State District Court to award title to a motor vehicle. The Center is not responsible for any consequences that may result from the information provided. -

Secretary's Awards Program

PROGRAM GUIDE SECRETARY’S AWARDS PROGRAM December 2017 SECRETARY’S AWARD S PROGRAM GUIDE Introduction | U.S. DEPARTMENT OF ENERGY Washington, D.C. 20585 The Department of Energy (DOE) has a long-standing tradition of recognizing employees who go above and beyond the call of duty in their work endeavors. The Department believes that it is important to recognize employees and contractors who have provided exceptional service to the American people and citizens of the world. Since 2007, the Secretary of Energy has acknowledged significant individual and group accomplishments through the Secretary’s Awards Program. The Secretary’s Awards Program is designed to recognize the career service and contributions of DOE employees to the mission of the Department and to the benefit of our Nation. The Program is comprised of three award types. The Secretary’s Honor Awards represent the highest internal non-monetary recognition that our employees and contractors can receive. The Secretary’s Departure Awards recognizes notable career dedication and outstanding service to the Department and the American public. The Secretary’s Appreciation Awards are given to individuals and groups for superlative contributions to the Agency mission. Each year, the Secretary recognizes a number of employees for their career service and accomplishments through the Secretary’s Departure and Appreciation Awards, respectively. Additionally, once per year, the Secretary hosts a special ceremony to present the Honor Awards to those within our workforce who have risen to the challenge and achieved significant results during the previous year. Each DOE Element, National Laboratory, Technology Center, and Power Marketing Administration is encouraged to take full advantage of this program. -

A Descriptive Analysis Fo the Presidential Faculty Fellows Program

1. Introduction The National Science Foundation uses a variety of mechanisms to support faculty and academic researchers. One form of support— fellowships— provides financial assistance directly to individuals. The intent is to provide recipients with considerable latitude in planning the focus of their academic and research activities. Since 1983, NSF has sponsored or participated in five such fellowship programs for accomplished young tenure-track faculty. What is the PFF Program? The Presidential Faculty Fellows (PFF) program was established in 1992 at the request of President George Bush to recognize and support the scholarly endeavors of young tenure-track faculty.3 Administered by the National Science Foundation (NSF), the program provided grant recipients with $100,000 per year for up to 5 years. Fellows could use PFF funding to (1) undertake self- designed, innovative research and teaching projects; (2) establish research and teaching programs; and (3) pursue other academic- related activities. By funding these activities, the Foundation sought to • recognize, honor, and promote the integration of high- quality teaching and research in science and engineering fields; • foster innovative and far-reaching developments in science and technology; • create the next generation of academic leaders; and • improve public understanding of the work of scientists and engineers. Fellows were selected by the White House (following NSF's review process) on the basis of their contributions and accomplishments in the following three areas:4 3 PFF defined "tenure-track" positions as (1) any assistant professorship or higher at institutions that offer tenure, or (2) research and teaching positions at the assistant professor or higher level at institutions that do not offer tenure. -

List of Award Abbreviations in Ndaws

LIST OF AWARD ABBREVIATIONS IN NDAWS AWARD ABBREVIATION AWARD LONG NAME SERVICE AA Army Achievement Medal USA AC Army Commendation Medal USA AD Army Distinguished Service Medal USA AE Armed Forces Expeditionary Medal ALL AF Air Medal-Individual Action USN/USMC AH Air Medal-Individual Action (with Combat V) USN/USMC AM Air Force Aerial Achievement Medal USAF AO Air Force Organizational Execellence Award USAF AR Antarctica Service Medal ALL AS Air Medal-Strike/Flight USN/USMC AT Navy Arctic Service Ribbon ALL AU Army Superior Unit Award USA AV Army Commendation Medal (with Combat V) USA AX Army Distinguished Service Cross USA BS Bronze Star Medal ALL BV Bronze Star Medal (with Combat V) ALL CA Secretary of Transportation Commendation for Achievement DOT CC Coast Guard Commendation Medal USCG CD Coast Guard Distinguished Service Medal USCG CF NAVCENT Letter of Commendation CG Coast Guard Achievement Medal USCG CL Chief of Naval Operations Letter of Commendation USN/USMC CM Coast Guard Medal USCG CR Combat Action Ribbon ALL CT Commandant of the Marine Corps Certificate of Commendation USMC CU Coast Guard Unit Commendation USCG CV Navy and Marine Corps Commendation Medal (with Combat V) USN/USMC DD Defense Distinguished Service Medal ALL DM Distinguished Service Medal USN/USMC DS Defense Superior Service Medal ALL DV Distinguished Flying Cross (with Combat V) USN/USMC DX Distinguished Flying Cross USN/USMC EC Commander in Chief, U.S. Naval Forces, Europe Letter of ALL Commendation EM Navy Expeditionary Service Medal USN/USMC EU Air Force Outstanding -

First Name Last Name Age Award Title Category School City Islah Abdulmalek 18 Silver Key Red Silk Photography Poolesville High S

First Name Last Name Age Award Title Category School City Islah Abdulmalek 18 Silver Key Red Silk Photography Poolesville High School Poolesville Rikka Angela Aguilar 17 Honorable Mention Growth Art Portfolio Albert Einstein High School Kensington Rikka Angela Aguilar 17 Silver Key Persona Digital Art Albert Einstein High School Kensington Grace Ahn 17 Gold Key Fashion Collection: Cultural Remix Art Portfolio Richard Montgomery High School Rockville Grace Ahn 17 Gold Key Samgo-Mu Fashion Richard Montgomery High School Rockville Dokyung Ahn 18 Honorable Mention power of donors Editorial Cartoon sponsoredHeritage by The AcademyHerb Block Foundation Hagerstown Elli Ahn 17 Honorable Mention Friendship Drawing & Illustration Marriotts Ridge High School Marriottsville Elli Ahn 17 Honorable Mention Eat Your Greens Drawing & Illustration Marriotts Ridge High School Marriottsville Grace Ahn 17 Honorable Mention The Perfect Body Drawing & Illustration Richard Montgomery High School Rockville Grace Ahn 17 Honorable Mention Modern Hanbok Fashion Richard Montgomery High School Rockville Elli Ahn 17 Silver Key Respite Painting Marriotts Ridge High School Marriottsville Grace Ahn 17 Silver Key More Than Meets the Eye Painting Richard Montgomery High School Rockville shanoya allwood 16 Silver Key The girl I knew before Drawing & Illustration Century High School Sykesville Chunlin An 17 Honorable Mention HELPMEOUT Painting Walt Whitman High School Bethesda Trisha Anand 15 Silver Key Beast in Awe Digital Art Mt Hebron High School Ellicott City McKenzie