Visual Complexity in Orthographic Learning: Modeling Learning Across Writing System Variations

Li-Yun Chang, David C

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


On the Origin of the Indian Brahma Alphabet

ON THE ORIGIN OF THE INDIAN BRAHMA ALPHABET. GEORG BÜHLER. SECOND REVISED EDITION OF INDIAN STUDIES, NO. III. TOGETHER WITH TWO APPENDICES ON THE ORIGIN OF THE KHAROSTHI ALPHABET AND OF THE SO-CALLED LETTER-NUMERALS OF THE BRAHMI. WITH THREE PLATES. STRASSBURG. KARL J. TRÜBNER. 1898. Printed by Adolf Holzhausen, Vienna. Preface to the Second Edition. As the few separate copies of the Indian Studies No. III, struck off in 1895, were sold very soon and rather numerous requests for additional ones were addressed both to me and to the bookseller of the Imperial Academy, Messrs. Carl Gerold's Sohn, I asked the Academy for permission to issue a second edition, which Mr. Karl J. Trübner had consented to publish. My petition was readily granted. In addition Messrs. von Holder, the publishers of the Wiener Zeitschrift für die Kunde des Morgenlandes, kindly allowed me to reprint my article on the origin of the Kharosthi, which had appeared in vol. IX of that Journal and is now given in Appendix I. To these two sections I have added, in Appendix II, a brief review of the arguments for Dr. Burnell's hypothesis, which derives the so-called letter-numerals or numerical symbols of the Brahma alphabet from the ancient Egyptian numeral signs, together with a third comparative table, in order to include in this volume all those points, which require fuller discussion, and in order to make it a serviceable companion to the palaeography of the Grundriss.

The Ogham-Runes and El-Mushajjar

[…] THE OGHAM-RUNES AND EL-MUSHAJJAR: A STUDY. BY RICHARD F. BURTON. (Read January 22, …) PART I. The Ogham-Runes. In treating this first portion of my subject, the Ogham-Runes, I have made free use of the materials collected by Dr. Charles Graves, Prof. John Rhys, and other students, ending it with my own work in the Orkney Islands. The Ogham character, the "fair writing" of ancient Irish literature, is called the "Babel-loth," "Bethluis" or "Bethluisnion," from its initial letters, like the Græco-Phœnician "Alphabeta" and the Arabo-Hebrew "Abjad." It may briefly be described as formed by straight or curved strokes, of various lengths, disposed either perpendicularly or obliquely to an angle of the substance upon which the letters were incised, punched, or rubbed. In monuments supposed to be more modern, the letters were traced, not on the edge, but upon the face of the recipient surface; the latter was originally wood, staves and tablets; then stone, rude or worked; and, lastly, metal, …

Assessment of Options for Handling Full Unicode Character Encodings in MARC21 a Study for the Library of Congress

Assessment of Options for Handling Full Unicode Character Encodings in MARC21: A Study for the Library of Congress. Part 1: New Scripts. Jack Cain, Senior Consultant, Trylus Computing, Toronto. 1 Purpose. This assessment intends to study the issues and make recommendations on the possible expansion of the character set repertoire for bibliographic records in MARC21 format. 1.1 “Encoding Scheme” vs. “Repertoire”. An encoding scheme contains codes by which characters are represented in computer memory. These codes are organized according to a certain methodology called an encoding scheme. The list of all characters so encoded is referred to as the “repertoire” of characters in the given encoding scheme. For example, ASCII is one encoding scheme, perhaps the one best known to the average non-technical person in North America. “A”, “B”, and “C” are three characters in the repertoire of this encoding scheme. These three characters are assigned encodings 41, 42 and 43 in ASCII (expressed here in hexadecimal). 1.2 MARC8. "MARC8" is the term commonly used to refer both to the encoding scheme and its repertoire as used in MARC records up to 1998. The ‘8’ refers to the fact that, unlike Unicode, which is a multi-byte-per-character code set, the MARC8 encoding scheme is principally made up of multiple one-byte tables in which each character is encoded using a single 8-bit byte. (It also includes the EACC set, which actually uses a fixed length of 3 bytes per character.) (For details on MARC8 and its specifications see: http://www.loc.gov/marc/.) MARC8 was introduced around 1968 and was initially limited to essentially Latin script only.
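The distinction between an encoding scheme and its repertoire drawn in this excerpt can be illustrated with a minimal Python sketch. It relies only on the built-in `str.encode` and `bytes.hex` methods; the helper names `code_bytes` and `in_repertoire` are hypothetical and introduced here purely for illustration. MARC8 is not available as a standard-library codec, so UTF-8 stands in as the example of a multi-byte encoding of Unicode.

```python
def code_bytes(text, encoding):
    """Return the encoded form of each character as an uppercase hex string."""
    return [ch.encode(encoding).hex().upper() for ch in text]


def in_repertoire(ch, encoding):
    """Report whether a character can be represented in the given encoding."""
    try:
        ch.encode(encoding)
        return True
    except UnicodeEncodeError:
        return False


# "A", "B" and "C" are in the ASCII repertoire and take one byte each.
print(code_bytes("ABC", "ascii"))      # ['41', '42', '43']

# A Hangul syllable is outside the ASCII repertoire ...
print(in_repertoire("한", "ascii"))     # False

# ... but a multi-byte encoding of Unicode can represent it.
print(code_bytes("한", "utf-8"))
```

The first call reproduces the hexadecimal codes 41, 42 and 43 quoted in the excerpt; the other two calls show a character that one scheme cannot represent at all and another represents with several bytes.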

Writing Systems Reading and Spelling

Writing systems. LING 200: Introduction to the Study of Language. Hadas Kotek, February 2016. Outline: 1. Writing systems; 2. Reading and spelling (Spelling; How we read). Slides credit: David Pesetsky, Richard Sproat, Janice Fon. What is writing? "Writing is not language, but merely a way of recording language by visible marks." (Leonard Bloomfield, Language, 1933) Writing and speech: Until the 1800s, writing, not spoken language, was what linguists studied. Speech was often ignored. However, writing is secondary to spoken language in at least 3 ways: (1) Children naturally acquire language without being taught, independently of intelligence or education levels. → Many people struggle to learn to read. (2) All human groups ever encountered possess spoken language. All are equal; no language is more “sophisticated” or “expressive” than others. → Many languages have no written form. (3) Humans have probably been speaking for as long as there have been anatomically modern Homo sapiens in the world. → Writing is a much younger phenomenon. (Possibly) independent inventions of writing: Sumeria, ca. 3,200 BC; Egypt, ca. 3,200 BC; Indus Valley, ca. 2,500 BC; China, ca. 1,500 BC; Central America, ca. 250 BC (Olmecs, Mayans, Zapotecs). Writing and pictures: Let’s define the distinction between pictures and true writing.

Sounds to Graphemes Guide

David Newman – Speech-Language Pathologist. Sounds to Graphemes Guide. A Friendly Reminder: © David Newmonic Language Resources 2015 - 2018. This program and all its contents are intellectual property. No part of this publication may be stored in a retrieval system, transmitted or reproduced in any way, including but not limited to digital copying and printing without the prior agreement and written permission of the author. However, I do give permission for class teachers or speech-language pathologists to print and copy individual worksheets for student use.

Table of Contents

- Sounds to Graphemes Guide - Introduction
- Sounds to Grapheme Guide - Meanings
- Pre-Test Assessment
- Reading Miscue Analysis Symbols
- Intervention Ideas
- Reading Intervention Example
- 44 Phonemes Charts
- Consonant Sound Charts and Sound Stimulation

Part 1: Introduction to The

PREVIEW OF THE IPA HANDBOOK. Handbook of the International Phonetic Association: A guide to the use of the International Phonetic Alphabet. PART I: Introduction to the IPA. 1. What is the International Phonetic Alphabet? The aim of the International Phonetic Association is to promote the scientific study of phonetics and the various practical applications of that science. For both these it is necessary to have a consistent way of representing the sounds of language in written form. From its foundation in 1886 the Association has been concerned to develop a system of notation which would be convenient to use, but comprehensive enough to cope with the wide variety of sounds found in the languages of the world; and to encourage the use of this notation as widely as possible among those concerned with language. The system is generally known as the International Phonetic Alphabet. Both the Association and its Alphabet are widely referred to by the abbreviation IPA, but here 'IPA' will be used only for the Alphabet. The IPA is based on the Roman alphabet, which has the advantage of being widely familiar, but also includes letters and additional symbols from a variety of other sources. These additions are necessary because the variety of sounds in languages is much greater than the number of letters in the Roman alphabet. The use of sequences of phonetic symbols to represent speech is known as transcription. The IPA can be used for many different purposes. For instance, it can be used as a way to show pronunciation in a dictionary, to record a language in linguistic fieldwork, to form the basis of a writing system for a language, or to annotate acoustic and other displays in the analysis of speech.

Parietal Dysgraphia: Characterization of Abnormal Writing Stroke Sequences, Character Formation and Character Recall

Behavioural Neurology 18 (2007) 99–114, IOS Press. Parietal dysgraphia: Characterization of abnormal writing stroke sequences, character formation and character recall. Yasuhisa Sakurai (a, b, *), Yoshinobu Onuma (a), Gaku Nakazawa (a), Yoshikazu Ugawa (b), Toshimitsu Momose (c), Shoji Tsuji (b) and Toru Mannen (a). (a) Department of Neurology, Mitsui Memorial Hospital, Tokyo, Japan; (b) Department of Neurology, Graduate School of Medicine, University of Tokyo, Tokyo, Japan; (c) Department of Radiology, Graduate School of Medicine, University of Tokyo, Tokyo, Japan. Abstract. Objective: To characterize various dysgraphic symptoms in parietal agraphia. Method: We examined the writing impairments of four dysgraphia patients from parietal lobe lesions using a special writing test with 100 character kanji (Japanese morphograms) and their kana (Japanese phonetic writing) transcriptions, and related the test performance to a lesion site. Results: Patients 1 and 2 had postcentral gyrus lesions and showed character distortion and tactile agnosia, with patient 1 also having limb apraxia. Patients 3 and 4 had superior parietal lobule lesions and features characteristic of apraxic agraphia (grapheme deformity and a writing stroke sequence disorder) and character imagery deficits (impaired character recall). Agraphia with impaired character recall and abnormal grapheme formation were more pronounced in patient 4, in whom the lesion extended to the inferior parietal, superior occipital and precuneus gyri. Conclusion: The present findings and a review of the literature suggest that: (i) a postcentral gyrus lesion can yield graphemic distortion (somesthetic dysgraphia), (ii) abnormal grapheme formation and impaired character recall are associated with lesions surrounding the intraparietal sulcus, the symptom being more severe with the involvement of the inferior parietal, superior occipital and precuneus gyri, (iii) disordered writing stroke sequences are caused by a damaged anterior intraparietal area.

The Personal Name Here Is Again Obscure. At

342 THE FIRST ARAMAIC INSCRIPTION FROM INDIA. l. 10: "his conduct"? l. 11: "and also his sons." l. 12: the personal name here is again obscure. At the end of the line are traces of the right-hand side of a letter, which might be samekh or beth; if we accept the former, it is possible to vocalize as Pavira-ram, i.e. Pavira-rāja, corresponding to the Sanskrit Pravira-rāja. The name Pravira is well known in epos, and might well be borne by a real man; and the change of a sonant to a surd consonant, such as that of j to «, is quite common in the North-West dialects. L. D. BARNETT. THE FIRST ARAMAIC INSCRIPTION FROM INDIA. I must thank Mr. F. W. Thomas for his great kindness in sending me the photograph taken by Sir J. H. Marshall, and also Dr. Barnett for letting me see his tracing and transliteration. The facsimile is made from the photograph, which is as good as it can be. Unfortunately, on the original, the letters are as white as the rest of the marble, and it was necessary to darken them in order to obtain a photograph. This process introduces an element of uncertainty, since in some cases part of a line may have escaped, and in others an accidental scratch may appear as part of a letter. Hence the following passages are more or less doubtful: line 4, 3PI; l. 6, Tpfl; l. 8, "123 and y\; l. 9, the seventh and ninth letters; l. 10, ID; l.

Pahawh Hmong

ISO/IEC JTC1/SC2/WG2 N4175, L2/12-013, 2012-01-20. Universal Multiple-Octet Coded Character Set. International Organization for Standardization / Organisation internationale de normalisation / Международная организация по стандартизации. Doc Type: Working Group Document. Title: Final proposal to encode the Pahawh Hmong script in the UCS. Source: UC Berkeley Script Encoding Initiative (Universal Scripts Project). Author: Michael Everson. Status: Liaison Contribution. Action: For consideration by JTC1/SC2/WG2 and UTC. Date: 2012-01-20. 1.0 Introduction. Pahawh Hmong is a script devised for writing the Hmong language by Shong Lue Yang (Soob Lwj Yaj [ʃɔ́ŋ lɨ̂ jâ]). Shong Lue Yang was a charismatic figure among the Hmong in Laos, and was considered by many to be a kind of messiah. It is said that in 1959 the writing system was revealed to him by two supernatural messengers who appeared to him over a period of months. A full account of this is given in Smalley, Vang, and Yang 1990. Devised in Laos, Pahawh Hmong was taken to northern Thailand refugee camps, and then moved with waves of immigrants to Minnesota and California in the United States, and to Australia. The writing system itself has had four Stages of development. In this document, the Romanized Popular Alphabet orthography (widely used by the Hmong in North America) is given alongside example text in Pahawh Hmong. Two features of the RPA are of note. Double vowels ee and oo indicate [ɛŋ] and [oŋ] respectively; final letters indicate tones thus: -b (v́, high-level); -m (ṿ, low-glottalized); -d (v̬, low-rising); -j (v̂, high-falling); -v (v̌, mid-rising); -Ø (v, mid-level); -s (v̀, low-level); -g (v̤, falling-breathy). 1.1 The Source Version, Pahawh Pa (Phajhauj Paj [pʰâ hâu pâ]), is not in current use.
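The RPA tone markers listed in this excerpt amount to a lookup on the final letter of a syllable, which a short Python sketch can make concrete. The names `RPA_TONES` and `rpa_tone` are hypothetical, and the sketch assumes its input is a single well-formed RPA syllable; it does not attempt to split words into syllables or validate spelling.

```python
# Final RPA letter -> tone name, as listed in the excerpt.
RPA_TONES = {
    "b": "high-level",
    "m": "low-glottalized",
    "d": "low-rising",
    "j": "high-falling",
    "v": "mid-rising",
    "s": "low-level",
    "g": "falling-breathy",
}


def rpa_tone(syllable):
    """Return the tone of one RPA syllable.

    A syllable with no final tone letter (the -Ø case) is mid-level.
    """
    if not syllable:
        raise ValueError("expected a non-empty RPA syllable")
    return RPA_TONES.get(syllable[-1].lower(), "mid-level")


# The RPA spelling of the script creator's name, syllable by syllable.
for syllable in ["Soob", "Lwj", "Yaj"]:
    print(syllable, rpa_tone(syllable))
```

For the name Soob Lwj Yaj this yields high-level, high-falling and high-falling, which agrees with the tone diacritics in the transcription [ʃɔ́ŋ lɨ̂ jâ] given in the excerpt.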

Elder Futhark Runes (1/2) (9 Points)

OzCLO 2015, Round 1. <1> Elder Futhark Runes (1/2) (9 points). Old Norse was the language of the Vikings, the language spoken in Scandinavia and in the Scandinavian settlements found throughout the Northern Hemisphere from the 700’s through the 1300’s. Much as Latin was the forerunner of the Romance languages, among them Italian, Spanish, and French, Old Norse was the ancestor of the North Germanic languages: Icelandic, Faroese, Norwegian, Danish, and Swedish. Old Norse was written first in runic alphabets, then later in the Roman alphabet. The first runic alphabet, found on inscriptions dating from throughout the first millennium CE, is known as “Elder Futhark” and was used for both proto-Norse and early Old Norse. Below are nine Anglicized names of Old Norse gods and the nine Elder Futhark names to which they correspond. Listed below are also two other runic names for gods. The names given below are either based on the gods’ original Old Norse names, or on their roles in nature; for instance, the god of the dawn might be listed either as ‘Dawn’ or as Delling (his original name). Remember that the modern version of the name may not be quite the same as the original Old Norse name – think what happened to their names in our days of the week! Anglicized names: 1 Baldur, 2 Dallinger, 3 Day, 4 Earth, 5 Freya, 6 Freyr, 7 Ithun, 8 Night, 9 Sun. Old Norse runes: A, B, C, D, E, F, G, H, I, J, K. Task 1: Match the runes to the correct names, by placing the appropriate letter (from A to K) for the runic word corresponding in meaning to the anglicized name in the first table.

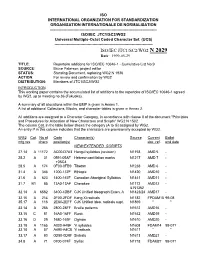

ISO/IEC JTC1/SC2/WG2 N 2029 Date: 1999-05-29

ISO INTERNATIONAL ORGANIZATION FOR STANDARDIZATION / ORGANISATION INTERNATIONALE DE NORMALISATION. ISO/IEC JTC1/SC2/WG2 Universal Multiple-Octet Coded Character Set (UCS). ISO/IEC JTC1/SC2/WG2 N 2029. Date: 1999-05-29. TITLE: Repertoire additions for ISO/IEC 10646-1 - Cumulative List No.9. SOURCE: Bruce Paterson, project editor. STATUS: Standing Document, replacing WG2 N 1936. ACTION: For review and confirmation by WG2. DISTRIBUTION: Members of JTC1/SC2/WG2. INTRODUCTION: This working paper contains the accumulated list of additions to the repertoire of ISO/IEC 10646-1 agreed by WG2, up to meeting no.36 (Fukuoka). A summary of all allocations within the BMP is given in Annex 1. A list of additional Collections, Blocks, and character tables is given in Annex 2. All additions are assigned to a Character Category, in accordance with clause II of the document "Principles and Procedures for Allocation of New Characters and Scripts" WG2 N 1502. The column Cat. in the table below shows the category (A to G) assigned by WG2. An entry P in this column indicates that the characters are provisionally accepted by WG2.

NEW/EXTENDED SCRIPTS

| WG2 mtg.res | Cat. | No of chars | Code position(s) | Character(s) | Source doc. | Current ref. | Ballot end date |
|---|---|---|---|---|---|---|---|
| 27.14 | A | 11172 | AC00-D7A3 | Hangul syllables (revision) | N1158 | AMD 5 | - |
| 28.2 | A | 31 | 0591-05AF, +05C4 | Hebrew cantillation marks | N1217 | AMD 7 | - |
| 28.5 | A | 174 | 0F00-0FB9 | Tibetan | N1238 | AMD 6 | - |
| 31.4 | A | 346 | 1200-137F | Ethiopic | N1420 | AMD10 | - |
| 31.6 | A | 623 | 1400-167F | Canadian Aboriginal Syllabics | N1441 | AMD11 | - |
| 31.7 | B1 | 85 | 13A0-13AF | Cherokee | N1172 & N1362 | AMD12 | - |
| 32.14 | A | 6582 | 3400-4DBF | CJK Unified Ideograph Exten. | … | … | … |
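The relationship between the "Code position(s)" and "No of chars" columns in this excerpt can be checked with a little hexadecimal arithmetic. The following is a minimal sketch; the function name `span` is hypothetical, and the reading that a listed count may be smaller than the span because not every position in a range is assigned is an assumption, not something the excerpt states.

```python
def span(code_range):
    """Number of code positions in an inclusive hex range such as 'AC00-D7A3'."""
    first, last = (int(part, 16) for part in code_range.split("-"))
    return last - first + 1


# Hangul syllables: the span matches the listed count exactly.
print(span("AC00-D7A3"))  # 11172

# Ethiopic: the span is larger than the 346 characters listed,
# which would be consistent with unassigned positions inside the range.
print(span("1200-137F"))  # 384
```

Computing the span this way gives an upper bound on the number of characters a range can hold, so it is a quick sanity check on a row rather than a replacement for the listed count.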

Neuronal Dynamics of Grapheme-Color Synesthesia A

Neuronal Dynamics of Grapheme-Color Synesthesia. A Thesis Presented to The Interdivisional Committee for Biology and Psychology, Reed College, In Partial Fulfillment of the Requirements for the Degree Bachelor of Arts. Christian Joseph Graulty, May 2015. Approved for the Committee (Biology & Psychology): Enriqueta Canseco-Gonzalez. Preface: This is an ad hoc Biology-Psychology thesis, and consequently the introduction incorporates concepts from both disciplines. It also provides a considerable amount of information on the phenomenon of synesthesia in general. For the reader who would like to focus specifically on the experimental section of this document, I include a “Background Summary” section that should allow anyone to understand the study without needing to read the full introduction. Rather, if you start at section 1.4, findings from previous studies and the overall aim of this research should be fairly straightforward. I do not have synesthesia myself, but I have always been interested in it. Sensory systems are the only portals through which our conscious selves can gain information about the external world. But more and more, neuroscience research shows that our senses are unreliable narrators, merely secondary sources providing us with pre-processed results as opposed to completely raw data. This is a very good thing. It makes our sensory systems more efficient for survival: fast processing is what saves you from being run over or eaten every day. But the minor cost of this efficient processing is that we are doomed to a life of visual illusions and existential crises in which we wonder whether we’re all in The Matrix, or everything is just a dream.