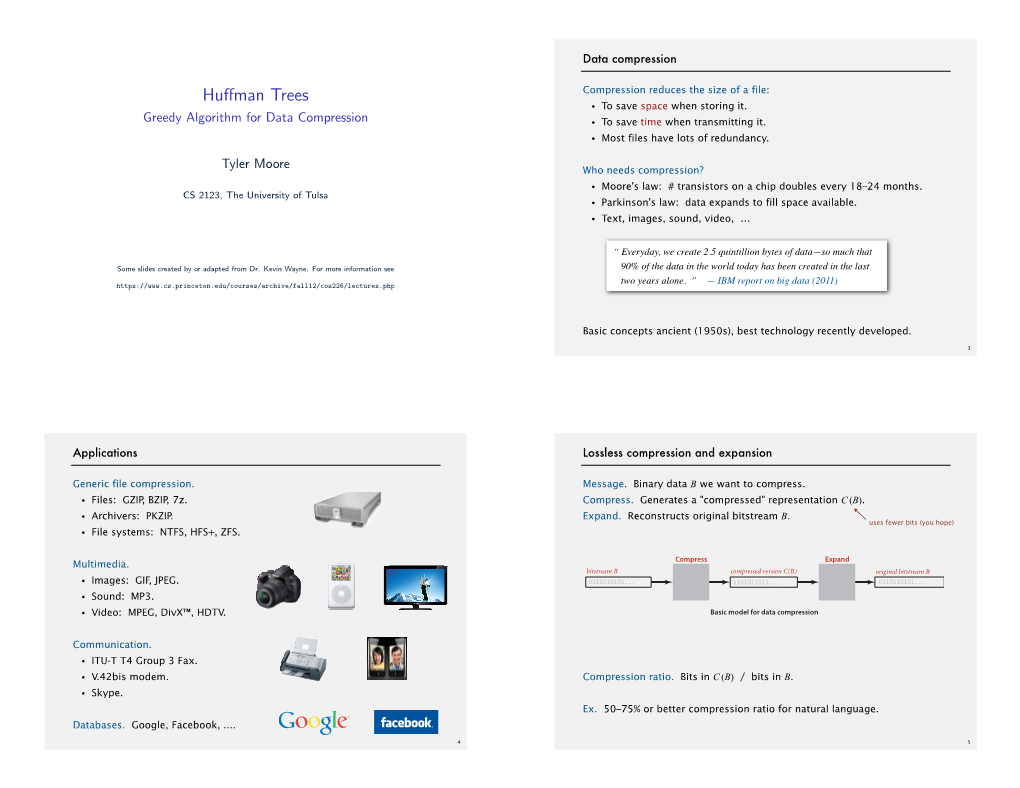

Huffman Trees

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

XAPP616 "Huffman Coding" V1.0

Product Obsolete/Under Obsolescence Application Note: Virtex Series R Huffman Coding Author: Latha Pillai XAPP616 (v1.0) April 22, 2003 Summary Huffman coding is used to code values statistically according to their probability of occurrence. Short code words are assigned to highly probable values and long code words to less probable values. Huffman coding is used in MPEG-2 to further compress the bitstream. This application note describes how Huffman coding is done in MPEG-2 and its implementation. Introduction The output symbols from RLE are assigned binary code words depending on the statistics of the symbol. Frequently occurring symbols are assigned short code words whereas rarely occurring symbols are assigned long code words. The resulting code string can be uniquely decoded to get the original output of the run length encoder. The code assignment procedure developed by Huffman is used to get the optimum code word assignment for a set of input symbols. The procedure for Huffman coding involves the pairing of symbols. The input symbols are written out in order of decreasing probability: the symbol with the highest probability is written at the top and the one with the lowest probability at the bottom. The two lowest probabilities are then paired and added. A new probability list is then formed in which the summed pair is a single entry. The two lowest entries in the new list are then paired. This process continues until the list consists of only one probability value. The values "0" and "1" are arbitrarily assigned to each element in each of the lists. Figure 1 shows the following symbols listed with their probability of occurrence: A is 30%, B is 25%, C is 20%, D is 15%, and E is 10%. -
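The pairing procedure described in the excerpt above can be sketched in a few lines of Python. This is a minimal illustration, not the application note's hardware implementation: the function name `huffman_codes`, the use of a binary heap in place of a re-sorted list, and the tie-breaking counter are all assumptions made for the example. The weights are the A to E percentages quoted in the excerpt.

```python
import heapq
from itertools import count


def huffman_codes(weights):
    """Build a prefix code from {symbol: weight} by repeatedly pairing
    the two lowest-weight entries (hypothetical helper, for illustration)."""
    if len(weights) == 1:
        # A single symbol still needs one bit to be representable.
        return {next(iter(weights)): "0"}

    tiebreak = count()  # keeps heap entries comparable when weights tie
    heap = [(w, next(tiebreak), sym) for sym, w in weights.items()]
    heapq.heapify(heap)

    # Pair the two smallest entries until one combined entry remains.
    while len(heap) > 1:
        w1, _, left = heapq.heappop(heap)
        w2, _, right = heapq.heappop(heap)
        heapq.heappush(heap, (w1 + w2, next(tiebreak), (left, right)))

    codes = {}

    def walk(node, prefix):
        # Internal nodes are 2-tuples; leaves are the original symbols.
        if isinstance(node, tuple):
            walk(node[0], prefix + "0")  # "0"/"1" assignment is arbitrary
            walk(node[1], prefix + "1")
        else:
            codes[node] = prefix

    walk(heap[0][2], "")
    return codes


# Probabilities from the excerpt, expressed as integer percentages.
weights = {"A": 30, "B": 25, "C": 20, "D": 15, "E": 10}
codes = huffman_codes(weights)
average_bits = sum(weights[s] * len(codes[s]) for s in weights) / 100
print(codes, average_bits)
```

The exact bit patterns depend on how ties and the 0/1 labels are chosen, but the code lengths, and therefore the average length per symbol, are the same for any valid Huffman tree over these weights.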

An Iterated Greedy Metaheuristic for the Blocking Job Shop Scheduling Problem

Dipartimento di Ingegneria Via della Vasca Navale, 79 00146 Roma, Italy An Iterated Greedy Metaheuristic for the Blocking Job Shop Scheduling Problem Marco Pranzo1, Dario Pacciarelli2 RT-DIA-208-2013 Novembre 2013 (1) Università degli Studi Roma Tre, Via della Vasca Navale, 79 00146 Roma, Italy (2) Università degli Studi di Siena Via Roma 56 53100 Siena, Italy. ABSTRACT In this paper we consider a job shop scheduling problem with blocking (zero buffer) constraints. Blocking constraints model the absence of buffers, whereas in the traditional job shop scheduling model buffers have infinite capacity. There are two known variants of this problem, namely the blocking job shop scheduling (BJSS) with swap allowed and the BJSS with no-swap. A swap is needed when there is a cycle of two or more jobs each waiting for the machine occupied by the next job in the cycle. Such a cycle represents a deadlock in no-swap BJSS, while with a swap all the jobs in the cycle move simultaneously to their subsequent machine. This scheduling problem is receiving an increasing interest in the recent literature, and we propose an Iterated Greedy (IG) algorithm to solve both variants of the problem. The IG is a metaheuristic based on the repetition of a destruction phase, which removes part of the solution, and a construction phase, in which a new solution is obtained by applying an underlying greedy algorithm starting from the partial solution. Comparison with recent published results shows that the iterated greedy outperforms other state-of-the-art algorithms on benchmark instances. -

Image Compression Through DCT and Huffman Coding Technique

International Journal of Current Engineering and Technology E-ISSN 2277 – 4106, P-ISSN 2347 – 5161 ©2015 INPRESSCO®, All Rights Reserved Available at http://inpressco.com/category/ijcet Research Article Image Compression through DCT and Huffman Coding Technique Rahul Shukla†* and Narender Kumar Gupta† †Department of Computer Science and Engineering, SHIATS, Allahabad, India Accepted 31 May 2015, Available online 06 June 2015, Vol.5, No.3 (June 2015) Abstract Image compression is an art used to reduce the size of a particular image. The goal of image compression is to eliminate the redundancy in a file’s code in order to reduce its size. It is useful in reducing the image storage space and in reducing the time needed to transmit the image. Image compression is more significant for reducing data redundancy for save more memory and transmission bandwidth. An efficient compression technique has been proposed which combines DCT and Huffman coding technique. This technique proposed due to its Lossless property, means using this the probability of loss the information is lowest. Result shows that high compression rates are achieved and visually negligible difference between compressed images and original images. Keywords: Huffman coding, Huffman decoding, JPEG, TIFF, DCT, PSNR, MSE 1. Introduction Image compression is a technique in which large amount of disk space is required for the raw images which seems to be a very big disadvantage during […] that can compress almost any kind of data. These are the lossless methods they retain all the information of the compressed data. However, they do not take advantage of the 2-dimensional nature of the image data. -

GREEDY ALGORITHMS Greedy Algorithms Solve Problems by Making the Choice That Seems Best at the Particular Moment

GREEDY ALGORITHMS Greedy algorithms solve problems by making the choice that seems best at the particular moment. Many optimization problems can be solved using a greedy algorithm. Some problems have no efficient solution, but a greedy algorithm may provide a solution that is close to optimal. A greedy algorithm works if a problem exhibits the following two properties: 1. Greedy choice property: A globally optimal solution can be arrived at by making a locally optimal choice. In other words, an optimal solution can be obtained by making "greedy" choices. 2. Optimal substructure: Optimal solutions contain optimal sub-solutions. In other words, solutions to subproblems of an optimal solution are optimal. Difference Between Greedy and Dynamic Programming The main difference between greedy algorithms and dynamic programming is that we don’t solve every optimal subproblem with greedy algorithms. In some cases, greedy algorithms can be used to produce sub-optimal solutions. That is, solutions which aren't necessarily optimal, but are perhaps very close. In dynamic programming, we make a choice at each step, but the choice may depend on the solutions to subproblems. In a greedy algorithm, we make whatever choice seems best at the moment and then solve the subproblems arising after the choice is made. The choice made by a greedy algorithm may depend on choices so far, but it cannot depend on any future choices or on the solutions to subproblems. Thus, unlike dynamic programming, which solves the subproblems bottom up, a greedy strategy usually progresses in a top-down fashion, making one greedy choice after another, iteratively reducing each given problem instance to a smaller one. -

15-122: Principles of Imperative Computation, Fall 2010 Assignment 6: Trees and Secret Codes

15-122: Principles of Imperative Computation, Fall 2010 Assignment 6: Trees and Secret Codes Karl Naden (kbn@cs) Out: Tuesday, March 22, 2010 Due (Written): Tuesday, March 29, 2011 (before lecture) Due (Programming): Tuesday, March 29, 2011 (11:59 pm) 1 Written: (25 points) The written portion of this week’s homework will give you some practice reasoning about variants of binary search trees. You can either type up your solutions or write them neatly by hand, and you should submit your work in class on the due date just before lecture begins. Please remember to staple your written homework before submission. 1.1 Heaps and BSTs Exercise 1 (3 pts). Draw a tree that matches each of the following descriptions. a) Draw a heap that is not a BST. b) Draw a BST that is not a heap. c) Draw a non-empty BST that is also a heap. 1.2 Binary Search Trees Refer to the binary search tree code posted on the course website for Lecture 17 if you need to remind yourself how BSTs work. Exercise 2 (4 pts). The height of a binary search tree (or any binary tree for that matter) is the number of levels it has. (An empty binary tree has height 0.) (a) Write a function bst_height that returns the height of a binary search tree. You will need a wrapper function with the following specification: int bst_height(bst B); This function should not be recursive, but it will require a helper function that will be recursive. See the BST code for examples of wrapper functions and recursive helper functions. -

The Strengths and Weaknesses of Different Image Compression Methods Samuel Teare and Brady Jacobson Lossy Vs Lossless

The Strengths and Weaknesses of Different Image Compression Methods Samuel Teare and Brady Jacobson Lossy vs Lossless Lossy compression reduces a file size by permanently removing parts of the data that may be redundant or not as noticeable. Lossless compression guarantees the original data can be recovered or decompressed from the compressed file. PNG Compression PNG Compression consists of three parts: 1. Filtering 2. LZ77 Compression Deflate Compression 3. Huffman Coding Filtering Five types of Filters: 1. None - No filter 2. Sub - difference between this byte and the byte to its left a. Sub(x) = Original(x) - Original(x - bpp) 3. Up - difference between this byte and the byte above it a. Up(x) = Original(x) - Above(x) 4. Average - difference between this byte and the average of the byte to the left and the byte above. a. Avg(x) = Original(x) − (Original(x-bpp) + Above(x))/2 5. Paeth - Uses the byte to the left, above, and above left. a. The nearest of the left, above, or above left to the estimate is the Paeth Predictor b. Paeth(x) = Original(x) - Paeth Predictor(x) Paeth Algorithm Estimate = left + above - above left Distance to left = Absolute(estimate - left) Distance to above = Absolute(estimate - above) Distance to above left = Absolute(estimate - above left) The byte with the smallest distance is the Paeth Predictor LZ77 Compression LZ77 Compression looks for sequences in the data that are repeated. LZ77 uses a sliding window to keep track of previous bytes. This is then used to compress a group of bytes that exhibit the same sequence as previous bytes. -
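The Paeth steps listed in the excerpt above translate almost directly into code. The sketch below is illustrative only: the function names are hypothetical, the tie-breaking order (left, then above, then above-left) is taken from the PNG specification rather than from the excerpt, and the wrap-around to a single byte (modulo 256) is likewise how PNG stores filtered values, not something the slides state.

```python
def paeth_predictor(left, above, above_left):
    """Pick whichever neighbour is closest to left + above - above_left."""
    estimate = left + above - above_left
    dist_left = abs(estimate - left)
    dist_above = abs(estimate - above)
    dist_above_left = abs(estimate - above_left)
    # Ties are resolved in the order left, above, above-left
    # (assumption: this ordering follows the PNG specification).
    if dist_left <= dist_above and dist_left <= dist_above_left:
        return left
    if dist_above <= dist_above_left:
        return above
    return above_left


def paeth_filter_byte(original, left, above, above_left):
    """Paeth(x) = Original(x) - PaethPredictor(x), kept within one byte."""
    return (original - paeth_predictor(left, above, above_left)) & 0xFF


def paeth_unfilter_byte(filtered, left, above, above_left):
    """Inverse of paeth_filter_byte, as a decoder would apply it."""
    return (filtered + paeth_predictor(left, above, above_left)) & 0xFF


print(paeth_predictor(50, 60, 40))        # neighbour closest to the estimate 70
print(paeth_filter_byte(62, 50, 60, 40))  # small residual when prediction is good
```

Because the predictor only uses bytes that the decoder has already reconstructed, the filter is exactly reversible, which is what keeps PNG lossless.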

An Optimized Huffman's Coding by the Method of Grouping

An Optimized Huffman’s Coding by the method of Grouping Gautam.R, Department of Electronics and Communication Engineering, Maharaja Institute of Technology, Mysore, [email protected]; Dr. S Murali, Professor, Department of Computer Science Engineering, Maharaja Institute of Technology, Mysore, [email protected] Abstract— Data compression has become a necessity not only in the field of communication but also in various scientific experiments. The data that is being received is more and the processing time required has also become more. A significant change in the algorithms will help to optimize the processing speed. With the invention of Technologies like IoT and in technologies like Machine Learning there is a need to compress data. For example training an Artificial Neural Network requires a lot of data that should be processed and trained in small interval of time for which compression will be very helpful. There is a need to process the data faster and quicker. In this paper we present a method that reduces the data size. […] the 3 depending on the size of the data. Huffman's coding basically works on the principle of frequency of occurrence for each symbol or character in the input. For example we can know the number of times a letter has appeared in a text document by processing that particular document. After which we will assign a variable string to the letter that will represent the character. Here the encoding take place in the form of tree structure which will be explained in detail in the following paragraph where encoding takes place in the form of binary tree. -

Greedy Estimation of Distributed Algorithm to Solve Bounded Knapsack Problem

Shweta Gupta et al, / (IJCSIT) International Journal of Computer Science and Information Technologies, Vol. 5 (3) , 2014, 4313-4316 Greedy Estimation of Distributed Algorithm to Solve Bounded knapsack Problem Shweta Gupta#1, Devesh Batra#2, Pragya Verma#3 #1Indian Institute of Technology (IIT) Roorkee, India #2Stanford University CA-94305, USA #3IEEE, USA Abstract— This paper develops a new approach to find solution to the Bounded Knapsack problem (BKP). The Knapsack problem is a combinatorial optimization problem where the aim is to maximize the profits of objects in a knapsack without exceeding its capacity. BKP is a generalization of 0/1 knapsack problem in which multiple instances of distinct items but a single knapsack is taken. Real life applications of this problem include cryptography, finance, etc. This paper proposes an estimation of distribution algorithm (EDA) using greedy operator approach to find solution to the BKP. EDA is stochastic optimization technique that explores the space of potential solutions by modelling probabilistic models of promising candidate solutions. Index Terms— Bounded Knapsack, Greedy Algorithm, Estimation of Distribution Algorithm, Combinatorial Problem, […] called probabilistic model-building genetic algorithms, are stochastic optimization methods that guide the search for the optimum by building probabilistic models of favourable candidate solutions. The main difference between EDAs and evolutionary algorithms is that evolutionary algorithms produces new candidate solutions using an implicit distribution defined by variation operators like crossover, mutation, whereas EDAs use explicit probability distribution model such as Bayesian network, a multivariate normal distribution etc. EDAs can be used to solve optimization problems like other conventional evolutionary algorithms. The rest of the paper is organized as follows: next section deals with the related work followed by the section III which contains theoretical procedure of EDA. -

Arithmetic Coding

Arithmetic Coding Arithmetic coding is the most efficient method to code symbols according to the probability of their occurrence. The average code length corresponds exactly to the possible minimum given by information theory. Deviations which are caused by the bit-resolution of binary code trees do not exist. In contrast to a binary Huffman code tree, arithmetic coding offers a clearly better compression rate. Its implementation, on the other hand, is more complex. In arithmetic coding, a message is encoded as a real number in the interval from zero to one. Arithmetic coding typically has a better compression ratio than Huffman coding, as it produces a single symbol rather than several separate codewords. Arithmetic coding differs from other forms of entropy encoding such as Huffman coding in that rather than separating the input into component symbols and replacing each with a code, arithmetic coding encodes the entire message into a single number, a fraction n where 0.0 ≤ n < 1.0. Arithmetic coding is a lossless coding technique. There are a few disadvantages of arithmetic coding. One is that the whole codeword must be received to start decoding the symbols, and if there is a corrupt bit in the codeword, the entire message could become corrupt. Another is that there is a limit to the precision of the number which can be encoded, thus limiting the number of symbols to encode within a codeword. There also exist many patents on arithmetic coding, so the use of some of the algorithms also requires royalty fees. Arithmetic coding is part of the JPEG data format. -
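To make the "entire message as a single fraction" idea in the excerpt above concrete, here is a toy sketch that narrows an interval inside [0, 1) one symbol at a time. It is only an illustration under stated assumptions: the three-symbol model, the function names, and the use of exact `Fraction` arithmetic (which sidesteps the precision limit the excerpt mentions) are all choices made for this example, and practical coders use fixed-width integer arithmetic with renormalization instead.

```python
from fractions import Fraction


def cumulative_ranges(probabilities):
    """Map each symbol to its [start, end) slice of the unit interval."""
    ranges, start = {}, Fraction(0)
    for symbol, p in probabilities.items():
        ranges[symbol] = (start, start + p)
        start += p
    return ranges


def arithmetic_encode(message, probabilities):
    """Return the final [low, high) interval; any number in it encodes message."""
    ranges = cumulative_ranges(probabilities)
    low, high = Fraction(0), Fraction(1)
    for symbol in message:
        width = high - low
        sym_low, sym_high = ranges[symbol]
        # Shrink the current interval to the sub-slice owned by this symbol.
        low, high = low + width * sym_low, low + width * sym_high
    return low, high


def arithmetic_decode(value, length, probabilities):
    """Recover `length` symbols from a number inside the encoded interval."""
    ranges = cumulative_ranges(probabilities)
    decoded = []
    for _ in range(length):
        for symbol, (sym_low, sym_high) in ranges.items():
            if sym_low <= value < sym_high:
                decoded.append(symbol)
                # Rescale so the next symbol is read from [0, 1) again.
                value = (value - sym_low) / (sym_high - sym_low)
                break
    return "".join(decoded)


# Hypothetical model: the probabilities are illustrative, not from the excerpt.
model = {"a": Fraction(1, 2), "b": Fraction(1, 4), "c": Fraction(1, 4)}
low, high = arithmetic_encode("abc", model)
print(low, high, arithmetic_decode(low, 3, model))
```

The decoder here is told the message length; real formats signal the end of the message either that way or with a dedicated end-of-stream symbol.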

Algorithms Documentation Release 1.0.0

algorithms Documentation, Release 1.0.0, Nic Young, April 14, 2018. Contents: 1 Usage; 2 Features; 3 Installation; 4 Tests; 5 Contributing; 6 Table of Contents (6.1 Algorithms); Python Module Index. Algorithms is a library of algorithms and data structures implemented in Python. The main purpose of this library is to be an educational tool. You probably shouldn’t use these in production, instead opting for the optimized versions of these algorithms that can be found elsewhere. You should totally check out the docs for implementation details, complexities and further info. Chapter 1, Usage: If you want to use the algorithms in your code it is as simple as: `from algorithms.sorting import bubble_sort` followed by `my_list = bubble_sort.sort(my_list)`. Chapter 2, Features: • Pseudo code, algorithm complexities and further info with each algorithm. • Test coverage for each algorithm and data structure. • Super sweet documentation. Chapter 3, Installation: Installation is as easy as: `$ pip install algorithms`. Chapter 4, Tests: Pytest is used as the main test runner and all unit tests can be run with: `$ ./run_tests.py`. Chapter 5, Contributing: Contributions are always welcome. Check out the contributing guidelines to get started. -

Image Compression Using Discrete Cosine Transform Method

Qusay Kanaan Kadhim, International Journal of Computer Science and Mobile Computing, Vol.5 Issue.9, September- 2016, pg. 186-192 Available Online at www.ijcsmc.com International Journal of Computer Science and Mobile Computing A Monthly Journal of Computer Science and Information Technology ISSN 2320–088X IMPACT FACTOR: 5.258 IJCSMC, Vol. 5, Issue. 9, September 2016, pg.186 – 192 Image Compression Using Discrete Cosine Transform Method Qusay Kanaan Kadhim Al-Yarmook University College / Computer Science Department, Iraq [email protected] ABSTRACT: The processing of digital images took a wide importance in the knowledge field in the last decades ago due to the rapid development in the communication techniques and the need to find and develop methods assist in enhancing and exploiting the image information. The field of digital images compression becomes an important field of digital images processing fields due to the need to exploit the available storage space as much as possible and reduce the time required to transmit the image. Baseline JPEG Standard technique is used in compression of images with 8-bit color depth. Basically, this scheme consists of seven operations which are the sampling, the partitioning, the transform, the quantization, the entropy coding and Huffman coding. First, the sampling process is used to reduce the size of the image and the number bits required to represent it. Next, the partitioning process is applied to the image to get (8×8) image block. Then, the discrete cosine transform is used to transform the image block data from spatial domain to frequency domain to make the data easy to process. -

Greedy Algorithms Greedy Strategy: Make a Locally Optimal Choice, Or Simply, What Appears Best at the Moment R

Overview Kruskal Dijkstra Huffman Compression CSE 548: (Design and) Analysis of Algorithms Greedy Algorithms R. Sekar Overview: One of the strategies used to solve optimization problems. Multiple solutions exist; pick one of low (or least) cost. Greedy strategy: make a locally optimal choice, or simply, what appears best at the moment. Often, local optimality does not imply global optimality. So, use with a great deal of care. Always need to prove optimality. If it is unpredictable, why use it? It simplifies the task! Making change: Given coins of denominations 25¢, 10¢, 5¢ and 1¢, make change for x cents (0 < x < 100) using minimum number of coins. Greedy solution: makeChange(x): if (x = 0) return; let y be the largest denomination that satisfies y ≤ x; issue ⌊x/y⌋ coins of denomination y; makeChange(x mod y). Show that it is optimal. Is it optimal for arbitrary denominations? When does a Greedy algorithm work? Greedy choice property: The greedy (i.e., locally optimal) choice is always consistent with some (globally) optimal solution. What does this mean for the coin change problem? Optimal substructure: The optimal solution contains optimal solutions to subproblems. Implies that a greedy algorithm can invoke itself recursively after making a greedy choice. Knapsack Problem: A sack that can hold a maximum of x lbs. You have a choice of items you can pack in the sack. Maximize the combined “value” of items in the sack. Fractional Knapsack, greedy choice property: Proof by contradiction: Start with the assumption that there is an optimal solution that does not include the greedy choice, and show a contradiction. Chapter 5 Greedy algorithms A game like chess can be won only by thinking ahead: a player who is focused entirely on
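The greedy change-making procedure from the lecture-slide excerpt above can be sketched as follows. The function name `make_change`, the dictionary return format, and the error raised when exact change is impossible are assumptions for this example; the slides' recursion is written as a loop here, which issues the same coins.

```python
def make_change(x, denominations=(25, 10, 5, 1)):
    """Greedy change: take as many of the largest coin that fits, then recurse
    on the remainder (written iteratively). Returns {denomination: count}."""
    coins = {}
    for y in sorted(denominations, reverse=True):
        if x == 0:
            break
        n, x = divmod(x, y)  # n = floor(x / y) coins of y, remainder x mod y
        if n:
            coins[y] = n
    if x:
        # Only reachable when the denominations cannot express the amount.
        raise ValueError("cannot make exact change with these denominations")
    return coins


print(make_change(67))            # 2 quarters, 1 dime, 1 nickel, 2 pennies
print(make_change(6, (4, 3, 1)))  # greedy uses 3 coins; 3 + 3 needs only 2
```

The second call bears on the slides' question about arbitrary denominations: with coins of 4, 3 and 1, the greedy choice of a 4 leads to three coins for an amount of 6, while two 3s would do, so the greedy choice property does not hold for every coin system.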