Ghost Imaging of Space Objects

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Lurking in the Shadows: Wide-Separation Gas Giants As Tracers of Planet Formation

Lurking in the Shadows: Wide-Separation Gas Giants as Tracers of Planet Formation. Thesis by Marta Levesque Bryan. In Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy. CALIFORNIA INSTITUTE OF TECHNOLOGY, Pasadena, California, 2018. Defended May 1, 2018. © 2018 Marta Levesque Bryan. ORCID: [0000-0002-6076-5967]. All rights reserved.

ACKNOWLEDGEMENTS

First and foremost I would like to thank Heather Knutson, whom I had the great privilege of working with as my thesis advisor. Her encouragement, guidance, and perspective helped me navigate many a challenging problem, and my conversations with her were a consistent source of positivity and learning throughout my time at Caltech. I leave graduate school a better scientist and person for having her as a role model. Heather fostered a wonderfully positive and supportive environment for her students, giving us the space to explore and grow - I could not have asked for a better advisor or research experience. I would also like to thank Konstantin Batygin for enthusiastic and illuminating discussions that always left me more excited to explore the result at hand. Thank you as well to Dimitri Mawet for providing both expertise and contagious optimism for some of my latest direct imaging endeavors. Thank you to the rest of my thesis committee, namely Geoff Blake, Evan Kirby, and Chuck Steidel for their support, helpful conversations, and insightful questions. I am grateful to have had the opportunity to collaborate with Brendan Bowler. His talk at Caltech my second year of graduate school introduced me to an unexpected population of massive wide-separation planetary-mass companions, and led to a long-running collaboration from which several of my thesis projects were born.

BRAS Newsletter August 2013

www.brastro.org August 2013

Next meeting Aug 12th 7:00PM at the HRPO
Dark Site Observing Dates: Primary on Aug. 3rd, Secondary on Aug. 10th

Photo credit: Saturn taken on 20” OGS + Orion Starshoot - Ben Toman

What's in this issue:
PRESIDENT'S MESSAGE
NOTES FROM THE VICE PRESIDENT
MESSAGE FROM THE HRPO
MONTHLY OBSERVING NOTES
OUTREACH CHAIRPERSON’S NOTES
MEMBERSHIP APPLICATION

PRESIDENT'S MESSAGE

Hi Everyone, I hope you’ve been having a great Summer so far and had luck beating the heat as much as possible. The weather sure hasn’t been cooperative for observing, though! First I have a pretty cool announcement. Thanks to the efforts of club member Walt Cooney, there are 5 newly named asteroids in the sky.

(53256) Sinitiere - Named for former BRAS Treasurer Bob Sinitiere
(74439) Brenden - Named for founding member Craig Brenden
(85878) Guzik - Named for LSU professor T. Greg Guzik
(101722) Pursell - Named for founding member Wally Pursell

I N S I D E T H I S I S S

February/février 1998 Volume/volume 92 Number/numero 1 [669]

The Journal of the Royal Astronomical Society of Canada
Le Journal de la Société royale d’astronomie du Canada

Publications from The Royal Astronomical Society of Canada

The Beginner’s Observing Guide
This guide is for anyone with little or no experience in observing the night sky. Large, easy to read star maps are provided to acquaint the reader with the constellations and bright stars. Basic information on observing the moon, planets and eclipses through the year 2000 is provided. There is also a special section to help Scouts, Cubs, Guides and Brownies achieve their respective astronomy badges. Written by Leo Enright (160 pages of information in a soft-cover book with a spiral binding which allows the book to lie flat). Price: $12 (includes taxes, postage and handling)

Looking Up: A History of the Royal Astronomical Society of Canada
Published to commemorate the 125th anniversary of the first meeting of the Toronto Astronomical Club, “Looking Up – A History of the RASC” is an excellent overall history of Canada’s national astronomy organization. The book was written by R. Peter Broughton, a Past President and expert on the history of astronomy in Canada. Histories on each of the centres across the country are included as well as dozens of biographical sketches of the many people who have volunteered their time and skills to the Society. (hard cover with cloth binding, 300 pages with 150 b&w illustrations) Price: $43 (includes taxes, postage and handling)

Observers Calendar – 1998
This calendar was created by members of the RASC.

Catalog of Nearby Exoplanets

Catalog of Nearby Exoplanets1

R. P. Butler2, J. T. Wright3, G. W. Marcy3,4, D. A. Fischer3,4, S. S. Vogt5, C. G. Tinney6, H. R. A. Jones7, B. D. Carter8, J. A. Johnson3, C. McCarthy2,4, A. J. Penny9,10

arXiv:astro-ph/0607493v1 21 Jul 2006

ABSTRACT

We present a catalog of nearby exoplanets. It contains the 172 known low-mass companions with orbits established through radial velocity and transit measurements around stars within 200 pc. We include 5 previously unpublished exoplanets orbiting the stars HD 11964, HD 66428, HD 99109, HD 107148, and HD 164922. We update orbits for 90 additional exoplanets including many whose orbits have not been revised since their announcement, and include radial velocity time series from the Lick, Keck, and Anglo-Australian Observatory planet searches. Both these new and previously published velocities are more precise here due to improvements in our data reduction pipeline, which we applied to archival spectra. We present a brief summary of the global properties of the known exoplanets, including their distributions of orbital semimajor axis, minimum mass, and orbital eccentricity.

Subject headings: catalogs – stars: exoplanets – techniques: radial velocities

1Based on observations obtained at the W. M. Keck Observatory, which is operated jointly by the University of California and the California Institute of Technology. The Keck Observatory was made possible by the generous financial support of the W. M. Keck Foundation.
2Department of Terrestrial Magnetism, Carnegie Institute of Washington, 5241 Broad Branch Road NW, Washington, DC 20015-1305
3Department of Astronomy, 601 Campbell Hall, University of California, Berkeley, CA 94720-3411
4Department of Physics and Astronomy, San Francisco State University, San Francisco, CA 94132
5UCO/Lick Observatory, University of California, Santa Cruz, CA 95064
6Anglo-Australian Observatory, PO Box 296, Epping.

Apus Constellation Visible at Latitudes Between +5° and -90°

Apus Constellation Visible at latitudes between +5° and -90°. Best visible at 21:00 (9 p.m.) during the month of July. Apus is a small constellation in the southern sky. It represents a bird-of-paradise, and its name means "without feet" in Greek because the bird-of-paradise was once wrongly believed to lack feet. First depicted on a celestial globe by Petrus Plancius in 1598, it was charted on a star atlas by Johann Bayer in his 1603 Uranometria. The French explorer and astronomer Nicolas Louis de Lacaille charted and gave the brighter stars their Bayer designations in 1756. The five brightest stars are all reddish in hue. Shading the others at apparent magnitude 3.8 is Alpha Apodis, an orange giant that has around 48 times the diameter and 928 times the luminosity of the Sun. Marginally fainter is Gamma Apodis, another ageing giant star. Delta Apodis is a double star, the two components of which are 103 arcseconds apart and visible with the naked eye. Two star systems have been found to have planets. Apus was one of twelve constellations published by Petrus Plancius from the observations of Pieter Dirkszoon Keyser and Frederick de Houtman who had sailed on the first Dutch trading expedition, known as the Eerste Schipvaart, to the East Indies. It first appeared on a 35-cm diameter celestial globe published in 1598 in Amsterdam by Plancius with Jodocus Hondius. De Houtman included it in his southern star catalogue in 1603 under the Dutch name De Paradijs Voghel, "The Bird of Paradise", and Plancius called the constellation Paradysvogel Apis Indica; the first word is Dutch for "bird of paradise". -

Naming the Extrasolar Planets

Naming the extrasolar planets

W. Lyra
Max Planck Institute for Astronomy, Königstuhl 17, 69177, Heidelberg, Germany
[email protected]

Abstract

Extrasolar planets are not named and are referred to only by their assigned scientific designation. The reason given by the IAU to not name the planets is that it is considered impractical as planets are expected to be common. I advance some reasons as to why this logic is flawed, and suggest names for the 403 extrasolar planet candidates known as of Oct 2009. The names follow a scheme of association with the constellation that the host star pertains to, and therefore are mostly drawn from Roman-Greek mythology. Other mythologies may also be used given that a suitable association is established.

and OGLE-TR-182 b, which does not help educators convey the message that these planets are quite similar to Jupiter. In stark contrast, the sentence “planet Apollo is a gas giant like Jupiter” is heavily - yet invisibly - coated with Copernicanism.

One reason given by the IAU for not considering naming the extrasolar planets is that it is a task deemed impractical. One source is quoted as having said “if planets are found to occur very frequently in the Universe, a system of individual names for planets might well rapidly be found equally impracticable as it is for stars, as planet discoveries progress.” 1. This leads to a second argument. It is indeed impractical to name all stars. But some stars are named nonetheless. In fact, all other classes of astronomical bodies are named.

An Upper Boundary in the Mass-Metallicity Plane of Exo-Neptunes

MNRAS 000, 1–8 (2016) Preprint 8 November 2018 Compiled using MNRAS LaTeX style file v3.0

An upper boundary in the mass-metallicity plane of exo-Neptunes

Bastien Courcol,1* François Bouchy,1 and Magali Deleuil1
1Aix Marseille University, CNRS, Laboratoire d'Astrophysique de Marseille UMR 7326, 13388 Marseille cedex 13, France

Accepted XXX. Received YYY; in original form ZZZ

ABSTRACT

With the progress of detection techniques, the number of low-mass and small-size exoplanets is increasing rapidly. However their characteristics and formation mechanisms are not yet fully understood. The metallicity of the host star is a critical parameter in such processes and can impact the occurrence rate or physical properties of these planets. While a frequency-metallicity correlation has been found for giant planets, this is still an ongoing debate for their smaller counterparts. Using the published parameters of a sample of 157 exoplanets lighter than 40 M⊕, we explore the mass-metallicity space of Neptunes and Super-Earths. We show the existence of a maximal mass that increases with metallicity, that also depends on the period of these planets. This seems to favor in situ formation or alternatively a metallicity-driven migration mechanism. It also suggests that the frequency of Neptunes (between 10 and 40 M⊕) is, like giant planets, correlated with the host star metallicity, whereas no correlation is found for Super-Earths (<10 M⊕).

Key words: Planetary Systems, planets and satellites: terrestrial planets – Planetary Systems, methods: statistical – Astronomical instrumentation, methods, and techniques

1 INTRODUCTION

lation was not observed (e.g.

arXiv:2105.11583v2 [astro-ph.EP] 2 Jul 2021 Keck-HIRES, APF-Levy, and Lick-Hamilton Spectrographs

Draft version July 6, 2021. Typeset using LaTeX twocolumn style in AASTeX63

The California Legacy Survey I. A Catalog of 178 Planets from Precision Radial Velocity Monitoring of 719 Nearby Stars over Three Decades

Lee J. Rosenthal,1 Benjamin J. Fulton,1, 2 Lea A. Hirsch,3 Howard T. Isaacson,4 Andrew W. Howard,1 Cayla M. Dedrick,5, 6 Ilya A. Sherstyuk,1 Sarah C. Blunt,1, 7 Erik A. Petigura,8 Heather A. Knutson,9 Aida Behmard,9, 7 Ashley Chontos,10, 7 Justin R. Crepp,11 Ian J. M. Crossfield,12 Paul A. Dalba,13, 14 Debra A. Fischer,15 Gregory W. Henry,16 Stephen R. Kane,13 Molly Kosiarek,17, 7 Geoffrey W. Marcy,1, 7 Ryan A. Rubenzahl,1, 7 Lauren M. Weiss,10 and Jason T. Wright18, 19, 20

1Cahill Center for Astronomy & Astrophysics, California Institute of Technology, Pasadena, CA 91125, USA
2IPAC-NASA Exoplanet Science Institute, Pasadena, CA 91125, USA
3Kavli Institute for Particle Astrophysics and Cosmology, Stanford University, Stanford, CA 94305, USA
4Department of Astronomy, University of California Berkeley, Berkeley, CA 94720, USA
5Cahill Center for Astronomy & Astrophysics, California Institute of Technology, Pasadena, CA 91125, USA
6Department of Astronomy & Astrophysics, The Pennsylvania State University, 525 Davey Lab, University Park, PA 16802, USA
7NSF Graduate Research Fellow
8Department of Physics & Astronomy, University of California Los Angeles, Los Angeles, CA 90095, USA
9Division of Geological and Planetary Sciences, California Institute of Technology, Pasadena, CA 91125, USA
10Institute for Astronomy, University of Hawai`i,

Biosignatures Search in Habitable Planets

galaxies

Review

Biosignatures Search in Habitable Planets

Riccardo Claudi 1,* and Eleonora Alei 1,2

1 INAF-Astronomical Observatory of Padova, Vicolo Osservatorio, 5, 35122 Padova, Italy
2 Physics and Astronomy Department, Padova University, 35131 Padova, Italy
* Correspondence: [email protected]

Received: 2 August 2019; Accepted: 25 September 2019; Published: 29 September 2019

Abstract: The search for life has had a new enthusiastic restart in the last two decades thanks to the large number of new worlds discovered. The roughly 4100 exoplanets found so far show a large diversity of planets, from hot giants to rocky planets orbiting small and cold stars. Most of them are very different from those of the Solar System, and one of the striking cases is that of the super-Earths, rocky planets with masses ranging between 1 and 10 M⊕ and dimensions up to twice those of Earth. In the right environment, these planets could be the cradle of alien life that could modify the chemical composition of their atmospheres. So, the search for life signatures requires as the first step the knowledge of planet atmospheres, the main objective of future exoplanetary space explorations. Indeed, the quest for the determination of the chemical composition of those planetary atmospheres also raises more general interest than that given by the mere directory of the atmospheric compounds. It opens out to the more general speculation on what such detection might tell us about the presence of life on those planets. As, for now, we have only one example of life in the universe, we are bound to study terrestrial organisms to assess possibilities of life on other planets and guide our search for possible extinct or extant life on other planetary bodies.

Astronomy Binder

Astronomy Binder
Bloomington High School South 2011

Contents
1 Astronomical Distances
1.1 Geometric Methods
1.2 Spectroscopic Methods
1.3 Standard Candle Methods
1.4 Cosmological Redshift
1.5 Distances to Galaxies
2 Age and Size
2.1 Measuring Age
2.2 Measuring Size
3 Variable Stars
3.1 Pulsating Variable Stars
3.1.1 Cepheid Variables
3.1.2 RR Lyrae Variables
3.1.3 RV Tauri Variables
3.1.4 Long Period/Semiregular Variables
3.2 Binary Variables
3.3 Cataclysmic Variables
3.3.1 Classical Nova
3.3.2 Recurrent Novae
3.3.3 Dwarf Novae (U Geminorum)
3.3.4 X-Ray Binary
3.3.5 Polar (AM Herculis) star
3.3.6 Intermediate Polar (DQ Herculis) star
3.3.7 Super Soft Source (SSS)
3.3.8 VY Sculptoris stars
3.3.9 AM Canum Venaticorum stars
3.3.10 SW Sextantis stars
3.3.11 Symbiotic Stars
3.3.12 Pulsating White Dwarfs
4 Galaxy Classification
4.1 Elliptical Galaxies
4.2 Spirals
4.3 Classification
4.4 The Milky Way Galaxy (MWG)
4.4.1 Scale Height
4.4.2 Magellanic Clouds
5 Galaxy Interactions
6 Interstellar Medium
7 Active Galactic Nuclei
7.1 AGN Equations
8 Spectra
8.1 21 cm line
9 Black Holes
9.1 Stellar Black Holes

Today in Astronomy 106: Exoplanets

Today in Astronomy 106: exoplanets

The successful search for extrasolar planets
Prospects for determining the fraction of stars with planets, and the number of habitable planets per planetary system (fp and ne).

T. Pyle, SSC/JPL/Caltech/NASA.

26 May 2011 Astronomy 106, Summer 2011

Observing exoplanets

Stars are vastly brighter and more massive than planets, and most stars are far enough away that the planets are lost in the glare. So astronomers have had to be more clever and employ the motion of the orbiting planet. The methods they use (exoplanets detected thereby):

Astrometry (0): tiny wobble in star’s motion across the sky.
Radial velocity (399): tiny wobble in star’s motion along the line of sight by Doppler shift.
Timing (9): tiny delay or advance in arrival of pulses from regularly-pulsating stars.
Gravitational microlensing (10): brightening of very distant star as it passes behind a planet.
Transits (69): periodic eclipsing of star by planet, or vice versa. Very small effect, about like that of a bug flying in front of the headlight of a car 10 miles away.
Imaging (11 but 6 are most likely to be faint stars): taking a picture of the planet, usually by blotting out the star.

Of these by far the most useful so far has been the combination of radial-velocity and transit detection. Astrometry and gravitational microlensing of sufficient precision to detect lots of planets would need dedicated, specialized observatories in space. Imaging lots of planets will require 30-meter-diameter telescopes for visible and infrared wavelengths.
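The per-method counts quoted in the Astronomy 106 excerpt can be tallied to see how strongly radial velocity and transits dominate. The following is a minimal sketch, not part of the lecture: the dictionary keys and the helper name `method_shares` are illustrative, and it assumes the six counts can simply be summed, which would double count any planet detected by more than one method. The imaging entry uses the quoted figure of 11, even though the excerpt notes that 6 of those are most likely faint stars.

```python
# Counts per detection method as quoted in the lecture excerpt (26 May 2011).
# Assumption: the counts are treated as disjoint, so the total is a plain sum.
detections = {
    "astrometry": 0,
    "radial velocity": 399,
    "timing": 9,
    "gravitational microlensing": 10,
    "transits": 69,
    "imaging": 11,  # the excerpt notes 6 of these are most likely faint stars
}


def method_shares(counts):
    """Return the total count and each method's fraction of that total."""
    total = sum(counts.values())
    shares = {method: n / total for method, n in counts.items()}
    return total, shares


total, shares = method_shares(detections)
rv_plus_transit = shares["radial velocity"] + shares["transits"]

print(f"total detections (simple sum): {total}")
for method, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{method:28s} {share:6.1%}")
print(f"radial velocity + transits: {rv_plus_transit:.1%}")
```

Under these assumptions, radial velocity and transits together account for 468 of the 498 quoted detections, in line with the excerpt's remark that this combination has been by far the most useful.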

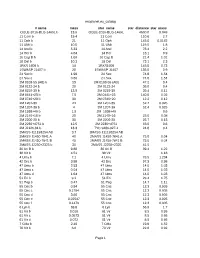

Exoplanet.Eu Catalog Page 1 # Name Mass Star Name

exoplanet.eu_catalog

# name mass star_name star_distance star_mass
OGLE-2016-BLG-1469L b 13.6 OGLE-2016-BLG-1469L 4500.0 0.048
11 Com b 19.4 11 Com 110.6 2.7
11 Oph b 21 11 Oph 145.0 0.0162
11 UMi b 10.5 11 UMi 119.5 1.8
14 And b 5.33 14 And 76.4 2.2
14 Her b 4.64 14 Her 18.1 0.9
16 Cyg B b 1.68 16 Cyg B 21.4 1.01
18 Del b 10.3 18 Del 73.1 2.3
1RXS 1609 b 14 1RXS1609 145.0 0.73
1SWASP J1407 b 20 1SWASP J1407 133.0 0.9
24 Sex b 1.99 24 Sex 74.8 1.54
24 Sex c 0.86 24 Sex 74.8 1.54
2M 0103-55 (AB) b 13 2M 0103-55 (AB) 47.2 0.4
2M 0122-24 b 20 2M 0122-24 36.0 0.4
2M 0219-39 b 13.9 2M 0219-39 39.4 0.11
2M 0441+23 b 7.5 2M 0441+23 140.0 0.02
2M 0746+20 b 30 2M 0746+20 12.2 0.12
2M 1207-39 24 2M 1207-39 52.4 0.025
2M 1207-39 b 4 2M 1207-39 52.4 0.025
2M 1938+46 b 1.9 2M 1938+46 0.6
2M 2140+16 b 20 2M 2140+16 25.0 0.08
2M 2206-20 b 30 2M 2206-20 26.7 0.13
2M 2236+4751 b 12.5 2M 2236+4751 63.0 0.6
2M J2126-81 b 13.3 TYC 9486-927-1 24.8 0.4
2MASS J11193254 AB 3.7 2MASS J11193254 AB
2MASS J1450-7841 A 40 2MASS J1450-7841 A 75.0 0.04
2MASS J1450-7841 B 40 2MASS J1450-7841 B 75.0 0.04
2MASS J2250+2325 b 30 2MASS J2250+2325 41.5
30 Ari B b 9.88 30 Ari B 39.4 1.22
38 Vir b 4.51 38 Vir 1.18
4 Uma b 7.1 4 Uma 78.5 1.234
42 Dra b 3.88 42 Dra 97.3 0.98
47 Uma b 2.53 47 Uma 14.0 1.03
47 Uma c 0.54 47 Uma 14.0 1.03
47 Uma d 1.64 47 Uma 14.0 1.03
51 Eri b 9.1 51 Eri 29.4 1.75
51 Peg b 0.47 51 Peg 14.7 1.11
55 Cnc b 0.84 55 Cnc 12.3 0.905
55 Cnc c 0.1784 55 Cnc 12.3 0.905
55 Cnc d 3.86 55 Cnc 12.3 0.905
55 Cnc e 0.02547 55 Cnc 12.3 0.905
55 Cnc f 0.1479 55