Alexander Karatarakis

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

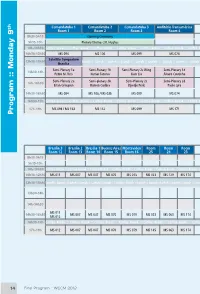

Program :: Monday 9

Rooms 1-11: Comandatuba 1, Comandatuba 2, Comandatuba 3, Auditório Transamérica, Una, Ilhéus, São Paulo 3, São Paulo 2, São Paulo 1, Quito, Santiago. 8h30-9h15: Opening Ceremony. 9h15-10h: Plenary, Thomas J.R. Hughes. 10h-10h30: Coffee Break. 10h30-12h30: MS 094, MS 106, MS 099, MS 074, MS 142, MS 133, MS 001, MS 075, MS 085, MS 002, Satellite Symposium. 12h30-13h30: Lunch. 13h30-14h: Semi-Plenary 1a, Semi-Plenary 1b, Semi-Plenary 2c, Semi-Plenary 1d (Pedro M. Reis, Kumar Tamma, Wing Kam Liu, Álvaro Coutinho). 14h-14h30: Semi-Plenary 2a, Semi-Plenary 2b, Semi-Plenary 2c, Semi-Plenary 2d (Eitan Grinspun, Ramon Codina, Djordje Peric, Paulo Lyra). 14h30-16h30: MS 094, MS 106 / MS 035, MS 099, MS 074, MS 142, MS 133, MS 001, MS 075, MS 085, MS 002. 16h30-17h: Coffee Break. 17h-19h: MS 094 / MS 163, MS 132, MS 099, MS 171, MS 142 / MS 005, MS 116, MS 001, MS 075, MS 085, MS 002. Further rooms listed: Brasília 3, Brasília 2, Brasília 1, Buenos Aires, Montevideo -

Numerical Solution for Two-Dimensional Flow Under Sluice Gates Using the Natural Element Method

Numerical solution for two-dimensional flow under sluice gates using the natural element method. Farhang Daneshmand, S.A. Samad Javanmard, Tahereh Liaghat, Mohammad Mohsen Moshksar, and Jan F. Adamowski. Abstract: Fluid loads on a variety of hydraulic structures and the free surface profile of the flow are important for design purposes. Computing them is a difficult task because the governing equations have nonlinear boundary conditions. The main objective of this paper is to develop a procedure based on the natural element method (NEM) for computation of free surface profiles, velocity and pressure distributions, and flow rates for a two-dimensional gravity fluid flow under sluice gates. The natural element method is a numerical technique in the field of computational mechanics and can be considered a meshless method. In this analysis, the fluid was assumed to be inviscid and incompressible. The results obtained in the paper were confirmed via a hydraulic model test. Calculation results indicate good agreement with previous flow solutions for the water surface profiles and pressure distributions throughout the flow domain and on the gate. Key words: free surface flow, meshless methods, meshfree methods, natural element method, sluice gate, hydraulic structures. Résumé: Fluid loads on hydraulic structures and the free surface profile of the flow are important for design purposes. This design is a difficult task because the governing equations have nonlinear boundary conditions. The main objective of this article is to develop a procedure based on the natural element method (NEM) to compute free surface profiles, velocity and pressure distributions, and flow rates for a two-dimensional gravity flow under sluice gates. -

14th U.S. National Congress on Computational Mechanics

14th U.S. National Congress on Computational Mechanics, Montréal, July 17-20, 2017. Congress Program at a Glance. Registration: Monday, July 17 to Wednesday, July 19, 7:30 am - 5:30 pm; Thursday, July 20, 7:30 am - 11:30 am. Sunday, July 16: Short Course Registration 8:00 am - 9:30 am; Short Courses 9:00 am - 12:00 pm; Lunch Break; Short Courses 1:00 pm - 4:00 pm; Congress Registration 2:00 pm - 8:00 pm. 8:30 am - 9:00 am: Opening. 9:00 am - 9:45 am, Plenary Lectures (PL): Tarek Zohdi (Chair: J.T. Oden), Andrew Stuart (Chair: T. Hughes), Mark Ainsworth (Chair: L. Demkowicz), Anthony Patera (Chair: M. Paraschivoiu). 9:45 am - 10:15 am: Coffee Break. 10:15 am - 11:55 am: Technical Sessions TS1, TS4, TS7, TS10. 11:55 am - 1:30 pm: Lunch Break; Closing. 1:30 pm - 2:15 pm, Semi-Plenary Lectures (SPL): aSPL Raúl Tempone, Ron Miller, Eldad Haber; bSPL Marino Arroyo, Beth Wingate, Margot Gerritsen. 2:15 pm - 2:30 pm: Break-out. 2:30 pm - 4:10 pm: Technical Sessions TS2, TS5, TS8. 4:10 pm - 4:40 pm: Coffee Break. 4:40 pm - 6:20 pm: Technical Session TS3, Poster Session TS6, Technical Session TS9. Evening: Reception Cocktail on the 7th floor Terrace; Cocktail and Banquet; Viewing of Fireworks; Fireworks and Closing Reception on the 7th floor Terrace (listed slots: 6:00 pm - 8:00 pm, 7:00 pm - 7:30 pm, 7:30 pm - 9:30 pm, 10:00 pm - 10:30 pm). Locations: Opening in 517BC; Coffee Breaks in 517A; Plenary Lectures in 517BC; aSPL in 517D; bSPL in 516BC; Poster Session in 517A; Banquet in 517BC. On behalf of Polytechnique Montréal, it is my pleasure to welcome, to Montreal, the 14th U.S. -

Analysis-Guided Improvements of the Material Point Method

ANALYSIS-GUIDED IMPROVEMENTS OF THE MATERIAL POINT METHOD by Michael Dietel Steffen. A dissertation submitted to the faculty of The University of Utah in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Computing. School of Computing, The University of Utah, December 2009. Copyright © Michael Dietel Steffen 2009. All Rights Reserved. THE UNIVERSITY OF UTAH GRADUATE SCHOOL, SUPERVISORY COMMITTEE APPROVAL of a dissertation submitted by Michael Dietel Steffen: This dissertation has been read by each member of the following supervisory committee and by majority vote has been found to be satisfactory. Chair: Robert M. Kirby; Martin Berzins; Christopher R. Johnson; Steven G. Parker; James E. Guilkey. FINAL READING APPROVAL. To the Graduate Council of the University of Utah: I have read the dissertation of Michael Dietel Steffen in its final form and have found that (1) its format, citations, and bibliographic style are consistent and acceptable; (2) its illustrative materials including figures, tables, and charts are in place; and (3) the final manuscript is satisfactory to the Supervisory Committee and is ready for submission to The Graduate School. Robert M. Kirby, Chair, Supervisory Committee. Approved for the Major Department: Martin Berzins, Chair/Dean. Approved for the Graduate Council: Charles A. Wight, Dean of The Graduate School. ABSTRACT: The Material Point Method (MPM) has shown itself to be a powerful tool in the simulation of large deformation problems, especially those involving complex geometries and contact where typical finite element type methods frequently fail. While these large complex problems lead to some impressive simulations and solutions, there has been a lack of basic analysis characterizing the errors present in the method, even on the simplest of problems. -

Scalable Large-Scale Fluid-Structure Interaction Solvers in the Uintah Framework Via Hybrid Task-Based Parallelism Algorithms

Scalable Large-scale Fluid-structure Interaction Solvers in the Uintah Framework via Hybrid Task-based Parallelism Algorithms. Qingyu Meng, Martin Berzins. UUSCI-2012-004, Scientific Computing and Imaging Institute, University of Utah, Salt Lake City, UT 84112 USA. May 7, 2012. Abstract: Uintah is a software framework that provides an environment for solving fluid-structure interaction problems on structured adaptive grids on large-scale science and engineering problems involving the solution of partial differential equations. Uintah uses a combination of fluid-flow solvers and particle-based methods for solids, together with adaptive meshing and a novel asynchronous task-based approach with fully automated load balancing. When applying Uintah to fluid-structure interaction problems with mesh refinement, the combination of adaptive meshing and the movement of structures through space presents a formidable challenge in terms of achieving scalability on large-scale parallel computers. With core counts per socket continuing to grow along with the prospect of less memory per core, adopting a model that uses MPI to communicate between nodes and a shared memory model on-node is one approach to achieve scalability at full machine capacity on current and emerging large-scale systems. For this approach to be successful, it is necessary to design data structures that large numbers of cores can simultaneously access without contention. These data structures and algorithms must also be designed to avoid the overhead involved with locks and other synchronization primitives when running on large numbers of cores per node, as contention for acquiring locks quickly becomes untenable. This scalability challenge is addressed here for Uintah by the development of new hybrid runtime and scheduling algorithms combined with novel lock-free data structures, making it possible for Uintah to achieve excellent scalability for a challenging fluid-structure problem with mesh refinement on as many as 260K cores. -

The Material-Point Method for Granular Materials

Comput. Methods Appl. Mech. Engrg. 187 (2000) 529–541, www.elsevier.com/locate/cma. The material-point method for granular materials. S.G. Bardenhagen (a), J.U. Brackbill (b,*), D. Sulsky (c). (a) ESA-EA, MS P946, Los Alamos National Laboratory, Los Alamos, NM 87545, USA; (b) T-3, MS B216, Los Alamos National Laboratory, Los Alamos, NM 87545, USA; (c) Department of Mathematics and Statistics, University of New Mexico, Albuquerque, NM 87131, USA. Abstract: A model for granular materials is presented that describes both the internal deformation of each granule and the interactions between grains. The model, which is based on the FLIP-material point, particle-in-cell method, solves continuum constitutive models for each grain. Interactions between grains are calculated with a contact algorithm that forbids interpenetration, but allows separation and sliding and rolling with friction. The particle-in-cell method eliminates the need for a separate contact detection step. The use of a common rest frame in the contact model yields a linear scaling of the computational cost with the number of grains. The properties of the model are illustrated by numerical solutions of sliding and rolling contacts, and for granular materials by a shear calculation. The results of numerical calculations demonstrate that contacts are modeled accurately for smooth granules whose shape is resolved by the computation mesh. © 2000 Elsevier Science S.A. All rights reserved. 1. Introduction. Granular materials are large conglomerations of discrete macroscopic particles, which may slide against one another but not penetrate [9]. Like a liquid, granular materials can flow to assume the shape of the container. -

References (In Progress)

Appendix R: References (in progress). Contents: §R.1 Foreword; §R.2 Reference Database. §R.1. Foreword. Collected references for most Chapters (except those in progress) for the books: Advanced Finite Element Methods, master-elective and doctoral level, abbrv. AFEM; Advanced Variational Methods in Mechanics, master-elective and doctoral level, abbrv. AVMM; Fluid Structure Interaction, doctoral level, abbrv. FSI; Introduction to Aerospace Structures, junior undergraduate level, abbrv. IAST; Matrix Finite Element Methods in Statics, senior-elective and master level, abbrv. MFEMS; Matrix Finite Element Methods in Dynamics, senior-elective and master level, abbrv. MFEMD; Introduction to Finite Element Methods, senior-elective and master level, abbrv. IFEM; Nonlinear Finite Element Methods, master-elective and doctoral level, abbrv. NFEM. Margin letters are to facilitate sort; will be removed on completion. Note 1: Many books listed below are out of print. The advent of the Internet has meant that it is easier to surf for used books across the world without moving from your desk. There is a fast search “metaengine” for comparing prices at URL http://www.addall.com: click on the “search for used books” link. Amazon.com also has a search engine, which is poorly organized, confusing and full of unnecessary hype, but does link to online reviews. [Since about 2008, old scanned books posted online on Google are an additional potential source; free of charge if the useful pages happen to be displayed. Such files cannot be downloaded or printed.] Note 2. -

BOOK of ABSTRACTS Conferências 5: Book of Abstracts 15/05/2018, 08:15

4th International Conference on Mechanics of Composites, 9-12 July 2018, Universidad Carlos III de Madrid, Spain. Chairs: A. J. M. Ferreira (Univ. Porto, Portugal), Carlos Santiuste (Univ. Carlos III, Spain). Book of Abstracts. 14470 | NUMERICAL MODELLING OF SOFT ARMOUR PANELS UNDER HIGH-VELOCITY IMPACTS (Composite Structures). Ester Jalón, Department of Continuum Mechanics and Structural Analysis, Universidad Carlos III de Madrid, Spain; Marcos Rodriguez, Department of Mechanical Engineering, Universidad Carlos III de Madrid, Spain; José Antonio Loya, Department of Continuum Mechanics and Structural Analysis, Universidad Carlos III de Madrid, Spain; Carlos Santiuste, Department of Continuum Mechanics and Structural Analysis, Universidad Carlos III de Madrid, Spain. Nowadays, the role of personal protection is crucial in order to minimize the morbidity and mortality from ballistic injuries. The aim of this research is to investigate the behavior of UHMWPE fibre under high-velocity impact. There are two different UHMWPE packages: ‘soft’ ballistics packages (SB) and rigid ‘hard’ ballistics packages (HB). UHMWPE UD has not been sufficiently analyzed since new SB layers have been manufactured. This work compares the impact behavior of different UHMWPE composites using spherical projectiles. Firstly, the parameters affecting the ballistic capacity are determined in order to define an optimal configuration that fulfills the manufacturing requirements. Then, the experimental tests are conducted on different single SB sheets under spherical projectiles. Finally, the FEM model is validated with experimental tests on multilayer specimens of the different UD materials considered. A FEM model is developed to predict the response of UHMWPE sheets under ballistic impact and calibrated with the previous experimental tests. -

A Numerical Formulation and Algorithm for Limit and Shakedown Analysis Of

A numerical formulation and algorithm for limit and shakedown analysis of large-scale elastoplastic structures. Heng Peng (1), Yinghua Liu (1,*) and Haofeng Chen (2). (1) Department of Engineering Mechanics, AML, Tsinghua University, Beijing 100084, People’s Republic of China; (2) Department of Mechanical and Aerospace Engineering, University of Strathclyde, Glasgow G1 1XJ, UK. (*) Corresponding author. Abstract: In this paper, a novel direct method called the stress compensation method (SCM) is proposed for limit and shakedown analysis of large-scale elastoplastic structures. Without needing to solve the specific mathematical programming problem, the SCM is a two-level iterative procedure based on a sequence of linear elastic finite element solutions where the global stiffness matrix is decomposed only once. In the inner loop, the statically admissible residual stress field for shakedown analysis is constructed. In the outer loop, a series of decreasing load multipliers are updated to approach the shakedown limit multiplier by using an efficient and robust iteration control technique, where the static shakedown theorem is adopted. Three numerical examples with up to about 140,000 finite element nodes confirm the applicability and efficiency of this method for two-dimensional and three-dimensional elastoplastic structures, with detailed discussions on the convergence and the accuracy of the proposed algorithm. Keywords: Direct method; Shakedown analysis; Stress compensation method; Large-scale; Elastoplastic structures. 1 Introduction. In many fields of technology, such as petrochemical, civil, mechanical and space engineering, structures are usually subjected to variable repeated loading. The computation of the load-carrying capability of those structures beyond the elastic limit is an important but difficult task in structural design and integrity assessment. -

And Smoothed Particle Hydrodynamics (SPH) Computational Techniques

A strategy to couple the material point method (MPM) and smoothed particle hydrodynamics (SPH) computational techniques. Citation: Raymond, Samuel J., Bruce Jones, and John R. Williams. “A Strategy to Couple the Material Point Method (MPM) and Smoothed Particle Hydrodynamics (SPH) Computational Techniques.” Computational Particle Mechanics 5, no. 1 (December 10, 2016): 49–58. As Published: http://dx.doi.org/10.1007/s40571-016-0149-9. Publisher: Springer International Publishing. Version: Author's final manuscript. Citable link: http://hdl.handle.net/1721.1/116233. Terms of Use: Creative Commons Attribution-Noncommercial-Share Alike. Detailed Terms: http://creativecommons.org/licenses/by-nc-sa/4.0/. Abstract: A strategy is introduced to allow coupling of the Material Point Method (MPM) and Smoothed Particle Hydrodynamics (SPH) for numerical simulations. This new strategy partitions the domain into SPH and MPM regions; particles carry all state variables, and as such no special treatment is required for the transition between regions. The aim of this work is to derive and validate the coupling methodology between MPM and SPH. Such coupling allows for general boundary conditions to be used in an SPH simulation without further augmentation. Additionally, as SPH is a purely particle method and MPM is a combination of particles and a mesh, this coupling also permits a smooth transition from particle methods to mesh methods. -

Numerical Modeling of Metal Cutting Processes Using the Particle Finite Element Method

Numerical modeling of metal cutting processes using the Particle Finite Element Method, by Juan Manuel Rodriguez Prieto. Advisors: Juan Carlos Cante Terán, Xavier Oliver Olivella. Barcelona, October 2013. Abstract: Metal cutting or machining is a process in which a thin layer of metal, the chip, is removed by a wedge-shaped tool from a large body. Metal cutting processes are present in big industries (automotive, aerospace, home appliance, etc.) that manufacture big products, but also in high-tech industries where small, high-precision pieces are needed. Machining is so important that it is the most common manufacturing process for producing parts and obtaining specified geometrical dimensions and surface finish; its cost represents 15% of the value of all manufactured products in all industrialized countries. Cutting is a complex physical phenomenon in which friction, adiabatic shear bands, excessive heating, large strains and high strain rates are present. Tool geometry, rake angle and cutting speed play an important role in chip morphology, cutting forces, energy consumption and tool wear. The study of metal cutting is difficult from an experimental point of view because of the high speed at which it takes place under industrial machining conditions (experiments are difficult to carry out), the small scale of the phenomena to be observed, and the continuous development of tool and workpiece materials and of tool geometries, among other reasons. Simulation of machining processes, in which the workpiece material is highly deformed during cutting, is a major challenge for the finite element method (FEM). The principal problem in using a conventional FE model with a Lagrangian mesh is mesh distortion under high deformation. -

A Stabilized and Coupled Meshfree/Meshbased Method for Fluid-Structure Interaction Problems

Braunschweiger Schriften zur Mechanik Nr. 59-2005. A Stabilized and Coupled Meshfree/Meshbased Method for Fluid-Structure Interaction Problems, by Thomas-Peter Fries, from Lübeck. Published by the Institut für Angewandte Mechanik of the Technische Universität Braunschweig. Editor: Prof. H. Antes, Institut für Angewandte Mechanik, Postfach 3329, 38023 Braunschweig. ISBN 3-920395-58-1. Dissertation approved by the Faculty of Civil Engineering at the Technische Universität Carolo-Wilhelmina zu Braunschweig in Brunswick, Germany, for the degree of Doktor-Ingenieur (Dr.-Ing.). Submitted on: 10.05.2005. Oral examination on: 21.07.2005. Reviewers: Prof. H.G. Matthies, Inst. für Wissenschaftliches Rechnen; Prof. M. Krafczyk, Inst. für Computeranwendungen im Bauingenieurwesen. © Copyright 2005 Thomas-Peter Fries, Brunswick, Germany. Abstract: A method is presented which combines features of meshfree and meshbased methods in order to enable the simulation of complex flow problems involving large deformations of the domain or moving and rotating objects. Conventional meshbased methods like the finite element method have matured as standard tools for the simulation of fluid and structure problems. They offer efficient and reliable approximations, provided that a conforming mesh with sufficient quality can be maintained throughout the simulation. This, however, may not be guaranteed for complex fluid and fluid-structure interaction problems. Meshfree methods, on the other hand, approximate partial differential equations based on a set of nodes without the need for an additional mesh. Therefore, these methods are frequently used for problems where suitable meshes are prohibitively expensive to construct and maintain.