How Misinformation Spreads Through Twitter

Total pages: 16

File type: PDF, Size: 1020 KB


Recommended publications


Touchstones of Popular Culture Among Contemporary College Students in the United States

Minnesota State University Moorhead RED: a Repository of Digital Collections Dissertations, Theses, and Projects Graduate Studies Spring 5-17-2019 Touchstones of Popular Culture Among Contemporary College Students in the United States Margaret Thoemke [email protected] Follow this and additional works at: https://red.mnstate.edu/thesis Part of the Higher Education and Teaching Commons Recommended Citation Thoemke, Margaret, "Touchstones of Popular Culture Among Contemporary College Students in the United States" (2019). Dissertations, Theses, and Projects. 167. https://red.mnstate.edu/thesis/167 This Thesis (699 registration) is brought to you for free and open access by the Graduate Studies at RED: a Repository of Digital Collections. It has been accepted for inclusion in Dissertations, Theses, and Projects by an authorized administrator of RED: a Repository of Digital Collections. For more information, please contact [email protected]. Touchstones of Popular Culture Among Contemporary College Students in the United States A Thesis Presented to The Graduate Faculty of Minnesota State University Moorhead By Margaret Elizabeth Thoemke In Partial Fulfillment of the Requirements for the Degree of Master of Arts in Teaching English as a Second Language May 2019 Moorhead, Minnesota Copyright 2019 Margaret Elizabeth Thoemke Dedication I would like to dedicate this thesis to my three most favorite people in the world. To my mother, Heather Flaherty, for always supporting me and guiding me to where I am today. To my husband, Jake Thoemke, for pushing me to be the best I can be and reminding me that I’m okay. Lastly, to my son, Liam, who is my biggest fan and my reason to be the best person I can be.

Implementación Del Closed Caption Y/O Subtitulos Para Desarrollar La Habilidad De Comprensión Auditiva En Inglés Como Leng

IMPLEMENTACIÓN DEL CLOSED CAPTION Y/O SUBTITULOS PARA DESARROLLAR LA HABILIDAD DE COMPRENSIÓN AUDITIVA EN INGLÉS COMO LENGUA EXTRANJERA (Implementation of closed captions and/or subtitles to develop listening comprehension in English as a foreign language). SERGIO ESTEBAN OSEJO FONSECA. Undergraduate thesis submitted for the degree of Licenciado en Lenguas Modernas. Advisor: Ruth M. Domínguez C. PONTIFICIA UNIVERSIDAD JAVERIANA, FACULTAD DE CIENCIAS COMUNICACIÓN Y LENGUAJE, DEPARTAMENTO DE LENGUAS MODERNAS, Bogotá, 2009. To Sarah and Mac, for their loyalty and strength.
CONTENTS
INTRODUCTION
1. PROBLEM
1.1 PRESENTATION OF THE PROBLEM
1.1.1 Research question
1.2 IMPORTANCE OF THE RESEARCH
1.2.1 Justification of the problem
1.2.2 State of the art
2. OBJECTIVES
2.1 GENERAL OBJECTIVE
2.1 SPECIFIC OBJECTIVES
3. FRAME OF REFERENCE
3.1 CONCEPTUAL FRAMEWORK
3.1.1 Learning English as a foreign language
3.1.2 Teaching English as a foreign language
3.1.2.1 Methodology of teaching English as a foreign language
3.1.2.2 Communicative approach
3.1.3 Pedagogical strategies
3.1.4 Development of the listening skill
3.1.5 New technologies
3.1.6 Closed Caption
3.1.6.1 What does the Closed Caption service consist of?
3.1.6.2 Education and Closed Caption
3.1.6.3 Closed Caption in Colombia
3.2 METHODOLOGICAL FRAMEWORK
3.2.1 Phases of the research
3.2.2 Research context
3.2.3 Population and sample
3.2.4 Techniques and instruments for data collection
3.2.5 Validity and reliability of the data collection instruments
4.

Wood Cutting Permit

CITY OF CRETE DEPARTMENTS OF PUBLIC WORKS
Permit to enter City property for purposes of woodcutting and wood hauling
Conditions:
1. A valid permit is required to enter City property for purposes of cutting and hauling wood.
2. Permit is valid only for the undersigned as listed.
3. Permit only valid during the hours between 8:00 am to 8:00 pm Monday through Saturday and 10:00 am to 8:00 pm on Sunday.
4. City personnel will not aid or assist in cutting or loading.
5. Each permit holder is responsible for their own personal safety. The City of Crete assumes no responsibility or liability for permit holder or accompanying parties in case of accidents.
6. Permit holders are expected to be courteous of others. The City reserves the right to expel permit holders for any reason.
7. This wood is for individual personal use only. No resale for profit or commercial use is allowed.
8. By signing below, applicant agrees to all terms as listed above. Applicant will also read and sign the release statement accompanying this permit for permit to be valid.
9. This permit is valid from January 1 to December 31.
10. $10.00 fee charged to residents outside of City Limits.
Name: ____________________
License number of vehicle/and trailer to be used: ____________________
Date permit issued: ____________________
Signature of applicant: ____________________
Issued by: _____
City of Crete • Departments of Public Works • 243

Hand-Arm Vibration in the Aetiology of Hearing Loss in Lumberjacks

British Journal of Industrial Medicine 1981;38:281-289. Hand-arm vibration in the aetiology of hearing loss in lumberjacks. I PYYKKO,¹ J STARCK,² M FARKKILA,¹ M HOIKKALA,² O KORHONEN,² AND M NURMINEN². From the Institute of Physiology,¹ University of Helsinki, and the Institute of Occupational Health,² Helsinki, Finland. ABSTRACT A longitudinal study of hearing loss was conducted among a group of lumberjacks in the years 1972 and 1974-8. The number of subjects increased from 72 in 1972 to 203 in 1978. They were classified according to (1) a history of vibration-induced white finger (VWF), (2) age, (3) duration of exposure, and (4) duration of ear muff usage. The hearing level at 4000 Hz was used to indicate the noise-induced permanent threshold shift (NIPTS). The lumberjacks were exposed, at their present pace of work, to noise, Leq values 96-103 dB(A), and to the vibration of a chain saw (linear acceleration 30-70 m/s²). The chain saws of the early 1960s were more hazardous, with the average noise level of 111 dB(A) and a variation acceleration of 60-180 m/s². When classified on the basis of age, the lumberjacks with VWF had about a 10 dB greater NIPTS than subjects without VWF. NIPTS increased with the duration of exposure to chain saw noise, but with equal noise exposure the NIPTS was about 10 dB greater in lumberjacks with VWF than without VWF. With the same duration of ear protection the lumberjacks with VWF consistently had about a 10 dB greater NIPTS than those without VWF.

CLR 54-2.Pdf (779.2Kb)

Vol. 54, No. 2, 2021. SCHOOL OF LAW, CREIGHTON UNIVERSITY, OMAHA, NEBRASKA
BOARD OF EDITORS
HALLIE A. HAMILTON, Editor-in-Chief
CHRISTOPHER GREENE, Senior Executive Editor
SAPPHIRE ANDERSEN, Research Editor
CALLIE A. KANTHACK, Senior Lead Articles Editor
SARAH MIELKE, Executive Editor
THOMAS R. NORVELL, Executive Editor
DEANNA M. MATHEWS, Executive Editor
ERIC M. HAGEN, BEAU R. MORGAN, JUSTICE A. SIMANEK, Student Articles Editors
EDITORIAL STAFF: DANIEL J. MCDOWELL
ASSOCIATE STAFF: RILEY E. ARNOLD, NICHOLAS BANELLI, KIMBERLY DUGGAN, JACQUELIN FARQUHAR, NATALIE C. KOZEL, ROBERT NORTON, ROBERT J. TOTH JR., KAITLYN WESTHOFF
GENERAL STAFF: KRYSTA APPLEGATE-HAMPTON, DON HARSH, FRANKIE HASS, ADDISON C. MCCAULEY, NICKOLAS SACHAU, LAURA STEELE
FACULTY ADVISOR: CAROL C. KNOEPFLER, DANIEL L. REAL
BUSINESS MANAGER: DIANE KRILEY
SPECIAL TRIBUTE
A TRIBUTE TO PROFESSOR KEN MELILLI, Patrick J. Borchers
ARTICLES
MEDICAL PAROLE-RELATED PETITIONS IN U.S. COURTS: SUPPORT FOR REFORMING COMPASSIONATE RELEASE, Dr. Sarah L. Cooper & Cory Bernard
FEDERAL-STATE PROGRAMS AND STATE - OR IS IT FEDERAL? - ACTION, Michael E. Rosman
FOURTH AMENDMENT CONSENT SEARCHES AND THE DUTY OF FURTHER INQUIRY, Norman Hobbie Jr.
CATHOLIC SOCIAL TEACHING AND THE ROLE OF THE PROSECUTOR, Zachary B. Pohlman
EXTRALEGAL INFLUENCES ON JUROR DECISION MAKING IN SUITS AGAINST FIREARM MANUFACTURERS, Nathan D. Harp
NOTES
WHAT IS A “REASON TO BELIEVE”? EXECUTION OF AN ARREST WARRANT AT A SUSPECT’S RESIDENCE SHOULD REQUIRE PROBABLE CAUSE, Robert Norton
The CREIGHTON LAW REVIEW (ISSN 0011-1155) is published four times a year in December, March, June and September by the students of the Creighton University School of Law, 2133 California St., Omaha, NE 68178.

What Is Gab? a Bastion of Free Speech Or an Alt-Right Echo Chamber?

What is Gab? A Bastion of Free Speech or an Alt-Right Echo Chamber?
Savvas Zannettou, Cyprus University of Technology, [email protected]
Barry Bradlyn, Princeton Center for Theoretical Science, [email protected]
Emiliano De Cristofaro, University College London, [email protected]
Haewoon Kwak, Qatar Computing Research Institute & Hamad Bin Khalifa University, [email protected]
Michael Sirivianos, Cyprus University of Technology, [email protected]
Gianluca Stringhini, University College London, [email protected]
Jeremy Blackburn, University of Alabama at Birmingham, [email protected]
ABSTRACT Over the past few years, a number of new “fringe” communities, like 4chan or certain subreddits, have gained traction on the Web at a rapid pace. However, more often than not, little is known about how they evolve or what kind of activities they attract, despite recent research has shown that they influence how false information reaches mainstream communities. This motivates the need to monitor these communities and analyze their impact on the Web’s information ecosystem. In August 2016, a new social network called Gab was created as an alternative to Twitter.
ACM Reference Format: Savvas Zannettou, Barry Bradlyn, Emiliano De Cristofaro, Haewoon Kwak, Michael Sirivianos, Gianluca Stringhini, and Jeremy Blackburn. 2018. What is Gab? A Bastion of Free Speech or an Alt-Right Echo Chamber?. In WWW ’18 Companion: The 2018 Web Conference Companion, April 23–27, 2018, Lyon, France. ACM, New York, NY, USA, 8 pages. https://doi.org/10.1145/3184558.3191531
1 INTRODUCTION The Web’s information ecosystem is composed of multiple com-

Teodor Mateoc Editor

TEODOR MATEOC, editor. CULTURAL TEXTS AND CONTEXTS IN THE ENGLISH SPEAKING WORLD (V). Editura Universităţii din Oradea, 2017.
Editor: TEODOR MATEOC
Editorial Board: IOANA CISTELECAN, MADALINA PANTEA, GIULIA SUCIU, EVA SZEKELY
Advisory Board: JOSE ANTONIO ALVAREZ AMOROS, University of Alicante, Spain; ANDREI AVRAM, University of Bucharest, Romania; ROGER CRAIK, University of Ohio, USA; SILVIE CRINQUAND, University of Bourgogne, France; SEAN DARMODY, Trinity College, Dublin, Ireland; ANDRZEJ DOROBEK, Instytut Neofilologii, Plock, Poland; STANISLAV KOLAR, University of Ostrava, Czech Republic; ELISABETTA MARINO, University Tor Vergata, Rome; MIRCEA MIHAES, Universitatea de Vest, Timisoara; VIRGIL STANCIU, Babes Bolyai University, Cluj-Napoca; PAUL WILSON, University of Lodz, Poland; DANIELA FRANCESCA VIRDIS, University of Cagliari, Italy; INGRIDA ZINDZIUVIENE, Vytautas Magnus University, Kaunas, Lithuania
Publisher: The Department of English Language and Literature, Faculty of Letters, University of Oradea. ISSN 2067-5348
CONTENTS
Introduction. Cultural Texts and Contexts in the English Speaking World: The Fifth Edition
I. BRITISH AND COMMONWEALTH LITERATURE
Adela Dumitrescu, Physiognomy of Fashion in Fiction: Jane Austen
Elisabetta Marino, “Unmaidenly” Maidens: Rhoda Broughton’s Controversial Heroines
Alexandru

How National and Local Incidents Sparked Action at the UNLV University Libraries

Library Faculty Publications Library Faculty/Staff Scholarship & Research 1-2-2020 Responding to Hate: How National and Local Incidents Sparked Action at the UNLV University Libraries Brittany Paloma Fiedler University of Nevada, Las Vegas, [email protected] Rosan Mitola University of Nevada, Las Vegas, [email protected] James Cheng University of Nevada, Las Vegas, [email protected] Follow this and additional works at: https://digitalscholarship.unlv.edu/lib_articles Part of the Library and Information Science Commons Repository Citation Fiedler, B. P., Mitola, R., Cheng, J. (2020). Responding to Hate: How National and Local Incidents Sparked Action at the UNLV University Libraries. Reference Services Review 1-28. Emerald. http://dx.doi.org/10.1108/RSR-09-2019-0071 This Article is protected by copyright and/or related rights. It has been brought to you by Digital Scholarship@UNLV with permission from the rights-holder(s). You are free to use this Article in any way that is permitted by the copyright and related rights legislation that applies to your use. For other uses you need to obtain permission from the rights-holder(s) directly, unless additional rights are indicated by a Creative Commons license in the record and/or on the work itself. This Article has been accepted for inclusion in Library Faculty Publications by an authorized administrator of Digital Scholarship@UNLV. For more information, please contact [email protected]. Responding to Hate: How National and Local Incidents Sparked Action at the UNLV University Libraries Abstract Purpose: This paper describes how an academic library at one of the most diverse universities in the country responded to the 2016 election through the newly formed Inclusion and Equity Committee and through student outreach.

Why Is Junk Food So Addictive?

The ZINGER, Cal Young Middle School, Spring Term, 2019. Why is Junk Food so Addictive? By Laurel Bonham.
Have you ever gone to a chip bag just for one chip and come back with way more than one? You’re not the only one. 53.12% of Americans eat 1-3 cans of Pringles in 30 days! That’s over half of the people in the U.S.! Most people know that junk food is, well, junk. Even people who eat junk food aren’t fooled. They know it’s not healthy, but what they don’t know is that it has released something called dopamine in their brain. Dopamine is a chemical that is released in the brain when you “reward” yourself. You can “reward” yourself by doing pretty much anything. For example, when you go on your phone, that is a “reward” for your brain. Eating junk food is just as bad as tobacco is for the brain.
concentrated salt added to food to enhance flavor. MSG produces a savory but salty taste when added to food, which excites your taste buds and stimulates the release of brain chemicals called neurotransmitters. The pleasant taste of MSG and the release of neurotransmitters are thought to be the basis for mild levels of addiction. MSG makes food salty and it is cheap to make, so they can put more of it in, and it will make people buy more of it because it’s addictive. MSG is extremely bad for you. Some side effects of MSG are severe headaches, sweating excessively, muscle weakness, and numbness.
Why do holidays encourage eating

Red River Radio Ascertainment Files October 2017 – December 2017 STORY LOG – Chuck Smith, NEWS PRODUCER, RED RIVER RADIO

Red River Radio Ascertainment Files October 2017 – December 2017 STORY LOG – Chuck Smith, NEWS PRODUCER, RED RIVER RADIO
2498 University of Louisiana System Raises College Grad Goals (1:08). Aired: October 10, 2017. Interview: Jim Henderson, President - University of Louisiana System. Type: Interview Wrap
2499 La. Film Prize Wraps 6th Festival Season (3:28). Aired: October 11, 2017. Interview: Gregory Kallenberg, Exec. Dir.-LaFilmPrize, Shreveport, LA. Type: Interview Wrap
2500 Many Still Haven't Applied For La. 2016 Flood Recovery Funds (1:53). Aired: October 12, 2017. Interview: Pat Forbes, director for the Louisiana Office of Community Development. Type: Interview Wrap
2501 LSUS Pioneer Day Takes Us Back In Time This Saturday (3:28). Aired: October 13, 2017. Interview: Marty Young, Director – Pioneer Heritage Center, LSU-Shreveport. Type: Interview Wrap
2502 Share A Story With StoryCorps In Shreveport (2:11). Aired: October 16, 2017. Interview: Morgan Feigalstickles, Site Manager / StoryCorps. Type: Interview Wrap
2503 La. Coastal Restoration Projects $50 Billion Over 50 Years (2:15). Aired: Oct 17, 2017. Interview: Johny Bradberry, La. Coastal Protection and Restoration Authority. Type: Interview Wrap
2504 Selling Pumpkins To Support Charities in Shreveport-Bossier (2:44). Aired: Oct. 19, 2017. Interview: Janice Boller, Chairman – St. Luke’s Pumpkin Patch Committee. Type: Interview Wrap
2505 No Action From Bossier School Board Regarding Student Rights Allegations (1:54). Aired: Oct. 20, 2017. Interview: Charles Roads, South Texas College of Law / Houston, TX. Type: Interview Wrap
2506 Caddo Commission Votes For Confederate Monument Removal (1:08). Aired: Oct. 20, 2017. Interview: Lloyd Thompson, President - Caddo Parish NAACP. Type: Interview Wrap
2507 National Wildlife Refuge President Visits East Texas Wildlife Refuges (3:26). Aired: Oct 23, 2017. Interview: Geoffrey Haskett, President - National Wildlife Refuge Association. Type: Interview Wrap
2508 NW La.

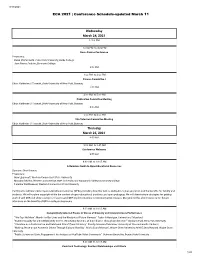

ECA 2021 | Conference Schedule-Updated March 11

Wednesday, March 24, 2021
12:00 PM to 4:30 PM: Basic Course Conference. Presenters: Dawn Pfeifer Reitz, Penn State University, Berks College; Jane Pierce Saulnier, Emerson College
3:00 PM to 4:00 PM: Finance Committee I. Chair: Katherine S Thweatt, State University of New York, Oswego
4:00 PM to 5:00 PM: Publication Committee Meeting. Chair: Katherine S Thweatt, State University of New York, Oswego
5:00 PM to 6:00 PM: Site Selection Committee Meeting. Chair: Katherine S Thweatt, State University of New York, Oswego
Thursday, March 25, 2021
8:00 AM to 8:45 AM: Conference Welcome
9:00 AM to 10:15 AM: A Dummies Guide to Open Educational Resources. Sponsor: Short Course. Presenters: Aura Lippincott, Western Connecticut State University; MaryAnn Murtha, Western Connecticut State University and Naugatuck Valley Community College; Caroline Waldbuesser, Western Connecticut State University. Participants will learn about open educational resources (OERs), including how this term is defined in higher education and the benefits for faculty and students. We will explore copyright within the context of open educational practices and open pedagogy. We will demonstrate strategies for getting started with OER and show examples of successful OER implementations in communication courses. Our goal for this short course is to educate attendees on the benefits of OER in college classrooms.
9:00 AM to 10:15 AM: Competitively Selected Papers in Voices of Diversity and Interpretation

Online Media and the 2016 US Presidential Election

Partisanship, Propaganda, and Disinformation: Online Media and the 2016 U.S. Presidential Election. The Harvard community has made this article openly available. Please share how this access benefits you. Your story matters.
Citation: Faris, Robert M., Hal Roberts, Bruce Etling, Nikki Bourassa, Ethan Zuckerman, and Yochai Benkler. 2017. Partisanship, Propaganda, and Disinformation: Online Media and the 2016 U.S. Presidential Election. Berkman Klein Center for Internet & Society Research Paper.
Citable link: http://nrs.harvard.edu/urn-3:HUL.InstRepos:33759251
Terms of Use: This article was downloaded from Harvard University’s DASH repository, and is made available under the terms and conditions applicable to Other Posted Material, as set forth at http://nrs.harvard.edu/urn-3:HUL.InstRepos:dash.current.terms-of-use#LAA
AUGUST 2017. PARTISANSHIP, PROPAGANDA, & DISINFORMATION: Online Media & the 2016 U.S. Presidential Election. Robert Faris, Hal Roberts, Bruce Etling, Nikki Bourassa, Ethan Zuckerman, Yochai Benkler.
ACKNOWLEDGMENTS This paper is the result of months of effort and has only come to be as a result of the generous input of many people from the Berkman Klein Center and beyond. Jonas Kaiser and Paola Villarreal expanded our thinking around methods and interpretation. Brendan Roach provided excellent research assistance. Rebekah Heacock Jones helped get this research off the ground, and Justin Clark helped bring it home. We are grateful to Gretchen Weber, David Talbot, and Daniel Dennis Jones for their assistance in the production and publication of this study. This paper has also benefited from contributions of many outside the Berkman Klein community. The entire Media Cloud team at the Center for Civic Media at MIT’s Media Lab has been essential to this research.