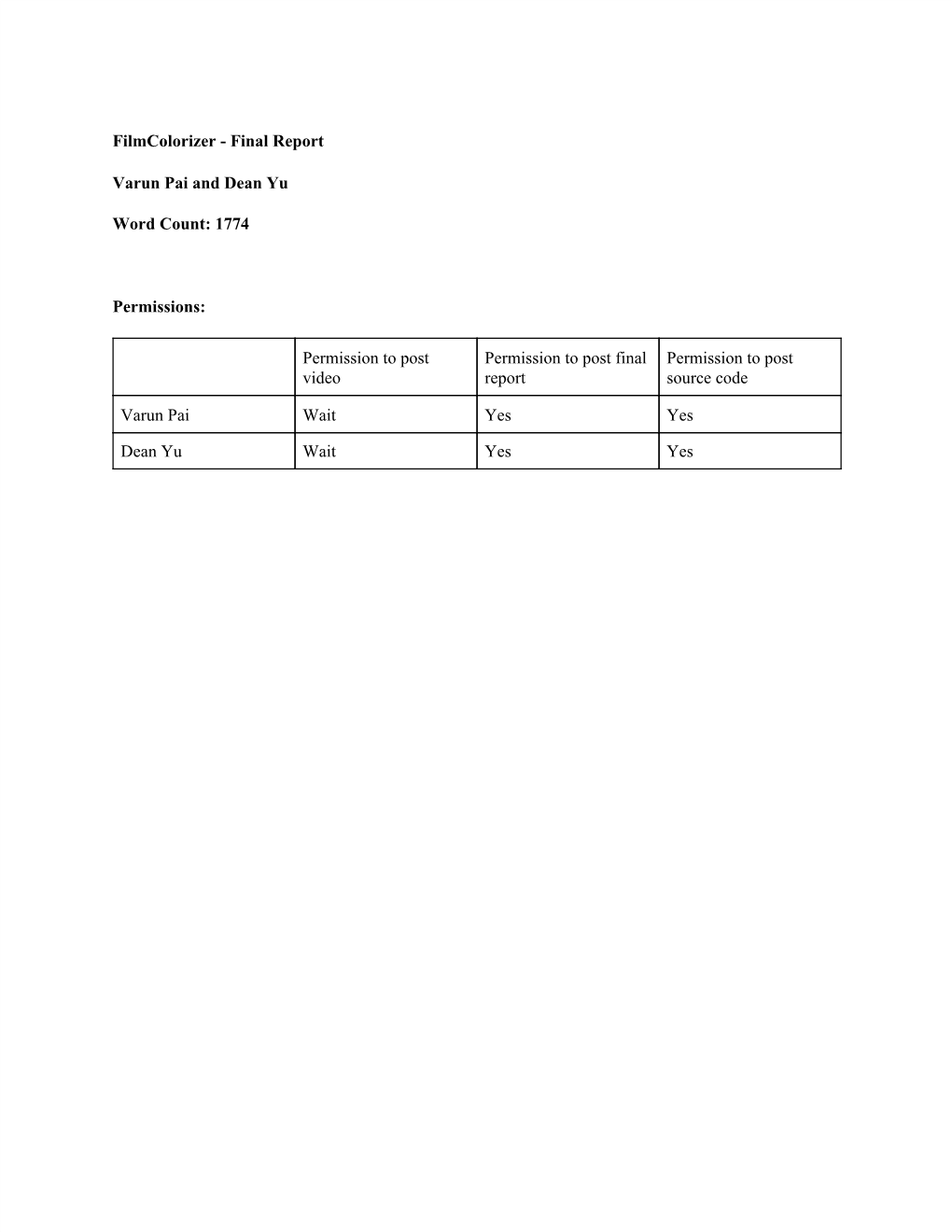

Filmcolorizer - Final Report

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Technological Alterations to Motion Pictures and Other Audiovisual Works

Loyola of Los Angeles Entertainment Law Review, Volume 10, Number 1, Article 1, 1-1-1990. Technological Alterations to Motion Pictures and Other Audiovisual Works: Implications for Creators, Copyright Owners, and Consumers—Report of the Register of Copyrights. Part of the Law Commons. Recommended Citation: Technological Alterations to Motion Pictures and Other Audiovisual Works: Implications for Creators, Copyright Owners, and Consumers—Report of the Register of Copyrights, 10 Loy. L.A. Ent. L. Rev. 1 (1989). Available at: https://digitalcommons.lmu.edu/elr/vol10/iss1/1 REPORT: TECHNOLOGICAL ALTERATIONS TO MOTION PICTURES AND OTHER AUDIOVISUAL WORKS: IMPLICATIONS FOR CREATORS, COPYRIGHT OWNERS, AND CONSUMERS. Report of the Register of Copyrights, March 1989, United States Copyright Office, Washington, D.C. TABLE OF CONTENTS: Executive Summary; Chapter 1: Introduction; Scope of the Copyright Office Study; Previous Copyright Office Actions; Issues Examined in this Report; Chapter 2: Copyright in the Motion Picture and Television Industries; Copyright Protection for Motion Pictures and Television Programs; International Conventions; Universal Copyright Convention; The Berne Convention for the Protection of Literary and Artistic Works -

NAFTA's Regime for Intellectual Property: In the Mainstream of Public International

NAFTA’S REGIME FOR INTELLECTUAL PROPERTY: IN THE MAINSTREAM OF PUBLIC INTERNATIONAL LAW* James A.R. Nafziger. TABLE OF CONTENTS: I. INTRODUCTION; II. TRANSFORMATION OF UNILATERAL MEASURES INTO PUBLIC INTERNATIONAL LAW; III. THE NAFTA REGIME; IV. DISPUTE RESOLUTION UNDER THE NAFTA; V. CONCLUSION. I. INTRODUCTION In this Decade of International Law, economic integration is undoubtedly the greatest achievement of global and regional communities. New institutions—particularly the World Trade Organization (WTO); the North American Free Trade Agreement (NAFTA); the Treaty Establishing * This article is based on remarks made during a panel presentation entitled Protection of Intellectual Property in International and National Law at a conference on The Role of International Law in the Americas: Rethinking National Sovereignty in an Age of Regional Integration, which was held in Mexico City, June 6–7, 1996, and was co-sponsored by the American Society of International Law and El Instituto de Investigaciones Jurídicas de la Universidad Nacional Autónoma de México. Joint copyright is held by the Houston Journal of International Law, the author, and El Instituto de Investigaciones Jurídicas de la Universidad -

Artistic Integrity, Public Policy and Copyright: Colorization Reduced To

Artistic Integrity, Public Policy and Copyright: Colorization Reduced to Black and White. [A]rt is a human activity having for its purpose the transmission to others of the highest and best feelings to which men have risen. I. INTRODUCTION Art reflects society. Each person in modern society, a society that prides itself on freedom of expression, interprets differently the beauty in art. Thus, when a musician composes a popular tune, a poet drafts a new verse, or a painter touches her brush to canvas, their creations are subject to criticism, debate, and often ridicule. Some artists choose faster tempos, shorter sentences, brighter pastels; others choose melodic tunes, complex clauses, or no color at all. These are all artistic choices. They characterize both the work and the artist himself. Despite opposing viewpoints, the art world respects the artistic intent of the work, condemning any modification based upon society's standards or an individual's preference. Quite often technology discovers ways we can "improve" the products of art. Synthesizers electronically alter instrumental sounds, spring floors lift the dancer higher and farther, and word processors cut an editor's work in half. These advances facilitate the artist's ability to create or perform; they aid the artist. However, with the recent evolution of colorization, technology has infringed upon the artist's creative intent and control. Now, computers can paint color into black and white motion pictures. This advance differs significantly from the former examples. Where these other advances may bolster or facilitate an artist's intent, the colorization process alters black and white films that were intended to remain colorless. -

Article Casting Call at Forest Lawn: the Digital Resurrection of Deceased Entertainers - a 21st Century Challenge for Intellectual Property Law Joseph J

ARTICLE. CASTING CALL AT FOREST LAWN: THE DIGITAL RESURRECTION OF DECEASED ENTERTAINERS - A 21ST CENTURY CHALLENGE FOR INTELLECTUAL PROPERTY LAW. JOSEPH J. BEARD. Table of Contents: I. INTRODUCTION; II. CREATION OF THE SYNTHETIC REPLICA - THE COPYRIGHT ISSUE; A. Scope of the Issue; B. Reproduction Rights; C. Derivative Work Rights; D. Distribution Rights; E. Performance Rights; F. Display Rights; G. Fair Use; II. COPYRIGHT/TRADE SECRET PROTECTION FOR THE REANIMATED ACTOR; A. Introduction; B. Copyright Protection; C. Trade Secret Protection -

History and Ethics of Film Restoration by Jeffrey Lauber a Thesis

History and Ethics of Film Restoration, by Jeffrey Lauber. A thesis submitted in partial fulfillment of the requirements for the degree of Master of Arts, Moving Image Archiving and Preservation Program, Department of Cinema Studies, New York University, May 2019. ABSTRACT Film restoration and its products have been subjects of scrutiny and debate since the dawn of the practice. The process inherently bears an innumerable quantity of unresolvable uncertainties, a characteristic which has sparked discussions amongst archivists, restorers, scholars, critics, and audiences alike about the ethical implications of restoring motion pictures. This thesis contends that it would be both impractical and unproductive to impose a rigid set of ethical principles on a practice which so inevitably relies on subjectivity. Instead, it devises a more holistic understanding of film restoration practice and ethics to work towards a conceptual framework for film restoration ethics. Drawing from theoretical discourse and real-world case studies, this thesis examines the ways in which restorers have confronted and mitigated uncertainties in their work, and explores the ethical implications of their decisions. As an understanding of the philosophical and economic motivations behind film restoration provides essential foundational knowledge for understanding the evolution of its ethical discourse, the thesis begins by charting a contextual history of the practice before exploring the discourse and its core ethical concerns. TABLE OF CONTENTS: Acknowledgements -

Congressional Limits on Technological Alterations to Film: The Public Interest and the Artists' Moral Right, by Janine V. McNally

COMMENT. CONGRESSIONAL LIMITS ON TECHNOLOGICAL ALTERATIONS TO FILM: THE PUBLIC INTEREST AND THE ARTISTS' MORAL RIGHT. BY JANINE V. McNALLY. Table of Contents: INTRODUCTION; I. COLORIZATION AND OTHER TECHNOLOGICAL ALTERATIONS TO FILM; A. Colorization; B. Letterboxing and Panning and Scanning; C. Lexiconning; D. Computer Generation of Images; II. THE PUBLIC INTEREST IN THE AUTHENTIC DISPLAY OF FILMS; A. U.S. Law: Film Preservation and Film Archives; B. International Precedents: National Cultural Identity and Film Display; III. ARTISTS' PROTECTIONS AGAINST ALTERATIONS TO REPRODUCTIONS: THE MORAL RIGHT; A. Gilliam v. American Broadcasting Co.; B. States Moral Rights Legislation; C. U.S. Adherence to the Berne Convention -

Low-Level Perceptual Features of Children's

LOW-LEVEL PERCEPTUAL FEATURES OF CHILDREN’S FILMS AND THEIR COGNITIVE IMPLICATIONS. A Dissertation Presented to the Faculty of the Graduate School of Cornell University in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy by Kaitlin L. Brunick, August 2014. © 2014 Kaitlin L. Brunick. Kaitlin L. Brunick, Ph.D., Cornell University 2014. Discussions of children’s media have previously focused on curriculum, content, or subjective formal features like pacing and style. The current work examines a series of low-level, objectively-quantifiable, and psychologically-relevant formal features in a sample of children’s films and a matched sample of adult-geared Hollywood films from the same period. This dissertation will examine average shot duration (ASD), shot structure (specifically, adherence of shot patterns to 1/f), visual activity (a combined metric of on-screen motion and movement), luminance, and two parameters of color (saturation and hue). These metrics are objectively quantified computationally and analyzed across films. Patterns in children’s formal film features are predicted reliably by two variables: the intended age of the film’s audience and the release year. More recent children’s films have shorter ASDs, less visual activity, and greater luminance than older children’s films. Luminance also reliably predicts character motivation (protagonist or antagonist) within films. Films for older children are reliably darker than films for younger children. When comparing the Hollywood sample to the children’s sample, children’s films are reliably more saturated and have higher ASDs than their Hollywood counterparts; however, these effects are largely driven by expected differences between animated and live action films rather than the intended audience of the film. -
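A minimal sketch of how three of the metrics named in the abstract above (average shot duration, luminance, and saturation) might be computed. It is illustrative only and is not taken from the dissertation: the function names are hypothetical, luminance is assumed to be a Rec. 709 weighted sum of RGB, and saturation is assumed to be the S channel of HSV as given by Python's standard `colorsys` module. Hue is left out because it is a circular quantity and would need a circular mean.

```python
import colorsys


def average_shot_duration(cut_times, film_length):
    """Mean shot length in seconds.

    cut_times: times (s) at which one shot ends and the next begins.
    N cuts divide the film into N + 1 shots.
    """
    return film_length / (len(cut_times) + 1)


def frame_color_stats(pixels):
    """Mean luminance and mean saturation of one frame.

    pixels: iterable of (r, g, b) tuples with components in 0..1.
    ASSUMPTION: Rec. 709 luma weights and HSV saturation; the
    dissertation excerpt does not say which definitions it uses.
    """
    pixels = list(pixels)
    n = len(pixels)
    luminance = sum(0.2126 * r + 0.7152 * g + 0.0722 * b
                    for r, g, b in pixels) / n
    saturation = sum(colorsys.rgb_to_hsv(r, g, b)[1]
                     for r, g, b in pixels) / n
    return luminance, saturation


if __name__ == "__main__":
    # Three cuts in a 60-second clip give four shots.
    print(average_shot_duration([10.0, 25.0, 40.0], 60.0))
    # A single pure-red pixel: fully saturated, fairly dark.
    print(frame_color_stats([(1.0, 0.0, 0.0)]))
```

Averaging per-frame values over a whole film would then give one number per film that can be compared across release years or audiences, which is the kind of comparison the abstract describes.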

Preserving Film Preservation from the Right of Publicity

CARDOZO LAW REVIEW de•novo. PRESERVING FILM PRESERVATION FROM THE RIGHT OF PUBLICITY 1. Christopher Buccafusco†, Jared Vasconcellos Grubow*, Ian J. Postman#. INTRODUCTION Newly available digital tools enable content producers to recreate or reanimate people’s likenesses, voices, and behaviors with almost perfect fidelity. We will have soon reached the point (if we haven’t already) when a movie studio could make an entire “live action” feature film without having to film any living actors. Computer generated images (CGI) could entirely replace the need for human beings to stand in front of cameras and recite lines. Digital animation raises a number of important legal and social issues, including labor relations between actors and movie studios, the creation and dissemination of fake news items, and the production of 1 Copyright 2018 by Christopher Buccafusco, Jared Vasconcellos Grubow, and Ian J. Postman. The authors are grateful for comments on an earlier draft from Jennifer Rothman and Rebecca Tushnet and for a helpful discussion of film restoration with Lee Kline. † Professor of Law, Director of the Intellectual Property + Information Law Program, Associate Dean for Faculty Development, Benjamin N. Cardozo School of Law, Yeshiva University. DISCLOSURE: Professor Buccafusco’s spouse, Penelope Bartlett, is an employee of The Criterion Collection, one of the major restorers and distributors of classic films. * Editor-in-Chief, Cardozo Law Review Volume 40, J.D. Candidate (June 2019), Benjamin N. Cardozo School of Law; B.M. Syracuse University Setnor School of Music, 2013. # Submissions Editor, Cardozo Law Review Volume 40, J.D. Candidate (June 2019), Benjamin N. Cardozo School of Law; B.S. -

Entertainment Law Reporter, Volume 16, Number 10, March 1995: French Appellate Court Rules That Colorization and Broadcast of the "Asphalt Jungle" Violated Moral Rights of John Huston and Ben Maddow

ENTERTAINMENT LAW REPORTER, VOLUME 16, NUMBER 10, MARCH 1995. RECENT CASES. French appellate court rules that colorization and broadcast of the "Asphalt Jungle" violated the moral rights of director John Huston and screenwriter Ben Maddow. An appellate court in Versailles, France, has ruled that the colorization and broadcast of the "Asphalt Jungle" violated the moral rights of the film's director and screenwriter, John Huston and Ben Maddow. The ruling came in a long-pending and closely watched case brought by Huston's heirs and by Maddow against Turner Entertainment Co. and France's Channel Five. The film, originally produced by MGM in black-and-white in 1950, was colorized by Turner following its acquisition of the MGM library in 1986. When television broadcast rights were licensed to Channel Five, Huston's heirs and Maddow filed suit in France. Early in the case, a French trial court enjoined the broadcast. But that ruling was overturned by an appellate court in Paris which held that U.S. law, rather than French law, determined who the "author" of the film was, and thus who owned moral rights in it. Under U.S. law, MGM was the film's "author"; and since Turner acquired MGM's rights, the Paris court ruled that Turner, rather than Huston and Maddow, owned whatever moral rights might be involved. As a result of that ruling, Channel Five broadcast the colorized version in 1989. Huston's heirs and Maddow, with the support of several French guilds, took the case to France's highest court, the Cour de Cassation, which reversed the Paris court of appeals and ruled in their favor. -

The Copyright Wars

© Copyright, Princeton University Press. No part of this book may be distributed, posted, or reproduced in any form by digital or mechanical means without prior written permission of the publisher. 1. The Battle between Anglo-American Copyright and European Authors’ Rights. Works are created by their authors, reproduced and distributed by their disseminators, and enjoyed by the audience. These three actors, each with their own concerns, negotiate a delicate dance. Most generally, all must be kept content: the author productive, the disseminator profitable, and the audience enlightened. Get the balance wrong and things fall out of kilter. If authors become too exacting, the audience suffers. If the disseminators are greedy or the audience miserly, culture and eventually the public domain desiccate. But within these extremes there is much room for adjustment. Will copyright laws take as their first task protecting authors? Or will they consider the audience and the public domain also as important? Seen historically, that has been the fundamental choice faced as copyright developed in the Anglo-American world and in the major continental European nations, France and Germany. Each position has much to recommend it: public enlightenment for one, nurturing high-quality culture for the other. Neither can exist alone. The choice between them has never been either/or but always a question of emphasis, a positioning along a spectrum. And yet the battle between these views has also been what the Germans call a Kulturkampf, a clash of ideologies and fundamental assumptions, that has stretched back well over two centuries. The laws governing how artists, writers, musicians, choreographers, directors, and other authors relate to their works are usually called “copyright” in English. -

Film Colorization, Using Artificial Neural Networks and Laws Filters

JOURNAL OF COMPUTERS, VOL. 5, NO. 7, JULY 2010, p. 1094. Film Colorization, Using Artificial Neural Networks and Laws Filters. Mohammad Reza Lavvafi, Department Computer, Islamic Azad University of Mahallat, Mahallat, Arak, Iran; S. Amirhassan Monadjemi and Payman Moallem, Department of Computer Engineering, Department of Electrical Engineering, Faculty of Engineering, University of Isfahan. Email: [email protected] Abstract—In this study a new artificial neural network based approach to automatic or semi-automatic colorization of black and white film footages is introduced. Different features of black and white images are tried as the input of a MLP neural network which has been trained to colorize the movie using its first frame as the ground truth. Amongst the features tried, e.g. position, relaxed position, luminance, and so on, we are most interested on the texture features namely the Laws filter responses, and what their performance would be in the process of colorization. Also, network parameter optimization, the effects of color reduction, and relaxed x-y position of pixels as the feature, are investigated in this study. Introduction (fragment): human operator in the colorization. While the ultimate goal is getting through a fully automatic, high precision, and robust colorization method of frame images. Wilson Markel was the first one who did colorize the black and white (B/W) movies in the early 80's. Since then, several manual or semi-automatic colorization methods have been developed for black and white movies and image colorization. In most of these methods, a black and white image is divided into some parts and then, the color is transferred to it through the source of color image, or human user will specify color of these -
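The Laws filter responses mentioned in the abstract above can be illustrated with a short sketch. This is not the paper's implementation: the excerpt does not say which masks, window sizes, or normalization the authors used, so the choice of four 5x5 masks, the helper names, and the use of the absolute response as the feature are all assumptions. The 1D kernels L5 (level), E5 (edge), and S5 (spot) are the standard Laws kernels; each 2D mask is the outer product of a vertical and a horizontal kernel.

```python
# Standard 1D Laws kernels of length 5.
L5 = [1, 4, 6, 4, 1]      # level (local average)
E5 = [-1, -2, 0, 2, 1]    # edge
S5 = [-1, 0, 2, 0, -1]    # spot


def outer(vertical, horizontal):
    """5x5 mask whose rows follow `vertical` and columns follow `horizontal`."""
    return [[v * h for h in horizontal] for v in vertical]


def filter_response(image, mask, y, x):
    """Correlate a 5x5 mask with the grayscale image, centred on (y, x).

    The caller must keep (y, x) at least 2 pixels away from every border.
    """
    return sum(mask[i][j] * image[y - 2 + i][x - 2 + j]
               for i in range(5) for j in range(5))


def texture_features(image, y, x):
    """Absolute Laws responses at one pixel (hypothetical feature set).

    ASSUMPTION: these four masks are chosen for illustration only.
    """
    masks = {
        "L5E5": outer(L5, E5),  # smooth vertically, detect horizontal change
        "E5L5": outer(E5, L5),  # detect vertical change, smooth horizontally
        "E5E5": outer(E5, E5),
        "S5S5": outer(S5, S5),
    }
    return {name: abs(filter_response(image, m, y, x))
            for name, m in masks.items()}


if __name__ == "__main__":
    # A 7x7 horizontal brightness ramp: intensity equals the column index.
    ramp = [[col for col in range(7)] for _ in range(7)]
    print(texture_features(ramp, 3, 3))
```

In an approach like the one the abstract describes, a per-pixel vector of such responses, together with features such as luminance and position, would be the input to the neural network, with the color of the corresponding pixel in the reference frame as the training target.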

Film Colorization and the 100th Congress Dan Renberg

Hastings Communications and Entertainment Law Journal, Volume 11, Number 3, Article 1, 1-1-1989. The Money of Color: Film Colorization and the 100th Congress. Dan Renberg. Part of the Communications Law Commons, Entertainment, Arts, and Sports Law Commons, and the Intellectual Property Law Commons. Recommended Citation: Dan Renberg, The Money of Color: Film Colorization and the 100th Congress, 11 Hastings Comm. & Ent. L.J. 391 (1989). Available at: https://repository.uchastings.edu/hastings_comm_ent_law_journal/vol11/iss3/1 The Money of Color: Film Colorization and the 100th Congress, by DAN RENBERG*. "The new technology in the service of the artist is wonderful, but in the service of people who are not the originators of the film, it is a weapon." Woody Allen on film colorization. Introduction During the late 1980's, numerous time-honored black-and-white films were altered through the process of colorization. Proponents of film colorization argue that the addition of color to black-and-white films revitalizes public interest in them. In contrast, some motion picture industry professionals assert that the colorization process "mutilates" a filmmaker's work. Meanwhile, classic film buffs are still recovering from the shock of seeing the national television debut in November 1988 of the colorized version of Casablanca, one of America's most highly-revered films. * A.B., Princeton University, 1986.