05Principalinvestigatorledmissio

Recommended publications

Mars Helicopter/Ingenuity

National Aeronautics and Space Administration. Mars Helicopter/Ingenuity. When NASA’s Perseverance rover lands on February 18, 2021, it will be carrying a passenger onboard: the first helicopter ever designed to fly in the thin Martian air. The Mars Helicopter, Ingenuity, is a small, autonomous aircraft that will be carried to the surface of the Red Planet attached to the belly of the Perseverance rover. Its mission is experimental in nature and completely independent of the rover’s science mission. In the months after landing, the helicopter will be placed on the surface to test – for the first time ever – powered flight in the thin Martian air. Its performance during these experimental test flights will help inform decisions relating to considering small helicopters for future Mars missions, where they could perform in a support role as robotic scouts, surveying terrain from above, or as full standalone science craft carrying instrument payloads. Taking to the air would give scientists a new perspective on a region’s geology and even allow them to peer into areas that are too steep or slippery to send a rover. In the distant future, they might even help astronauts explore Mars. The project is solely a demonstration of technology; it is not designed to support the Mars 2020/Perseverance mission, which is searching for signs of ancient life and collecting samples of rock and sediment in tubes for potential return to Earth by later missions. This illustration shows the Mars Helicopter Ingenuity on the surface of Mars. Key Objectives: • Prove powered flight in the thin atmosphere of Mars. Key Features: • Weighs 4 pounds (1.8 kg)

The Planetary Report

A Publication of The Planetary Society. Board of Directors: Carl Sagan, President; Director, Laboratory for Planetary Studies, Cornell University. Bruce Murray, Vice President; Professor of Planetary Science, California Institute of Technology. Louis Friedman, Executive Director. Norman R. Augustine, Chairman and CEO, Martin Marietta Corporation. John E. Bryson, Chairman and CEO, Southern California Edison. Joseph Ryan, O'Melveny & Myers. Steven Spielberg, director and producer. Board of Advisors: Diane Ackerman, poet and author. Buzz Aldrin, Apollo 11 astronaut. Richard Berendzen, educator and astrophysicist. Jacques Blamont, Chief Scientist, Centre National d'Etudes Spatiales, France. Ray Bradbury, poet and author. John M. Logsdon, Director, Space Policy Institute, George Washington University. Hans Mark, The University of Texas at Austin. James Michener, author. Marvin Minsky, Toshiba Professor of Media Arts and Sciences, Massachusetts Institute of Technology. Philip Morrison. A WARNING FROM YOUR EDITOR: You may soon be getting a phone call from me. No, I won't be asking you for donations or nagging you about a missed deadline (a common fear among planetary scientists). Instead, I will be asking for your opinion about The Planetary Report. Each month our computer will randomly select several members whom I will call to discuss the contents of our latest issue, or any other topic related to our publications. Bringing People Together Through Planetary Science (Page 13): Education has always been close to the hearts of Planetary Society members, and we have sponsored many programs to promote science education around the world. We've gathered together reports on three projects now completed and one just beginning. A Planetary Readers' Service (Page 16): For most of its history, the science we call

Build Your Own Mars Helicopter

Aeronautics Research Mission Directorate. Build Your Own Mars Helicopter. Suggested Grades: 3-8. Time: 30 minutes. Activity Overview: NASA is sending a helicopter to Mars! This helicopter is called Ingenuity and is designed to test whether or not flight is a good way to study distant bodies in space. We have sent spacecraft to other planets, but this is the first aircraft that will fly on another world. In this activity, you will learn about this amazing feat of engineering as you build your own Mars helicopter model. Materials: • 1 Large marshmallow • 4 Small marshmallows • 5 Toothpicks • Cardstock (to print out the last page of this document) • Scissors. Steps 1. The large marshmallow will represent Ingenuity’s fuselage. Ingenuity is fairly small, about 19 inches tall and weighing about 4 pounds, and the fuselage is about the size of a softball. The fuselage contains batteries, sensors, and cameras to power and control Ingenuity’s flight. It is insulated and has heaters to protect the equipment in the cold Martian environment where it can reach -130 °C at night. 2. Insert the four toothpicks into the marshmallow so they come out at angles as shown in Figure 1. The toothpicks represent the four hollow carbon-fiber legs on Ingenuity. Ingenuity’s legs are designed to be lightweight while still supporting the helicopter when it’s on the Martian surface. To take up less space, Ingenuity’s legs are folded while it’s being carried to Mars. Figure 1. Insert the four toothpicks into the marshmallow to represent Ingenuity's legs. 3.

Atmosphere of Freedom: 70 Years at the NASA Ames Research Center

Atmosphere of Freedom: 70 Years at the NASA Ames Research Center. 70th Anniversary Edition. Glenn E. Bugos. National Aeronautics and Space Administration, NASA History Office, Washington, D.C., 2010. NASA SP-2010-4314. Table of Contents: Foreword; Preface. Administrative History: DeFrance Aligns His Center with NASA; Harvey Allen as Director; Hans Mark; Clarence A. Syvertson; William F. Ballhaus, Jr.; Dale L. Compton; The Goldin Age; Moffett Field and Cultural Climate; Ken K. Munechika; Zero Base Review; Henry McDonald; G. Scott Hubbard; A Time of Transition; Simon “Pete” Worden; Once Again, Re-inventing NASA Ames; The Importance of Directors. Space Projects: Spacecraft Program Management; Early Spaceflight Experiments; Pioneers 6 to 9; Magnetometers; Pioneers 10 and 11; Pioneer Venus; Galileo Jupiter Probe; Lunar Prospector; Stardust; SOFIA; Kepler; LCROSS; Continuing Missions. Engineering Human Spacecraft: “…returning him safely to earth”; Reentry Test Facilities; The Apollo Program; Space Shuttle Technology; Return To Flight; Nanotechnology; Constellation. Planetary Sciences: Impact Physics and Tektites; Planetary Atmospheres and Airborne Science; Infrared Astronomy; Exobiology and Astrochemistry; Theoretical Space Science; Search for Extraterrestrial Intelligence; Near-Earth Objects; NASA Astrobiology Institute; Lunar Science. Space

The Logic of the Grail in Old French and Middle English Arthurian Romance

The Logic of the Grail in Old French and Middle English Arthurian Romance. Submitted in part fulfilment of the degree of Doctor of Philosophy. Martha Claire Baldon, September 2017. Table of Contents: Introduction; Introducing the Grail Quest; The Grail Narratives; Grail Logic; Medieval Forms of Argumentation; Literature Review; Narrative Structure and the Grail Texts; Conceptualising and Interpreting the Grail Quest; Chapter I: Hermeneutic Progression: Sight, Knowledge, and Perception; Introduction

Mars Science Laboratory Entry Capsule Aerothermodynamics and Thermal Protection System

Mars Science Laboratory Entry Capsule Aerothermodynamics and Thermal Protection System. Karl T. Edquist (757-864-4566), Brian R. Hollis (757-864-5247), NASA Langley Research Center, Hampton, VA 23681. Artem A. Dyakonov (757-864-4121), National Institute of Aerospace, Hampton, VA 23666. Bernard Laub (650-604-5017), Michael J. Wright (650-604-4210), NASA Ames Research Center, Moffett Field, CA 94035. Tomasso P. Rivellini (818-354-5919), Eric M. Slimko (818-354-5940), Jet Propulsion Laboratory, Pasadena, CA 91109. William H. Willcockson (303-977-5094), Lockheed Martin Space Systems Company, Littleton, CO 80125. Abstract: The Mars Science Laboratory (MSL) spacecraft is being designed to carry a large rover (> 800 kg) to the surface of Mars using a blunt-body entry capsule as the primary decelerator. The spacecraft is being designed for launch in 2009 and arrival at Mars in 2010. The combination of large mass and diameter with non-zero angle-of-attack for MSL will result in unprecedented convective heating environments caused by turbulence prior to peak heating. Navier-Stokes computations predict a large turbulent heating augmentation for which there are no supporting flight data and little ground data for validation. Table of Contents: 1. Introduction; 2. Computational Results; 3. Experimental Results; 4. TPS Testing and Model Development; 5. Summary; References; Biography.

Ingenuity Rules (And Flies) Brief Hover Demonstrates That Controlled Flight Is Possible in Thin Martian Atmosphere

Ingenuity rules (and flies). Brief hover demonstrates that controlled flight is possible in thin Martian atmosphere. The first flight of NASA’s Ingenuity Mars Helicopter was captured in this image from Mastcam-Z, a pair of zoomable cameras aboard NASA’s Perseverance Mars rover. The milestone marked the first powered, controlled flight on another planet. Credit: NASA / JPL-Caltech / ASU. After an eight-day delay due to a software glitch, NASA’s Ingenuity Mars helicopter has had its Wright Brothers moment. At about noon Mars time, yesterday, it lifted off the Martian surface, hovered about 3 metres above the ground, rotated 90 degrees and set back down. In the process, it even managed to take selfies of its shadow on the ground below it. Video of the flight taken from the Perseverance rover, which was standing off at a safe distance, looks so ordinary it almost seems banal… until you realise that this helicopter is flying on Mars, where the atmosphere is comparable to that high in the Earth’s stratosphere. The flight itself occurred at about 12:30am Pacific Time in the US (4:30pm Monday AEST), but due to complexities in the downlink to Earth, NASA’s mission crew wasn’t able to learn what had happened until several hours later. (The data had to be transferred from the helicopter to a base unit, then to the Perseverance rover, then to an orbiting satellite, and then to Earth – a slow process.) But when it came, the scientists and mission controllers were waiting in a conference room at NASA’s Jet Propulsion Laboratory.

SIRTF Gets Go-Ahead; Launch in Late 2001

Pasadena, California. Vol. 28, No. 7. April 3, 1998. SIRTF gets go-ahead. Design, development phase now under way; launch in late 2001. By MARY BETH MURRILL. NASA Administrator Dan Goldin last week authorized the start of work on the JPL-managed Space Infrared Telescope Facility (SIRTF), an advanced orbiting observatory that will give astronomers unprecedented views of phenomena in the universe that are invisible to other types of telescopes. The authorization signals the start of the design and development phase of the SIRTF project. Scheduled for launch in December 2001 on a Delta 7920-H rocket from Cape Canaveral, Fla., SIRTF represents the culmination of more than a decade of planning and design to develop an infrared space telescope with high sensitivity, low cost and long lifetime. "The Space Infrared Telescope Facility will do for infrared astronomy what the Hubble Space Telescope has done in its unveiling of the visible universe, and it will do it faster, better and cheaper than its predecessors," said Dr. Wesley Huntress, NASA's associate administrator for space science. "By sensing the heat given off by objects in space, this new observatory will see behind the cosmic curtains of dust particles that obscure much of the visible universe," Huntress said. MGS will target imaging areas. Attempts will include Pathfinder and Viking landing sites, Cydonia region. By DIANE AINSWORTH. JPL's Mars Global Surveyor project has resumed scientific observations of the surface of Mars and has scheduled opportunities to image four selected sites: the Viking 1 and 2 landing sites, the Mars Pathfinder landing site and the Cydonia region. Three opportunities to image each of the. Photo caption: Target areas for Mars Global Surveyor imaging include the landing sites of Pathfinder (near

NASA Advisory Council

National Aeronautics and Space Administration, Washington, DC. NASA ADVISORY COUNCIL. February 18-19, 2010. NASA Headquarters, Washington, DC. MEETING MINUTES. P. Diane Rausch, Executive Director. Kenneth M. Ford, Chair. Meeting Report. TABLE OF CONTENTS: Announcements and Opening Remarks; NASA Administrator Remarks; The President's FY 2011 Budget Request for NASA; NASA Exploration Update; Aeronautics Committee Report; Audit, Finance & Analysis Committee Report; Commercial Space Committee Report; Education & Public Outreach Committee Report; Non-Traditional International Partnerships; IT Infrastructure Committee Report; Science Committee Report; Space Operations Committee Report; Technology & Innovation Committee Report; Council Roundtable Discussion; Appendix A: Agenda; Appendix B: Council Membership; Appendix C: Meeting Attendees; Appendix D: List of Presentation Material. Meeting Report Prepared By: David J. Frankel. February 18, 2010. Announcements and Opening Remarks. Ms. Diane Rausch, NASA Advisory Council (NAC) Executive Director, called the meeting to order and welcomed the NAC members and attendees to Washington, DC, and NASA Headquarters. She reminded everyone that the meeting was open to the public and held in accordance with the Federal Advisory Committee Act (FACA) requirements. All comments and discussions should be considered to be on the record. The meeting minutes will be taken by Mr. David Frankel, and will be posted to the NAC website: www.nasa.gov/offices/nac/, shortly after the meeting.

RTG Impact Response to Hard Landing During Mars Environmental Survey (MESUR) Mission

RTG IMPACT RESPONSE TO HARD LANDING DURING MARS ENVIRONMENTAL SURVEY (MESUR) MISSION. A. Schock M. Mukunda Space. ABSTRACT: The National Aeronautics and Space Administration (NASA) is studying a seven-year robotic mission (MESUR, Mars Environmental Survey) for the seismic, meteorological, and geochemical exploration of the Martian surface by means of a network of ~16 small, inexpensive landers spread from pole to pole. To permit operation at high Martian latitudes, NASA has tentatively decided to power the landers with small RTGs (Radioisotope Thermoelectric Generators). To support the NASA mission study, the Department of Energy's Office of Special Applications commissioned Fairchild to perform specialized RTG design studies. Those studies indicated that the cost and complexity of the mission could be significantly reduced if the RTGs had sufficient impact resistance to survive ground impact of the landers without retrorockets. Fairchild designs of RTGs configured for high impact resistance were reported previously. Since the simultaneous operation of a large number of landers over a long period of time is required, the landers must be capable of long life. They must be simple so that a large number can be sent at affordable cost, and yet rugged and robust in order to survive a wide range of landing and environmental conditions. NASA has baselined the use of Radioisotope Thermoelectric Generators (RTGs) to power the probe, lander, and scientific instruments. Considerations favoring the use of RTGs are their applicability at both low and high Martian latitudes, their ability to operate during and after Martian sandstorms, and their ability to withstand Martian ground impacts at high velocities and g-loads. High impact resistance of the RTGs can be of critical importance in reducing the complexity and cost of the

Out for a Spin Activity

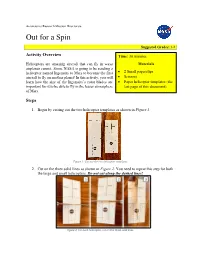

Aeronautics Research Mission Directorate. Out for a Spin. Suggested Grades: 3-8. Time: 30 minutes. Activity Overview: Helicopters are amazing aircraft that can fly in ways airplanes cannot. Soon, NASA is going to be sending a helicopter named Ingenuity to Mars to become the first aircraft to fly on another planet! In this activity, you will learn why the size of Ingenuity’s rotor blades is important for it to be able to fly in the thinner atmosphere of Mars. Materials: • 2 Small paperclips • Scissors • Paper helicopter templates (the last page of this document). Steps 1. Begin by cutting out the two helicopter templates as shown in Figure 1. Figure 1. Cut out the two helicopter templates. 2. Cut on the three solid lines as shown in Figure 2. You need to repeat this step for both the large and small helicopters. Do not cut along the dashed lines! Figure 2. For each helicopter, cut on the three solid lines. 3. For each helicopter: Fold the flaps labelled X and Y on the dashed lines toward the back (see Figure 3). Run your finger down the fold to make sure it is a tight fold. Figure 3. Fold flaps X and Y toward the back of the helicopter. Your helicopters should now look like Figure 4. Figure 4. Once the flaps are folded, your helicopters should look like this. 4. For each helicopter: Fold flap Z toward the back as shown in Figure 5. Run your finger along the fold to make sure it is a tight fold. Figure 5.

Bangor University DOCTOR of PHILOSOPHY Representations Of

Bangor University. DOCTOR OF PHILOSOPHY. Representations of the grail quest in medieval and modern literature. Ropa, Anastasija. Award date: 2014. Awarding institution: Bangor University. Representations of the Grail Quest in Medieval and Modern Literature. Anastasija Ropa. In fulfilment of the requirements of the degree of Doctor of Philosophy of Bangor University. Bangor University, 2014. Abstract: This thesis explores the representation and meaning of the Grail quest in medieval and modern literature, using the methodologies of historically informed criticism and feminist criticism. In the thesis, I consider the themes of death, gender relations and history in two medieval romances and three modern novels in which the Grail quest is the structuring motif. Comparing two sets of texts coming from different historical periods enhances our understanding of each text, because not only are the modern texts influenced by their medieval precursors, but also our perception of medieval Grail quest romances is modified by modern literature.