Building Highly Scalable Enterprise File Serving Solutions

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


The Title Title: Subtitle March 2007

The Title Title: Subtitle March 2007

Copyright © 2006-2007 BSD Certification Group, Inc. Permission to use, copy, modify, and distribute this documentation for any purpose with or without fee is hereby granted, provided that the above copyright notice and this permission notice appear in all copies. THE DOCUMENTATION IS PROVIDED "AS IS" AND THE AUTHOR DISCLAIMS ALL WARRANTIES WITH REGARD TO THIS DOCUMENTATION INCLUDING ALL IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS. IN NO EVENT SHALL THE AUTHOR BE LIABLE FOR ANY SPECIAL, DIRECT, INDIRECT, OR CONSEQUENTIAL DAMAGES OR ANY DAMAGES WHATSOEVER RESULTING FROM LOSS OF USE, DATA OR PROFITS, WHETHER IN AN ACTION OF CONTRACT, NEGLIGENCE OR OTHER TORTIOUS ACTION, ARISING OUT OF OR IN CONNECTION WITH THE USE OR PERFORMANCE OF THIS DOCUMENTATION. NetBSD and pkgsrc are registered trademarks of the NetBSD Foundation, Inc. FreeBSD is a registered trademark of the FreeBSD Foundation.

Contents
Introduction
1 Installing and Upgrading the OS and Software
1.1 Recognize the installation program used by each operating system
1.2 Recognize which commands are available for upgrading the operating system
1.3 Understand the difference between a pre-compiled binary and compiling from source
1.4 Understand when it is preferable to install a pre-compiled binary and how to do so
1.5 Recognize the available methods for compiling a customized binary
1.6 Determine what software is installed on a system
1.7 Determine which software requires upgrading
1.8 Upgrade installed software
1.9 Determine which software have outstanding security advisories -

Disk Array Data Organizations and RAID

Guest Lecture for 15-440: Disk Array Data Organizations and RAID. October 2010, Greg Ganger ©

Plan for today: Why have multiple disks? (storage capacity, performance capacity, reliability). Load distribution problem and approaches (disk striping). Fault tolerance (replication, parity-based protection). "RAID" and the Disk Array Matrix. Rebuild.

Why multi-disk systems? A single storage device may not provide enough storage capacity, performance capacity, or reliability. So, what is the simplest arrangement?

Just a bunch of disks (JBOD): [Figure: blocks A0-A3, B0-B3, C0-C3, D0-D3 laid out on the disks] Yes, it's a goofy name; industry really does sell "JBOD enclosures".

Disk Subsystem Load Balancing: I/O requests are almost never evenly distributed. Some data is requested more than other data. Depends on the apps, usage, time, … What is the right data-to-disk assignment policy? Common approach: fixed data placement. Your data is on disk X, period! For good reasons too: you bought it or you're paying more … Fancy: dynamic data placement. If some of your files are accessed a lot, the admin (or even system) may separate the "hot" files across multiple disks. In this scenario, entire file systems (or even files) are manually moved by the system admin to specific disks. -

Identify Storage Technologies and Understand RAID

LESSON 4.1_4.2 98-365 Windows Server Administration Fundamentals

Identify Storage Technologies and Understand RAID

Lesson Overview. In this lesson, you will learn: local storage options, network storage options, and Redundant Array of Independent Disks (RAID) options.

Anticipatory Set. List three different RAID configurations. Which of these three bus types has the fastest transfer speed?
o Parallel ATA (PATA)
o Serial ATA (SATA)
o USB 2.0

Local Storage Options. Local storage options can range from a simple single disk to a Redundant Array of Independent Disks (RAID). Local storage options can be broken down into bus types:
o Serial Advanced Technology Attachment (SATA)
o Integrated Drive Electronics (IDE, now called Parallel ATA or PATA)
o Small Computer System Interface (SCSI)
o Serial Attached SCSI (SAS)

Local Storage Options. SATA drives have taken the place of the traditional PATA drives. SATA drives have several advantages over PATA:
o Reduced cable bulk and cost
o Faster and more efficient data transfer
o Hot-swapping technology

Local Storage Options (continued). SAS drives have taken the place of the traditional SCSI and Ultra SCSI drives in server class machines. SAS have several -

• RAID, an Acronym for Redundant Array of Independent Disks Was Invented to Address Problems of Disk Reliability, Cost, and Performance

RAID
• RAID, an acronym for Redundant Array of Independent Disks, was invented to address problems of disk reliability, cost, and performance.
• In RAID, data is stored across many disks, with extra disks added to the array to provide error correction (redundancy).
• The inventors of RAID, David Patterson, Garth Gibson, and Randy Katz, provided a RAID taxonomy that has persisted for a quarter of a century, despite many efforts to redefine it.

RAID 0: Striped Disk Array
• RAID Level 0 is also known as disk striping
– Data is written in blocks across the entire array.
• Recommended Uses:
– Video/image production/editing
– Any app requiring high bandwidth
– Good for non-critical storage of data that needs to be accessed at high speed
• Good performance on reads and writes
• Simple design, easy to implement
• No fault tolerance (no redundancy)
• Not reliable

RAID 1: Mirroring
• RAID Level 1, also known as disk mirroring, provides 100% redundancy and good performance.
– Two matched sets of disks contain the same data.
• Recommended Uses:
– Accounting, payroll, financial
– Any app requiring high reliability (mission critical storage)
• For best performance, controller should be able to do concurrent reads/writes per mirrored pair
• Very simple technology
• Storage capacity cut in half
• S/W solutions often do not allow "hot swap"
• High disk overhead, high cost

RAID 2: Bit-level Hamming Code ECC Parity
• A RAID Level 2 configuration consists of a set of data drives and a set of Hamming code drives.
– Hamming code drives provide error correction for the data drives. -

Architectures and Algorithms for On-Line Failure Recovery in Redundant Disk Arrays

Architectures and Algorithms for On-Line Failure Recovery in Redundant Disk Arrays. Draft copy submitted to the Journal of Distributed and Parallel Databases. A revised copy is published in this journal, vol. 2 no. 3, July 1994.

Mark Holland, Department of Electrical and Computer Engineering, Carnegie Mellon University, 5000 Forbes Ave., Pittsburgh, PA 15213-3890, (412) 268-5237. Garth A. Gibson, School of Computer Science, Carnegie Mellon University, 5000 Forbes Ave., Pittsburgh, PA 15213-3890, (412) 268-5890. Daniel P. Siewiorek, School of Computer Science, Carnegie Mellon University, 5000 Forbes Ave., Pittsburgh, PA 15213-3890, (412) 268-2570.

Abstract. The performance of traditional RAID Level 5 arrays is, for many applications, unacceptably poor while one of its constituent disks is non-functional. This paper describes and evaluates mechanisms by which this disk array failure-recovery performance can be improved. The two key issues addressed are the data layout, the mapping by which data and parity blocks are assigned to physical disk blocks in an array, and the reconstruction algorithm, which is the technique used to recover data that is lost when a component disk fails. The data layout techniques this paper investigates are variations on the declustered parity organization, a derivative of RAID Level 5 that allows a system to trade some of its data capacity for improved failure-recovery performance. Parity declustering improves the failure-mode performance of an array significantly, and a parity-declustered architecture is preferable to an equivalent-size multiple-group RAID Level 5 organization in environments where failure-recovery performance is important. -

Techsmart Representatives

Wave TechSmart representatives. RAID BASICS: ARE YOUR SECURITY SOLUTIONS FAULT TOLERANT?

Redundant Array of Independent Disks (RAID) is a storage technology used to improve the processing capability of storage systems. This technology is designed to provide reliability in disk array systems and to take advantage of the performance gains offered by an array of multiple disks over single-disk storage.

RAID's two primary underlying concepts are (1) that distributing data over multiple hard drives improves performance and (2) that using multiple drives properly allows for any one drive to fail without loss of data and without system downtime. In the event of a disk failure, disk access will continue normally and the failure will be transparent to the host system.

Originally designed and implemented for SCSI drives, RAID principles have been applied to SATA and SAS drives in many video systems.

Enclosure: The "box" which contains the controller, drives/drive trays and bays, power supplies, and fans is called an "enclosure." The enclosure includes various controls, ports, and other features used to connect the RAID to a host, for example.

Wave Representatives has experience with both high-performance computing and enterprise storage, providing solutions to large financial institutions to research laboratories. The security industry adopted superior computing and storage technologies after the transition from analog systems to IP based networks. This evolution has created robust and resilient systems that can handle high bandwidth from video surveillance solutions to availability for access control and emergency communications.

Redundancy of any system, especially of components that have a lower tolerance in MTBF, makes sense. -

RAID: Begin with the Basics

RAID: Begin With The Basics

What is RAID? RAID stands for Redundant Array of Inexpensive Disks. RAID is a method of logically treating several hard drives as one unit. It can offer fault tolerance and higher throughput levels than a single hard drive or group of independent hard drives.

Why Do We Need It? RAID provides real-time data recovery when a hard drive fails, increasing system uptime and availability while protecting against loss of data. Multiple drives working together also increase system performance.

How Does RAID Work? RAID increases data protection and performance by duplicating and/or spreading data over multiple disks.
Mirroring: duplicates data from primary drive to secondary drive.
Mirroring & Striping: mirrors data that is striped, spread evenly across multiple disks.

Levels of RAID (RAID level: description; minimum number of drives; benefit)
RAID 0: Data striping (no data protection); 2; highest performance
RAID 1: Disk mirroring; 2; highest data protection
RAID 1E: Disk mirroring; 3; highest data protection for an odd number of disks
RAID 5: Data striping with distributed parity; 3; best cost/performance balance for multi-drive environments
RAID 5EE: Data striping with distributed parity with hotspare integrated into the array; 4; the cost/performance balance of RAID 5 without setting aside a dedicated hotspare disk
RAID 6: Data striping with dual distributed parity; 4; highest fault tolerance with the ability to survive two disk failures
RAID 10: Data -

Chapter 1 : " Introduction "

Chapter 1: "Introduction". This chapter will introduce you to your new Disk Array's features and provide information on general RAID concepts.

1-1 Introduction: Features. This section provides an overview of the features. For more detailed information, please refer to the technical specifications appendix at the end of this manual. Your Disk Array includes the following features:

Easy Operation. As everyone knows, conventional Disk Arrays are designed for experienced computer specialists. To solve complicated and time-consuming operating procedures, we came up with a revolutionary idea: Innovative Plug And Play RAID. As compared to a conventional Disk Array's long-winded setup procedures, your Disk Array can be ready to go after using the simple step-by-step built-in setup program.

Ultra High Performance. Your Disk Array combines an extremely high speed microprocessor with the latest chip set, SCSI hardware technology, perfect firmware and an artistic design. The result is one of the fastest, most reliable Disk Array systems on the market.

Supports virtually all popular operating systems, platforms and network environments because it works independently from the O.S.

Ultra 160 LVD SCSI channel interface to your host computer: a data transfer rate of up to 160 MB/s provides the processing and access power for you to handle complex and large files.

Selectable SCSI ID 0 ~ 14, with active termination support.

Tagged-command queuing: allows processing of up to 255 simultaneous data requests.

Selectable RAID levels 0, 1, 0+1, 3 or 5.

Built-in 128 MB cache memory, expandable up to 512 MB.

Serial communication port (Terminal Port) permits array controller operation through a standard VT100 terminal (or equivalent). -

Storage Administration Guide: SUSE Linux Enterprise Server 15

SUSE Linux Enterprise Server 15 Storage Administration Guide. Provides information about how to manage storage devices on a SUSE Linux Enterprise Server. Publication Date: September 24, 2021. SUSE LLC, 1800 South Novell Place, Provo, UT 84606, USA. https://documentation.suse.com

Copyright © 2006-2021 SUSE LLC and contributors. All rights reserved. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or (at your option) version 1.3; with the Invariant Section being this copyright notice and license. A copy of the license version 1.2 is included in the section entitled "GNU Free Documentation License". For SUSE trademarks, see https://www.suse.com/company/legal/ . All other third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its affiliates. Asterisks (*) denote third-party trademarks. All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its affiliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof.

Contents
About This Guide
1 Available Documentation
2 Giving Feedback
3 Documentation Conventions
4 Product Life Cycle and Support (Support Statement for SUSE Linux Enterprise Server; Technology Previews)
I FILE SYSTEMS AND MOUNTING
1 Overview of File Systems -

A Primer on Raid Levels: Standard Raid Levels Defined

A PRIMER ON RAID LEVELS: STANDARD RAID LEVELS DEFINED

RAID 0: Disk striping
Description: Data is striped across two or more disks in the array.
Performance: Since more writers and readers can access bits of data at the same time, performance can be improved.
Upside: Performance.
Downside: No redundancy. One disk failure will result in lost data.
Application(s): Applications that can access data from more sources than just the RAID 0 storage array.

RAID 1: Disk mirroring
Description: Data is written to two or more disks. There is no master or primary; the disks are peers.
Performance: Read operations are fast: while both disks are operational, data can be read from both disks at the same time. Write operations, however, are slower because every write operation is done twice.
Upside: If one disk fails, the second keeps running with no interruption of data availability.
Downside: Cost. Requires twice as much disk space for mirroring.
Application(s): Applications that require high performance and high availability, including transactional applications, email, and operating systems.

RAID 3: Virtual disk blocks
Description: Byte-level striping across multiple disks. An additional disk is added that stores parity information calculated from the data disks.
Performance: Since every write touches multiple disks, every write slows performance.
Upside: Data remains fully available if a disk fails.
Downside: Performance.
Application(s): Good for sequential processing applications such as video imaging.

RAID 4: Dedicated parity disk
Description: Block-level striping across multiple disks with a cache added to increase read and write performance.
Performance: Striping blocks instead of bytes and adding a cache increases performance over RAID 3. -
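The RAID 3 entry in this primer says data stays available after a disk failure thanks to the parity disk, but the excerpt does not say how the parity is computed. The following Python sketch assumes bytewise XOR parity, which is the usual choice for single-parity arrays; the function names `xor_blocks` and `rebuild` are hypothetical and chosen only for illustration.

```python
from functools import reduce


def xor_blocks(blocks):
    """Return the bytewise XOR of a list of equal-length byte blocks."""
    # zip(*blocks) yields one tuple of byte values per byte position.
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def rebuild(surviving_blocks, parity_block):
    """Recover the single missing data block (assumes XOR parity).

    XOR-ing the parity with every surviving block cancels their
    contributions and leaves the contents of the missing block.
    """
    return xor_blocks(list(surviving_blocks) + [parity_block])


# Three data disks, each holding one two-byte block, plus one parity disk.
data = [b"\x01\x02", b"\x10\x20", b"\xff\x00"]
parity = xor_blocks(data)

# Simulate losing the second disk and rebuilding it from the rest.
recovered = rebuild([data[0], data[2]], parity)
```

Under this assumption, a write must update both the data block and the parity block, which is consistent with the primer's remark that every write touches multiple disks.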

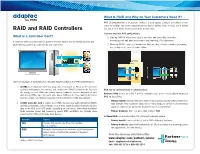

RAID and RAID Controllers

RAID and RAID Controllers

What is RAID and Why do Your Customers Need it? RAID (Redundant Array of Inexpensive Disks) is a data storage structure that allows a data center to combine two or more physical storage devices (HDDs, SSDs, or both) into a logical unit that is seen by the attached system as a single drive. There are two basic RAID configurations:
1. Striping (RAID 0) writes some data to one drive and some data to another, minimizing read and write access times and improving I/O performance.
2. Mirroring (RAID 1) copies all information from one drive directly to another, preventing loss of data in the event of a drive failure.

[Figure: drive diagrams. Mirroring: duplicates data from primary drive to secondary drive. Mirroring & Striping: mirrors data that is striped, spread evenly across multiple disks.]

RAID can be hardware-based or software-based.

What is a Controller Card? A controller card is a device that sits between the host system and the storage system, and allows the two systems to communicate with each other. There are two types of controller cards: Host Bus Adapters (HBAs) and RAID controller cards.
1. An HBA is an expansion card that plugs into a slot (such as PCI-e) on the computer system's motherboard and provides fast, reliable non-RAID I/O between the host and the storage devices. -

Raid Cheat Sheet AJA Sales Team

RAID Cheat Sheet, AJA Sales Team

RAID (Redundant Array of Independent Disks). The purpose of a RAID array is to increase data reliability and performance. When hard drives are running together in a RAID array, depending on the RAID configuration or "level", drives can instantaneously store redundant copies of your data and/or increase data read speed by spreading out smaller blocks of data across multiple drives.

Some basic definitions:

Data striping is the technique of segmenting logically sequential data, such as a single file, so that segments can be assigned to multiple physical devices.

A parity bit is a bit that is added to ensure that the number of bits with the value one in a set of bits is even or odd. Parity bits are used as the simplest form of error detection code.

Disk mirroring is the replication of logical disk volumes onto separate physical hard disks in real time to ensure continuous availability.

Hamming code is a linear error-correcting code named after its inventor, Richard Hamming. Hamming codes can detect up to two simultaneous bit errors, and correct single-bit errors; thus, reliable communication is possible when the Hamming distance between the transmitted and received bit patterns is less than or equal to one. By contrast, the simple parity code cannot correct errors, and can only detect an odd number of errors.

Some of the most common RAID levels are:

RAID 0: Striped set without parity, or striping. Data is distributed across an array of drives to improve speed. RAID 0 does not back up your data like other arrays, so if a single drive fails then all data on the array would be lost.
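The definitions of data striping and the parity bit in this cheat sheet can be made concrete with a short Python sketch. It is only an illustration: the function names (`stripe`, `unstripe`, `even_parity_bit`, `parity_ok`), the round-robin block placement, and the choice of even parity are assumptions for this example, not something the cheat sheet specifies.

```python
def stripe(data: bytes, n_drives: int, block_size: int) -> list:
    """Split data into fixed-size blocks and deal them round-robin to drives."""
    drives = [[] for _ in range(n_drives)]
    for offset in range(0, len(data), block_size):
        block_index = offset // block_size
        drives[block_index % n_drives].append(data[offset:offset + block_size])
    return drives


def unstripe(drives: list) -> bytes:
    """Reassemble the data by reading blocks back in round-robin order."""
    blocks = []
    rows = max(len(drive) for drive in drives)
    for row in range(rows):
        for drive in drives:
            if row < len(drive):
                blocks.append(drive[row])
    return b"".join(blocks)


def even_parity_bit(bits: list) -> int:
    """Return the bit that makes the total number of ones even."""
    return sum(bits) % 2


def parity_ok(bits_with_parity: list) -> bool:
    """Check even parity; an odd number of flipped bits makes this False."""
    return sum(bits_with_parity) % 2 == 0
```

For example, `stripe(b"ABCDEFGH", 2, 2)` places blocks `AB` and `EF` on the first drive and `CD` and `GH` on the second. With no redundancy, losing either drive leaves `unstripe` with nothing to reconstruct the missing blocks from, which is the RAID 0 weakness described above. The parity check flags a single flipped bit but passes when two bits flip, in line with the statement that simple parity only detects an odd number of errors.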