Techsmart Representatives

Recommended publications

VIA RAID Configurations

The motherboard includes a high-performance IDE RAID controller integrated in the VIA VT8237R southbridge chipset. It supports RAID 0, RAID 1, and JBOD with two independent Serial ATA channels.

RAID 0 (data striping) optimizes two identical hard disk drives to read and write data in parallel, interleaved stacks. The two disks do the same work as a single drive, but at up to double the sustained data transfer rate of a single disk, which improves data access and storage. This setup requires two new identical hard disk drives.

RAID 1 (data mirroring) copies and maintains an identical image of the data from one drive on a second drive. If one drive fails, the disk array management software directs all applications to the surviving drive, which contains a complete copy of the data on the failed drive. This configuration provides data protection and increases the fault tolerance of the entire system. Use two new drives, or an existing drive and a new drive, for this setup. The new drive must be the same size as or larger than the existing drive.

JBOD (spanning) stands for Just a Bunch of Disks and refers to hard disk drives that are not yet configured as a RAID set. This configuration concatenates multiple disks so that they appear as a single disk to the operating system. Spanning does not deliver any advantage over using separate disks independently and provides neither fault tolerance nor RAID performance benefits.

If you use the Windows® XP or Windows® 2000 operating system (OS), first copy the RAID driver from the support CD to a floppy disk before creating RAID configurations.
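The interleaving that RAID 0 performs can be illustrated with a small address-mapping sketch. This is not the VIA controller's actual firmware logic; the function name `raid0_locate`, the `stripe_blocks` parameter, and the block-granular layout are assumptions made for illustration only.

```python
def raid0_locate(logical_block: int, num_disks: int = 2,
                 stripe_blocks: int = 1) -> tuple[int, int]:
    """Map a logical block number to (disk index, block offset on that disk).

    Hypothetical layout: consecutive stripes of `stripe_blocks` blocks are
    assigned to the disks in round-robin order.
    """
    # Which stripe the block falls in, and its position inside that stripe.
    stripe_index, within = divmod(logical_block, stripe_blocks)
    # Stripes rotate across the disks.
    disk = stripe_index % num_disks
    # Number of complete stripes already stored on that disk.
    row = stripe_index // num_disks
    return disk, row * stripe_blocks + within


if __name__ == "__main__":
    for block in range(6):
        print(block, raid0_locate(block))
```

With two disks and one-block stripes, even-numbered blocks land on disk 0 and odd-numbered blocks on disk 1, which is why sequential transfers can proceed on both drives at once.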

D:\Documents and Settings\Steph

P45D3 Platinum Series, MS-7513 (v1.X) Mainboard, G52-75131X1

Copyright Notice: The material in this document is the intellectual property of MICRO-STAR INTERNATIONAL. We take every care in the preparation of this document, but no guarantee is given as to the correctness of its contents. Our products are under continual improvement and we reserve the right to make changes without notice.

Trademarks: All trademarks are the properties of their respective owners. NVIDIA, the NVIDIA logo, DualNet, and nForce are registered trademarks or trademarks of NVIDIA Corporation in the United States and/or other countries. AMD, Athlon™, Athlon™ XP, Thoroughbred™, and Duron™ are registered trademarks of AMD Corporation. Intel® and Pentium® are registered trademarks of Intel Corporation. PS/2 and OS®/2 are registered trademarks of International Business Machines Corporation. Windows® 95/98/2000/NT/XP/Vista are registered trademarks of Microsoft Corporation. Netware® is a registered trademark of Novell, Inc. Award® is a registered trademark of Phoenix Technologies Ltd. AMI® is a registered trademark of American Megatrends Inc.

Revision History: V1.0, first release for PCB 1.X (P45D3 Platinum), March 2008.

Technical Support: If a problem arises with your system and no solution can be obtained from the user's manual, please contact your place of purchase or local distributor. Alternatively, please try the following help resources for further guidance. Visit the MSI website for FAQ, technical guide, BIOS updates, driver updates, and other information: http://global.msi.com.tw/index.php?func=faqIndex. Contact our technical staff at: http://support.msi.com.tw/

Safety Instructions 1.

The Title Title: Subtitle March 2007

The Title Title: Subtitle, March 2007

Copyright © 2006-2007 BSD Certification Group, Inc.

Permission to use, copy, modify, and distribute this documentation for any purpose with or without fee is hereby granted, provided that the above copyright notice and this permission notice appear in all copies.

THE DOCUMENTATION IS PROVIDED "AS IS" AND THE AUTHOR DISCLAIMS ALL WARRANTIES WITH REGARD TO THIS DOCUMENTATION INCLUDING ALL IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS. IN NO EVENT SHALL THE AUTHOR BE LIABLE FOR ANY SPECIAL, DIRECT, INDIRECT, OR CONSEQUENTIAL DAMAGES OR ANY DAMAGES WHATSOEVER RESULTING FROM LOSS OF USE, DATA OR PROFITS, WHETHER IN AN ACTION OF CONTRACT, NEGLIGENCE OR OTHER TORTIOUS ACTION, ARISING OUT OF OR IN CONNECTION WITH THE USE OR PERFORMANCE OF THIS DOCUMENTATION.

NetBSD and pkgsrc are registered trademarks of the NetBSD Foundation, Inc. FreeBSD is a registered trademark of the FreeBSD Foundation.

Contents

Introduction
1 Installing and Upgrading the OS and Software
1.1 Recognize the installation program used by each operating system
1.2 Recognize which commands are available for upgrading the operating system
1.3 Understand the difference between a pre-compiled binary and compiling from source
1.4 Understand when it is preferable to install a pre-compiled binary and how to do so
1.5 Recognize the available methods for compiling a customized binary
1.6 Determine what software is installed on a system
1.7 Determine which software requires upgrading
1.8 Upgrade installed software
1.9 Determine which software have outstanding security advisories

Designing Disk Arrays for High Data Reliability

Garth A. Gibson, School of Computer Science, Carnegie Mellon University, 5000 Forbes Ave., Pittsburgh PA 15213

David A. Patterson, Computer Science Division, Electrical Engineering and Computer Sciences, University of California at Berkeley, Berkeley, CA 94720

This research was funded by NSF grant MIP-8715235, NASA/DARPA grant NAG 2-591, a Computer Measurement Group fellowship, and an IBM predoctoral fellowship.

Proposed running head: Designing Disk Arrays for High Data Reliability

Please forward communication to: Garth A. Gibson, School of Computer Science, Carnegie Mellon University, 5000 Forbes Ave., Pittsburgh PA 15213-3890, 412-268-5890, FAX 412-681-5739, [email protected]

ABSTRACT

Redundancy based on a parity encoding has been proposed for insuring that disk arrays provide highly reliable data. Parity-based redundancy will tolerate many independent and dependent disk failures (shared support hardware) without on-line spare disks and many more such failures with on-line spare disks. This paper explores the design of reliable, redundant disk arrays. In the context of a 70 disk strawman array, it presents and applies analytic and simulation models for the time until data is lost. It shows how to balance requirements for high data reliability against the overhead cost of redundant data, on-line spares, and on-site repair personnel in terms of an array's architecture, its component reliabilities, and its repair policies.

Recent advances in computing speeds can be matched by the I/O performance afforded by parallelism in striped disk arrays [12, 13, 24]. Arrays of small disks further utilize advances in the technology of the magnetic recording industry to provide cost-effective I/O systems based on disk striping [19].

Building Reliable Massive Capacity SSDs Through a Flash Aware RAID-Like Protection †

applied sciences, Article

Building Reliable Massive Capacity SSDs through a Flash Aware RAID-Like Protection †

Jaeho Kim (1) and Jung Kyu Park (2,*)

(1) Department of Aerospace and Software Engineering & Engineering Research Institute, Gyeongsang National University, Jinju 52828, Korea; [email protected]
(2) Department of Computer Software Engineering, Changshin University, Changwon 51352, Korea
(*) Correspondence: [email protected]
(†) This paper is an extended version of a paper published in the IEEE International Conference on Consumer Electronics (ICCE) 2020, Las Vegas, NV, USA, 4–6 January 2020.

Received: 14 November 2020; Accepted: 16 December 2020; Published: 21 December 2020

Abstract: The demand for mass storage devices has become an inevitable consequence of the explosive increase in data volume. The three-dimensional (3D) vertical NAND (V-NAND) and quad-level cell (QLC) technologies rapidly accelerate the capacity increase of flash-memory-based storage systems, such as SSDs (Solid State Drives). Massive capacity SSDs adopt dozens or hundreds of flash memory chips in order to implement large capacity storage. However, employing such a large number of flash chips increases the error rate in SSDs. A RAID-like technique inside an SSD has been used in a variety of commercial products, along with various studies, in order to protect user data. With the advent of new types of massive storage devices, studies on the design of RAID-like protection techniques for such huge capacity SSDs are important and essential. In this paper, we propose a massive SSD-Aware Parity Logging (mSAPL) scheme that protects against n simultaneous failures in a stripe, where n is the protection strength specified by the user.

Rethinking RAID for SSD Reliability

Differential RAID: Rethinking RAID for SSD Reliability

Asim Kadav, University of Wisconsin, Madison, WI, [email protected]
Mahesh Balakrishnan, Microsoft Research Silicon Valley, Mountain View, CA, [email protected]
Vijayan Prabhakaran, Microsoft Research Silicon Valley, Mountain View, CA, [email protected]
Dahlia Malkhi, Microsoft Research Silicon Valley, Mountain View, CA, [email protected]

ABSTRACT

Deployment of SSDs in enterprise settings is limited by the low erase cycles available on commodity devices. Redundancy solutions such as RAID can potentially be used to protect against the high Bit Error Rate (BER) of aging SSDs. Unfortunately, such solutions wear out redundant devices at similar rates, inducing correlated failures as arrays age in unison. We present Diff-RAID, a new RAID variant that distributes parity unevenly across SSDs to create age disparities within arrays. By doing so, Diff-RAID balances the high BER of old SSDs against the low BER of young SSDs. Diff-RAID provides much greater reliability for SSDs compared to RAID-4 and RAID-5 for the same space overhead, and offers a trade-off curve between throughput and reliability.

...sult, a write-intensive workload can wear out the SSD within months. Also, this erasure limit continues to decrease as MLC devices increase in capacity and density. As a consequence, the reliability of MLC devices remains a paramount concern for its adoption in servers [4].

In this paper, we explore the possibility of using device-level redundancy to mask the effects of aging on SSDs. Clustering options such as RAID can potentially be used to tolerate the higher BERs exhibited by worn out SSDs. However, these techniques do not automatically provide adequate protection for aging SSDs; by balancing write load across devices, solutions such as RAID-5 cause all SSDs to wear out at ap-

Disk Array Data Organizations and RAID

Guest Lecture for 15-440: Disk Array Data Organizations and RAID
October 2010, Greg Ganger ©

Plan for today
• Why have multiple disks? Storage capacity, performance capacity, reliability
• Load distribution problem and approaches: disk striping
• Fault tolerance: replication, parity-based protection
• "RAID" and the Disk Array Matrix
• Rebuild

Why multi-disk systems?
• A single storage device may not provide enough storage capacity, performance capacity, reliability
• So, what is the simplest arrangement?

Just a bunch of disks (JBOD)
• [Diagram: four disks A, B, C, and D, each holding blocks 0 through 3]
• Yes, it's a goofy name; industry really does sell "JBOD enclosures"

Disk Subsystem Load Balancing
• I/O requests are almost never evenly distributed
• Some data is requested more than other data
• Depends on the apps, usage, time, …
• What is the right data-to-disk assignment policy?
• Common approach: fixed data placement. Your data is on disk X, period! For good reasons too: you bought it or you're paying more …
• Fancy: dynamic data placement. If some of your files are accessed a lot, the admin (or even system) may separate the "hot" files across multiple disks. In this scenario, entire file systems (or even files) are manually moved by the system admin to specific disks

Identify Storage Technologies and Understand RAID

LESSON 4.1_4.2, 98-365 Windows Server Administration Fundamentals

Identify Storage Technologies and Understand RAID

Lesson Overview
In this lesson, you will learn:
o Local storage options
o Network storage options
o Redundant Array of Independent Disks (RAID) options

Anticipatory Set
List three different RAID configurations. Which of these three bus types has the fastest transfer speed?
o Parallel ATA (PATA)
o Serial ATA (SATA)
o USB 2.0

Local Storage Options
Local storage options can range from a simple single disk to a Redundant Array of Independent Disks (RAID). Local storage options can be broken down into bus types:
o Serial Advanced Technology Attachment (SATA)
o Integrated Drive Electronics (IDE, now called Parallel ATA or PATA)
o Small Computer System Interface (SCSI)
o Serial Attached SCSI (SAS)

Local Storage Options
SATA drives have taken the place of the traditional PATA drives. SATA drives have several advantages over PATA:
o Reduced cable bulk and cost
o Faster and more efficient data transfer
o Hot-swapping technology

Local Storage Options (continued)
SAS drives have taken the place of the traditional SCSI and Ultra SCSI drives in server class machines. SAS have several

• RAID, an Acronym for Redundant Array of Independent Disks Was Invented to Address Problems of Disk Reliability, Cost, and Performance

RAID
• RAID, an acronym for Redundant Array of Independent Disks, was invented to address problems of disk reliability, cost, and performance.
• In RAID, data is stored across many disks, with extra disks added to the array to provide error correction (redundancy).
• The inventors of RAID, David Patterson, Garth Gibson, and Randy Katz, provided a RAID taxonomy that has persisted for a quarter of a century, despite many efforts to redefine it.

RAID 0: Striped Disk Array
• RAID Level 0 is also known as disk striping.
– Data is written in blocks across the entire array.

RAID 0
• Recommended uses:
– Video/image production/editing
– Any app requiring high bandwidth
– Good for non-critical storage of data that needs to be accessed at high speed
• Good performance on reads and writes
• Simple design, easy to implement
• No fault tolerance (no redundancy)
• Not reliable

RAID 1: Mirroring
• RAID Level 1, also known as disk mirroring, provides 100% redundancy and good performance.
– Two matched sets of disks contain the same data.

RAID 1
• Recommended uses:
– Accounting, payroll, financial
– Any app requiring high reliability (mission critical storage)
• For best performance, the controller should be able to do concurrent reads/writes per mirrored pair
• Very simple technology
• Storage capacity cut in half
• Software solutions often do not allow "hot swap"
• High disk overhead, high cost

RAID 2: Bit-level Hamming Code ECC Parity
• A RAID Level 2 configuration consists of a set of data drives and a set of Hamming code drives.
– Hamming code drives provide error correction for the data drives.

RAID-II: Design and Implementation Of

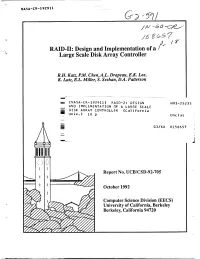

RAID-II: Design and Implementation of a Large Scale Disk Array Controller

R. H. Katz, P. M. Chen, A. L. Drapeau, E. K. Lee, K. Lutz, E. L. Miller, S. Seshan, D. A. Patterson

Computer Science Division, Electrical Engineering and Computer Science Department, University of California, Berkeley, Berkeley, CA 94720

Report No. UCB/CSD-92-705, October 1992 (NASA-CR-192911, N93-25233, California Univ., 18 p.)

Abstract: We describe the implementation of a large scale disk array controller and subsystem incorporating over 100 high performance 3.5" disk drives. It is designed to provide 40 MB/s sustained performance and 40 GB capacity in three 19" racks. The array controller forms an integral part of a file server that attaches to a Gb/s local area network. The controller implements a high bandwidth interconnect between an interleaved memory, an XOR calculation engine, the network interface (HIPPI), and the disk interfaces (SCSI). The system is now functionally operational, and we are tuning its performance.

Architectures and Algorithms for On-Line Failure Recovery in Redundant Disk Arrays

Draft copy submitted to the Journal of Distributed and Parallel Databases. A revised copy is published in this journal, vol. 2 no. 3, July 1994.

Mark Holland, Department of Electrical and Computer Engineering, Carnegie Mellon University, 5000 Forbes Ave., Pittsburgh, PA 15213-3890, (412) 268-5237, [email protected]

Garth A. Gibson, School of Computer Science, Carnegie Mellon University, 5000 Forbes Ave., Pittsburgh, PA 15213-3890, (412) 268-5890, [email protected]

Daniel P. Siewiorek, School of Computer Science, Carnegie Mellon University, 5000 Forbes Ave., Pittsburgh, PA 15213-3890, (412) 268-2570, [email protected]

Abstract

The performance of traditional RAID Level 5 arrays is, for many applications, unacceptably poor while one of its constituent disks is non-functional. This paper describes and evaluates mechanisms by which this disk array failure-recovery performance can be improved. The two key issues addressed are the data layout, the mapping by which data and parity blocks are assigned to physical disk blocks in an array, and the reconstruction algorithm, which is the technique used to recover data that is lost when a component disk fails. The data layout techniques this paper investigates are variations on the declustered parity organization, a derivative of RAID Level 5 that allows a system to trade some of its data capacity for improved failure-recovery performance. Parity declustering improves the failure-mode performance of an array significantly, and a parity-declustered architecture is preferable to an equivalent-size multiple-group RAID Level 5 organization in environments where failure-recovery performance is important.
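The basic recovery step in a parity-protected stripe, on which RAID Level 5 relies, can be sketched as follows. This is a minimal illustration of XOR parity only, not the paper's declustered layout or its reconstruction algorithm; the helper name `xor_blocks` and the sample byte strings are assumptions chosen for the example.

```python
from functools import reduce


def xor_blocks(blocks):
    """Return the byte-wise XOR of equally sized byte strings."""
    # zip(*blocks) yields one tuple of bytes per byte position.
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Hypothetical stripe: three data blocks plus one parity block.
data = [b"\x01\x02", b"\x10\x20", b"\xff\x00"]
parity = xor_blocks(data)

# Simulate losing the disk that holds data[1], then rebuild its block
# from the surviving data blocks and the parity block.
recovered = xor_blocks([data[0], data[2], parity])

if __name__ == "__main__":
    print(recovered == data[1])
```

Rebuilding one block requires reading every surviving block in the stripe, which is one reason a conventional RAID Level 5 array slows down while a disk is missing.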

1 Configuring SATA Controllers A

| RAID Levels | RAID 0 | RAID 1 | RAID 5 | RAID 10 |
| --- | --- | --- | --- | --- |
| Minimum Number of Hard Drives | ≥2 | 2 | ≥3 | ≥4 |
| Array Capacity | Number of hard drives * Size of the smallest drive | Size of the smallest drive | (Number of hard drives - 1) * Size of the smallest drive | (Number of hard drives / 2) * Size of the smallest drive |
| Fault Tolerance | No | Yes | Yes | Yes |

To create a RAID set, follow the steps below:
A. Install SATA hard drive(s) in your computer.
B. Configure SATA controller mode in BIOS Setup.
C. Configure a RAID array in RAID BIOS. (Note 1)
D. Install the SATA RAID/AHCI driver and operating system.

Before you begin, please prepare the following items:
• At least two SATA hard drives or M.2 SSDs (Note 2) (to ensure optimal performance, it is recommended that you use two hard drives with identical model and capacity). (Note 3)
• A Windows setup disk.
• Motherboard driver disk.
• A USB thumb drive.

1 Configuring SATA Controllers

A. Installing hard drives

Connect the SATA signal cables to SATA hard drives and the Intel® Chipset controlled SATA ports (SATA3 0~5) on the motherboard. Then connect the power connectors from your power supply to the hard drives. Or install your M.2 SSD(s) in the M.2 connector(s) on the motherboard.

(Note 1) Skip this step if you do not want to create RAID array on the SATA controller.
(Note 2) An M.2 PCIe SSD cannot be used to set up a RAID set either with an M.2 SATA SSD or a SATA hard drive.
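The array capacity formulas in the RAID level table above can be checked with a short calculation. The sketch below is illustrative only: the function name `array_capacity`, the level strings, and the requirement that RAID 10 use an even number of drives are assumptions, not part of the motherboard documentation.

```python
# Minimum drive counts taken from the table above.
MIN_DRIVES = {"RAID 0": 2, "RAID 1": 2, "RAID 5": 3, "RAID 10": 4}


def array_capacity(level: str, drive_sizes: list[float]) -> float:
    """Usable capacity of an array, in the same unit as drive_sizes."""
    if level not in MIN_DRIVES:
        raise ValueError(f"unsupported RAID level: {level}")
    n = len(drive_sizes)
    if n < MIN_DRIVES[level]:
        raise ValueError(f"{level} needs at least {MIN_DRIVES[level]} drives")
    smallest = min(drive_sizes)
    if level == "RAID 0":
        return n * smallest
    if level == "RAID 1":
        if n != 2:
            raise ValueError("the table lists exactly 2 drives for RAID 1")
        return smallest
    if level == "RAID 5":
        return (n - 1) * smallest
    # RAID 10: assumed here to need an even drive count (mirrored pairs).
    if n % 2:
        raise ValueError("RAID 10 sketch assumes an even number of drives")
    return (n // 2) * smallest


if __name__ == "__main__":
    print(array_capacity("RAID 5", [1000, 1000, 2000]))
```

Because every formula is based on the smallest drive, mixing a 2000 GB drive with two 1000 GB drives in RAID 5 leaves the extra 1000 GB unused, which is consistent with the recommendation to use drives of identical capacity.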