2.3 Reverse Engineering
2.4 Summary


Recommended publications

-

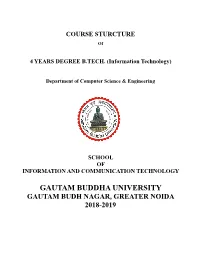

Department of Computer Science & Engineering

COURSE STRUCTURE of 4-Years Degree B.TECH. (Information Technology), Department of Computer Science & Engineering, SCHOOL OF INFORMATION AND COMMUNICATION TECHNOLOGY, GAUTAM BUDDHA UNIVERSITY, GAUTAM BUDH NAGAR, GREATER NOIDA, 2018-2019

4-Years Degree B. TECH. (Information Technology) I-YEAR (I-SEMESTER) (Effective from session: 2018-19) SEMESTER-I

| Sr. No. | Subject Code | Courses | L-T-P | Credits | CBCS/AICTE |
|---|---|---|---|---|---|
| THEORY | | | | | |
| 1 | PH102 | Engineering Physics | 3-1-0 | 4 | CC/BS |
| 2 | MA101 | Engineering Mathematics – I | 3-1-0 | 4 | CC/BS |
| 3 | ME101 | Engineering Mechanics | 3-1-0 | 4 | CC/ESC |
| 4 | EE102 | Basic Electrical Engineering | 3-1-0 | 4 | CC/ESC |
| 5 | ES101 | Environmental Science | 2-0-0 | 2 | AECC/BS |
| 6 | EN101 | English Proficiency | 2-0-0 | 2 | AECC/HSMC |
| PRACTICALS | | | | | |
| 7 | PH 104 | Engineering Physics Lab | 0-0-2 | 1 | CC/BS |
| 8 | EE 104 | Basic Electrical Engineering Lab | 0-0-2 | 1 | CC/ESC |
| 9 | ME102* | Workshop Practices | 1-0-2 | 2 | CC/ESC |
| 10 | EN151 | Language Lab | 0-0-2 | 1 | AECC/HSMC |
| 11 | GP | General Proficiency | - | Non Credit | |
| | | Total | 17-4-8 | 25 | |
| | | Total Contact Hours | 29 | | |

I-YEAR (II-SEMESTER) (Effective from session: 2018-19) SEMESTER – II

| Sr. No. | Subject Code | Courses | L-T-P | Credits | CBCS/AICTE |
|---|---|---|---|---|---|
| THEORY | | | | | |
| 1 | CY101 | Engineering Chemistry | 3-1-0 | 4 | CC/BS |
| 2 | MA102 | Engineering Mathematics – II | 3-1-0 | 4 | CC/BS |
| 3 | EC101 | Basic Electronics Engineering | 3-1-0 | 4 | CC/ESC |
| 4 | CS101 | Fundamentals of Computer Programming | 3-1-0 | 4 | SEC/ESC |
| 5 | BS101 | Human Values & Buddhist Ethics | 2-0-0 | 2 | AECC/HSMC |
| PRACTICALS | | | | | |
| 6 | CY 103 | Engineering Chemistry Lab | 0-0-2 | 1 | CC/BS |
| 7 | EC 181 | Basic Electronics Engineering Lab | 0-0-2 | 1 | CC/ESC |
| 8 | CS 181 | Computer Programming Lab | 0-0-2 | 1 | SEC/ESC |
| 9 | CE103* | Engineering Graphics | 1-0-2 | 2 | CC/ESC |
| 10 | GP | General Proficiency | - | Non Credit | |
| | | Total | 15-4-8 | 23 | |
| | | Total Contact Hours | 27 | | |

II-YEAR (III-SEMESTER) (Effective from session: 2018-19) EVALUATION SCHEME PERIO SESSIO MID CBC CREDI COUR DS S.

Formal Specification— Z Notation— Syntax, Type and Semantics

Formal Specification - Z Notation - Syntax, Type and Semantics. Consensus Working Draft 2.6, August 24, 2000. Developed by members of the Z Standards Panel: BSI Panel IST/5/-/19/2 (Z Notation), ISO Panel JTC1/SC22/WG19 (Rapporteur Group for Z). Project number JTC1.22.45. Project editor: Ian Toyn [email protected] http://www.cs.york.ac.uk/~ian/zstan/ This is a Working Draft by the Z Standards Panel. It has evolved from Consensus Working Draft 2.5 of June 20, 2000 according to remarks received and discussed at Meeting 56 of the Z Panel. ISO/IEC 13568:2000(E)

Contents: Foreword; Introduction; 1 Scope; 2 Normative references; 3 Terms and definitions; 4 Symbols and definitions; 5 Conformance; 6 Z characters; 7 Lexis; 8 Concrete syntax; 9 Characterisation rules; 10 Annotated syntax; 11 Prelude; 12 Syntactic transformation rules; 13 Type inference rules; 14 Semantic transformation rules; 15 Semantic relations; Annex A (normative) Mark-ups; Annex B (normative) Mathematical toolkit; Annex C (normative) Organisation by concrete syntax production; Annex D (informative) Tutorial; Annex E (informative) Conventions for state-based descriptions; Bibliography; Index.

Figures: 1 Phases of the definition; B.1 Parent relation between sections of the mathematical toolkit; D.1 Parse tree of birthday book example; D.2 Annotated parse tree of part of axiomatic example

Research on Consistency Checking of Different Aspects Models of the Information System

VILNIUS GEDIMINAS TECHNICAL UNIVERSITY Rūta DUBAUSKAITĖ RESEARCH ON CONSISTENCY CHECKING OF DIFFERENT ASPECTS MODELS OF THE INFORMATION SYSTEM DOCTORAL DISSERTATION TECHNOLOGICAL SCIENCES, INFORMATICS ENGINEERING (07T) Vilnius 2012 Doctoral dissertation was prepared at Vilnius Gediminas Technical University in 2008–2012. Scientific Supervisor Prof Dr Olegas VASILECAS (Vilnius Gediminas Technical University, Technological Sciences, Informatics Engineering – 07T). Consultant – to be filled in if needed Assoc Prof Dr Name SURNAME (Vilnius Gediminas Technical University, Technological Sciences, Electrical and Electronic Engineering – 01T). Scientific literature book No. 2022-M of VGTU Press TECHNIKA http://leidykla.vgtu.lt ISBN 978-609-457-298-2 © VGTU Press TECHNIKA, 2012 © Rūta Dubauskaitė, 2012 [email protected] VILNIUS GEDIMINAS TECHNICAL UNIVERSITY Rūta DUBAUSKAITĖ RESEARCH ON CONSISTENCY CHECKING OF DIFFERENT ASPECTS MODELS OF THE INFORMATION SYSTEM DOCTORAL DISSERTATION TECHNOLOGICAL SCIENCES, INFORMATICS ENGINEERING (07T) Vilnius 2012 The dissertation was prepared at Vilnius Gediminas Technical University in 2008–2012. (The dissertation is defended as an external candidate.) – to be filled in if needed Scientific supervisor (for an external candidate: scientific consultant) Prof Dr Olegas VASILECAS (Vilnius Gediminas Technical University, Technological Sciences, Informatics Engineering – 07T). Consultant – to be filled in if one was assigned Assoc Prof Dr Name SURNAME (Vilnius Gediminas Technical University, Technological Sciences, Electrical and Electronic Engineering – 01T). Abstract Modelling of

A Formal Methodology for Deriving Purely Functional Programs from Z Specifications Via the Intermediate Specification Language Funz

Louisiana State University LSU Digital Commons LSU Historical Dissertations and Theses Graduate School 1995 A Formal Methodology for Deriving Purely Functional Programs From Z Specifications via the Intermediate Specification Language FunZ. Linda Bostwick Sherrell Louisiana State University and Agricultural & Mechanical College Follow this and additional works at: https://digitalcommons.lsu.edu/gradschool_disstheses Recommended Citation Sherrell, Linda Bostwick, "A Formal Methodology for Deriving Purely Functional Programs From Z Specifications via the Intermediate Specification Language FunZ." (1995). LSU Historical Dissertations and Theses. 5981. https://digitalcommons.lsu.edu/gradschool_disstheses/5981 This Dissertation is brought to you for free and open access by the Graduate School at LSU Digital Commons. It has been accepted for inclusion in LSU Historical Dissertations and Theses by an authorized administrator of LSU Digital Commons. For more information, please contact [email protected]. INFORMATION TO USERS This manuscript has been reproduced from the microfilm master. UMI films the text directly from the original or copy submitted. Thus, some thesis and dissertation copies are in typewriter face, while others may be from any type of computer printer. The quality of this reproduction is dependent upon the quality of the copy submitted. Broken or indistinct print, colored or poor quality illustrations and photographs, print bleedthrough, substandard margins, and improper alignment can adversely affect reproduction. In the unlikely event that the author did not send UMI a complete manuscript and there are missing pages, these will be noted. Also, if unauthorized copyright material had to be removed, a note will indicate the deletion. Oversize materials (e.g., maps, drawings, charts) are reproduced by sectioning the original, beginning at the upper left-hand corner and continuing from left to right in equal sections with small overlaps.

Improvement in the Requirement Specification Using Z Language And

IMPROVEMENT IN THE REQUIREMENT SPECIFICATION USING Z LANGUAGE AND ITS SUCCESSOR A thesis submitted in partial fulfillment of the requirements for the award of degree of Master of Engineering in Software Engineering Submitted By SATHISH KUMAR MIDDE (Roll No. 800831007) Under the supervision of Mrs. SHIVANI GOEL Assistant Professor DEPARTMENT OF COMPUTER SCIENCE AND ENGINEERING THAPAR UNIVERSITY PATIALA – 147004 June 2010 Abstract Z (pronounced Zed!) is a formal specification language that works at a high level of abstraction, so that even complex behaviors can be described precisely and concisely. It is also used to specify the functional requirements of a system. A functional specification is a formal document that describes in detail, for software developers, a product's intended capabilities, appearance, and interactions with users. The Z notation is a strongly typed, mathematical specification language. It is not an executable notation; it cannot be interpreted or compiled into a running program. The Z notation is a model-based specification language based on set theory and first-order predicate logic. It provides rich data structures and facilities to define operations on them. An abstract Z specification of an Automated Teller Machine (ATM) environment is defined in this thesis. This specification is used to represent the state of the world and the operations defined on it. The Z schemas are written using the Z/Word tool, which supports all types of notation used in the Z language. The thesis then attempts to ensure that some constraints on this system are not violated. For this, the Z specification is converted into an Alloy model that can be put into the Alloy Analyzer for fully automatic analysis.

Z: GRAMMAR and CONCRETE and ABSTRACT SYNTAXES (Version 2.0)

Z: GRAMMAR AND CONCRETE AND ABSTRACT SYNTAXES (Version 2.0) by Steve King, Ib Holm Sørensen, Jim Woodcock. Technical Monograph PRG-68 ISBN 0-902928-50-3 July 1988 Oxford University Computing Laboratory Programming Research Group 8-11 Keble Road Oxford OX1 3QD England Copyright © 1988 IBM United Kingdom Laboratories Limited Authors' address: Oxford University Computing Laboratory Programming Research Group 8-11 Keble Road Oxford OX1 3QD England Electronic mail: king@uk.ac.oxford.prg (JANET) Preface This monograph, which presents a grammar and an abstract syntax for the Z specification language, is produced as part of a joint project between IBM United Kingdom Laboratories Limited at Hursley, England and the Programming Research Group of Oxford University Computing Laboratory, into the application of formal software specification techniques to industrial problems. The work was supported by a research contract between IBM and Oxford University and is published by permission of the Company. [Abrial 81] provided the starting point in the development of the Z notation. The syntax for definitions, predicates and terms presented here was developed from Jean-Raymond Abrial's paper. The notation has been further developed and described in [Sufrin 86]. The type rules and the semantics of Z have been described in [Spivey 85]. The commentary in this paper on the meaning of the language constructs is an informal description of what is formally described in [Spivey 85]. The schema concept is an extension to conventional set theory and preliminary descriptions can be found in [Sufrin 81], [Sørensen 82] and [Morgan 84].

Support for Model Checking Z Specifications Maria Ulfah Siregar

Support for Model Checking Z Specifications By: Maria Ulfah Siregar A thesis submitted in partial fulfilment of the requirements for the degree of Doctor of Philosophy The University of Sheffield Faculty of Engineering Department of Computer Science 20 December 2016 Abstract One of the deficiencies in the Z tools is that there is limited support for model checking Z specifications. To build a model checker directly for a Z specification would take considerable effort and time due to the abstraction of the language. Translating inputs of a Z specification into a language that an existing model checker tool accepts is an alternative method. Researchers at the University of Sheffield implemented a translation tool which took a Z specification and translated it into the input for the Symbolic Analysis Laboratory (SAL) tool, a framework for combining different tools for abstraction, program analysis, theorem proving and model checking, which they called Z2SAL. In this paper, support for model checking Z specifications is discussed, in which the ability of the existing Z2SAL is extended. This support includes a translation for generic constants and schema calculus. Instead of translating these aspects of the Z language into the SAL language as Z2SAL does, a Z specification containing these two notations will be pre-processed, in which a generic constant definition will be redefined to its equivalent axiomatic definition, and schema calculus will be expanded to a new schema definition. This paper discusses the implementation of these types of support and illustrates some working examples. The discussion also covers several other issues: a new approach to translating Z functions and constants into the SAL language, which originates from the type incompatibility encountered during execution by the SAL tool; an approach to a SAL translation of embedded theorems in Z specifications; and a manual experiment on applying an abstraction to Z specifications.

Annotated Z Bibliography

Annotated Z Bibliography Jonathan Bowen Oxford University Computing Laboratory, Wolfson Building, Parks Road, Oxford OX1 3QD, UK. Susan Stepney, Rosalind Barden Logica Cambridge Limited, Betjeman House, 104 Hills Road, Cambridge, CB2 1LQ, UK. 1 Introduction This annotated Z bibliography contains a selected list of some pertinent publications for Z users. Most of those included are readily available, either as books or in journals. A few unpublished items have been included, where they are particularly relevant and can be obtained reasonably easily. Some references are accompanied by an annotation. This may include a contents list (of a book), a list of the titles of Z related papers (in a collection) with cross references to the full details, or a summary of the work. 2 Cross references The bibliography in the last section lists all references in alphabetical order by author. In this section papers are arranged by subject (with authors and brief details of the subject matter), together with cross references to the full details in the bibliography. 2.1 Management, style, and method For justifications for using formality, and quick introductions to Z, see: [63, 199] Cohen/McDermid. Justification of formal methods and notations [204] Meyer. On formalism in specifications [269] Spivey. Introduction to Z [305, 306] Wing. General introduction to formal methods including Z [311] Woodcock. Structuring specifications For discussion about using formal methods in practice, see: [20] Barden et al. Z in practice [22, 45, 46, 118, 201] Barroca/McDermid, Bowen/Stavridou and Gerhart et al. Formal methods and safety-critical systems [116] Gerhart. Applications of formal methods [124, 40, 41, 42] Hall and Bowen/Hinchey.

Process and Progress of Requirement Formalization in Software Engineering

Ingeniare. Revista chilena de ingeniería, vol. 28 Nº 3, 2020, pp. 411-423 Process and progress of requirement formalization in Software Engineering (Proceso y progreso de la formalización de requisitos en Ingeniería del Software) Edgar Serna M.1* Alexei Serna A.1 Received: February 26, 2019 Accepted: March 26, 2019 ABSTRACT Research in formal methods began in the middle of the last century, and proposals and methodologies were presented for applying them in software development. The idea was to overcome the diagnosed software crisis through the mathematization of the life cycle of this product's development. This article presents the results of a literature review on the progress and development of requirement formalization. The conclusion is that both are slow: there is not enough interest in industry, academia does not train in formal methods, there is no sponsorship for this research field, professionals fear mathematics, and traditional software engineering methodologies are still the most used in development teams. For the sake of quality, efficiency, software security and reliability, it is necessary to reactivate research and experimentation with all the formal methods, because the hope is that mathematics will be the tool with which the crisis proclaimed in the 60s is overcome. Keywords: Formal methods, requirement engineering, software quality, formal language.

Comparative Analysis of Formal Specification Languages Z, VDM and B

International Journal of Current Engineering and Technology E-ISSN 2277 – 4106, P-ISSN 2347 – 5161 ©2015 INPRESSCO®, All Rights Reserved Available at http://inpressco.com/category/ijcet Research Article Comparative Analysis of Formal Specification Languages Z, VDM and B Tulika Pandey† and Saurabh Srivastava‡* †Department of Computer Science and Information Technology, SHIATS, Allahabad, Uttar Pradesh, India ‡Department of Computer Science Engineering, SHIATS Allahabad, Uttar Pradesh, India Accepted 20 June 2015, Available online 25 June 2015, Vol.5, No.3 (June 2015)

Abstract This paper focuses on comparing formal specification languages and choosing the appropriate one for a particular problem. Formal specification is a better way of identifying specification errors and of describing a specification unambiguously. A formal specification is a specification written in a formal language, where a formal language is either based on a rigorous mathematical model or simply on a standardized programming or specification language. A formal specification language expresses the specification in a language whose vocabulary, syntax and semantics are properly defined, and provides a mathematical representation of the system. This paper introduces three formal specification languages, Z, VDM, and B, and compares them. Keywords: Formal Methods, Formal Specification, Formal Specification Languages, Schema.

1. Introduction This paper reports experience gained in the use of formal specification languages in formal methods for complex and critical system development or decision making systems. Formal methods are the mathematical approach, supported by tools and techniques, for verifying the essential properties of a desired software system or hardware system.

parameters such as correctness, consistency, verification of the system requirements and provide code verification. Formal methods are associated with three techniques: formal specification, formal verification, and refinement.

A Case Study of a Formalized Security Architecture

Research Collection Conference Paper A case study of a formalized security architecture Author(s): Brucker, Achim D.; Wolff, Burkhart Publication Date: 2012 Permanent Link: https://doi.org/10.3929/ethz-a-007329404 Rights / License: In Copyright - Non-Commercial Use Permitted This page was generated automatically upon download from the ETH Zurich Research Collection. For more information please consult the Terms of use. ETH Library Electronic Notes in Theoretical Computer Science 80 (2009) URL: http://www.elsevier.nl/locate/entcs/volume80.html 40 pages A Case Study of a Formalized Security Architecture Achim D. Brucker 1 ETH Zürich Burkhart Wolff 2 Albert-Ludwigs-Universität Freiburg Abstract CVS is a widely known version management system, which can be used for the distributed development of software as well as its distribution from a central database. In this paper, we provide an outline of a formal security analysis of a CVS-Server architecture performed in [1]. The analysis is based on an abstract architecture (enforcing a role-based access control on the repository), which is refined to an implementation architecture (based on the usual discretionary access control provided by the POSIX environment). Both architectures serve as framework to formulate access control and confidentiality properties. Both the abstract as well as the concrete architecture are specified in the language Z. Based on a logical embedding of Z into Isabelle/HOL, we provide formal, machine-checked proofs for consistency properties of the specification, for the correctness of the refinement, and for some security properties. Thus, we present a case study for the security analysis of realistic models over an off-the-shelf system by formal machine-checked proofs.

A Descriptive Formalisation of Student Management System (SMS)

Published by: International Journal of Engineering Research & Technology (IJERT) http://www.ijert.org ISSN: 2278-0181 Vol. 6 Issue 11, November - 2017 A Descriptive Formalisation of Student Management System (SMS) Dr. Okengwu U. A 1, Department of Computer Science, University of Port-Harcourt, Port-Harcourt, Nigeria. Dr. Ejiofor C. I 2, Department of Computer Science, University of Port-Harcourt, Port-Harcourt, Nigeria.

Abstract - The formalisation of Student Management System (SMS) can invariably contribute to the success, profitability and customer-based approach of Educational Institutes. The use of formal specification creates a formal approach for specifying the underlying functions and properties of the system. This paper has attempted to give a formal description of the activities of an SMS using Zed notation. The interaction within the system is visualized using Unified Modelling Language (UML) sequence diagrams.

Keywords: Student Management System (SMS), Z-Notation, UML.

1 BACKGROUND OF STUDY Software systems are systems built on the premise of software, integrating within hardware components. These systems over time have become so complex in terms of components integration and software usage.

administration system is a management information system for education establishments to manage student data. Student information systems provide capabilities for registering students in courses, documenting grading, transcripts, results of student tests and other assessment scores, building student schedules, tracking student attendance, and managing many other student-related data needs in a school. A SIS should not be confused with a learning management system or virtual learning environment, where course materials, assignments and assessment tests can be published electronically. In this paper, a descriptive formalization of Student Management System (SMS) properties is captured using Z-notation. The choice of Z-notation as opposed to other formalization languages was based on: Z-notation