Bayesian Model Selection and Estimation Without MCMC

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

-

Pan Fish Biting Well Throughou T Sta Le TIRE

Pan fish Biting Well They'll Do It Every Time By Jimmy Hatlo [THE CAPITAL TIMES. ThurMla^Aug.jU966-27 jPancakes No Colts Hammer 1'DON'T Pltf AW STOCKS NONE OF OUR ZfRAJS GOING TO NOT THAT I BEUEVE\1N THAT STUFF, BUT r/GALS GO FOR CROSS WATER ALL 'Skius, 35 to 0 IK !T,YDU UNDERSTAHD.'JWHERE IS SHE? I'D THAT BALONEY' RIGHT'- THERES Help Now to Throughou t Sta le BUT THIS FORTUNE-V LIKE HER TO TELU NOT MUCH! OUR PUDDLE IN OUR WASHINGTON' t.fi - Quarter- TELllER IS UNCANNY'"! MINE 3UST-FOR FUN- DOUGH WILL BE KITCHEN FROM j backs John Unilas and Gary SHE SAH>1'/A GOING PUTTING THE THE LEAKY ROOF.' C'uo/zo ignited an explosive of- TO CROSS WATER . OF COURSE I'M NOT ^ Bird Hurler 35-Pound Cat GYPSY£ ki OS- fensive attack and a defensa AND THAT I'M SUPERSTITIOUS, BUT I'M, THRU COLLEGE , MV BRIDE MUST BE BALTIMORE W - Pitcher JimWednesday night as the Baltimore COMING INTO WILLING TO TRY- HOW GOING IN THE BUSINESS Lew Cornelius' List \MOKEV! MUCH DOES SHE Palmer of the Baltimore Orioles, Celts slaughtered the Washington HERSELF. OUR SINK IS who had been winning every Wisconsin's big fish of the week CHARGE? ALWAYS FULL OF Redskins, 33-0, in a National Knot- TEA LEAVES time he ate pancakes for break- ball Jx>ague exhibition. as a 35-pound catfish caught out fast, has soured on flapjacks. the Wolf river in Shawano A sellout croud of 45.803, in- The Cleveland Indians shook eluding President Johnson, SCOREBOOK lunty, "How's Fishing?" reports Palmer's faith in the supersti- om conservation wardens tion Wednesday night by smash- Unilas showed no ill effects howed today. -

Daily Iowan (Iowa City, Iowa), 1968-04-10

Bowen Announces Plans For King Scholarships See T.xt Of S.... ch. Pa.. 2 of discussions with students [rom the Afro. Bowen's office is now accepting contrI By MIKF. FINN American Student ASSOCiation, other stu butions and Williard L. Boyd, dean of the Pres. Howard R. Bowen announced dent leaders. faculty members and Iowa faculties aod vice president for academic Citians. Bowen proposed that th · dudents, affairs, is heading a fund raising drive plans Tuesday for the creation of a $50,- faculty and lownspeoplr share equally in among faculty members. 000 Martin Luther I·Jnlt scholarship fund the iund rllising. An organized student drive is expected to bring students of a minority back· Bowen originally wllnted to use the mono to begin after spring vacation. ground, especially Negroes, to the Uni Other POints in Bowen's speech on what versity. ey 10 strengthen RILEEH, a University cultural exchange program under which the University can do to help the "national Bowen made his announcement at a 100 students from Rust and LeMoyne col· problem oC equality among men" in University convocaU(ln in memory of leges allend summer classes here. While cluded : King. Over 1,000 persons, including about Bowen advocated strenghtening ties with • A new sense o[ dedication and com one third of the 175 Negro students on the two predominantly Negro Mississppi mitmentlo the cause of equality. campus. attended the convocation. Near colleges, It was evident [rom University • Individual and group expression oC ly all of the 50 Negroes wore white arm student leaders that they wanted Bowen to views regarding federal and stale legisla bands in memory o~ the slain civil rights seek students of a minority background tion about civil rights, educatio.1 and eco leader. -

Sunday's Lineup 2018 WORLD SERIES QUEST BEGINS TODAY

The Official News of the 2018 Cleveland Indians Fantasy Camp Sunday, January 21, 2018 2018 WORLD SERIES QUEST BEGINS TODAY Sunday’s The hard work and relentless dedica- “It is about how we bring families, Lineup tion needed to be a winning team and neighbors, friends, business associates, gain a postseason berth begins long be- and even strangers together. fore the crowds are in the stands for “But we all know it is the play on the Opening Day. It begins on the practice field that is the spark of it all.” fields, in the classroom, and in the The Indians won an American League 7:00 - 8:25 Breakfast at the complex weight room. -best 102 games in 2017 and are poised Today marks that beginning, when the to be one of the top teams in 2018 due to 7:30 - 8:00 Bat selection 2018 Cleveland Indians Fantasy Camp its deeply talented core of players, award players make the first footprints at the -winning front office executives, com- Tribe’s Player Development Complex mitted ownership, and one of the best - if 8:30 - 8:55 Stretching on agility field here in Goodyear, AZ. not the best - managers in all of baseball Nestled in the scenic views of the Es- in Terry Francona. 9:00 -10:00 Instructional Clinics on fields trella Mountains just west of Phoenix, Named AL Manager of the year in the complex features six full practice both 2013 and 2016, the Tribe skipper fields, two half practice fields, an agility finished second for the award in 2017. -

Steers Gore Ags Twice, 13-2,4-0

The Battalion College Station (Brazos County), Texas Tuesday May 13,1958 PAGE 3 Save your temper.. Steers Gore Ags Twice, 13-2,4-0 Texas continued its sports domi Donald in the seven-run fourth in nation over the Aggies in Austin ning. last weekend, downing the Farmers Paul Zavorskas and Bob Sud- 13-2 and 4-0 in the season finale derth combined efforts on a five- Ags Seventh in SWC Meet; for both teams. hit shutout in the second game of The losses dropped the Cadets the two game series Saturday, de Lowest in SchooVs History into second division in final SWC feating the Aggies, 4-0, and posting standings, while the two wins gave Steer victory No. 1,001 since they The Texas Longhorns raced to point man, placing third in the the Longhorns a 13-2 record, their have been playing baseball in the their fifth consecutive SWC track broad jump with a leap of 23-5%. third victory over the outclassed conference. and field championship Saturday in Homer Smith placed fifth in the Ags this season and their 1,000th Donnie Hullum was credited with Dallas, leaving the Aggies far be 120-yard high hurdles and Bob hind with a seventh place finish. Carter took fifth in the javelin win in SWC history. the loss for the Ags, as he closed Texas won with 91 points to 47 throw for individual points in the In Friday’s action the Steers out the season with a 2-4 record. for SMU, 34% for Baylor, 31 for two-day meet. -

Game Summary

Miscucs Net 16 Unearned Runs- Jefferson Rams Clobber Carroll Tigers, 20-8 A triumphant Jefferson ball thL> sixth Inning. After five hobbled bringing Hastings for the first out, but Mick drive to deep right field, but Faaborg cracked a double to torn of third. Pettltt's single and surrendered one hit and club rallied for 11 sixth-inning Innings of play, the Tigers across the plate safely. Kendall Everett cracked a single over the catch was fumbled and deep left-center, but Zelle could up the center got things four walks to the Tigers. Lynch only make it to third. A passed started for Carroll. Everett came in relief for Kendall, but runs off the error-plagued Car- held a 7-6 lead over their op- stole second and Bob 7x;ller third base to bring Vetter Wand rode around to third roll Tigers, and bombarded the ponents. across for the score. Roger safely. Carlson proceeded to ball rectified that situation for made It to first on an error, walked the first batter, so the rapped a single to left to plate Fuller grounded out to third, steal second and Kendall Zeller and he scampered across and Puller plated Pettitt with Jefferson coach brought Carlson hapless Carroll club 20-B, in a Jefferson hopped to an early West Central League showdown Kendall. Jim Faaborg grounded and Jim Bell popped out to cen- sacrificed to shortstop, but a the plate safely to put the score a rap to left. Bell smacked back to the mound for the re- first inning lead on a pair of a two-run double to deep left here Monday evening. -

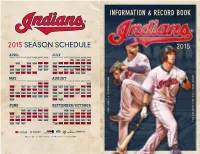

Information & Record Book

INFORMATION & RECORD BOOK 2015 SEASON SCHEDULE 2015 APRIL JULY SUN MON TUE WED THU FRI SAT SUN MON TUE WED THU FRI SAT 1 2 3 4 1 7:10 2 12:10 3 7:05 4 4:05 tb tb pit pit 5 6 7:05 7 8 8:05 9 2:05 10 4:10 11 4:10 5 1:35 6 7:10 7 7:10 8 7:10 9 7:10 10 7:10 11 6:35 HOU HOU HOU HOU det det pit HOU HOU HOU HOU oakdet oakdet 12 1:10 13 14 7:10 15 12:10 16 17 8:10 18 2:10 12 1:10 13 14 15 16 17 7:10 18 7:10 det cws cws min min oakdet ALL-STARcws BREAK cwsIN CINCINNATI mincin mincin 19 2:10 20 8:10 21 8:10 22 2:10 23 24 7:08 25 1:08 19 1:10 20 21 8:10 22 2:10 23 7:10 24 7:10 25 7:10 min cws cws cws det det mincin cws cwsmil cwsmil cws cwsdet cwsdet 26 1:08 27 6:10 28 6:10 29 6:10 30 7:10 26 1:10 27 7:10 28 7:10 29 12:10 30 10:07 31 9:37 det kc kc kc torkc cwsdet kc kc kc oakkc oak MAY AUGUST SUN MON TUE WED THU FRI SAT SUN MON TUE WED THU FRI SAT 1 2 13 7:10 42 4:10 1 2 3 41 9:07 tor tor oak 53 1:10 64 75 8:10 86 8:10 79 2:10 108 7:10 911 4:10 25 4:07 63 10:05 47 10:05 85 3:35 96 710 7:10 118 7:10 tor HOUkc HOUkc HOUkc detmin detmin oak laa HOUlaa laaHOU HOU detmin detmin 1210 1:10 1113 1412 6:10 1315 6:10 1614 12:10 1715 8:05 1816 8:05 129 1:10 1310 1411 7:10 1512 7:10 1316 7:10 1714 8:10 1518 7:10 detmin cwsstl cwsstl stl texmin mintex detmin cwsnyy cwsnyy nyy min min 1719 3:05 2018 8:10 1921 8:10 2220 8:10 2321 8:10 2224 7:10 2325 4:10 1619 2:10 1720 7:10 1821 7:10 1922 7:10 2320 7:05 2124 7:05 2225 1:05 mintex cws cws cws cws detcin detcin min cwsbos cwsbos cwsbos nyy detnyy nyydet 2426 1:10 2725 4:10 2628 7:10 2927 12:10 2830 10:10 29 10:10 -

HELLO GOODYEAR! Sunday’S Players at the 2013 Cleveland Indians 1,500 More

The Official News of the 2013 Cleveland Indians Fantasy Camp Sunday, January 20, 2013 HELLO GOODYEAR! Sunday’s Players at the 2013 Cleveland Indians 1,500 more. It is the Cactus League Lineup Fantasy Camp are set for game action spring training home of the Tribe and the and a baseball-packed week of fun. Cincinnati Reds, and their Arizona Sum- Happy to shake the cold and snow of mer League teams during the season. winter, these boys of summer are ready To every Indians fan, spring training 7:00 - 8:25 Breakfast at the complex to bask in the sun and blue sky glory of is a time of renewal. A time when the Goodyear, Arizona, at the Indians player spirit of the heart overtakes the mind and development complex and spring train- body to make us young and wide-eyed, 7:30 - 8:00 Bat selection ing home, Goodyear Ballpark. with visions of bringing the World Series Nestled in the shadows of the Estrella trophy back to the best location in the 8:30 - 8:55 Stretching on the field Mountains with its scenic views, desert nation. vistas, lakes, and golf courses, Goodyear Now it's your turn to swing the bat, 9:00 -10:15 Clinics on Fields is one of the fastest growing cities in the flash the leather, strike 'em out with your Valley, with a population over 65,000. wicked curveball, and create your own 10:15 -11:30 Batting practice on all fields Just twenty minutes west of downtown piece of Cleveland Indians history. -

1964 Topps Baseball Checklist

1964 Topps Baseball Checklist 1 Dick Ellswo1963 NL ERA Leaders Bob Friend Sandy Koufax 2 Camilo Pasc1963 AL ERA Leaders Gary Peters Juan Pizarro 3 Sandy Kouf1963 NL Pitching Leaders Jim Maloney Juan Marichal Warren Spahn 4 Jim Bouton1963 AL Pitching Leaders Whitey Ford Camilo Pascual 5 Don Drysda1963 NL Strikeout Leaders Sandy Koufax Jim Maloney 6 Jim Bunnin 1963 AL Strikeout Leaders Camilo Pascual Dick Stigman 7 Hank Aaron1963 NL Batting Leaders Roberto Clemente Tommy Davis Dick Groat 8 Al Kaline 1963 AL Batting Leaders Rich Rollins Carl Yastrzemski 9 Hank Aaron1963 NL Home Run Leaders Orlando Cepeda Willie Mays Willie McCovey 10 Bob Allison1963 AL Home Run Leaders Harmon Killebrew Dick Stuart 11 Hank Aaron1963 NL RBI Leaders Ken Boyer Bill White 12 Al Kaline 1963 AL RBI Leaders Harmon Killebrew Dick Stuart 13 Hoyt Wilhelm 14 Dick Nen Dodgers Rookies Nick Willhite 15 Zoilo Versalles Compliments of BaseballCardBinders.com© 2019 1 16 John Boozer 17 Willie Kirkland 18 Billy O'Dell 19 Don Wert 20 Bob Friend 21 Yogi Berra 22 Jerry Adair 23 Chris Zachary 24 Carl Sawatski 25 Bill Monbouquette 26 Gino Cimoli 27 New York Mets Team Card 28 Claude Osteen 29 Lou Brock 30 Ron Perranoski 31 Dave Nicholson 32 Dean Chance 33 Sammy EllisReds Rookies Mel Queen 34 Jim Perry 35 Eddie Mathews 36 Hal Reniff 37 Smoky Burgess 38 Jimmy Wynn 39 Hank Aguirre 40 Dick Groat 41 Willie McCoFriendly Foes Leon Wagner 42 Moe Drabowsky 43 Roy Sievers 44 Duke Carmel 45 Milt Pappas 46 Ed Brinkman 47 Jesus Alou Giants Rookies Ron Herbel 48 Bob Perry 49 Bill Henry 50 Mickey -

PERO ®TED ® TRUDON Eumtttg Hrraui

/ FRTOAT, APRIL 2, 1965 f A G B TWENTY-POUk Eapmttg Cancer Canvasser at Door Today or Tomorrow—Give case of two parked oars bolBK Audition* wiU be held Tues Sunset Rebekah Lodge will bangedalnto by an.unknown meet Monday at 8 p.m. at Odd Women to See TIME TO GET GROWING Arsngc Daily Net Press Rim The WeRtber day at 7 pjrt’. at Bailey Audi Police Arrests passing vehicle last night about Forecast ed U, 8. Wealhsr Awo About Town torium of Manchester High FeUowa Hall. Mrs. Clyde Beck Eye Bank Film 10:30 on Charter Oak S t near For the Week Ekided School for high school etiidents with will head a refreshment Spruce S t The two cars, parked March 27, 1888 H)« ZlpMT Club will qwnsor wMiing to participate in a committee. James W. Havens, 17, of 31 on Charter Oak S t facing east TURF F O O D Mrs. Lois Stevens, executive Clear and cold teolght, law A card party Saturday night variety show May If ahd 16 at jvuirifnaMarshall Rd„ was charged with art cwned by Richard L. Trex- 2«e. Sonny and pleasant tsesi at 8 o’clock at the club house on 8 p.m. at the auditorium.' Pro Dr. Max Dubrow, direct larceny sr *16 and ordered to director of the Visual Research ler of 30 Goalee Dr. and the and CRABGRASS KILLER 14,125 Eumtttg HrraUi row. High • • to 88. Bralnard Place. ceeds of the show will benefit the New York Aasoci n 10 ^ appear the Manchester ses- Foundation of OonnecUcut, will Launder Rite Textile Oorp. -

Syria Joins UAR-Jordan in UN Cease-Fire

WEATHER Cloudy 1JWATER High Tide Bravo 9:23 am 4 k Low Tide 11:23 pm 5:40 am 3:34 pm U. S. NAVAL BASE, GUANTANAMO BAY, CUBA Phone 9-5247 Friday Date June 9, 1967 Radio (1340) TV (Ch. 8) * Israelis Hit US Ship By Accident WASHINGTON (AP) ISRAELI WAR- Syria Joins UAR-Jordan PLANES AND boats mistakenly strafed and torpedoed a U.S. Navy ship off the Egyptian coast yesterday, killing In 10 UN Cease-Fire Americans and wounding 75 BEIRUT, LEBANON (AP) others, the Pentagon SYRIA ANNOUNCED EARLY Friday it has said. accepted a cease-fire with Israel. The United States promptly The announcement was made by Radio Damascus protested the incident, which decision which said the- was taken at an emergency session of came a few hours before Egypt Ministers. the Council of notified the United Nations it was ready to accept a At the same time the Damas- cease- Sword Passes cus Radio fire heralding an end to the On made its first ref- four-day old Middle East erance to acceptance of the War. cease-fire The Pentagon said 15 of the by Egypt. wounded were in critical Syria, which had been the con- most vociferous dition. Two destroyers were in support of speeding through war, was the last of the four the Mediter- principal ranean to aid the damaged ves- belligerents to ac- sel, a lightly armed cept the cease-fire call of research the ship that had been helping U.N. Security Council. relay communications from U.S. installations in the Mideast. -

Daily Iowan (Iowa City, Iowa), 1968-06-27

House Approves, Forecast After a Fashion, ail Iowan Serving the University of Iowa and the People of Iowa City $17 Billion Bill Established in 1868 10 centa a copy Iowa City, [owa S22e-Tbunday, JWIe !'T, lSI&I Was th, WASHINGTON t.1'! - The House voted a normany . battered $17 billion appropriation for a Day,~ group of socia l. medical and educational single.. agencies Wednesday night after slashing poverty lunds $100 million - and then re WOlllen's lin ~ versing the vote and restoring the money. Congressional Reaction The final amount approved for the Office of Economic Opportuity, which administers the poverty program, was $307 million be low President Johnson's budget rerom mendation due to previous cuts by Ibe House Appropriations Committee. To High Court Bid Mixed After seesaw votes on a number of edu cational items, the total allowed the Of· WASHINGTON III - Opposition to two a president appointing a chief justice in lions, dedined comment But the nexl fice of Education fell $414.5 million below his ranlI:in, Democrat, Sen. L. McClell Johnson's recommendations. presidential appointmEnts to the Supreme the waning months of term, indicat John Key votes came under the eyes of a Court was voiced Wednesday nithl by at ed, how~ver, that he and others may fi· an of Arkansas, said be doell not expect delegation of the Poor People's Campaign least one congre man, who said he had lilmster agamst conftrmotJons. tile nomination of a new chief justice in the House gallery. But the group of considerable support in the Senate and If the Dominal:on are broutht up, he "will uil tb:rouIh" the Senate_ about 45 left quietly before final passage raised the possibility of filibusters aeainsl teClellan said ill view ollhe many con which came on a voice vote. -

VETS SET PACE for ‘14 CAMPAIGN Sunday’S a Record Number of Veterans Are Set to the World, Including My Brothers

The Official News of the 2014 Cleveland Indians Fantasy Camp Sunday, January 19, 2014 VETS SET PACE FOR ‘14 CAMPAIGN Sunday’s A record number of veterans are set to the world, including my brothers. Lineup take the field for a week of fun at the “We come from different places, 2014 Cleveland Indians Fantasy Camp. backgrounds, political and religious af- Sixty-nine. filiations. We range in age from 30 to 80 Incredibly, that’s the number of veter- and in ability from none to plenty. We 7:00 - 8:25 Breakfast at the complex ans who have attended at least one fan- have in common only a love of baseball and the Cleveland Indians. And I cannot tasy camp before this year. The vets will 7:30 - 8:00 Bat selection be joined by twenty-six rookies, who wait to see all of them.” will get their first taste of what is argua- Back for his eighth camp is Chris’ bly the best experience a Cleveland Indi- brother, Bill Schubert. 8:30 - 8:55 Stretching on the field ans fan can have. “My favorite thing about fantasy Is it the fabulous Goodyear, AZ state- camp is spending a week with my 9:00 -10:15 Clinics on Fields of-the-art player development complex brother, where the only things we have that keeps them coming back? Some- to worry about is, can we get a base hit, 10:15 -11:30 Batting practice on all fields thing else? or throw a 3-2 strike, or catch a routine “I love baseball.