DOM-Based Content Extraction of HTML Documents

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

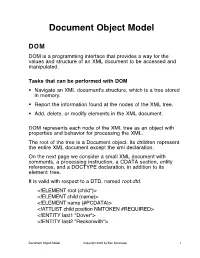

Document Object Model

Document Object Model DOM DOM is a programming interface that provides a way for the values and structure of an XML document to be accessed and manipulated. Tasks that can be performed with DOM . Navigate an XML document's structure, which is a tree stored in memory. Report the information found at the nodes of the XML tree. Add, delete, or modify elements in the XML document. DOM represents each node of the XML tree as an object with properties and behavior for processing the XML. The root of the tree is a Document object. Its children represent the entire XML document except the xml declaration. On the next page we consider a small XML document with comments, a processing instruction, a CDATA section, entity references, and a DOCTYPE declaration, in addition to its element tree. It is valid with respect to a DTD, named root.dtd. <!ELEMENT root (child*)> <!ELEMENT child (name)> <!ELEMENT name (#PCDATA)> <!ATTLIST child position NMTOKEN #REQUIRED> <!ENTITY last1 "Dover"> <!ENTITY last2 "Reckonwith"> Document Object Model Copyright 2005 by Ken Slonneger 1 Example: root.xml <?xml version="1.0" encoding="UTF-8"?> <!DOCTYPE root SYSTEM "root.dtd"> <!-- root.xml --> <?DomParse usage="java DomParse root.xml"?> <root> <child position="first"> <name>Eileen &last1;</name> </child> <child position="second"> <name><![CDATA[<<<Amanda>>>]]> &last2;</name> </child> <!-- Could be more children later. --> </root> DOM imagines that this XML information has a document root with four children: 1. A DOCTYPE declaration. 2. A comment. 3. A processing instruction, whose target is DomParse. 4. The root element of the document. The second comment is a child of the root element. -

Exploring and Extracting Nodes from Large XML Files

Exploring and Extracting Nodes from Large XML Files Guy Lapalme January 2010 Abstract This article shows how to deal simply with large XML files that cannot be read as a whole in memory and for which the usual XML exploration and extraction mechanisms cannot work or are very inefficient in processing time. We define the notion of a skeleton document that is maintained as the file is read using a pull- parser. It is used for showing the structure of the document and for selecting parts of it. 1 Introduction XML has been developed to facilitate the annotation of information to be shared between computer systems. Because it is intended to be easily generated and parsed by computer systems on all platforms, its format is based on character streams rather than internal binary ones. Being character-based, it also has the nice property of being readable and editable by humans using standard text editors. XML is based on a uniform, simple and yet powerful model of data organization: the generalized tree. Such a tree is defined as either a single element or an element having other trees as its sub-elements called children. This is the same model as the one chosen for the Lisp programming language 50 years ago. This hierarchical model is very simple and allows a simple annotation of the data. The left part of Figure 1 shows a very small XML file illustrating the basic notation: an arbitrary name between < and > symbols is given to a node of a tree. This is called a start-tag. -

WEB-BASED ANNOTATION and COLLABORATION Electronic Document Annotation Using a Standards-Compliant Web Browser

WEB-BASED ANNOTATION AND COLLABORATION Electronic Document Annotation Using a Standards-compliant Web Browser Trev Harmon School of Technology, Brigham Young University, 265 CTB, Provo, Utah, USA Keywords: Annotation, collaboration, web-based, e-learning. Abstract: The Internet provides a powerful medium for communication and collaboration through web-based applications. However, most web-based annotation and collaboration applications require additional software, such as applets, plug-ins, and extensions, in order to work correctly with the web browsers typically found on today’s computers. This in combination with the ever-growing number of file formats poses an obstacle to the wide-scale deployment of annotation and collaboration systems across the heterogeneous networks common in the academic and corporate worlds. In order to address these issues, a web-based system was developed that allows for freeform (handwritten) and typed annotation of over twenty common file formats via a standards-compliant web browser without the need of additional software. The system also provides a multi-tiered security architecture that allows authors control over who has access to read and annotate their documents. While initially designed for use in academia, flexibility within the system allows it to be used for many annotation and collaborative tasks such as distance-learning, collaborative projects, online discussion and bulletin boards, graphical wikis, and electronic grading. The open-source nature of the system provides the opportunity for its continued development and extension. 1 INTRODUCTION did not foresee the broad spectrum of uses expected of their technologies by today’s users. While serving While the telegraph and telephone may have cracked this useful purpose, such add-on software can open the door of instantaneous, worldwide become problematic in some circumstances because communication, the Internet has flung it wide open. -

Document Object Model

Document Object Model CITS3403: Agile Web Development Semester 1, 2021 Introduction • We’ve seen JavaScript core – provides a general scripting language – but why is it so useful for the web? • Client-side JavaScript adds collection of objects, methods and properties that allow scripts to interact with HTML documents dynamic documents client-side programming • This is done by bindings to the Document Object Model (DOM) – “The Document Object Model is a platform- and language-neutral interface that will allow programs and scripts to dynamically access and update the content, structure and style of documents.” – “The document can be further processed and the results of that processing can be incorporated back into the presented page.” • DOM specifications describe an abstract model of a document – API between HTML document and program – Interfaces describe methods and properties – Different languages will bind the interfaces to specific implementations – Data are represented as properties and operations as methods • https://www.w3schools.com/js/js_htmldom.asp The DOM Tree • DOM API describes a tree structure – reflects the hierarchy in the XTML document – example... <html xmlns = "http://www.w3.org/1999/xhtml"> <head> <title> A simple document </title> </head> <body> <table> <tr> <th>Breakfast</th> <td>0</td> <td>1</td> </tr> <tr> <th>Lunch</th> <td>1</td> <td>0</td> </tr> </table> </body> </html> Execution Environment • The DOM tree also includes nodes for the execution environment in a browser • Window object represents the window displaying a document – All properties are visible to all scripts – Global variables are properties of the Window object • Document object represents the HTML document displayed – Accessed through document property of Window – Property arrays for forms, links, images, anchors, … • The Browser Object Model is sometimes used to refer to bindings to the browser, not specific to the current page (document) being rendered. -

Chapter 10 Document Object Model and Dynamic HTML

Chapter 10 Document Object Model and Dynamic HTML The term Dynamic HTML, often abbreviated as DHTML, refers to the technique of making Web pages dynamic by client-side scripting to manipulate the document content and presen- tation. Web pages can be made more lively, dynamic, or interactive by DHTML techniques. With DHTML you can prescribe actions triggered by browser events to make the page more lively and responsive. Such actions may alter the content and appearance of any parts of the page. The changes are fast and e±cient because they are made by the browser without having to network with any servers. Typically the client-side scripting is written in Javascript which is being standardized. Chapter 9 already introduced Javascript and basic techniques for making Web pages dynamic. Contrary to what the name may suggest, DHTML is not a markup language or a software tool. It is a technique to make dynamic Web pages via client-side programming. In the past, DHTML relies on browser/vendor speci¯c features to work. Making such pages work for all browsers requires much e®ort, testing, and unnecessarily long programs. Standardization e®orts at W3C and elsewhere are making it possible to write standard- based DHTML that work for all compliant browsers. Standard-based DHTML involves three aspects: 447 448 CHAPTER 10. DOCUMENT OBJECT MODEL AND DYNAMIC HTML Figure 10.1: DOM Compliant Browser Browser Javascript DOM API XHTML Document 1. Javascript|for cross-browser scripting (Chapter 9) 2. Cascading Style Sheets (CSS)|for style and presentation control (Chapter 6) 3. Document Object Model (DOM)|for a uniform programming interface to access and manipulate the Web page as a document When these three aspects are combined, you get the ability to program changes in Web pages in reaction to user or browser generated events, and therefore to make HTML pages more dynamic. -

Ch08-Dom.Pdf

Web Programming Step by Step Chapter 8 The Document Object Model (DOM) Except where otherwise noted, the contents of this presentation are Copyright 2009 Marty Stepp and Jessica Miller. 8.1: Global DOM Objects 8.1: Global DOM Objects 8.2: DOM Element Objects 8.3: The DOM Tree The six global DOM objects Every Javascript program can refer to the following global objects: name description document current HTML page and its content history list of pages the user has visited location URL of the current HTML page navigator info about the web browser you are using screen info about the screen area occupied by the browser window the browser window The window object the entire browser window; the top-level object in DOM hierarchy technically, all global code and variables become part of the window object properties: document , history , location , name methods: alert , confirm , prompt (popup boxes) setInterval , setTimeout clearInterval , clearTimeout (timers) open , close (popping up new browser windows) blur , focus , moveBy , moveTo , print , resizeBy , resizeTo , scrollBy , scrollTo The document object the current web page and the elements inside it properties: anchors , body , cookie , domain , forms , images , links , referrer , title , URL methods: getElementById getElementsByName getElementsByTagName close , open , write , writeln complete list The location object the URL of the current web page properties: host , hostname , href , pathname , port , protocol , search methods: assign , reload , replace complete list The navigator object information about the web browser application properties: appName , appVersion , browserLanguage , cookieEnabled , platform , userAgent complete list Some web programmers examine the navigator object to see what browser is being used, and write browser-specific scripts and hacks: if (navigator.appName === "Microsoft Internet Explorer") { .. -

XPATH in NETCONF and YANG Table of Contents

XPATH IN NETCONF AND YANG Table of Contents 1. Introduction ............................................................................................................3 2. XPath 1.0 Introduction ...................................................................................3 3. The Use of XPath in NETCONF ...............................................................4 4. The Use of XPath in YANG .........................................................................5 5. XPath and ConfD ...............................................................................................8 6. Conclusion ...............................................................................................................9 7. Additional Resourcese ..................................................................................9 2 XPath in NETCONF and YANG 1. Introduction XPath is a powerful tool used by NETCONF and YANG. This application note will help you to understand and utilize this advanced feature of NETCONF and YANG. This application note gives a brief introduction to XPath, then describes how XPath is used in NETCONF and YANG, and finishes with a discussion of XPath in ConfD. The XPath 1.0 standard was defined by the W3C in 1999. It is a language which is used to address the parts of an XML document and was originally design to be used by XML Transformations. XPath gets its name from its use of path notation for navigating through the hierarchical structure of an XML document. Since XML serves as the encoding format for NETCONF and a data model defined in YANG is represented in XML, it was natural for NETCONF and XML to utilize XPath. 2. XPath 1.0 Introduction XML Path Language, or XPath 1.0, is a W3C recommendation first introduced in 1999. It is a language that is used to address and match parts of an XML document. XPath sees the XML document as a tree containing different kinds of nodes. The types of nodes can be root, element, text, attribute, namespace, processing instruction, and comment nodes. -

Basic DOM Scripting Objectives

Basic DOM scripting Objectives Applied Write code that uses the properties and methods of the DOM and DOM HTML nodes. Write an event handler that accesses the event object and cancels the default action. Write code that preloads images. Write code that uses timers. Objectives (continued) Knowledge Describe these properties and methods of the DOM Node type: nodeType, nodeName, nodeValue, parentNode, childNodes, firstChild, hasChildNodes. Describe these properties and methods of the DOM Document type: documentElement, getElementsByTagName, getElementsByName, getElementById. Describe these properties and methods of the DOM Element type: tagName, hasAttribute, getAttribute, setAttribute, removeAttribute. Describe the id and title properties of the DOM HTMLElement type. Describe the href property of the DOM HTMLAnchorElement type. Objectives (continued) Describe the src property of the DOM HTMLImageElement type. Describe the disabled property and the focus and blur methods of the DOM HTMLInputElement and HTMLButtonElement types. Describe these timer methods: setTimeout, setInterval, clearTimeout, clearInterval. The XHTML for a web page <!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd"> <html xmlns="http://www.w3.org/1999/xhtml"> <head> <title>Image Gallery</title> <link rel="stylesheet" type="text/css" href="image_gallery.css"/> </head> <body> <div id="content"> <h1 class="center">Fishing Image Gallery</h1> <p class="center">Click one of the links below to view -

Cascading Style Sheet Web Tool

CASCADING STYLE SHEET WEB TOOL _______________ A Thesis Presented to the Faculty of San Diego State University _______________ In Partial Fulfillment of the Requirements for the Degree Master of Science in Computer Science _______________ by Kalthoum Y. Adam Summer 2011 iii Copyright © 2011 by Kalthoum Y. Adam All Rights Reserved iv DEDICATION I dedicate this work to my parents who taught me not to give up on fulfilling my dreams. To my faithful husband for his continued support and motivation. To my sons who were my great inspiration. To all my family and friends for being there for me when I needed them most. v ABSTRACT OF THE THESIS Cascading Style Sheet Web Tool by Kalthoum Y. Adam Master of Science in Computer Science San Diego State University, 2011 Cascading Style Sheet (CSS) is a style language that separates the style of a web document from its content. It is used to customize the layout and control the appearance of web pages written by markup languages. CSS saves time while developing the web page by applying the same layout and style to all pages in the website. Furthermore, it makes the website easy to maintain by just editing one file. In this thesis, we developed a CSS web tool that is intended to web developers who will hand-code their HTML and CSS to have a complete control over the web page layout and style. The tool is a form wizard that helps developers through a user-friendly interface to create a website template with a valid CSS and XHTML code. -

Node.Js: Building for Scalability with Server-Side Javascript

#141 CONTENTS INCLUDE: n What is Node? Node.js: Building for Scalability n Where does Node fit? n Installation n Quick Start with Server-Side JavaScript n Node Ecosystem n Node API Guide and more... By Todd Eichel Visit refcardz.com Consider a food vending WHAT IS NODE? truck on a city street or at a festival. A food truck In its simplest form, Node is a set of libraries for writing high- operating like a traditional performance, scalable network programs in JavaScript. Take a synchronous web server look at this application that will respond with the text “Hello would have a worker take world!” on every HTTP request: an order from the first customer in line, and then // require the HTTP module so we can create a server object the worker would go off to var http = require(‘http’); prepare the order while the customer waits at the window. Once Get More Refcardz! Refcardz! Get More // Create an HTTP server, passing a callback function to be the order is complete, the worker would return to the window, // executed on each request. The callback function will be give it to the customer, and take the next customer’s order. // passed two objects representing the incoming HTTP // request and our response. Contrast this with a food truck operating like an asynchronous var helloServer = http.createServer(function (req, res) { web server. The workers in this truck would take an order from // send back the response headers with an HTTP status the first customer in line, issue that customer an order number, // code of 200 and an HTTP header for the content type res.writeHead(200, {‘Content-Type’: ‘text/plain’}); and have the customer stand off to the side to wait while the order is prepared. -

Abstracts Ing XML Information

394 TUGboat, Volume 20 (1999), No. 4 new precise meanings assigned old familar words.Such pairings are indispensable to those who must work in both languages — or those who must translate from one into the other! The specification covers 90 pages, the introduction another 124.And this double issue has still more: a comparison between SGML and XML, an introduction to Document Object Models (interfaces for XML doc- uments), generating MathML in Omega, a program to generate MathML-encoded mathematics, and finally, the issue closes with a translation of the XML FAQ (v.1.5, June 1999), maintained by Peter Flynn. In all, over 300 pages devoted to XML. Michel Goossens, XML et XSL : unnouveau d´epart pour le web [XML and XSL:Anewventure for the Web]; pp. 3–126 Late in1996, the W3C andseveral major soft- ware vendors decided to define a markup language specifically optimized for the Web: XML (eXtensible Markup Language) was born. It is a simple dialect of SGML, which does not use many of SGML’s seldom- used and complex functions, and does away with most limitations of HTML. After anintroduction to the XML standard, we describe XSL (eXtensible Stylesheet Language) for presenting and transform- Abstracts ing XML information. Finally we say a few words about other recent developments in the XML arena. [Author’s abstract] LesCahiersGUTenberg As mentioned in the editorial, this article is intended Contents of Double Issue 33/34 to be read in conjunction with the actual specification, (November 1999) provided later in the same issue. Michel Goossens, Editorial´ : XML ou la d´emocratisationdu web [Editorial: XML or, the Sarra Ben Lagha, Walid Sadfi and democratisationof the web]; pp. -

Security Considerations Around the Usage of Client-Side Storage Apis

Security considerations around the usage of client-side storage APIs Stefano Belloro (BBC) Alexios Mylonas (Bournemouth University) Technical Report No. BUCSR-2018-01 January 12 2018 ABSTRACT Web Storage, Indexed Database API and Web SQL Database are primitives that allow web browsers to store information in the client in a much more advanced way compared to other techniques such as HTTP Cookies. They were originally introduced with the goal of enhancing the capabilities of websites, however, they are often exploited as a way of tracking users across multiple sessions and websites. This work is divided in two parts. First, it quantifies the usage of these three primitives in the context of user tracking. This is done by performing a large-scale analysis on the usage of these techniques in the wild. The results highlight that code snippets belonging to those primitives can be found in tracking scripts at a surprising high rate, suggesting that user tracking is a major use case of these technologies. The second part reviews of the effectiveness of the removal of client-side storage data in modern browsers. A web application, built for specifically for this study, is used to highlight that it is often extremely hard, if not impossible, for users to remove personal data stored using the three primitives considered. This finding has significant implications, because those techniques are often uses as vector for cookie resurrection. CONTENTS Abstract ........................................................................................................................