Critical CSS Rules: Decreasing Time to First Render by Inlining CSS Rules for Over-the-Fold Elements

Total Pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


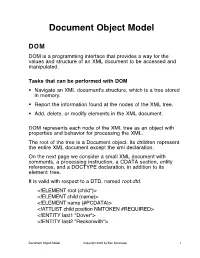

Document Object Model

Document Object Model DOM DOM is a programming interface that provides a way for the values and structure of an XML document to be accessed and manipulated. Tasks that can be performed with DOM . Navigate an XML document's structure, which is a tree stored in memory. Report the information found at the nodes of the XML tree. Add, delete, or modify elements in the XML document. DOM represents each node of the XML tree as an object with properties and behavior for processing the XML. The root of the tree is a Document object. Its children represent the entire XML document except the xml declaration. On the next page we consider a small XML document with comments, a processing instruction, a CDATA section, entity references, and a DOCTYPE declaration, in addition to its element tree. It is valid with respect to a DTD, named root.dtd. <!ELEMENT root (child*)> <!ELEMENT child (name)> <!ELEMENT name (#PCDATA)> <!ATTLIST child position NMTOKEN #REQUIRED> <!ENTITY last1 "Dover"> <!ENTITY last2 "Reckonwith"> Document Object Model Copyright 2005 by Ken Slonneger 1 Example: root.xml <?xml version="1.0" encoding="UTF-8"?> <!DOCTYPE root SYSTEM "root.dtd"> <!-- root.xml --> <?DomParse usage="java DomParse root.xml"?> <root> <child position="first"> <name>Eileen &last1;</name> </child> <child position="second"> <name><![CDATA[<<<Amanda>>>]]> &last2;</name> </child> <!-- Could be more children later. --> </root> DOM imagines that this XML information has a document root with four children: 1. A DOCTYPE declaration. 2. A comment. 3. A processing instruction, whose target is DomParse. 4. The root element of the document. The second comment is a child of the root element. -

Re-Architecting Web and Mobile Information Access for Emerging Regions

By Jay Chen. A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy, Department of Mathematics, Courant Institute of Mathematical Sciences, New York University, September 2011. Professor Lakshminarayanan Subramanian. © Jay Chen, All Rights Reserved, 2011.

Acknowledgments: I would like to start by expressing my deepest gratitude to my advisor, Lakshminarayanan Subramanian (or just “Lakshmi”). It was Lakshmi who set me on the path toward my eventual area of research. Lakshmi has always been generous with his time, and never short on ideas or enthusiasm. Without Lakshmi’s courage to pursue the research that inspires him, I would not have found my own passion: to build systems that benefit people - as many people as much as possible by inventing ways to bring technology to people living outside of the privileged regions of the world.

Contributors to this dissertation - This thesis is based on research that I performed over the past five years with many colleagues contributing directly to the work in this dissertation. Many people helped me along the way whose help I could not have done without. The RuralCafe user study would not have been possible without the help of Saleema Amershi and Aditya Dhananjay (Chapter 6.6). Our low bandwidth transport modeling and analysis (Chapter 3.1) was an effort largely attributable to Janardhan Iyengar and long discussions with Bryan Ford. Russell Power implemented the feature reduction algorithm for CIPs (Chapter 7.2.2) in his “spare time”. Our ELF deployments (Chapters 2.2 and 5.3) were only possible with help from David Hutchful.

Exploring and Extracting Nodes from Large XML Files

Guy Lapalme, January 2010.

Abstract: This article shows how to deal simply with large XML files that cannot be read as a whole in memory and for which the usual XML exploration and extraction mechanisms cannot work or are very inefficient in processing time. We define the notion of a skeleton document that is maintained as the file is read using a pull-parser. It is used for showing the structure of the document and for selecting parts of it.

1 Introduction. XML has been developed to facilitate the annotation of information to be shared between computer systems. Because it is intended to be easily generated and parsed by computer systems on all platforms, its format is based on character streams rather than internal binary ones. Being character-based, it also has the nice property of being readable and editable by humans using standard text editors. XML is based on a uniform, simple and yet powerful model of data organization: the generalized tree. Such a tree is defined as either a single element or an element having other trees as its sub-elements called children. This is the same model as the one chosen for the Lisp programming language 50 years ago. This hierarchical model is very simple and allows a simple annotation of the data. The left part of Figure 1 shows a very small XML file illustrating the basic notation: an arbitrary name between < and > symbols is given to a node of a tree. This is called a start-tag.
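To illustrate the general idea of summarizing structure while reading a file incrementally, here is a minimal sketch using `xml.etree.ElementTree.iterparse` from the Python standard library. It is not the article's skeleton-document algorithm; the function name `skeleton` and the path-counting summary are assumptions made for this example.

```python
import io
import xml.etree.ElementTree as ET
from collections import Counter


def skeleton(source):
    """Count how often each element path occurs while streaming the input."""
    counts = Counter()
    path = []  # stack of tags from the root down to the current element
    for event, elem in ET.iterparse(source, events=("start", "end")):
        if event == "start":
            path.append(elem.tag)
            counts["/" + "/".join(path)] += 1
        else:
            path.pop()
            # Drop the text and children of an element once it is complete.
            # The emptied element stays attached to its parent, so memory use
            # is reduced rather than held strictly constant.
            elem.clear()
    return counts


# A tiny in-memory stand-in for a large file (hypothetical sample data).
sample = io.BytesIO(
    b"<root><child><name>A</name></child><child><name>B</name></child></root>"
)
result = skeleton(sample)
for element_path, n in sorted(result.items()):
    print(element_path, n)
```

Passing a filename instead of the `BytesIO` object works the same way, since `iterparse` accepts either a path or a file object.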

SWAROVsky: Optimizing Resource Loading for Mobile Web Browsing

IEEE Transactions on Mobile Computing, Vol. XX, No. XX, XXXX 201X. Xuanzhe Liu, Member, IEEE, Yun Ma, Xinyang Wang, Yunxin Liu, Senior Member, IEEE, Tao Xie, Senior Member, IEEE, and Gang Huang, Senior Member, IEEE.

Abstract: Imperfect Web resource loading prevents mobile Web browsing from providing satisfactory user experience. In this article, we design and implement the SWAROVsky system to address three main issues of current inefficient Web resource loading: (1) on-demand and thus slow loading of sub-resources of webpages; (2) duplicated loading of resources with different URLs but the same content; and (3) redundant loading of the same resource due to improper cache configurations. SWAROVsky employs a dual-proxy architecture that comprises a remote cloud-side proxy and a local proxy on mobile devices. The remote proxy proactively loads webpages from their original Web servers and maintains a resource loading graph for every single webpage. Based on the graph, the remote proxy is capable of deciding which resources are “really” needed for the webpage and their loading orders, and thus can synchronize these needed resources with the local proxy of a client efficiently and timely. The local proxy also runs an intelligent and light-weight algorithm to identify resources with different URLs but the same content, and thus can avoid duplicated downloading of the same content via network. Our system can be used with existing Web browsers and Web servers, and does not break the normal semantics of a webpage. Evaluations with 50 websites show that on average our system can reduce the page load time by 43.1% and the network data transmission by 57.6%, while imposing marginal system overhead.
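The abstract does not say how the local proxy recognizes identical content behind different URLs. One common way to detect such duplicates is to compare content digests; the sketch below uses SHA-256 for that purpose. It is a stand-in technique, not the paper's algorithm, and the function name `dedupe` and the example URLs are hypothetical.

```python
import hashlib


def dedupe(resources):
    """Map each URL to the first URL seen that served identical bytes."""
    first_url_by_digest = {}
    canonical = {}
    for url, body in resources:
        digest = hashlib.sha256(body).hexdigest()
        # setdefault records the URL only the first time a digest appears.
        canonical[url] = first_url_by_digest.setdefault(digest, url)
    return canonical


# Hypothetical fetched resources: two URLs serve the same script.
fetched = [
    ("https://cdn-a.example/lib.js", b"function f(){}"),
    ("https://cdn-b.example/lib.js?v=2", b"function f(){}"),
    ("https://cdn-a.example/app.css", b"body{margin:0}"),
]
canonical = dedupe(fetched)
for url, target in canonical.items():
    print(url, "->", target)
```

A URL that maps to a different canonical URL could be served from the copy already held locally instead of being downloaded again.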

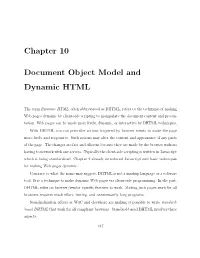

Chapter 10 Document Object Model and Dynamic HTML

The term Dynamic HTML, often abbreviated as DHTML, refers to the technique of making Web pages dynamic by client-side scripting to manipulate the document content and presentation. Web pages can be made more lively, dynamic, or interactive by DHTML techniques. With DHTML you can prescribe actions triggered by browser events to make the page more lively and responsive. Such actions may alter the content and appearance of any parts of the page. The changes are fast and efficient because they are made by the browser without having to network with any servers. Typically the client-side scripting is written in Javascript, which is being standardized. Chapter 9 already introduced Javascript and basic techniques for making Web pages dynamic.

Contrary to what the name may suggest, DHTML is not a markup language or a software tool. It is a technique to make dynamic Web pages via client-side programming. In the past, DHTML relied on browser/vendor specific features to work. Making such pages work for all browsers required much effort, testing, and unnecessarily long programs. Standardization efforts at W3C and elsewhere are making it possible to write standard-based DHTML that works for all compliant browsers. Standard-based DHTML involves three aspects:

[Figure 10.1: DOM Compliant Browser (Browser, Javascript, DOM API, XHTML Document)]

1. Javascript, for cross-browser scripting (Chapter 9)
2. Cascading Style Sheets (CSS), for style and presentation control (Chapter 6)
3. Document Object Model (DOM), for a uniform programming interface to access and manipulate the Web page as a document

When these three aspects are combined, you get the ability to program changes in Web pages in reaction to user or browser generated events, and therefore to make HTML pages more dynamic.

Ch08-Dom.Pdf

Web Programming Step by Step, Chapter 8: The Document Object Model (DOM). Except where otherwise noted, the contents of this presentation are Copyright 2009 Marty Stepp and Jessica Miller.

Sections: 8.1: Global DOM Objects; 8.2: DOM Element Objects; 8.3: The DOM Tree

8.1: Global DOM Objects

The six global DOM objects. Every Javascript program can refer to the following global objects:

| name | description |
| --- | --- |
| document | current HTML page and its content |
| history | list of pages the user has visited |
| location | URL of the current HTML page |
| navigator | info about the web browser you are using |
| screen | info about the screen area occupied by the browser |
| window | the browser window |

The window object: the entire browser window; the top-level object in DOM hierarchy. Technically, all global code and variables become part of the window object. Properties: document, history, location, name. Methods: alert, confirm, prompt (popup boxes); setInterval, setTimeout, clearInterval, clearTimeout (timers); open, close (popping up new browser windows); blur, focus, moveBy, moveTo, print, resizeBy, resizeTo, scrollBy, scrollTo.

The document object: the current web page and the elements inside it. Properties: anchors, body, cookie, domain, forms, images, links, referrer, title, URL. Methods: getElementById, getElementsByName, getElementsByTagName; close, open, write, writeln.

The location object: the URL of the current web page. Properties: host, hostname, href, pathname, port, protocol, search. Methods: assign, reload, replace.

The navigator object: information about the web browser application. Properties: appName, appVersion, browserLanguage, cookieEnabled, platform, userAgent. Some web programmers examine the navigator object to see what browser is being used, and write browser-specific scripts and hacks: if (navigator.appName === "Microsoft Internet Explorer") { ..

XPATH in NETCONF and YANG Table of Contents

Table of Contents: 1. Introduction; 2. XPath 1.0 Introduction; 3. The Use of XPath in NETCONF; 4. The Use of XPath in YANG; 5. XPath and ConfD; 6. Conclusion; 7. Additional Resources

1. Introduction

XPath is a powerful tool used by NETCONF and YANG. This application note will help you to understand and utilize this advanced feature of NETCONF and YANG. It gives a brief introduction to XPath, then describes how XPath is used in NETCONF and YANG, and finishes with a discussion of XPath in ConfD. The XPath 1.0 standard was defined by the W3C in 1999. It is a language which is used to address the parts of an XML document and was originally designed to be used by XML Transformations. XPath gets its name from its use of path notation for navigating through the hierarchical structure of an XML document. Since XML serves as the encoding format for NETCONF and a data model defined in YANG is represented in XML, it was natural for NETCONF and YANG to utilize XPath.

2. XPath 1.0 Introduction

XML Path Language, or XPath 1.0, is a W3C recommendation first introduced in 1999. It is a language that is used to address and match parts of an XML document. XPath sees the XML document as a tree containing different kinds of nodes. The types of nodes can be root, element, text, attribute, namespace, processing instruction, and comment nodes.
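As a rough illustration of addressing parts of an XML document with path notation, the sketch below uses `xml.etree.ElementTree` from the Python standard library. Note the assumptions: ElementTree implements only a limited subset of XPath 1.0, and the `interfaces` document is invented for this example rather than taken from NETCONF, YANG, or ConfD.

```python
import xml.etree.ElementTree as ET

# Hypothetical configuration data, loosely shaped like a list of interfaces.
CONFIG = """
<interfaces>
  <interface>
    <name>eth0</name>
    <enabled>true</enabled>
  </interface>
  <interface>
    <name>eth1</name>
    <enabled>false</enabled>
  </interface>
</interfaces>
"""

root = ET.fromstring(CONFIG)

# Path step by step: every <name> under an <interface> child of the root.
all_names = [e.text for e in root.findall("./interface/name")]

# Predicate: keep only interfaces whose <enabled> child has the text 'true'.
enabled_names = [e.text for e in root.findall("./interface[enabled='true']/name")]

print(all_names)
print(enabled_names)
```

A full XPath 1.0 engine would also expose the other node types mentioned above, such as attribute, comment, and processing instruction nodes, through axes and node tests that ElementTree does not provide.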

Basic DOM Scripting Objectives

Objectives

Applied:

1. Write code that uses the properties and methods of the DOM and DOM HTML nodes.
2. Write an event handler that accesses the event object and cancels the default action.
3. Write code that preloads images.
4. Write code that uses timers.

Knowledge:

1. Describe these properties and methods of the DOM Node type: nodeType, nodeName, nodeValue, parentNode, childNodes, firstChild, hasChildNodes.
2. Describe these properties and methods of the DOM Document type: documentElement, getElementsByTagName, getElementsByName, getElementById.
3. Describe these properties and methods of the DOM Element type: tagName, hasAttribute, getAttribute, setAttribute, removeAttribute.
4. Describe the id and title properties of the DOM HTMLElement type.
5. Describe the href property of the DOM HTMLAnchorElement type.
6. Describe the src property of the DOM HTMLImageElement type.
7. Describe the disabled property and the focus and blur methods of the DOM HTMLInputElement and HTMLButtonElement types.
8. Describe these timer methods: setTimeout, setInterval, clearTimeout, clearInterval.

The XHTML for a web page: <!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd"> <html xmlns="http://www.w3.org/1999/xhtml"> <head> <title>Image Gallery</title> <link rel="stylesheet" type="text/css" href="image_gallery.css"/> </head> <body> <div id="content"> <h1 class="center">Fishing Image Gallery</h1> <p class="center">Click one of the links below to view

Node.Js: Building for Scalability with Server-Side Javascript

#141. By Todd Eichel. Contents include: What is Node?; Where does Node fit?; Installation; Quick Start; Node Ecosystem; Node API Guide; and more...

WHAT IS NODE?

In its simplest form, Node is a set of libraries for writing high-performance, scalable network programs in JavaScript. Take a look at this application that will respond with the text “Hello world!” on every HTTP request:

    // require the HTTP module so we can create a server object
    var http = require('http');
    // Create an HTTP server, passing a callback function to be
    // executed on each request. The callback function will be
    // passed two objects representing the incoming HTTP
    // request and our response.
    var helloServer = http.createServer(function (req, res) {
      // send back the response headers with an HTTP status
      // code of 200 and an HTTP header for the content type
      res.writeHead(200, {'Content-Type': 'text/plain'});

Consider a food vending truck on a city street or at a festival. A food truck operating like a traditional synchronous web server would have a worker take an order from the first customer in line, and then the worker would go off to prepare the order while the customer waits at the window. Once the order is complete, the worker would return to the window, give it to the customer, and take the next customer’s order.

Contrast this with a food truck operating like an asynchronous web server. The workers in this truck would take an order from the first customer in line, issue that customer an order number, and have the customer stand off to the side to wait while the order is prepared.

Master Thesis

Speeding Up Mobile Browsers without Infrastructure Support, by Zhen Wang.

Abstract: Mobile browsers are known to be slow. We characterize the performance of mobile browsers and find out that resource loading is the bottleneck. Leveraging an unprecedented set of web usage data collected from 24 iPhone users continuously over one year, we examine the three fundamental, orthogonal approaches to improve resource loading without infrastructure support: caching, prefetching, and speculative loading, which is first proposed and studied in this work. Speculative loading predicts and speculatively loads the subresources needed to open a webpage once its URL is given. We show that while caching and prefetching are highly limited for mobile browsing, speculative loading can be significantly more effective. Empirically, we show that client-only solutions can improve the browser speed by 1.4 seconds on average. We also report the design, realization, and evaluation of speculative loading in a WebKit-based browser called Tempo. On average, Tempo can reduce browser delay by 1 second (~20%).

Acknowledgements: I would like to thank my advisor, Professor Lin Zhong, for his guidance and encouragement during my study and research at Rice University. He has not only given me insightful suggestions, but also helped me to develop the right way to do research. I am also grateful to work with Mansoor Chishtie from Texas Instruments, who supports my research and gives me inspiring advice. I would like to thank Professor Dan Wallach and Professor T. S. Eugene Ng for serving as my thesis committee. Their comments and feedback to this work are of great value.

Node.Js Application Developer's Guide (PDF)

MarkLogic Server, Node.js Application Developer’s Guide. MarkLogic 10, June 2019. Last Revised: 10.0-1, June 2019. Copyright © 2019 MarkLogic Corporation. All rights reserved.

Table of Contents

1.0 Introduction to the Node.js Client API
  1.1 Getting Started
  1.2 Required Software
  1.3 Security Requirements
    1.3.1 Basic Security Requirements
    1.3.2 Controlling Document Access
    1.3.3 Evaluating Requests Against a Different Database
    1.3.4 Evaluating or Invoking Server-Side Code
  1.4 Terms and Definitions
  1.5 Key Concepts and Conventions
    1.5.1 MarkLogic Namespace
    1.5.2 Parameter Passing Conventions


[MS-DOM3C]: Internet Explorer Document Object Model (DOM) Level 3 Core Standards Support Document

Intellectual Property Rights Notice for Open Specifications Documentation

Technical Documentation. Microsoft publishes Open Specifications documentation (“this documentation”) for protocols, file formats, data portability, computer languages, and standards support. Additionally, overview documents cover inter-protocol relationships and interactions.

Copyrights. This documentation is covered by Microsoft copyrights. Regardless of any other terms that are contained in the terms of use for the Microsoft website that hosts this documentation, you can make copies of it in order to develop implementations of the technologies that are described in this documentation and can distribute portions of it in your implementations that use these technologies or in your documentation as necessary to properly document the implementation. You can also distribute in your implementation, with or without modification, any schemas, IDLs, or code samples that are included in the documentation. This permission also applies to any documents that are referenced in the Open Specifications documentation.

No Trade Secrets. Microsoft does not claim any trade secret rights in this documentation.

Patents. Microsoft has patents that might cover your implementations of the technologies described in the Open Specifications documentation. Neither this notice nor Microsoft's delivery of this documentation grants any licenses under those patents or any other Microsoft patents. However, a given Open Specifications document might be covered by the Microsoft Open Specifications Promise or the Microsoft Community Promise. If you would prefer a written license, or if the technologies described in this documentation are not covered by the Open Specifications Promise or Community Promise, as applicable, patent licenses are available by contacting [email protected].