xml2 (April 23, 2020). Title: Parse XML. Version: 1.3.2. Description: Work with XML files using a simple, consistent interface.



Recommended publications

-

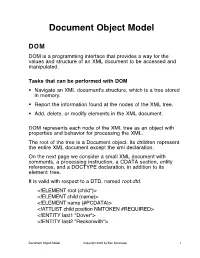

Document Object Model

DOM is a programming interface that provides a way for the values and structure of an XML document to be accessed and manipulated.

Tasks that can be performed with DOM:

• Navigate an XML document's structure, which is a tree stored in memory.
• Report the information found at the nodes of the XML tree.
• Add, delete, or modify elements in the XML document.

DOM represents each node of the XML tree as an object with properties and behavior for processing the XML. The root of the tree is a Document object. Its children represent the entire XML document except the xml declaration.

On the next page we consider a small XML document with comments, a processing instruction, a CDATA section, entity references, and a DOCTYPE declaration, in addition to its element tree. It is valid with respect to a DTD, named root.dtd.

    <!ELEMENT root (child*)>
    <!ELEMENT child (name)>
    <!ELEMENT name (#PCDATA)>
    <!ATTLIST child position NMTOKEN #REQUIRED>
    <!ENTITY last1 "Dover">
    <!ENTITY last2 "Reckonwith">

Copyright 2005 by Ken Slonneger

Example: root.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <!DOCTYPE root SYSTEM "root.dtd">
    <!-- root.xml -->
    <?DomParse usage="java DomParse root.xml"?>
    <root>
      <child position="first">
        <name>Eileen &last1;</name>
      </child>
      <child position="second">
        <name><![CDATA[<<<Amanda>>>]]> &last2;</name>
      </child>
      <!-- Could be more children later. -->
    </root>

DOM imagines that this XML information has a document root with four children:

1. A DOCTYPE declaration.
2. A comment.
3. A processing instruction, whose target is DomParse.
4. The root element of the document.

The second comment is a child of the root element.
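The four-children view of the document root can be checked with a small sketch. It uses Python's standard `xml.dom.minidom` instead of the Java `DomParse` program the slides refer to. As an assumption made only to keep the example self-contained, the two entity declarations are moved from the external root.dtd into an internal DOCTYPE subset.

```python
from xml.dom import minidom, Node

# Same document as root.xml, except that the entities are declared in an
# internal subset (an assumption of this sketch) so no root.dtd file is needed.
XML_TEXT = """<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE root [
  <!ENTITY last1 "Dover">
  <!ENTITY last2 "Reckonwith">
]>
<!-- root.xml -->
<?DomParse usage="java DomParse root.xml"?>
<root>
  <child position="first">
    <name>Eileen &last1;</name>
  </child>
  <child position="second">
    <name><![CDATA[<<<Amanda>>>]]> &last2;</name>
  </child>
  <!-- Could be more children later. -->
</root>
"""

doc = minidom.parseString(XML_TEXT)

# Node types of the children of the Document object, in document order.
top_level = [node.nodeType for node in doc.childNodes]

# The root element and the comments that are its direct children.
root = doc.documentElement
root_comments = [
    node.data for node in root.childNodes if node.nodeType == Node.COMMENT_NODE
]

# The position attribute of every <child> element.
positions = [
    child.getAttribute("position") for child in root.getElementsByTagName("child")
]

print(top_level, root_comments, positions)
```

The xml declaration does not appear among the children, which matches the description above: only the DOCTYPE, the first comment, the processing instruction, and the root element are reported at the top level.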

Nix on SHARCNET

Tyson Whitehead, May 14, 2015

Nix Overview

An enterprise approach to package management:

• a package is a specific piece of code compiled in a specific way
• each package is entirely self-contained and does not change
• each user selects the packages they want and gets a custom environment

https://nixos.org/nix

Ships with several thousand packages already created: https://nixos.org/nixos/packages.html

SHARCNET

What this adds to SHARCNET:

• each user can have their own custom environments
• environments should work everywhere (closed with no external dependencies)
• several thousand new and newer packages

Current issues (first is permanent, second will likely be resolved):

• newer glibc requires kernel 2.6.32 so no requin
• packages can be used but not installed/removed on viz/vdi

https://sourceware.org/ml/libc-alpha/2014-01/msg00511.html

Enabling Nix

Nix is installed under /home/nixbld on SHARCNET. Enable it for a single session by running

    source /home/nixbld/profile.d/nix-profile.sh

To always enable it, add this to the end of ~/.bash_profile:

    echo source /home/nixbld/profile.d/nix-profile.sh \
      >> ~/.bash_profile

Resetting Nix

A basic reset is done by removing all .nix* files from your home directory:

    rm -fr ~/.nix*

A complete reset is done by removing your Nix per-user directories:

    rm -fr /home/nixbld/var/nix/profile/per-user/$USER
    rm -fr /home/nixbld/var/nix/gcroots/per-user/$USER

The nix-profile.sh script will re-create these with the defaults the next time it runs.

Environment

The nix-env command maintains your environments:

• query packages (available and installed)
• create a new environment from the current one by adding packages
• create a new environment from the current one by removing packages
• switch between existing environments
• delete unused environments

Querying Packages

The nix-env {--query | -q} ..

RDFa in XHTML: Syntax and Processing

A collection of attributes and processing rules for extending XHTML to support RDF

W3C Recommendation 14 October 2008

This version: http://www.w3.org/TR/2008/REC-rdfa-syntax-20081014
Latest version: http://www.w3.org/TR/rdfa-syntax
Previous version: http://www.w3.org/TR/2008/PR-rdfa-syntax-20080904
Diff from previous version: rdfa-syntax-diff.html

Editors:
• Ben Adida, Creative Commons
• Mark Birbeck, webBackplane
• Shane McCarron, Applied Testing and Technology, Inc.
• Steven Pemberton, CWI

Please refer to the errata for this document, which may include some normative corrections. This document is also available in these non-normative formats: PostScript version, PDF version, ZIP archive, and Gzip’d TAR archive. The English version of this specification is the only normative version. Non-normative translations may also be available.

Copyright © 2007-2008 W3C® (MIT, ERCIM, Keio), All Rights Reserved. W3C liability, trademark and document use rules apply.

Abstract

The current Web is primarily made up of an enormous number of documents that have been created using HTML. These documents contain significant amounts of structured data, which is largely unavailable to tools and applications. When publishers can express this data more completely, and when tools can read it, a new world of user functionality becomes available, letting users transfer structured data between applications and web sites, and allowing browsing applications to improve the user experience: an event on a web page can be directly imported into a user’s desktop calendar; a license on a document can be detected so that users can be informed of their rights automatically; a photo’s creator, camera setting information, resolution, location and topic can be published as easily as the original photo itself, enabling structured search and sharing.

Bibliography of Erik Wilde

Erik Wilde's Bibliography (dretbiblio)

http://github.com/dret/biblio (August 29, 2018)

References

[1] AFIPS Fall Joint Computer Conference, San Francisco, California, December 1968.
[2] Seventeenth IEEE Conference on Computer Communication Networks, Washington, D.C., 1978.
[3] ACM SIGACT-SIGMOD Symposium on Principles of Database Systems, Los Angeles, California, March 1982. ACM Press.
[4] First Conference on Computer-Supported Cooperative Work, 1986.
[5] 1987 ACM Conference on Hypertext, Chapel Hill, North Carolina, November 1987. ACM Press.
[6] 18th IEEE International Symposium on Fault-Tolerant Computing, Tokyo, Japan, 1988. IEEE Computer Society Press.
[7] Conference on Computer-Supported Cooperative Work, Portland, Oregon, 1988. ACM Press.
[8] Conference on Office Information Systems, Palo Alto, California, March 1988.
[9] 1989 ACM Conference on Hypertext, Pittsburgh, Pennsylvania, November 1989. ACM Press.
[10] UNIX - The Legend Evolves. Summer 1990 UKUUG Conference, Buntingford, UK, 1990. UKUUG.
[11] Fourth ACM Symposium on User Interface Software and Technology, Hilton Head, South Carolina, November 1991.
[12] GLOBECOM'91 Conference, Phoenix, Arizona, 1991. IEEE Computer Society Press.
[13] IEEE INFOCOM '91 Conference on Computer Communications, Bal Harbour, Florida, 1991. IEEE Computer Society Press.
[14] IEEE International Conference on Communications, Denver, Colorado, June 1991.
[15] International Workshop on CSCW, Berlin, Germany, April 1991.
[16] Third ACM Conference on Hypertext, San Antonio, Texas, December 1991. ACM Press.
[17] 11th Symposium on Reliable Distributed Systems, Houston, Texas, 1992. IEEE Computer Society Press.
[18] 3rd Joint European Networking Conference, Innsbruck, Austria, May 1992.
[19] Fourth ACM Conference on Hypertext, Milano, Italy, November 1992. ACM Press.
[20] GLOBECOM'92 Conference, Orlando, Florida, December 1992. IEEE Computer Society Press.
[21] IEEE INFOCOM '92 Conference on Computer Communications, Florence, Italy, 1992.

Exploring and Extracting Nodes from Large XML Files

Guy Lapalme, January 2010

Abstract

This article shows how to deal simply with large XML files that cannot be read as a whole in memory and for which the usual XML exploration and extraction mechanisms cannot work or are very inefficient in processing time. We define the notion of a skeleton document that is maintained as the file is read using a pull-parser. It is used for showing the structure of the document and for selecting parts of it.

1 Introduction

XML has been developed to facilitate the annotation of information to be shared between computer systems. Because it is intended to be easily generated and parsed by computer systems on all platforms, its format is based on character streams rather than internal binary ones. Being character-based, it also has the nice property of being readable and editable by humans using standard text editors.

XML is based on a uniform, simple and yet powerful model of data organization: the generalized tree. Such a tree is defined as either a single element or an element having other trees as its sub-elements called children. This is the same model as the one chosen for the Lisp programming language 50 years ago. This hierarchical model is very simple and allows a simple annotation of the data.

The left part of Figure 1 shows a very small XML file illustrating the basic notation: an arbitrary name between < and > symbols is given to a node of a tree. This is called a start-tag.
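The idea of summarizing a document's structure while streaming through it can be sketched as follows. This is not the article's skeleton implementation, only an illustration of the same principle using `xml.etree.ElementTree.iterparse` from the Python standard library. The function name `skeleton` and the sample document are assumptions made for the example.

```python
import io
from collections import Counter
import xml.etree.ElementTree as ET


def skeleton(source):
    """Count how often each element path occurs while streaming through the file."""
    counts = Counter()
    path = []  # tags of the currently open elements, outermost first
    for event, elem in ET.iterparse(source, events=("start", "end")):
        if event == "start":
            path.append(elem.tag)
            counts["/".join(path)] += 1
        else:
            path.pop()
            # Discard the text and children of the finished element so that
            # its content does not accumulate in memory.
            elem.clear()
    return counts


# Hypothetical sample input; a real use would pass the path of a large file.
sample = io.BytesIO(
    b"<root><child><name>A</name></child><child><name>B</name></child></root>"
)
for element_path, count in skeleton(sample).items():
    print(count, element_path)
```

Only the stack of open tags and the path counters are kept as state, so memory use depends mainly on the depth and variety of the document. Note that cleared elements still leave empty placeholders under their parent, so a very large number of siblings would need additional cleanup.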

RDF/XML: RDF Data on the Web

Developing Ontologies

• have an idea of the required concepts and relationships (ER, UML, ...),
• generate a (draft) n3 or RDF/XML instance,
• write a separate file for the metadata,
• load it into Jena with a reasoner activated.
• If the reasoner complains about an inconsistent ontology, check the metadata file alone. If this is consistent, and it complains only when the data is also loaded:
  – it may be due to populating a class whose definition is inconsistent and that thus must be empty.
  – often it is due to wrong datatypes. Recall that a datatype specification is not interpreted as a constraint (that is violated for a given value), but as additional knowledge.

Chapter 6 RDF/XML: RDF Data on the Web

• An XML representation of RDF data for providing RDF data on the Web could be done straightforwardly as a “holds” relation mapped according to SQLX (see ⇒ next slide).
• would be highly redundant and very different from an XML representation of the same data
• search for a more similar way: leads to “striped XML/RDF”
  – data feels like XML: can be queried by XPath/Query and transformed by XSLT
  – can be parsed into an RDF graph.
• usually: provide RDF/XML data to an agreed RDFS/OWL ontology.

A STRAIGHTFORWARD XML REPRESENTATION OF RDF DATA

Note: this is not RDF/XML, but just some possible representation.

• RDF data are triples,
• their components are either URIs or literals (of XML Schema datatypes),
• straightforward XML markup in SQLX style,
• since N3 has a term structure, it is easy to find an XML markup.

    <my-n3:rdf-graph xmlns:my-n3="http://simple-silly-rdf-xml.de#">
      <my-n3:triple>
        <my-n3:subject type="uri">foo://bar/persons/john</my-n3:subject>
        <my-n3:predicate type="uri">foo://bar/meta#name</my-n3:predicate>
        <my-n3:object type="http://www.w3.org/2001/XMLSchema#string">John</my-n3:object>
      </my-n3:triple>
      <my-n3:triple> ..
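The straightforward markup above, one element per triple with a type attribute on each component, can be produced and read back with a short sketch. It uses only the element names and namespace URI shown on the slide. The function names `graph_to_xml` and `xml_to_graph` and the tuple layout are assumptions made for the illustration, and this is not RDF/XML.

```python
import xml.etree.ElementTree as ET

NS = "http://simple-silly-rdf-xml.de#"  # namespace URI from the slide
XSD_STRING = "http://www.w3.org/2001/XMLSchema#string"


def graph_to_xml(triples):
    """Serialize (subject, predicate, object, object_type) tuples, one <triple> each."""
    ET.register_namespace("my-n3", NS)
    graph = ET.Element(f"{{{NS}}}rdf-graph")
    for subject, predicate, obj, obj_type in triples:
        triple = ET.SubElement(graph, f"{{{NS}}}triple")
        ET.SubElement(triple, f"{{{NS}}}subject", {"type": "uri"}).text = subject
        ET.SubElement(triple, f"{{{NS}}}predicate", {"type": "uri"}).text = predicate
        ET.SubElement(triple, f"{{{NS}}}object", {"type": obj_type}).text = obj
    return ET.tostring(graph, encoding="unicode")


def xml_to_graph(text):
    """Read the markup back into (subject, predicate, object, object_type) tuples."""
    triples = []
    for triple in ET.fromstring(text).findall(f"{{{NS}}}triple"):
        obj = triple.find(f"{{{NS}}}object")
        triples.append((
            triple.find(f"{{{NS}}}subject").text,
            triple.find(f"{{{NS}}}predicate").text,
            obj.text,
            obj.get("type"),
        ))
    return triples


data = [("foo://bar/persons/john", "foo://bar/meta#name", "John", XSD_STRING)]
xml_text = graph_to_xml(data)
print(xml_text)
print(xml_to_graph(xml_text))
```

Every triple repeats the full subject URI, which illustrates the redundancy the slide points out as the motivation for a striped representation.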

"Downloads." The Open Information Security Foundation

Performance Testing Suricata: The Effect of Configuration Variables On Offline Suricata Performance

A Project Completed for CS 6266 Under Jonathon T. Giffin, Assistant Professor, Georgia Institute of Technology

by Winston H Messer

Project Advisor: Matt Jonkman, President, Open Information Security Foundation

December 2011

Abstract

The Suricata IDS/IPS engine, a viable alternative to Snort, has a multitude of potential configurations. A simplified automated testing system was devised for the purpose of performance testing Suricata in an offline environment. Of the available configuration variables, seventeen were analyzed independently by testing in fifty-six configurations. Of these, three variables were found to have a statistically significant effect on performance: Detect Engine Profile, Multi Pattern Algorithm, and CPU affinity.

Acknowledgements

In writing the final report on this endeavor, I would like to start by thanking four people who made this project possible:

Matt Jonkman, President, Open Information Security Foundation: For allowing me the opportunity to carry out this project under his supervision.

Victor Julien, Lead Programmer, Open Information Security Foundation and Anne-Fleur Koolstra, Documentation Specialist, Open Information Security Foundation: For their willingness to share their wisdom and experience of Suricata via email for the past four months.

John M. Weathersby, Jr., Executive Director, Open Source Software Institute: For allowing me the use of Institute equipment for the creation of a suitable testing

Ontology Matching • Semantic Social Networks and Peer-To-Peer Systems

The web: from XML to OWL

Rough Outline

1. Foundations of XML (Pierre Genevès & Nabil Layaïda)
• Core XML
• Programming with XML
• Foundations of XML types (tree grammars, tree automata)
• Tree logics (FO, MSO, µ-calculus)
• A taste of research: introduction to some grand challenges

2. Semantics of knowledge representation on the web (Jérôme Euzenat & Marie-Christine Rousset)
• Semantic web languages (URI, RDF, RDFS and OWL)
• Querying RDF and RDFS (SPARQL)
• Querying data through ontologies (DL-Lite)
• Ontology matching
• Semantic social networks and peer-to-peer systems

Development of the future web
• Expressing information → Languages
• Manipulating it → Algorithms
• in the most correct, efficient and meaningful way → Logic, Semantics

Foundations of XML

We will talk about languages, algorithms, and programming techniques for efficiently and safely manipulating XML data.

You will learn about:
• Tree structured data (XML) → Tree grammars & validation
• XML programming (XPath, XSLT...) → Queries & transformations
• Foundational theory & tools → Regular expressions

You will not learn about:
• Hacking CGI scripts
• HTML

Semantic web

We will talk about languages, algorithms, and semantics for efficiently and meaningfully manipulating formalised knowledge.

You will learn about:
• Expressing formalised knowledge on the semantic web (RDF) → Syntax and semantics
• Expressing ontologies on the semantic web (RDFS, OWL, DL-Lite) → Syntax and semantics, Reasoning

You will not learn about:
• Tagging pictures
• Sharing MP3
• Creating facebook

XML for Java Developers G22.3033-002 Course Roadmap

XML for Java Developers G22.3033-002

Session 1 - Main Theme: Markup Language Technologies (Part I)

Dr. Jean-Claude Franchitti, New York University, Computer Science Department, Courant Institute of Mathematical Sciences

Course Roadmap

• Consider the Spectrum of Applications Architectures
  – Distributed vs. Decentralized Apps + Thick vs. Thin Clients
  – J2EE for eCommerce vs. J2EE/Web Services, JXTA, etc.
• Learn Specific XML/Java “Patterns” Used for Data/Content Presentation, Data Exchange, and Application Configuration
• Cover XML/Java Technologies According to their Use in the Various Phases of the Application Development Lifecycle (i.e., Discovery, Design, Development, Deployment, Administration), e.g., Modeling, Configuration Management, Processing, Rendering, Querying, Secure Messaging, etc.
• Develop XML Applications as Assemblies of Reusable XML-Based Services (Applications of XML + Java Applications)

Agenda

• XML Generics
• Course Logistics, Structure and Objectives
• History of Meta-Markup Languages
• XML Applications: Markup Languages
• XML Information Modeling Applications
• XML-Based Architectures
• XML and Java
• XML Development Tools
• Summary
• Class Project
• Readings
• Assignment #1a

Part I: Introduction

XML Generics

• XML means eXtensible Markup Language
• XML expresses the structure of information (i.e., document content) separately from its presentation
• XSL style sheets are used to convert documents to a presentation format that can be processed by a target presentation device (e.g., HTML in the case of legacy browsers)
• Need a

FFV1, Matroska, LPCM (And More)

MediaConch: Implementation and policy checking on FFV1, Matroska, LPCM (and more)

Jérôme Martinez, MediaArea. Innovation Workshop ‑ March 2017

What is MediaConch?

MediaConch is a conformance checker:
• Implementation checker
• Policy checker
• Reporter
• Fixer

Its features include:
• Implementation and Policy reporter (implementation report, policy report)
• General information about your files
• Inspect your files
• Policy editor
• Public policies
• Fixer
  – Segment sizes in Matroska
  – Matroska “bit flip” correction
  – FFV1 “bit flip” correction

Integration

Archivematica is an integrated suite of open‑source software tools that allows users to process digital objects from ingest to access in compliance with the ISO‑OAIS functional model.

MediaConch interfaces
• Graphical interface
• Web interface
• Command line
• Server (REST API)
• (Work in progress) a library (.dll/.so/.dylib)

MediaConch output formats
• XML (native format)
• Text
• HTML
• (Work in progress) PDF
• Tweakable! (with XSL)

Open source
• GPLv3+ and MPLv2+
• Relies on MediaInfo (metadata extraction tool)
• Uses well‑known open source libraries: Qt, sqlite, libevent, libxml2, libxslt, libexslt...

Supported formats

Priorities for the implementation checker:
• Matroska
• FFV1
• PCM

Can accept any format supported by MediaInfo for the policy checker:
• MXF + JP2k
• QuickTime/MOV
• Audio files (WAV, BWF, AIFF...)
• ...

Supported formats can be expanded:
• By plugins
  – Support of PDF checker: VeraPDF plugin
  – Support of TIFF checker: DPF Manager plugin
  – You use another checker? Let us know
• By internal development
  – More tests on your preferred format are possible
  – It depends on you!

Versatile

Several input formats are accepted:
• FFV1 from MOV or AVI
• Matroska with other video formats
• (Work in progress) Extraction of a PDF or TIFF attachment from a Matroska container and analysis with a plugin (e.g.

Feature-Oriented Defect Prediction: Scenarios, Metrics, and Classifiers

Mukelabai Mukelabai, Stefan Strüder, Daniel Strüber, Thorsten Berger

Abstract: Software defects are a major nuisance in software development and can lead to considerable financial losses or reputation damage for companies. To this end, a large number of techniques for predicting software defects, largely based on machine learning methods, have been developed over the past decades. These techniques usually rely on code-structure and process metrics to predict defects at the granularity of typical software assets, such as subsystems, components, and files. In this paper, we systematically investigate feature-oriented defect prediction: predicting defects at the granularity of features, that is, domain entities that abstractly represent software functionality and often cross-cut software assets. Feature-oriented prediction can be beneficial, since: (i) particular features might be more error-prone than others, (ii) characteristics of features known as defective might be useful to predict other error-prone features, and (iii) feature-specific code might be especially prone to faults arising from feature interactions. We explore the feasibility and solution space for feature-oriented defect prediction. We design and investigate scenarios, metrics, and classifiers. Our study relies on 12 software projects from which we analyzed 13,685 bug-introducing and corrective commits, and systematically generated 62,868 training and test datasets to evaluate the designed classifiers, metrics, and scenarios. The datasets were generated based on the 13,685 commits, 81 releases, and 24,532 permutations of our 12 projects depending on the scenario addressed. We covered scenarios such as just-in-time (JIT) and cross-project defect prediction.

Creating Custom Debian Live for USB FD with Encrypted Persistence

INTRO

Debian is a free operating system (OS) for your computer. An operating system is the set of basic programs and utilities that make your computer run. Debian provides more than a pure OS: it comes with over 43000 packages, precompiled software bundled up in a nice format for easy installation on your machine.

PRE-REQ

* Debian distro installed
* Free disk space (depends on you); recommended free space >20GB
* Fast Internet connection
* USB flash drive of at least 4GB

Installing the required software on your distro

Open a root terminal or use sudo:

    $ sudo apt-get install debootstrap syslinux squashfs-tools genisoimage memtest86+ rsync apt-cacher-ng live-build live-config live-boot live-boot-doc live-config-doc live-manual live-tools live-manual-pdf qemu-kvm qemu-utils virtualbox virtualbox-qt virtualbox-dkms p7zip-full gparted mbr dosfstools parted

Configuring APT Proxy Server (to save bandwidth)

Start the apt-cacher-ng service if it is not running:

    # service apt-cacher-ng start

Edit /etc/apt/sources.list with your favorite text editor.

Terminal:

    # nano /etc/apt/sources.list

Output (depends on your APT mirror configuration):

    deb http://security.debian.org/ jessie/updates main contrib non-free
    deb http://http.debian.org/debian jessie main contrib non-free
    deb http://ftp.debian.org/debian jessie main contrib non-free

Add “localhost:3142”:

    deb http://localhost:3142/security.debian.org/ jessie/updates main contrib non-free
    deb http://localhost:3142/http.debian.org/debian jessie main contrib non-free
    deb http://localhost:3142/ftp.debian.org/debian jessie main contrib non-free

Press Ctrl + X and then Y to save the changes.

Terminal:

    # apt-get update
    # apt-get upgrade

NOTE: BUG in Debian Live.
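The sources.list change amounts to inserting the proxy host after `http://` on each `deb` line. The sketch below shows only that text transformation. It does not edit /etc/apt/sources.list, and the function name `via_proxy` is an assumption made for the illustration.

```python
def via_proxy(line, proxy="localhost:3142"):
    """Route a 'deb http://...' line through the apt-cacher-ng proxy address."""
    prefix = "deb http://"
    already_proxied = line.startswith(prefix + proxy + "/")
    if line.startswith(prefix) and not already_proxied:
        return prefix + proxy + "/" + line[len(prefix):]
    return line  # comments, other schemes, and proxied lines stay unchanged


original = [
    "deb http://security.debian.org/ jessie/updates main contrib non-free",
    "deb http://http.debian.org/debian jessie main contrib non-free",
    "deb http://ftp.debian.org/debian jessie main contrib non-free",
]
for entry in original:
    print(via_proxy(entry))
```

Applying the function twice leaves a line unchanged, so it is safe to run on a file that has already been edited by hand.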