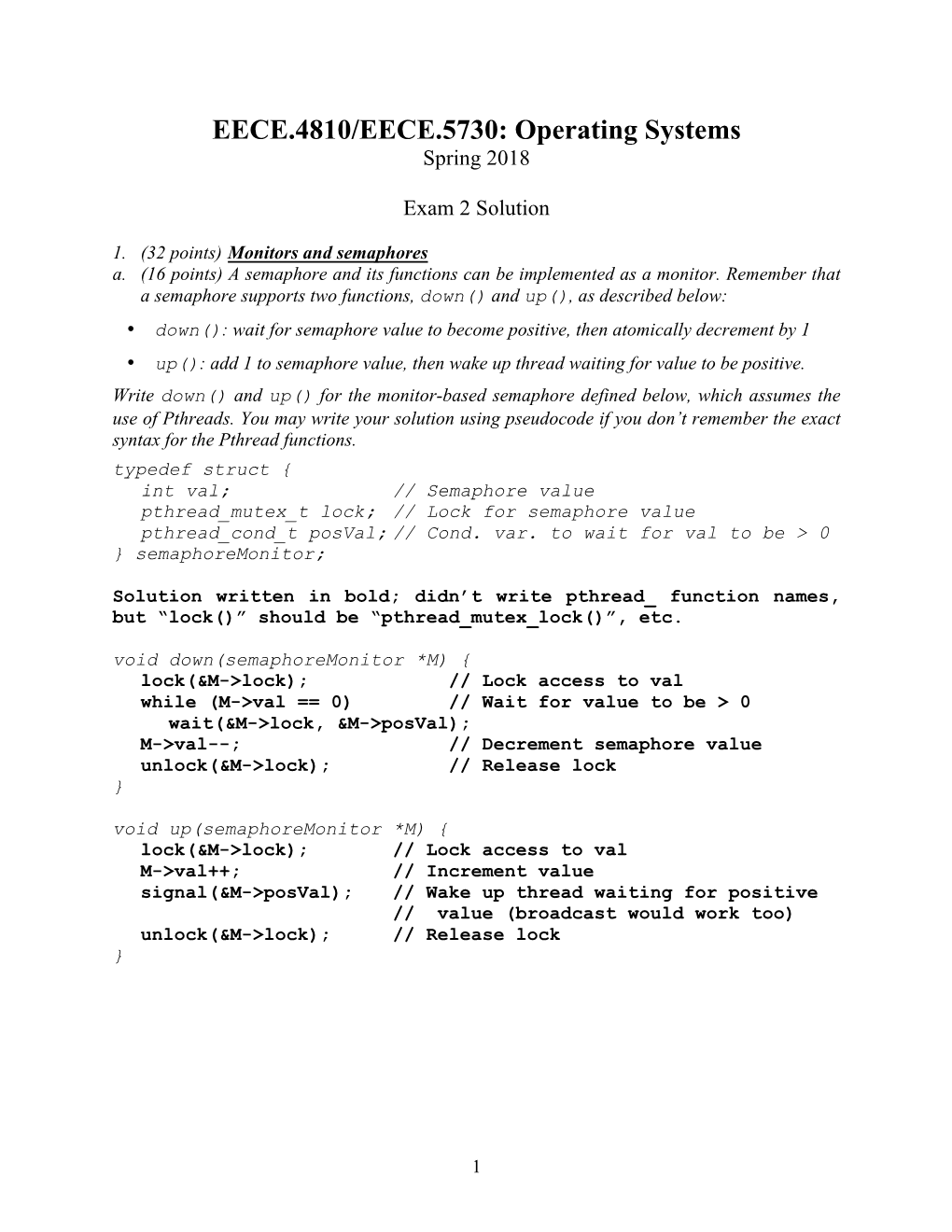

EECE.4810/EECE.5730: Operating Systems Spring 2018

Total Pages: 16

File Type: PDF, Size: 1020 Kb


Recommended publications

-

Synchronization Spinlocks - Semaphores

CS 4410 Operating Systems. Synchronization: Spinlocks - Semaphores. Summer 2013, Cornell University.

Today
● How can I synchronize the execution of multiple threads of the same process?
● Example
● Race condition
● Critical-Section Problem
● Spinlocks
● Semaphores
● Usage

Problem Context
● Multiple threads of the same process have:
  ● Private registers and stack memory
  ● Shared access to the remainder of the process “state”
● Preemptive CPU scheduling: the execution of a thread is interrupted unexpectedly.
● Multiple cores executing multiple threads of the same process.

Shared Counting
● Mr Skroutz wants to count his $1-bills.
● Initially, he uses one thread that increments a variable bills_counter for every $1-bill.
● Then he decides to speed up the counting by using two threads that share the variable bills_counter.

Shared Counting (bills_counter = 0)
● Thread A: while (machine_A_has_bills) bills_counter++
● Thread B: while (machine_B_has_bills) bills_counter++
● Afterwards: print bills_counter
● What might go wrong?

Shared Counting
● Thread A: r1 = bills_counter; r1 = r1 + 1; bills_counter = r1
● Thread B: r2 = bills_counter; r2 = r2 + 1; bills_counter = r2
● If bills_counter = 42, what are its possible values after the execution of one A/B loop?

Shared counters
● One possible result: everything works!
● Another possible result: lost update!
● Called a “race condition”.

Race conditions
● Def: a timing-dependent error involving shared state
● It depends on how threads are scheduled.
● Hard to detect

Critical-Section Problem (bills_counter = 0)
● Thread A and Thread B each run: while (my_machine_has_bills) { enter critical section; bills_counter++; exit critical section }
● Afterwards: print bills_counter

Critical-Section Problem
● Code structure: enter section, critical section, exit section, remainder section
● The solution should satisfy: mutual exclusion, progress, bounded waiting

General Solution
● LOCK
● A process must acquire a lock to enter a critical section. -
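The question the slides pose (which values can bills_counter take after one A/B loop, starting from 42?) can be checked by brute force. The sketch below is not part of the original slides: it models each thread as the three steps load, add, store and enumerates every interleaving. The names `STEPS` and `final_values` are hypothetical helpers introduced only for this illustration.

```python
from itertools import combinations

# Each thread runs: r = bills_counter; r = r + 1; bills_counter = r
STEPS = ("load", "add", "store")


def final_values(start=42):
    """Return the set of final counter values over all interleavings of A and B."""
    results = set()
    total = 2 * len(STEPS)
    # Pick which 3 of the 6 time slots belong to thread A; B gets the rest.
    for a_slots in combinations(range(total), len(STEPS)):
        counter = start
        regs = {"A": 0, "B": 0}  # private registers r1 and r2
        pc = {"A": 0, "B": 0}    # next step of each thread
        for slot in range(total):
            t = "A" if slot in a_slots else "B"
            step = STEPS[pc[t]]
            if step == "load":
                regs[t] = counter
            elif step == "add":
                regs[t] += 1
            else:  # store
                counter = regs[t]
            pc[t] += 1
        results.add(counter)
    return results


print(sorted(final_values(42)))  # [43, 44]
```

The value 44 corresponds to the interleavings where one thread finishes its store before the other loads; 43 is the lost update, where both threads load 42 before either stores.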

Deadlock: Why Does It Happen? CS 537 Andrea C

UNIVERSITY of WISCONSIN-MADISON, Computer Sciences Department. CS 537 Introduction to Operating Systems. Andrea C. Arpaci-Dusseau, Remzi H. Arpaci-Dusseau.

Deadlock: Why does it happen?

Informal: Every entity is waiting for a resource held by another entity; none releases until it gets what it is waiting for.

Questions answered in this lecture:
• What are the four necessary conditions for deadlock?
• How can deadlock be prevented?
• How can deadlock be avoided?
• How can deadlock be detected and recovered from?

Deadlock Example
Two threads access two shared variables, A and B. Variable A is protected by lock x, variable B by lock y. How to add lock and unlock statements?
int A, B;
Thread 1: A += 10; B += 20; A += B; A += 30;
Thread 2: B += 10; A += 20; A += B; B += 30;

Deadlock Example
int A, B; lock_t x, y;
Thread 1: lock(x); A += 10; lock(y); B += 20; A += B; unlock(y); A += 30; unlock(x);
Thread 2: lock(y); B += 10; lock(x); A += 20; A += B; unlock(x); B += 30; unlock(y);
What can go wrong?

Representing Deadlock
Two common ways of representing deadlock:
• Vertices:
  – Threads (or processes) in system
  – Resources (anything of value, including locks and semaphores)
• Edges: indicate thread is waiting for the other
Wait-For Graph: T1 “waiting for” T2. Resource-Allocation Graph: T1 wants y, held by T2.

Conditions for Deadlock
Mutual exclusion
• Resource cannot be shared
• Requests are delayed until resource is released
Hold-and-wait
• Thread holds one resource while it waits for another
No preemption
• Resources are released voluntarily after completion
Circular -
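The wait-for graph described above lends itself to a simple check: a cycle in the graph means the threads on it are deadlocked. The following is a minimal sketch, not taken from the lecture, that represents the graph as a dictionary and searches for a cycle with a depth-first traversal. The function name `find_cycle` and the dictionary layout are assumptions made for illustration.

```python
def find_cycle(wait_for):
    """wait_for maps a thread to the threads it waits on.

    Returns one cycle as a list of threads (first == last), or None.
    """
    WHITE, GREY, BLACK = 0, 1, 2  # unvisited, on current path, finished
    color = {}

    def visit(node, path):
        color[node] = GREY
        path.append(node)
        for nxt in wait_for.get(node, ()):
            state = color.get(nxt, WHITE)
            if state == GREY:
                # nxt is already on the current path: we closed a cycle.
                return path[path.index(nxt):] + [nxt]
            if state == WHITE:
                cycle = visit(nxt, path)
                if cycle:
                    return cycle
        path.pop()
        color[node] = BLACK
        return None

    for node in list(wait_for):
        if color.get(node, WHITE) == WHITE:
            cycle = visit(node, [])
            if cycle:
                return cycle
    return None


# Thread 1 holds x and wants y; Thread 2 holds y and wants x.
print(find_cycle({"T1": ["T2"], "T2": ["T1"]}))  # ['T1', 'T2', 'T1']
print(find_cycle({"T1": ["T2"], "T2": []}))      # None
```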

The Dining Philosophers Problem Cache Memory

The Dining Philosophers Problem Cache Memory

The dining philosophers problem: definition

It is an artificial problem widely used to illustrate the problems linked to resource sharing in concurrent programming. The problem is usually described as follows.
• A given number of philosophers are seated at a round table.
• Each of the philosophers shares his time between two activities: thinking and eating.
• To think, a philosopher does not need any resources; to eat he needs two pieces of silverware.
• However, the table is set in a very peculiar way: between every pair of adjacent plates, there is only one fork.
• A philosopher being clumsy, he needs two forks to eat: the one on his right and the one on his left.
• It is thus impossible for a philosopher to eat at the same time as one of his neighbors: the forks are a shared resource for which the philosophers are competing.
• The problem is to organize access to these shared resources in such a way that everything proceeds smoothly.

The dining philosophers problem: illustration

[Figure: philosophers P0 to P4 seated around a table, with forks f0 to f4 placed between adjacent philosophers]

The dining philosophers problem: a first solution
• This first solution uses a semaphore to model each fork.
• Taking a fork is then done by executing a wait operation on the semaphore, which suspends the process if the fork is not available.
• Freeing a fork is naturally done with a signal operation.

/* Definitions and global initializations */
#define N ? /* number of philosophers */
semaphore fork[N]; /* semaphores modeling the forks */
int j;
for (j = 0; j < N; j++) fork[j] = 1;

Each philosopher (0 to N-1) corresponds to a process executing the following procedure, where i is the number of the philosopher. -
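The excerpt stops before the philosopher procedure itself, so the sketch below is only an illustration of the one-semaphore-per-fork idea, not the slides' code. It makes two assumptions that are not in the source: philosopher i uses forks i and (i + 1) % N, and each philosopher picks up the lower-numbered fork first. That ordering is a swapped-in deadlock-avoidance technique, used here so the example always terminates. The names `dine` and `meals` are hypothetical.

```python
import threading


def dine(n=5, meals=3):
    """Run n philosopher threads; return how many times each one ate."""
    forks = [threading.Semaphore(1) for _ in range(n)]  # one semaphore per fork
    eaten = [0] * n  # eaten[i] is written only by philosopher i

    def philosopher(i):
        left, right = i, (i + 1) % n  # assumed fork numbering
        # Assumption: always take the lower-numbered fork first, so no
        # cycle of philosophers each holding one fork can form.
        first, second = min(left, right), max(left, right)
        for _ in range(meals):
            forks[first].acquire()   # wait on the first fork
            forks[second].acquire()  # wait on the second fork
            eaten[i] += 1            # eat
            forks[second].release()  # signal: free the forks
            forks[first].release()

    threads = [threading.Thread(target=philosopher, args=(i,)) for i in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return eaten


print(dine())  # [3, 3, 3, 3, 3]
```

If every philosopher instead took the left fork first, all of them could end up holding one fork and waiting for the next, which is the hazard a naive version of this scheme is exposed to.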

CSC 553 Operating Systems Multiple Processes

CSC 553 Operating Systems. Lecture 4 - Concurrency: Mutual Exclusion and Synchronization

Multiple Processes
• Operating System design is concerned with the management of processes and threads:
  • Multiprogramming
  • Multiprocessing
  • Distributed Processing

Concurrency Arises in Three Different Contexts:
• Multiple Applications – invented to allow processing time to be shared among active applications
• Structured Applications – extension of modular design and structured programming
• Operating System Structure – OS themselves implemented as a set of processes or threads

Key Terms Related to Concurrency

Principles of Concurrency
• Interleaving and overlapping
  • can be viewed as examples of concurrent processing
  • both present the same problems
• Uniprocessor – the relative speed of execution of processes cannot be predicted
  • depends on activities of other processes
  • the way the OS handles interrupts
  • scheduling policies of the OS

Difficulties of Concurrency
• Sharing of global resources
• Difficult for the OS to manage the allocation of resources optimally
• Difficult to locate programming errors as results are not deterministic and reproducible

Race Condition
• Occurs when multiple processes or threads read and write data items
• The final result depends on the order of execution – the “loser” of the race is the process that updates last and will determine the final value of the variable

Operating System Concerns
• Design and management issues raised by the existence of concurrency:
• The OS must: – be able to keep track of various processes -

Static Analysis of a Concurrent Programming Language by Abstract Interpretation

STATIC ANALYSIS OF A CONCURRENT PROGRAMMING LANGUAGE BY ABSTRACT INTERPRETATION. Maryam Zakeryfar. A thesis in The Department of Computer Science and Software Engineering, Presented in Partial Fulfillment of the Requirements For the Degree of Doctor of Philosophy (Computer Science), Concordia University, Montréal, Québec, Canada. March 2014. © Maryam Zakeryfar, 2014

Concordia University, School of Graduate Studies. This is to certify that the thesis prepared By: Mrs. Maryam Zakeryfar, Entitled: Static Analysis of a Concurrent Programming Language by Abstract Interpretation, and submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy (Computer Science) complies with the regulations of this University and meets the accepted standards with respect to originality and quality. Signed by the final examining committee: Dr. Deborah Dysart-Gale (Chair), Dr. Weichang Du (External Examiner), Dr. Mourad Debbabi (Examiner), Dr. Olga Ormandjieva (Examiner), Dr. Joey Paquet (Examiner), Dr. Peter Grogono (Supervisor). Approved by Dr. V. Haarslev, Graduate Program Director; Christopher W. Trueman, Dean, Faculty of Engineering and Computer Science.

Abstract. Static Analysis of a Concurrent Programming Language by Abstract Interpretation. Maryam Zakeryfar, Ph.D., Concordia University, 2014. Static analysis is an approach to determine information about the program without actually executing it. There has been much research in the static analysis of concurrent programs. However, very little academic research has been done on the formal analysis of message passing or process-oriented languages. We currently miss formal analysis tools and techniques for concurrent process-oriented languages such as Erasmus. -

Supervision 1: Semaphores, Generalised Producer-Consumer, and Priorities

Concurrent and Distributed Systems - 2015–2016. Supervision 1: Semaphores, generalised producer-consumer, and priorities

Q0 Semaphores

(a) Counting semaphores are initialised to a value — 0, 1, or some arbitrary n. For each case, list one situation in which that initialisation would make sense.

(b) Write down two fragments of pseudo-code, to be run in two different threads, that experience deadlock as a result of poor use of mutual exclusion.

(c) Deadlock is not limited to mutual exclusion; it can occur any time its preconditions (especially hold-and-wait, cyclic dependence) occur. Describe a situation in which two threads making use of semaphores for condition synchronisation (e.g., in producer-consumer) can deadlock.

int buffer[N]; int in = 0, out = 0;
spaces = new Semaphore(N);
items = new Semaphore(0);
guard = new Semaphore(1); // for mutual exclusion

// producer threads
while(true) { item = produce(); wait(spaces); wait(guard); buffer[in] = item; in = (in + 1) % N; signal(guard); signal(items); }

// consumer threads
while(true) { wait(items); wait(guard); item = buffer[out]; out = (out + 1) % N; signal(guard); signal(spaces); consume(item); }

Figure 1: Pseudo-code for a producer-consumer queue using semaphores.

(d) In Figure 1, items and spaces are used for condition synchronisation, and guard is used for mutual exclusion. Why will this implementation become unsafe in the presence of multiple consumer threads or multiple producer threads, if we remove guard?

(e) Semaphores are introduced in part to improve efficiency under contention around critical sections by preferring blocking to spinning. Describe a situation in which this might not be the case; more generally, under what circumstances will semaphores hurt, rather than help, performance?

(f) The implementation of semaphores themselves depends on two classes of operations: increment/decrement of an integer, and blocking/waking up threads. -
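Figure 1 translates almost line for line into Python's `threading.Semaphore`, with `acquire` playing the role of wait and `release` the role of signal. The sketch below is one possible rendering, not the course's reference solution. The function name `run_queue`, the finite item counts, and the per-consumer result lists are assumptions added so that the program terminates and its output can be checked; it also assumes the total number of items divides evenly among the consumers.

```python
import threading


def run_queue(n_slots=4, n_producers=2, n_consumers=2, per_producer=50):
    """Run the bounded-buffer protocol of Figure 1; return all consumed items."""
    buffer = [None] * n_slots
    pos = {"in": 0, "out": 0}              # shared indices, protected by guard
    spaces = threading.Semaphore(n_slots)  # counts free slots
    items = threading.Semaphore(0)         # counts filled slots
    guard = threading.Semaphore(1)         # mutual exclusion on buffer and indices
    per_consumer = n_producers * per_producer // n_consumers
    taken = [[] for _ in range(n_consumers)]  # private result list per consumer

    def producer(pid):
        for k in range(per_producer):
            item = (pid, k)                # stands in for produce()
            spaces.acquire()
            guard.acquire()
            buffer[pos["in"]] = item
            pos["in"] = (pos["in"] + 1) % n_slots
            guard.release()
            items.release()

    def consumer(cid):
        for _ in range(per_consumer):
            items.acquire()
            guard.acquire()
            item = buffer[pos["out"]]
            pos["out"] = (pos["out"] + 1) % n_slots
            guard.release()
            spaces.release()
            taken[cid].append(item)        # stands in for consume(item)

    threads = [threading.Thread(target=producer, args=(p,)) for p in range(n_producers)]
    threads += [threading.Thread(target=consumer, args=(c,)) for c in range(n_consumers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return [item for chunk in taken for item in chunk]


consumed = run_queue()
print(len(consumed), len(set(consumed)))  # 100 100
```

With `guard` in place every produced item is consumed exactly once. Removing it lets two producers (or two consumers) read the same index before either advances it, which is the hazard part (d) asks about.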

Sleeping Barber

Sleeping Barber. CSCI 201 Principles of Software Development. Jeffrey Miller, Ph.D. USC CSCI 201L

Outline
• Sleeping Barber

Sleeping Barber Overview
▪ The Sleeping Barber problem contains one barber and a number of customers
▪ There are a certain number of waiting seats
  › If a customer enters and there is a seat available, he will wait
  › If a customer enters and there is no seat available, he will leave
▪ When the barber isn’t cutting someone’s hair, he sits in his barber chair and sleeps
  › If the barber is sleeping when a customer enters, he must wake the barber up

Program
▪ Write a solution to the Sleeping Barber problem. You will need to utilize synchronization and conditions.

Program Steps – main Method
▪ The SleepingBarber will be the main class, so create the SleepingBarber class with a main method
  › The main method will instantiate the SleepingBarber
  › Then create a certain number of Customer threads that arrive at random times
    • Have the program sleep for a random amount of time between customer arrivals
  › Have the main method wait to finish executing until all of the Customer threads have finished
  › Print out that there are no more customers, then wake up the barber if he is sleeping so he can go home for the day

Program Steps – SleepingBarber
▪ The SleepingBarber constructor will initialize some member variables
  › Total number of seats in the waiting room
  › Total number of customers who will be coming into the barber shop
  › A boolean variable indicating -

The Deadlock Problem

The deadlock problem

mutex_t m1, m2;
void p1 (void *ignored) { lock (m1); lock (m2); /* critical section */ unlock (m2); unlock (m1); }
void p2 (void *ignored) { lock (m2); lock (m1); /* critical section */ unlock (m1); unlock (m2); }

• This program can cease to make progress – how?
• Can you have deadlock w/o mutexes?

More deadlocks
• Same problem with condition variables
  - Suppose resource 1 managed by c1, resource 2 by c2
  - A has 1, waits on c2, B has 2, waits on c1
• Or have combined mutex/condition variable deadlock:
  - lock (a); lock (b); while (!ready) wait (b, c); unlock (b); unlock (a);
  - lock (a); lock (b); ready = true; signal (c); unlock (b); unlock (a);
• One lesson: Dangerous to hold locks when crossing abstraction barriers!
  - I.e., lock (a) then call function that uses condition variable

Deadlocks w/o computers
• Real issue is resources & how required
• E.g., bridge only allows traffic in one direction
  - Each section of a bridge can be viewed as a resource.
  - If a deadlock occurs, it can be resolved if one car backs up (preempt resources and rollback).
  - Several cars may have to be backed up if a deadlock occurs.
  - Starvation is possible.

Deadlock conditions
1. Limited access (mutual exclusion):
  - Resource can only be shared with finite users.
2. No preemption:
  - once resource granted, cannot be taken away.
3. Multiple independent requests (hold and wait):
  - don’t ask all at once (wait for next resource while holding current one)
4. Circularity in graph of requests
• All of 1–4 necessary for deadlock to occur
• Two approaches to dealing with deadlock: -
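Because all four conditions are necessary, breaking any one of them rules deadlock out. The sketch below is an illustration that goes beyond what the excerpt shows: it removes the circularity in the p1/p2 example by always acquiring the two mutexes in one global order. The helper `lock_both`, the ordering by `id`, and the `run_demo` harness are assumptions made for this example, and the helper expects two distinct locks.

```python
import threading
from contextlib import contextmanager


@contextmanager
def lock_both(a, b):
    """Hold two distinct locks, always acquired in one global order."""
    # Ordering by id() is an arbitrary but consistent choice (an assumption);
    # any fixed total order over the locks prevents a circular wait.
    first, second = sorted((a, b), key=id)
    with first, second:
        yield


def run_demo(iterations=1000):
    m1, m2 = threading.Lock(), threading.Lock()
    total = {"count": 0}

    def p1():
        for _ in range(iterations):
            with lock_both(m1, m2):  # asks for m1 then m2
                total["count"] += 1  # critical section

    def p2():
        for _ in range(iterations):
            with lock_both(m2, m1):  # asks in the opposite order, as in the slide
                total["count"] += 1  # critical section

    threads = [threading.Thread(target=p1), threading.Thread(target=p2)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total["count"]


print(run_demo())  # 2000
```

Both threads name the locks in opposite orders, as p1 and p2 do, yet the helper makes the actual acquisition order identical, so neither can hold one lock while waiting for a thread that holds the other.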

Q1. Multiple Producers and Consumers

CS39002: Operating Systems Lab. Assignment 5. Floating date: 29/2/2016. Due date: 14/3/2016.

Q1. Multiple Producers and Consumers

Problem Definition: You have to implement a system that ensures synchronisation in a producer-consumer scenario. You also have to demonstrate a deadlock condition and provide solutions for avoiding deadlock. In this system a main process creates 5 producer processes and 5 consumer processes that share 2 resources (queues). The producer's job is to generate a piece of data, put it into the queue, and repeat. At the same time, the consumer process consumes the data, i.e., removes it from the queue. In the implementation, you are asked to ensure synchronisation and mutual exclusion. For instance, the producer should be stopped if the buffer is full, and the consumer should be blocked if the buffer is empty. You also have to enforce mutual exclusion while the processes are trying to acquire the resources.

Manager (manager.c): It is the main process that creates the producer and consumer processes (5 each). After that it periodically checks whether the system is in deadlock. Deadlock refers to a specific condition in which two or more processes are each waiting for another to release a resource, or more than two processes are waiting for resources in a circular chain.

Implementation: The manager process (manager.c) does the following:
i. It creates a file matrix.txt which holds a matrix with 2 rows (number of resources) and 10 columns (IDs of producer and consumer processes). Each entry (i, j) of that matrix can have three values:
● 0 => process i has not requested queue j, or has released queue j
● 1 => process i has requested queue j
● 2 => process i has acquired the lock of queue j. -

Fair K Mutual Exclusion Algorithm for Peer to Peer Systems ∗

Fair K Mutual Exclusion Algorithm for Peer To Peer Systems ∗. Vijay Anand Reddy, Prateek Mittal, and Indranil Gupta. University of Illinois, Urbana Champaign, USA. {vkortho2, mittal2, indy}@uiuc.edu

Abstract. k-mutual exclusion is an important problem for resource-intensive peer-to-peer applications ranging from aggregation to file downloads. In order to be practically useful, k-mutual exclusion algorithms not only need to be safe and live, but they also need to be fair across hosts. We propose a new solution to the k-mutual exclusion problem that provides a notion of time-based fairness. Specifically, our algorithm attempts to minimize the spread of access time for the critical resource. While a client’s access time is the time between it requesting and accessing the resource, the spread is defined as a system-wide metric that measures some notion of the variance of access times across a homo-

... is when multiple clients are simultaneously downloading a large multimedia file, e.g., [3, 12]. Finally applications where a service has limited computational resources will also benefit from a k mutual exclusion primitive, preventing scenarios where all the nodes of the p2p system simultaneously overwhelm the server.

The k mutual exclusion problem involves a group of processes, each of which intermittently requires access to an identical resource called the critical section (CS). A k mutual exclusion algorithm must satisfy both safety and liveness. Safety means that at most k processes, 1 ≤ k ≤ n, may be in the CS at any given time. Liveness means that every request for critical section access is satisfied in finite time. -
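The safety property stated above (at most k processes in the critical section at once) can be illustrated on a single machine with a counting semaphore initialised to k. This is only a shared-memory sketch of the property, not the paper's peer-to-peer algorithm, and it says nothing about the fairness the paper targets. The names `run_k_exclusion`, `slots`, and `state` are hypothetical.

```python
import threading
import time


def run_k_exclusion(k=3, n=10):
    """Let n threads compete for k slots; return the peak number inside the CS."""
    slots = threading.Semaphore(k)  # at most k holders at any time
    state_lock = threading.Lock()   # protects the bookkeeping below
    state = {"inside": 0, "max_inside": 0}

    def worker():
        with slots:                 # enter the critical section
            with state_lock:
                state["inside"] += 1
                state["max_inside"] = max(state["max_inside"], state["inside"])
            time.sleep(0.01)        # simulated work inside the CS
            with state_lock:
                state["inside"] -= 1
        # leaving the 'with slots' block frees the slot

    threads = [threading.Thread(target=worker) for _ in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state["max_inside"]


peak = run_k_exclusion(k=3, n=10)
print(peak <= 3)  # True
```

Liveness holds here because every worker releases its slot after a bounded amount of work, so each blocked `acquire` eventually succeeds.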

Deadlock-Free Oblivious Routing for Arbitrary Topologies

Deadlock-Free Oblivious Routing for Arbitrary Topologies

Jens Domke, Center for Information Services and High Performance Computing, Technische Universität Dresden, Dresden, Germany. Torsten Hoefler, Blue Waters Directorate, National Center for Supercomputing Applications, University of Illinois at Urbana-Champaign, Urbana, IL 61801, USA. Wolfgang E. Nagel, Center for Information Services and High Performance Computing, Technische Universität Dresden, Dresden, Germany.

Abstract: Efficient deadlock-free routing strategies are crucial to the performance of large-scale computing systems. There are many methods but it remains a challenge to achieve lowest latency and highest bandwidth for irregular or unstructured high-performance networks. We investigate a novel routing strategy based on the single-source-shortest-path routing algorithm and extend it to use virtual channels to guarantee deadlock-freedom. We show that this algorithm achieves minimal latency and high bandwidth with only a low number of virtual channels and can be implemented in practice. We demonstrate that the problem of finding the minimal number of virtual channels needed to route a general network deadlock-

... network performance without inclusion of the routing algorithm or the application communication pattern. In regular operation these values can hardly be achieved due to network congestion. The largest gap between real and idealized performance is often in bisection bandwidth which by its definition only considers the topology. The effective bisection bandwidth [2] is the average bandwidth for routing messages between random perfect matchings of endpoints (also known as permutation routing) through the network and thus considers the routing algorithm. -

Bounded Model Checking for Asynchronous Concurrent Systems

Bounded Model Checking for Asynchronous Concurrent Systems. Manitra Johanesa Rakotoarisoa, Department of Computer Architecture, Technical University of Catalonia. Advisor: Enric Pastor Llorens. Thesis submitted to obtain the qualification of Doctor from the Technical University of Catalonia. To my mother.

Contents
Abstract
Acknowledgments
1 Introduction
1.1 Symbolic Model Checking
1.1.1 BDD-based approach
1.1.2 SAT-based Approach
1.2 Synchronous Versus Asynchronous Systems
1.2.1 Synchronous systems
1.2.2 Asynchronous systems
1.3 Scope of This Work
1.4 Structure of the Thesis
2 Background
2.1 Transition Systems
2.1.1 Definitions
2.1.2 Symbolic Representation
2.2 Other Models for Concurrent Systems
2.2.1 Kripke Structure
2.2.2 Petri Nets
2.2.3 Automata
2.3 Linear Temporal Logic
2.4 Satisfiability Problem
2.4.1 DPLL Algorithm
2.4.2 Stålmarck’s Algorithm
2.4.3 Other Methods for Solving SAT
2.5 Bounded Model Checking
2.5.1 BMC Idea