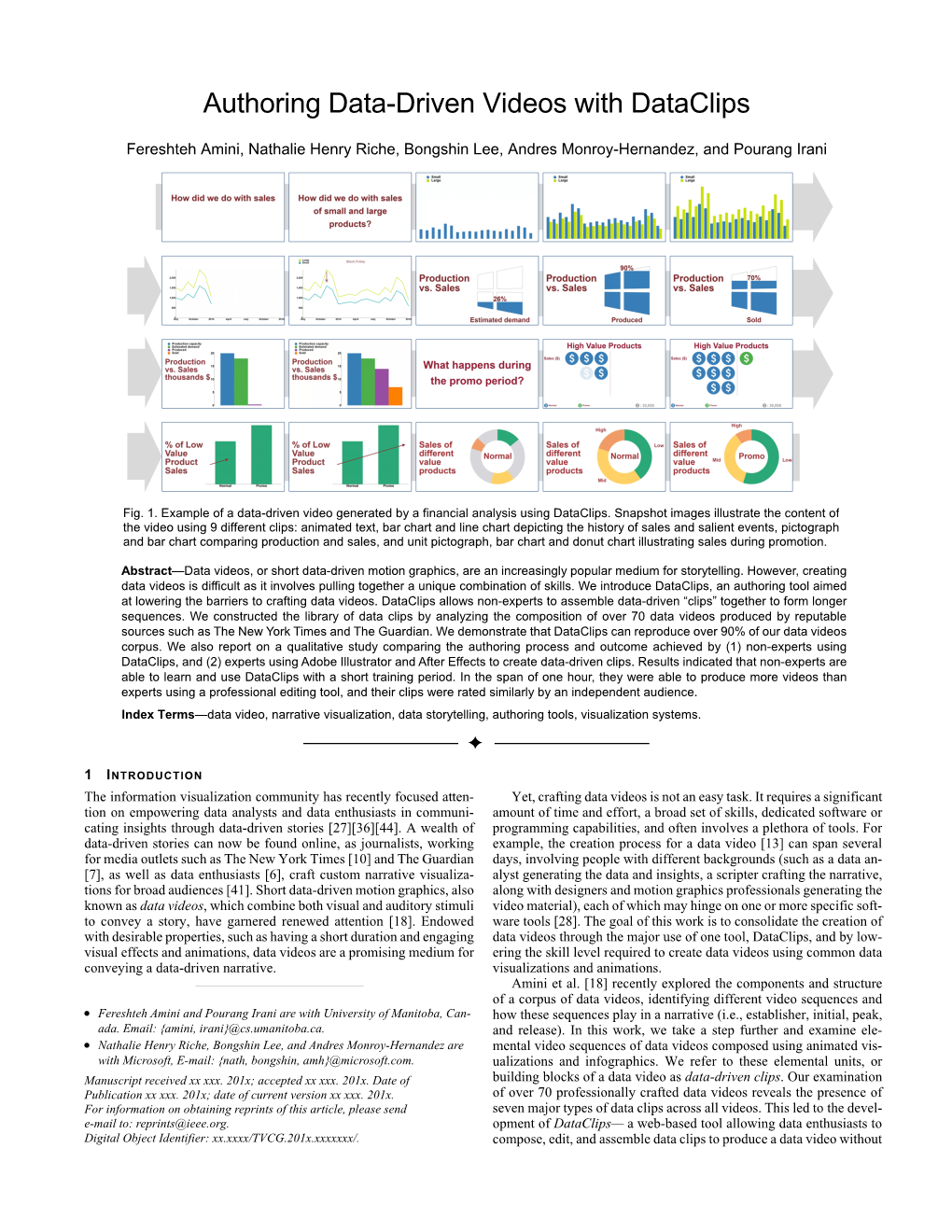

Authoring Data-Driven Videos with Dataclips

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

Evolution of the Infographic

EVOLUTION OF THE INFOGRAPHIC: Then, now, and future-now. People have been using images and data to tell stories for ages, long before the days of the Internet, smartphones, and Excel. In fact, the history of infographics pre-dates the web by more than 30,000 years, with the earliest forms of these visuals being cave paintings that helped early humans find food, resources, and shelter. But as technology has advanced, so has our ability to tell meaningful stories. Here’s a look into the evolution of modern infographics: where they’ve been, how they’ve evolved, and where they’re headed. Then: Printed, static infographics. The 20th century introduced the infographic, a staple for how we communicate, visualize, and share information today. Early on, these print graphics married illustration and data to communicate information in a revolutionary way. ADVANTAGE: Design elements enable people to quickly absorb information previously confined to long paragraphs of text. LIMITATION: Static infographics didn’t allow for deeper dives into the data to explore granularities. Hoping to drill down for more detail or context? Tough luck: what you see is what you get. Source: http://www.wired.co.uk/news/archive/2012-01/16/painting-by-numbers-at-london-transport-museum Now: Web-based, interactive infographics. While the first wave of modern infographics made complex data more consumable, web-based, interactive infographics made data more explorable. These are everywhere today. ADVANTAGE: Everyone looking to make data an asset, from executives to graphic designers, is now building interactive data stories that deliver additional context and value. -

The Use and Potential Misuse of Data in Higher Education: a Compilation of Examples

IHE Research Projects Series IHE Research in Progress Series 2019-001 Submitted to series: April 5, 2019 The Use and Potential Misuse of Data in Higher Education: A Compilation of Examples Karen L. Webber Institute of Higher Education, University of Georgia, [email protected] Find this research paper and other faculty works at: https://ihe.uga.edu/rps Webber, K.L. “The Use and Potential Misuse of Data in Higher Education: A Compilation of Examples” (2019). IHE Research Projects Series 2019-001. Available at: https://ihe.uga.edu/rps/2019_001 The Use and Potential Misuse of Data in Higher Education: A Compilation of Examples Karen L. Webber, Ph.D. RAPID INCREASES IN TECHNOLOGY have led to a heightened demand for and use of data collection and data visualization software. A number of data analyses or visualisations, when presented without the proper context or without following principles of good graphic design, have resulted in inaccurate conclusions. Principles of cognition and good graphic design are available from a number of scholars, including Amos Tversky, Daniel Kahneman, Edward Tufte, Stephen Kosslyn, and Alberto Cairo. In addition to good graphic design, context is critical for correct interpretation, as there are many questions around the legitimacy, intentionality, and even the ideology of data use within higher education (Calderon 2015). Inaccurate conclusions can lead to incorrect discussions for possible program development and/or policy changes. You may wish to read more detail from several important scholars; see references at the end of this document. Below are some examples found in books or on the internet that may help you see the possibility of misrepresentation or misunderstanding. -

State Infographic: Using Infographics to Visually Represent Data

Lesson/Activity Title: STATE INFOGRAPHIC: USING INFOGRAPHICS TO VISUALLY REPRESENT DATA Time: 1 class period for Teach/Model and Guided Practice; several class periods or homework nights for Independent Practice; 1 class period for Closure and Extend/Enrich Instructional Goals: • The student will use the PebbleGo Next State Studies and American Indian History database to research statistical information on a state in the United States of America. • The student will navigate an online database to locate needed information. • The student will use other information sources (such as nonfiction books and websites) to locate additional information, as needed. • The student will analyze data to determine the best way to visually represent that data. • The student will create an infographic that illustrates the state information discovered. Materials/Resources: • PebbleGo Next online database • Additional information sources, such as the U.S. Census Bureau’s “State and County Quick Facts” website (quickfacts.census.gov) • Free app or website for creating infographics (search for “free infographic app” online or in applicable app store) or paper, markers, and pencils for hand-drawing an infographic • Example infographic (available online by searching “infographics for kids” or in a nonfiction book from the Infographics series by Chris Oxlade, Raintree, ©2014) • Text-based source (such as a nonfiction book or magazine article) presenting similar information as found in the example infographic, for comparison purposes • Note-taking graphic organizer, such as State Infographic Notes handout Procedures/Lesson Activities: Focus 1. Show students an age-appropriate infographic from the Infographics series by Chris Oxlade or one found on the Internet. Show them similar information provided in a text-based format. -

Geotime As an Adjunct Analysis Tool for Social Media Threat Analysis and Investigations for the Boston Police Department Offeror: Uncharted Software Inc

GeoTime as an Adjunct Analysis Tool for Social Media Threat Analysis and Investigations for the Boston Police Department Offeror: Uncharted Software Inc. 2 Berkeley St, Suite 600 Toronto ON M5A 4J5 Canada Business Type: Canadian Small Business Jurisdiction: Federally incorporated in Canada Date of Incorporation: October 8, 2001 Federal Tax Identification Number: 98-0691013 ATTN: Jenny Prosser, Contract Manager, [email protected] Subject: Acquiring Technology and Services of Social Media Threats for the Boston Police Department Uncharted Software Inc. (formerly Oculus Info Inc.) respectfully submits the following response to the Technology and Services of Social Media Threats RFP. Uncharted accepts all conditions and requirements contained in the RFP. Uncharted designs, develops and deploys innovative visual analytics systems and products for analysis and decision-making in complex information environments. Please direct any questions about this response to our point of contact for this response, Adeel Khamisa at 416-203-3003 x250 or [email protected]. Sincerely, Adeel Khamisa Law Enforcement Industry Manager, GeoTime® Uncharted Software Inc. [email protected] 416-203-3003 x250 416-708-6677 Company Proprietary Notice: This proposal includes data that shall not be disclosed outside the Government and shall not be duplicated, used, or disclosed – in whole or in part – for any purpose other than to evaluate this proposal. If, however, a contract is awarded to this offeror as a result of – or in connection with – the submission of this data, the Government shall have the right to duplicate, use, or disclose the data to the extent provided in the resulting contract. GeoTime as an Adjunct Analysis Tool for Social Media Threat Analysis and Investigations 1. -

Animated Presentation of Static Infographics with Infomotion

Eurographics Conference on Visualization (EuroVis) 2021, Volume 40 (2021), Number 3. R. Borgo, G. E. Marai, and T. von Landesberger (Guest Editors). Animated Presentation of Static Infographics with InfoMotion. Yun Wang (1), Yi Gao (1,2), Ray Huang (1), Weiwei Cui (1), Haidong Zhang (1), and Dongmei Zhang (1). (1) Microsoft Research Asia, (2) Nanjing University. Figure 1: (a) An example infographic design. (b) The animated presentations for this infographic show the start time, duration, and animation effects applied to the visual elements. InfoMotion automatically generates animated presentations of static infographics. Abstract By displaying visual elements logically in temporal order, animated infographics can help readers better understand layers of information expressed in an infographic. While many techniques and tools target the quick generation of static infographics, few support animation designs. We propose InfoMotion that automatically generates animated presentations of static infographics. We first conduct a survey to explore the design space of animated infographics. Based on this survey, InfoMotion extracts graphical properties of an infographic to analyze the underlying information structures; then, animation effects are applied to the visual elements in the infographic in temporal order to present the infographic. The generated animations can be used in data videos or presentations. We demonstrate the utility of InfoMotion with two example applications, including mixed-initiative animation authoring and animation recommendation. -

Arcgis® + Geotime®: GIS Technology to Support the Analysis Of

ArcGIS® + GeoTime®: GIS technology to support the analysis of telephone traffic data. Giorgio Forti, Miriam Marta, Fabrizio Pauri. Historical mobile phone traffic billboards analysis is becoming increasingly important in investigative activities of public security organizations around the world, and leading technology companies have been trying to respond to the strong demand for the most suitable tools for supporting such activities. Originally developed as a project funded in the United [...] Figure 2: Sample data representation of two cellphone users in GeoTime. Other predefined analysis features are already available (automatic cluster search, who attends sites of investigation interest, mobility compatibility with participation in events, etc.), allowing considerable [...] In many application areas, this is not enough: Geo-spatial and temporal correlations between the data should be studied, so that, on the basis of this insight, the available data - more and more numerous - can be translated into knowledge and therefore in appropriate decisions. In the area of security, to name an example, all this results in the predictive analysis of the spatial-temporal occurrence of crimes. Lastly, a technologically advanced GIS platform must ensure data sharing and enable the world of mobile devices (system of engagement). There are several ways to share data / information, all supported by ArcGIS®, such as: sharing within a single organization, according to the profiles assigned (identity); the sharing of multiple organizations that may / should share confidential data (a very common situation in both Public Security and Emergency Management); public communication, open to all (for example, to report investigative success, or to communicate to citizens unsafe areas for the frequency of criminal offenses). -

Mapping Deforestation Deforestation Has Caused the Destruction of Millions of Acres of Forest Habitat

Mapping deforestation Deforestation has caused the destruction of millions of acres of forest habitat. But what is driving people to cut down so much forested land? And can reforestation efforts help rebuild what has been lost? Part 1: Global Forest Watch Map Directions: Global Forest Watch, a non-profit that monitors deforestation across the planet, has an interactive map that shows how forests have been cut down and expanded since 2000. Go to their website and click on the map (or go to https://www.globalforestwatch.org/map). To the left of the screen, there is a tool (Figure 1) that will allow you to see the change in tree cover gain (blue) and tree cover loss (pink) with unchanged forest (green). Click on the “play” button towards the bottom of the screen to see how each factor has changed since the year 2000 (Figure 1). As the simulation runs, observe different parts of the world and how their forests have changed. 1. What continents saw the most tree cover loss? ____________________________________________ 2. What continents saw the most tree cover gain? ____________________________________________ Part 2: Change in tree cover by country Deforestation has not been evenly distributed across the Earth; some countries have seen a lot of their forests cut down while others have preserved and even expanded their natural habitats. Directions: Select a country by either using the search tool at the bottom left of the screen or by clicking on that country on the map. Once you have selected your country, click analyze (Figure 2), which will show that country’s forested area. -

Information Graphics Design Challenges and Workflow Management Marco Giardina, University of Neuchâtel, Switzerland, Pablo Medi

Online Journal of Communication and Media Technologies Volume: 3 – Issue: 1 – January - 2013 Information Graphics Design Challenges and Workflow Management Marco Giardina, University of Neuchâtel, Switzerland, Pablo Medina, Sensiel Research, Switzerland Abstract Infographics, though still in its infancy in the digital world, may offer an opportunity for media companies to enhance their business processes and value creation activities. This paper describes research about the influence of infographics production and dissemination on media companies’ workflow management. Drawing on infographics examples from New York Times print and online version, this contribution empirically explores the evolution from static to interactive multimedia infographics, the possibilities and design challenges of this journalistic emerging field and its impact on media companies’ activities in relation to technology changes and media-use patterns. Findings highlight some explorative ideas about the required workflow and journalism activities for a successful inception of infographics into online news dissemination practices of media companies. Conclusions suggest that delivering infographics represents a yet not fully tapped opportunity for media companies, but its successful inception on news production routines requires skilled professionals in audiovisual journalism and revised business models. Keywords: newspapers, visual communication, infographics, digital media technology During this time of unprecedented change in journalism, media practitioners and scholars find themselves mired in a new debate on the storytelling potential of data visualization narratives. News organizations including the New York Times, Washington Post and The Guardian are at the fore of innovation and experimentation and regularly incorporate dynamic graphics into their journalism products (Segel, 2011). -

Stories in Geotime

Stories in GeoTime Ryan Eccles, Thomas Kapler, Robert Harper, William Wright Information Visualization (2008) © Stefan John 2008 Summer term 2008 1 Introduction • Story a powerful abstraction − Used by intelligence analysts to conceptualize threats and understand patterns − Part of the analytical process • Oculus Info’s GeoTime™ − Geo-temporal event visualization tool − Augmented with story system − Narratives, hypertext-linked visualizations, visual annotations, and pattern detection − Environment for analytic exploration and communication − Detects geo-temporal patterns − Integrates story narration to increase analytic sense-making cohesion • Assisting the analyst in: − Identifying, − Extracting, − Arranging, and − Presenting stories within the data • Story system − Lets analysts operate at story level − Higher level abstractions of data (behaviors and events) − Staying connected to the evidence − Developed in collaboration with analysts • Formal evaluation showed high utility and usability Overview • Storytelling • Related Work • Geo-Temporal Visualization in GeoTime • Stories in GeoTime • Evaluation 2 Storytelling • First described in Aristotle’s Poetics − Objects of a tragedy (story): - Plot -> arrangements of incidents - Character - Thought -> processes of reasoning leading characters to their respective behavior • Narrative theory suggests: − People are essentially storytellers − Implicit ability to evaluate a story for: - Consistency - Detail - Structure -

Harvard University Center for Geographic Analysis Newsletter November 2009

Harvard University Center for Geographic Analysis Newsletter November 2009 HIGHLIGHTS • CGA 2010 Conference Announcement: Research on Religion • Getty Thesaurus of Geographic Names available for Harvard Use • GeoTime Software Available for Trial • Harvard University Web Map • HealthMap on the iPhone • UCGIS 2010 Winter Meeting • Call for Papers: GIScience Research Track • 2010 GeoDesign Summit • Geography of a Recession • WorldView-2 Satellite Launch • Cartography 2.0 • Reverse Geocoding • Whatever Map • Satellite Image Search Tool • ScanEx Oil Spill Monitoring Project • Diversitydata.org • Google’s Free Navigation Service for Cellphones More... CGA NEWS *CGA 2010 Conference Announcement: Research on Religion* The CGA is pleased to announce our co-sponsored 2010 conference: “New Technologies and Interdisciplinary Research on Religion” to be held March 12-13, 2010. The conference is organized by the Political Economy of Religion Program, Taubman Center, Harvard Kennedy School of Government, and co-sponsored by the Center for Geographic Analysis, Institute for Quantitative Social Science, Harvard University, as well as the Sigma Xi Harvard Chapter. Conference presentations will focus on combining the content of research on religion with database design, geo-referencing, social network analysis, text-mining, remote-sensing, -

Introduction Infographics 3D Computer Graphics

Planetary Science Multimedia Animated Infographics for Scientific Education and Public Outreach INTRODUCTION Visual and graphic representation of scientific knowledge is one of the most effective ways to present complex scientific information in a clear and fast way. Furthermore, the use of animated infographics, video and computerized graphics becomes a vital tool for education in Planetary Science. Using infographics resources arouse the interest of new generations of scientists, engineers and general public, and if it visually represents the concepts and data with high scientific rigor, outreach of infographics resources multiplies exponentially and Planetary Science will be broadcast with a precise conceptualization and interest generated and it will benefit immensely the ability to stimulate the formation of new scientists, engineers and researchers. This multimedia work mixes animated infographics, 3D computer graphics and video with vfx, with the goal of making an introduction to the Planetary Science and its basic concepts. INFOGRAPHICS The production of infographics are made by using software creation and manipulation of vector graphics, such as Adobe Illustrator, CorelDraw and Inkscape. These programs generate SVG files to be viewed in the multimedia. 3D COMPUTER GRAPHICS The 3D computer graphics are modeled in CAD software like Blender, 3DSMax and Bryce and then rendered with plugins like Vray, Maxwell and Flamingo for a photorealistic finish. Terrain models are taken directly from DTM (Digital Terrain Models) data available of Solar System objects in various official sources as NASA, ESA, JAXA, USGS, Google Mars and Google Moon. The DTM can also be raised from topographic maps available online from the same sources, using GIS tools like ArcScene, ArcMap and Global Mapper. -

Poster Summary

Grand Challenge Award 2008: Support for Diverse Analytic Techniques - nSpace2 and GeoTime Visual Analytics Lynn Chien, Annie Tat, Pascale Proulx, Adeel Khamisa, William Wright* Oculus Info Inc. ABSTRACT GeoTime and nSpace2 are interactive visual analytics tools that were used to examine and interpret all four of the 2008 VAST Challenge datasets. GeoTime excels in visualizing event patterns in time and space, or in time and any abstract landscape, while nSpace2 is a web-based analytical tool designed to support every step of the analytical process. nSpace2 is an integrating analytic environment. This paper highlights the VAST analytical experience with these tools that contributed to the success of these tools and this team for the third consecutive year. CR Categories: H.5.2 [Information Interfaces & Presentations]: User Interfaces – Graphical User Interfaces (GUI); I.3.6 [Methodology and Techniques]: Interaction Techniques. nSpace2 allows analysts to share TRIST and Sandbox files in a web environment. Multiple Sandboxes can be made and opened in different browser windows so the analyst can interact with different facets of information at the same time, as shown in Figure 2. The Sandbox is an integrating analytic tool. Results from each of the quite different mini-challenges were combined in the Sandbox environment. The Sandbox is a flexible and expressive thinking environment where ideas are unrestricted and thoughts can flow freely and be recorded by pointing and typing anywhere [3]. The Pasteboard sits at the bottom panel of the browser and relevant information such as entities, evidence and an analyst’s hypotheses can be copied to it and then transferred to other Sandboxes to be assembled according to a different perspective for example.