On-Screen Pre-Deblurring of Digital Images Using the Wavefront

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Vision Screening Training

Vision Screening Training. Child Health and Disability Prevention (CHDP) Program, State of California CMS/CHDP, Department of Health Care Services. Revised 7/8/2013. Acknowledgements: Vision Screening Training Workgroup – comprising Health Educators, Public Health Nurses, and CHDP Medical Consultants; Dr. Selim Koseoglu, Pediatric Ophthalmologist; Local CHDP Staff. Objectives: By the end of the training, participants will be able to: understand the basic anatomy of the eye and the pathway of vision; understand the importance of vision screening; recognize common vision disorders in children; identify the steps of vision screening; describe and implement the CHDP guidelines for referral and follow-up; properly document on the PM 160 vision screening results, referrals and follow-up. IMPORTANCE OF VISION SCREENING. Why Screen for Vision? Early diagnosis of: Refractive Errors (Nearsightedness, Farsightedness); Amblyopia (“lazy eye”); Strabismus (“crossed eyes”). Early intervention is the key to successful treatment. Why Screen for Vision? Vision problems often go undetected because: young children may not realize they cannot see properly; many eye problems do not cause pain, therefore a child may not complain of discomfort; many eye problems may not be obvious, especially among young children; the screening procedure may have been improperly performed. Screening vs. Diagnosis. Screening: 1. Identifies children at risk for certain eye conditions or in need of a professional ... Diagnosis: 1. Identifies the child’s eye condition 2. Allows the eye ...

Jaeger Eye Chart

Jaeger Eye Chart DIRECTIONS FOR USE The Jaeger eye chart (or Jaeger card) is used to test and document near visual acuity at a normal reading distance. Refractive errors and conditions that cause blurry reading vision include astigmatism, hyperopia (farsightedness) and presbyopia (loss of near focusing ability after age 40). If you typically wear eyeglasses or contact lenses full-time, you should wear them during the test. 1. Hold the test card 14 inches from the eyes. Use a tape measure to verify this distance. 2. The card should be illuminated with lighting typical of that used for comfortable reading. 3. Testing usually is performed with both eyes open; but if a significant difference between the two eyes is suspected, cover one eye and test each eye separately. 4. Go to the smallest block of text you feel you can see without squinting, and read that passage aloud. 5. Then try reading the next smaller block of text. (Remember: no squinting!) 6. Continue reading successively smaller blocks of print until you reach a size that is not legible. 7. Record the “J” value of the smallest block of text you can read (example: “J1”). DISCLAIMER: Eye charts measure only visual acuity, which is just one component of good vision. They cannot determine if your eyes are “working overtime” (needing to focus more than normal, which can lead to headaches and eye strain). Nor can they determine if your eyes work properly as a team for clear, comfortable binocular vision and accurate depth perception. Eye charts also cannot detect serious eye problems such as glaucoma or early diabetic retinopathy that could lead to serious vision impairment and even blindness.

Strehl Ratio for Primary Aberrations: Some Analytical Results for Circular and Annular Pupils

1258 J. Opt. Soc. Am./Vol. 72, No. 9/September 1982. Strehl ratio for primary aberrations: some analytical results for circular and annular pupils. Virendra N. Mahajan, The Charles Stark Draper Laboratory, Inc., Cambridge, Massachusetts 02139. Received February 9, 1982. Imaging systems with circular and annular pupils aberrated by primary aberrations are considered. Both classical and balanced (Zernike) aberrations are discussed. Closed-form solutions are derived for the Strehl ratio, except in the case of coma, for which the integral form is used. Numerical results are obtained and compared with Maréchal's formula for small aberrations. It is shown that, as long as the Strehl ratio is greater than 0.6, the Maréchal formula gives its value with an error of less than 10%. A discussion of the Rayleigh quarter-wave rule is given, and it is shown that it provides only a qualitative measure of aberration tolerance. Nonoptimally balanced aberrations are also considered, and it is shown that, unless the Strehl ratio is quite high, an optimally balanced aberration does not necessarily give a maximum Strehl ratio. 1. INTRODUCTION In a recent paper,1 we discussed the problem of balancing a classical aberration in imaging systems having annular pupils with one or more aberrations of lower order to minimize its variance. By using the Gram-Schmidt orthogonalization process, polynomials that are orthogonal over an annulus were ... is shown that, unless the Strehl ratio is quite high, an optimally balanced aberration (in the sense of minimum variance) does not give the maximum possible Strehl ratio. As an example, spherical aberration is discussed in detail. A certain amount of spherical aberration is balanced with an appropriate amount of defocus to minimize its variance across the pupil.

AAO 2018 2019 BCSC Sectio

The American Academy of Ophthalmology is accredited by the Accreditation Council for Continuing Medical Education (ACCME) to provide continuing medical education for physicians. The American Academy of Ophthalmology designates this enduring material for a maximum of 15 AMA PRA Category 1 Credits™. Physicians should claim only the credit commensurate with the extent of their participation in the activity. CME expiration date: June 1, 2021. AMA PRA Category 1 Credits™ may be claimed only once between June 1, 2018, and the expiration date. BCSC® volumes are designed to increase the physician’s ophthalmic knowledge through study and review. Users of this activity are encouraged to read the text and then answer the study questions provided at the back of the book. To claim AMA PRA Category 1 Credits™ upon completion of this activity, learners must demonstrate appropriate knowledge and participation in the activity by taking the posttest for Section 3 and achieving a score of 80% or higher. For further details, please see the instructions for requesting CME credit at the back of the book. The Academy provides this material for educational purposes only. It is not intended to represent the only or best method or procedure in every case, nor to replace a physician’s own judgment or give specific advice for case management. Including all indications, contraindications, side effects, and alternative agents for each drug or treatment is beyond the scope of this material. All information and recommendations should be verified, prior to use, with current information included in the manufacturers’ package inserts or other independent sources, and considered in light of the patient’s condition and history.

PROTOCOL STUDY TITLE: Evaluation of Reading Speed

PROTOCOL STUDY TITLE: Evaluation of ReAding Speed, Contrast Sensitivity, and Work Productivity in Working Individuals with Diabetic Macular Edema Following Treatment with Intravitreal Ranibizumab (ERASER Study). STUDY DRUG: Recombinant humanized anti-VEGF monoclonal antibody fragment (rhuFab V2 [ranibizumab]). SPONSOR: California Retina Research Foundation, 525 E. Micheltorena Street, Suites A & D, Santa Barbara, California, United States 93103. NCT NUMBER: NCT02107131. INVESTIGATOR: Nathan Steinle, MD. SUB-INVESTIGATORS: Robert Avery, MD; Ma’an Nasir, MD; Dante Pieramici, MD; Alessandro Castellarin, MD; Robert See, MD; Stephen Couvillion, MD; Dilsher Dhoot, MD. DATE: 12/4/2015. AMENDMENT: 3. Protocol: ML29184s. Amendment Date: 4 December 2015. Lucentis IST Program. 1. BACKGROUND 1.1 PATHOPHYSIOLOGY Diabetic retinopathy is the leading cause of blindness associated with retinal vascular disease. Macular edema is a major cause of central vision loss in patients presenting with diabetic retinopathy. The prevalence of diabetic macular edema after 15 years of known diabetes is approximately 20% in patients with type 1 diabetes mellitus (DM), 25% in patients with type 2 DM who are taking insulin, and 14% in patients with type 2 DM who do not take insulin. Breakdown of endothelial tight junctions and loss of the blood-retinal barrier between the retinal pigment epithelium and choriocapillaris junction lead to exudation of blood, fluid, and lipid, which in turn leads to thickening of the retina. When these changes involve or threaten the fovea, there is a higher risk of central vision loss. Functional and eventual structural changes in the blood-retinal barrier, as well as reduced retinal blood flow, lead to the development of hypoxia. These changes may result in upregulation and release of vascular endothelial growth factor (VEGF), a 45 kD glycoprotein.

Doctor of Philosophy

RICE UNIVERSITY. 3D sensing by optics and algorithm co-design. By Yicheng Wu. A THESIS SUBMITTED IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE Doctor of Philosophy. APPROVED, THESIS COMMITTEE: Ashok Veeraraghavan, Professor of Electrical and Computer Engineering; Richard Baraniuk, Victor E. Cameron Professor of Electrical and Computer Engineering; Jacob Robinson, Associate Professor of Electrical and Computer Engineering and Bioengineering; Anshumali Shrivastava, Assistant Professor of Computer Science. HOUSTON, TEXAS, April 2021. ABSTRACT 3D sensing provides the full spatial context of the world, which is important for applications such as augmented reality, virtual reality, and autonomous driving. Unfortunately, conventional cameras only capture a 2D projection of a 3D scene, while depth information is lost. In my research, I propose 3D sensors by jointly designing optics and algorithms. The key idea is to optically encode depth on the sensor measurement, and digitally decode depth using computational solvers. This allows us to recover depth accurately and robustly. In the first part of my thesis, I explore depth estimation using wavefront sensing, which is useful for scientific systems. Depth is encoded in the phase of a wavefront. I build a novel wavefront imaging sensor with high resolution (a.k.a. WISH), using a programmable spatial light modulator (SLM) and a phase retrieval algorithm. WISH offers fine phase estimation with significantly better spatial resolution as compared to currently available wavefront sensors.

Screening for Visual Impairment in Children Ages 1–5 Years: Update for the USPSTF

Screening for Visual Impairment in Children Ages 1–5 Years: Update for the USPSTF. AUTHORS: Roger Chou, MD,a,b,c Tracy Dana, MLS,a and Christina Bougatsos, BSa. aOregon Evidence-Based Practice Center and Departments of bMedicine and cMedical Informatics and Clinical Epidemiology, Oregon Health & Science University, Portland, Oregon. KEY WORDS: impaired visual acuity, vision screening, vision tests, preschool children, refractive errors, amblyopia, amblyogenic risk factors, random dot E stereoacuity test, MTI photoscreener, patching, systematic review. ABBREVIATIONS: USPSTF: US Preventive Services Task Force; logMAR: logarithmic minimal angle of resolution; ALSPAC: Avon Longitudinal Study of Parents and Children; RR: relative risk; CI: confidence interval; VIP: Vision in Preschoolers; PLR: positive likelihood ratio; NLR: negative likelihood ratio; MTI: Medical Technology and Innovations; OR: odds ratio; D: diopter(s). abstract CONTEXT: Screening could identify preschool-aged children with vision problems at a critical period of visual development and lead to treatments that could improve vision. OBJECTIVE: To determine the effectiveness of screening preschool-aged children for impaired visual acuity on health outcomes. METHODS: We searched Medline from 1950 to July 2009 and the Cochrane Library through the third quarter of 2009, reviewed reference lists, and consulted experts. We selected randomized trials and controlled observational studies on preschool vision screening and treatments, and studies of diagnostic accuracy of screening tests. One investigator abstracted relevant data, and a second investigator checked data abstraction and quality assessments. RESULTS: Direct evidence on the effectiveness of preschool vision screening for improving visual acuity or other clinical outcomes remains limited and does not adequately address whether screening is more effective than no screening.

Aperture-Based Correction of Aberration Obtained from Optical Fourier Coding and Blur Estimation

Research Article Vol. 6, No. 5 / May 2019 / Optica 647. Computational aberration compensation by coded-aperture-based correction of aberration obtained from optical Fourier coding and blur estimation. JAEBUM CHUNG,1,* GLORIA W. MARTINEZ,2 KAREN C. LENCIONI,2 SRINIVAS R. SADDA,3 AND CHANGHUEI YANG1. 1Department of Electrical Engineering, California Institute of Technology, Pasadena, California 91125, USA 2Office of Laboratory Animal Resources, California Institute of Technology, Pasadena, California 91125, USA 3Doheny Eye Institute, University of California-Los Angeles, Los Angeles, California 90033, USA *Corresponding author: [email protected] Received 29 January 2019; revised 8 April 2019; accepted 12 April 2019 (Doc. ID 359017); published 10 May 2019. We report a novel generalized optical measurement system and computational approach to determine and correct aberrations in optical systems. The system consists of a computational imaging method capable of reconstructing an optical system’s pupil function by adapting overlapped Fourier coding to an incoherent imaging modality. It recovers the high-resolution image latent in an aberrated image via deconvolution. The deconvolution is made robust to noise by using coded apertures to capture images. We term this method coded-aperture-based correction of aberration obtained from overlapped Fourier coding and blur estimation (CACAO-FB). It is well-suited for various imaging scenarios where aberration is present and where providing a spatially coherent illumination is very challenging or impossible. We report the demonstration of CACAO-FB with a variety of samples including an in vivo imaging experiment on the eye of a rhesus macaque to correct for its inherent aberration in the rendered retinal images.

Physics of Digital Photography Index

Physics of digital photography Author: Andy Rowlands ISBN: 978-0-7503-1242-4 (ebook) ISBN: 978-0-7503-1243-1 (hardback) Index (Compiled on 5th September 2018) Abbe cut-off frequency 3-31, 5-31 Abbe's sine condition 1-58 aberration function see "wavefront error function" aberration transfer function 5-32 aberrations 1-3, 1-8, 3-31, 5-25, 5-32 absolute colourimetry 4-13 ac value 3-13 achromatic (colour) see "greyscale" acutance 5-38 adapted homogeneity-directed see "demosaicing methods" adapted white 4-33 additive colour space 4-22 Adobe® colour matrix 4-42, 4-43 Adobe® digital negative 4-40, 4-42 Adobe® forward matrix 4-41, 4-42, 4-47, 4-49 Adobe® Photoshop® 4-60, 4-61, 5-45 Adobe® RGB colour space 4-27, 4-60, 4-61 adopted white 4-33, 4-34 Airy disk 3-15, 3-27, 5-33, 5-46, 5-49 aliasing 3-43, 3-44, 3-47, 5-42, 5-45 amplitude OTF see "amplitude transfer function" amplitude PSF 3-26 amplitude transfer function 3-26 analog gain 2-24 analog-to-digital converter 2-2, 2-5, 3-61, 5-56 analog-to-digital unit see "digital number" angular field of view 1-21, 1-23, 1-24 anti-aliasing filter 5-45, also see "optical low-pass filter" aperture-diffraction PSF 3-27, 5-33 aperture-diffraction MTF 3-29, 5-31, 5-33, 5-35 aperture function 3-23, 3-26 aperture priority mode 2-32, 5-71 aperture stop 1-22 aperture value 1-56 aplanatic lens 1-57 apodisation filter 5-34 arpetal ratio 1-50 astigmatism 5-26 auto-correlation 3-30 auto-ISO mode 2-34 average photometry 2-17, 2-18 backside-illuminated device 3-57, 5-58 band-limited function 3-45, 5-44 banding see "posterisation"

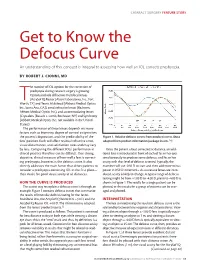

Get to Know the Defocus Curve an Understanding of This Concept Is Integral to Assessing How Well an IOL Corrects Presbyopia

CATARACT SURGERY FEATURE STORY. Get to Know the Defocus Curve. An understanding of this concept is integral to assessing how well an IOL corrects presbyopia. BY ROBERT J. CIONNI, MD. The number of IOL options for the correction of presbyopia during cataract surgery is growing. Options include diffractive multifocal lenses (AcrySof IQ Restor [Alcon Laboratories, Inc., Fort Worth, TX] and Tecnis Multifocal [Abbott Medical Optics Inc., Santa Ana, CA]), zonal refractive lenses (ReZoom; Abbott Medical Optics Inc.), and accommodating lenses (Crystalens [Bausch + Lomb, Rochester, NY] and Synchrony [Abbott Medical Optics Inc.; not available in the United States]). The performance of these lenses depends on many factors such as biometry, degree of corneal astigmatism, the patient’s disposition, and the predictability of the lens’ position. Each will affect residual refractive error, visual disturbances, and satisfaction rates and may vary by lens. Comparing the different IOLs’ performance in clinical practice therefore can be difficult. One strong, objective, clinical measure of how well a lens is correcting presbyopia, however, is the defocus curve. It also directly addresses the main factor that drives patients to consider a presbyopia-correcting IOL in the first place ... Figure 1. Relative defocus curves from product inserts. (Data adapted from product information/package inserts.1,2) Once the patient is best corrected at distance, an additional lens is introduced in front of each of his or her eyes simultaneously to produce some defocus, and his or her acuity with that level of defocus is tested. Typically, the examiner will use -0.50 D to start and then add more minus power in 0.50 D increments.

Tese De Doutorado Nº 200 a Perifocal Intraocular Lens

DOCTORAL THESIS No. 200. A PERIFOCAL INTRAOCULAR LENS WITH EXTENDED DEPTH OF FOCUS. Felipe Tayer Amaral. Defense date: 25/05/2015. Universidade Federal de Minas Gerais, Escola de Engenharia, Programa de Pós-Graduação em Engenharia Elétrica. Doctoral thesis submitted to the Examining Committee appointed by the Board of the Graduate Program in Electrical Engineering of the School of Engineering of the Universidade Federal de Minas Gerais, as a requirement for obtaining the degree of Doctor in Electrical Engineering. Advisor: Prof. Davies William de Lima Monteiro. Belo Horizonte, MG, May 2015. To my parents, for always believing in me, for dedicating themselves to me with so much love and for encouraging me to dream big to make a difference. ACKNOWLEDGEMENTS I would like to acknowledge all my friends and family for the fondness and incentive whenever I needed. I thank all those who contributed directly and indirectly to this work. I thank my parents Suzana and Carlos for being by my side in the darkest hours comforting me, advising me and giving me all the support and unconditional love to move on. I thank you for everything you taught me to be a better person: you are winners and my life example! I thank Fernanda for being so lovely and for listening to my ideas and for encouraging me all the time.

Your Source Vision

Good-Lite Product Catalog. YOUR SOURCE for VISION. 1-800-263-3557. Cognitive Test • Grating Acuity • Wall Charts • Handheld Charts • EyeSpy 20/20 • 10x18 Charts • ETDRS Charts • Low Vision • Intermediate Vision • Near Vision • Reading Cards • ETDRS Cabinet • Spot Vision Screener • ESV1200 - 9x14 • ESV1018 - 10x18 • Insta-Line • CSV-1000 • Super Pinhole • Accessories • Screening Software • Testing Software • Fixation • Occluders • Fun Frames • Prisms • Hyperopia • Retinoscopy • Eye Models • Stereo Test • Worth 4-Dot • Color Books & Tests • D15 Style Color Test • Desaturated Color Test • Projector Slides • Continuous Text • Adult Charts • Children Charts • Pediatric Charts • Vectographic Slides • Amsler Grid • Campimeter • Prism Bar • Loose Prism • Spanish • Vectograph • Maddox • Cylinders • Phoropter • Trial Lens/Frames • Filters • Risley • Eye Patches • LEA Symbols • LEA Numbers • Contrast Sensitivity • Visual Field • Cognitive Vision • Adaptation • Color Vision • Optokinetic • Tangent Screen • Flat & Curved Prism • Magnifier • Flipper • Slit Lamp. Celebrating More Than 80 Years of Good-Lite. The Good-Lite Company is celebrating more than 80 years as the industry leader in illuminated cabinets; evidence-based eye charts, such as the LEA Symbols, and many more vision screening and testing products available in this catalog and online. The combined efforts of Robert Good, ... In the 1970s, Palmer worked with Otto Lippmann, MD, to develop HOTV optotypes using Sloan letters. Lippmann’s HOTV optotypes (a modified version of a Stycar matching letters test based on Snellen letters) are useful for screening and testing vision of children as young as age 3 years. ... in children and adults with developmental delays/disabilities. The American Academy of Pediatrics, et al., includes LEA Symbols on its list of recommended tests for preschool vision screening. Good-Lite values its key role in helping vision ...