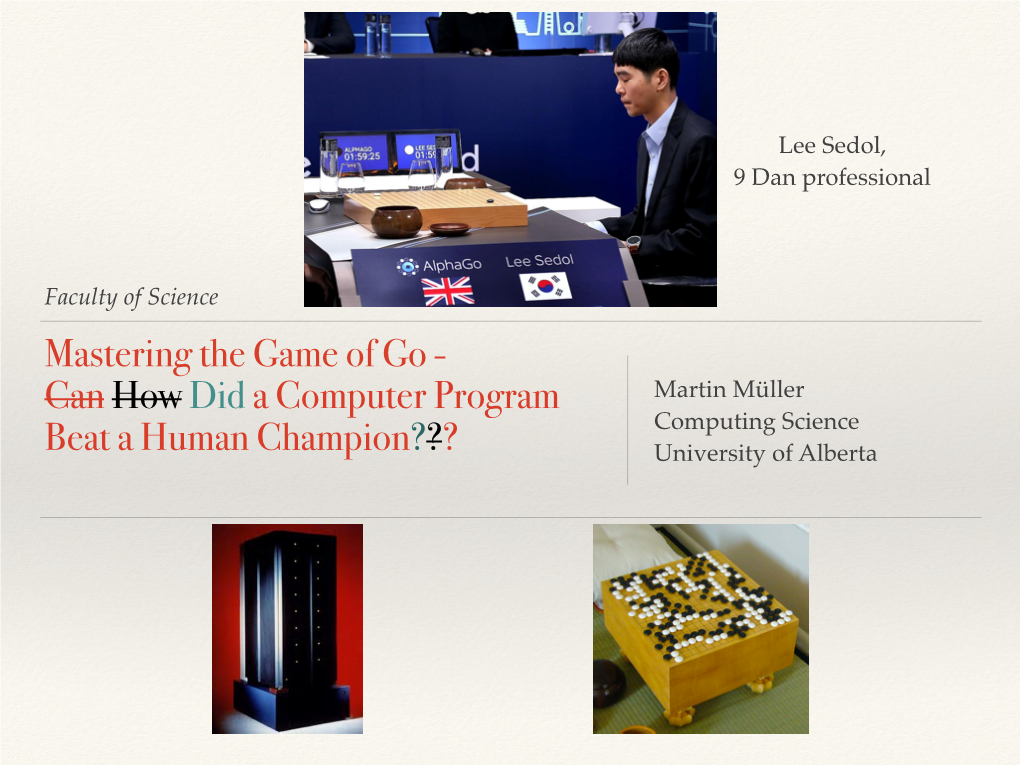

Mastering the Game of Go: How Did a Computer Program Beat a Human Champion? Martin Müller, Computing Science, University of Alberta

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


ELF OpenGo: An Analysis and Open Reimplementation of AlphaZero

ELF OpenGo: An Analysis and Open Reimplementation of AlphaZero. Yuandong Tian, Jerry Ma*, Qucheng Gong*, Shubho Sengupta*, Zhuoyuan Chen, James Pinkerton, C. Lawrence Zitnick. Abstract: The AlphaGo, AlphaGo Zero, and AlphaZero series of algorithms are remarkable demonstrations of deep reinforcement learning's capabilities, achieving superhuman performance in the complex game of Go with progressively increasing autonomy. However, many obstacles remain in the understanding of and usability of these promising approaches by the research community. Toward elucidating unresolved mysteries and facilitating future research, we propose ELF OpenGo, an open-source reimplementation of the AlphaZero algorithm. ELF OpenGo is the first open-source Go AI to convincingly demonstrate superhuman performance with a perfect (20:0) record against global top professionals. We apply ELF OpenGo to conduct extensive ablation studies, and to identify and analyze numerous interesting phenomena in both the model training ... Introduction: However, these advances in playing ability come at significant computational expense. A single training run requires millions of selfplay games and days of training on thousands of TPUs, which is an unattainable level of compute for the majority of the research community. When combined with the unavailability of code and models, the result is that the approach is very difficult, if not impossible, to reproduce, study, improve upon, and extend. In this paper, we propose ELF OpenGo, an open-source reimplementation of the AlphaZero (Silver et al., 2018) algorithm for the game of Go. We then apply ELF OpenGo toward the following three additional contributions. First, we train a superhuman model for ELF OpenGo. After running our AlphaZero-style training software on 2,000 GPUs for 9 days, our 20-block model has achieved superhuman performance that is arguably comparable to the 20-block models described in Silver et al. (2017) and Silver et al. (2018).

Human Vs. Computer Go: Review and Prospect

This article is accepted and will be published in IEEE Computational Intelligence Magazine in August 2016. Human vs. Computer Go: Review and Prospect. Chang-Shing Lee*, Mei-Hui Wang (Department of Computer Science and Information Engineering, National University of Tainan, Taiwan); Shi-Jim Yen (Department of Computer Science and Information Engineering, National Dong Hwa University, Taiwan); Ting-Han Wei, I-Chen Wu (Department of Computer Science, National Chiao Tung University, Taiwan); Ping-Chiang Chou, Chun-Hsun Chou (Taiwan Go Association, Taiwan); Ming-Wan Wang (Nihon Ki-in Go Institute, Japan); Tai-Hsiung Yang (Haifong Weiqi Academy, Taiwan). Abstract: The Google DeepMind challenge match in March 2016 was a historic achievement for computer Go development. This article discusses the development of computational intelligence (CI) and its relative strength in comparison with human intelligence for the game of Go. We first summarize the milestones achieved for computer Go from 1998 to 2016. Then, the computer Go programs that have participated in previous IEEE CIS competitions as well as methods and techniques used in AlphaGo are briefly introduced. Commentaries from three high-level professional Go players on the five AlphaGo versus Lee Sedol games are also included. We conclude that AlphaGo beating Lee Sedol is a huge achievement in artificial intelligence (AI) based largely on CI methods. In the future, powerful computer Go programs such as AlphaGo are expected to be instrumental in promoting Go education and AI real-world applications. I. Computer Go Competitions: The IEEE Computational Intelligence Society (CIS) has funded human vs. computer Go competitions in IEEE CIS flagship conferences since 2009.

Commented Games by Lee Sedol, Vol. 2: A New Book by Lee Sedol Translated into English

Hello everyone. An English translation of the second of Lee Sedol's three volumes has just been published: "Commented Games by Lee Sedol, Vol. 1-3: One Step Closer to the Summit". More information follows below about the books, which can be obtained from the Go Mind club. The book's table of contents and several sample pages can be viewed here: http://www.go-mind.com/bbs/download.php?id=64 Enjoy your studies! Regards, Shavit, 450-0544054, Mind Go Club, www.go-mind.com About the books: In mid-0449 Lee Sedol took a break from professional play and wrote three volumes in which he analyzed his games in depth. The first volume contains more than 404 pages and is considered the Korean counterpart of "Invincible: The Games of Shusaku". In each volume Lee Sedol analyzes 4 of his most important games in great detail and candidly shares his thoughts and feelings with readers. The series offers a rare glimpse into the thinking of one of the best players in the world. In the first volume Lee Sedol reviews his winning game from 0444, his loss to Lee Chang-ho in the LG Cup in 0442, and his winning game in the Fujitsu Cup in 0440. Hundreds of diagrams and dozens of kifu are presented for each game. In addition, Lee Sedol shares his experiences as a child growing up on a farm in Bigeum, and his thoughts and feelings during and after the games. As an anecdote: "dol" means "stone" in Korean. Se-dol means "strong stone", or, if you like, in Hebrew: even tzur (flint), even halamish, or Bornstein (a surname). From the book back page: "My ultimate goal is to be the best player in the world. Winning my first international title was a big step toward reaching this objective. I remember how fervently my father had taught me, and how he dreamed of watching me grow to be the world's best.

New Tournament on Dragon Go Server; "Fatti più in là" (Move Over), a Gentle Invasion Technique; Francesco Marigo

Year 1 - Autumn 2007 - Issue 2. In this issue: Francesco Marigo: Champion Again!; "Fatti più in là" (Move Over), a gentle invasion technique; New tournament on Dragon Go Server; The Return of the Cranes. Editorial: Welcome to the second issue. Coming back from vacation is hard, and not only for the friendly cranes on our cover. The Go world went into its summer break after the European Championship, held this year in Austria, and in these first days of autumn it is gently waking up again with tournaments, workshops, and events. Right on schedule, this magazine returns with its second issue. Many of you will receive this issue directly in your inbox: over the summer we received numerous subscription requests. We also received a good number of offers to contribute; some already appear in this issue, others will appear in the next one, and still others are under consideration. Now to the new issue. To prepare and get into the "Italian Championship" atmosphere, we have a double interview with Francesco and Ramon, in which they analyze the last game of the final. The reviews of films with references to Go continue, and this issue features a milestone: The Go Masters. Naturally the technical section is not missing: we present a more tactical article on an invasion strategy and a more strategic one that aims to introduce the "cosmic" style. But we do not stop there: we also have a problems section, the presentation of a new online tournament, and a comparison between Go and the game played by our chess-playing cousins. Enough words; we leave you to your reading, hoping that in this issue too ...

Mastering the Game of Go with Deep Neural Networks and Tree Search

Mastering the game of Go with deep neural networks and tree search. Nature, Jan 2016. Roadmap: What is this paper about? Deep learning; the search problem; how to explore a huge tree (graph). AlphaGo videos: https://www.youtube.com/watch?v=53YLZBSS0cc https://www.youtube.com/watch?v=g-dKXOlsf98 AlphaGo vs European Champion (Fan Hui, 2-dan), October 5-9, 2015, official match. Time limit: 1 hour. AlphaGo wins (5:0). AlphaGo vs World Champion (Lee Sedol, 9-dan), March 9-15, 2016, official match. Time limit: 2 hours. Venue: Seoul, Four Seasons Hotel. Image source: Josun Times, Jan 28th 2015. Lee Sedol (wiki). Photo source: Maeil Economics, 2013/04. AlphaGo is estimated to be around ~5-dan (multiple machines; European champion). The Game Go. Elo ranking: http://www.goratings.org/history/ Lee Sedol vs Ke Jie. How about other games? Tic Tac Toe; Chess; Deep Blue (1996). AlphaGo is the Skynet? Go game: simple rules, high complexity. Different games. Search problem (the search space): the "tree" in Tic Tac Toe, the "tree" of Chess, the "tree" of Go. Search problem (how to search): MiniMax in Tic Tac Toe. [Figure: adversarial search (MiniMax) trees with node values 1, 0, and -1.] What is the problem? 1. Generate the search tree. 2. Use MiniMax search. The size of the tree: Tic Tac Toe: b = 9, d = 9. Chess: b = 35, d = 80. Go: b = 250, d = 150. Here b is the number of legal moves per position and d is the depth (game length).
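The two points in this excerpt, minimax search and the size of the tree it must explore, can be sketched in a few lines of Python. This is an illustrative sketch, not code from the slides: the function names `log10_tree_size`, `winner`, and `minimax`, the nine-character string board encoding, and the use of memoization are all assumptions made here. It treats b^d as a rough bound on tree size, using the b and d values quoted above, and runs plain minimax on Tic Tac Toe, where the tree is small enough to search exhaustively.

```python
import math
from functools import lru_cache
from typing import Optional

# Branching factor b and depth d as quoted in the excerpt.
GAMES = {"Tic Tac Toe": (9, 9), "Chess": (35, 80), "Go": (250, 150)}


def log10_tree_size(b: int, d: int) -> float:
    """Return log10(b**d), the order of magnitude of the naive tree-size bound."""
    return d * math.log10(b)


# Hypothetical board encoding: a 9-character string of "X", "O", or "." (empty).
WIN_LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
             (0, 3, 6), (1, 4, 7), (2, 5, 8),
             (0, 4, 8), (2, 4, 6)]


def winner(board: str) -> Optional[str]:
    """Return "X" or "O" if that player has three in a row, else None."""
    for i, j, k in WIN_LINES:
        if board[i] != "." and board[i] == board[j] == board[k]:
            return board[i]
    return None


@lru_cache(maxsize=None)  # memoization is an addition here, not part of basic minimax
def minimax(board: str, player: str) -> int:
    """Game value for X (+1 win, 0 draw, -1 loss) with `player` to move."""
    won = winner(board)
    if won is not None:
        return 1 if won == "X" else -1
    if "." not in board:
        return 0  # board full, nobody won: draw
    other = "O" if player == "X" else "X"
    values = [minimax(board[:i] + player + board[i + 1:], other)
              for i, cell in enumerate(board) if cell == "."]
    # X maximizes the value, O minimizes it.
    return max(values) if player == "X" else min(values)


if __name__ == "__main__":
    for name, (b, d) in GAMES.items():
        print(f"{name}: b^d is about 10^{log10_tree_size(b, d):.0f}")
    print("Value of the empty Tic Tac Toe board:", minimax("." * 9, "X"))
```

Under these figures the Go bound comes out near 10^360, against roughly 10^9 for Tic Tac Toe, which is why exhaustive minimax is practical for the latter and hopeless for the former.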

Mastering the Game of Go Without Human Knowledge

Mastering the Game of Go without Human Knowledge. David Silver*, Julian Schrittwieser*, Karen Simonyan*, Ioannis Antonoglou, Aja Huang, Arthur Guez, Thomas Hubert, Lucas Baker, Matthew Lai, Adrian Bolton, Yutian Chen, Timothy Lillicrap, Fan Hui, Laurent Sifre, George van den Driessche, Thore Graepel, Demis Hassabis. DeepMind, 5 New Street Square, London EC4A 3TW. *These authors contributed equally to this work. A long-standing goal of artificial intelligence is an algorithm that learns, tabula rasa, superhuman proficiency in challenging domains. Recently, AlphaGo became the first program to defeat a world champion in the game of Go. The tree search in AlphaGo evaluated positions and selected moves using deep neural networks. These neural networks were trained by supervised learning from human expert moves, and by reinforcement learning from self-play. Here, we introduce an algorithm based solely on reinforcement learning, without human data, guidance, or domain knowledge beyond game rules. AlphaGo becomes its own teacher: a neural network is trained to predict AlphaGo's own move selections and also the winner of AlphaGo's games. This neural network improves the strength of tree search, resulting in higher quality move selection and stronger self-play in the next iteration. Starting tabula rasa, our new program AlphaGo Zero achieved superhuman performance, winning 100-0 against the previously published, champion-defeating AlphaGo. Much progress towards artificial intelligence has been made using supervised learning systems that are trained to replicate the decisions of human experts [1–4]. However, expert data is often expensive, unreliable, or simply unavailable. Even when reliable data is available it may impose a ceiling on the performance of systems trained in this manner [5].

Challenge Match Game 2: “Invention”

Challenge Match, 8-15 March 2016. Game 2: "Invention". Commentary by Fan Hui. Go expert analysis by Gu Li and Zhou Ruiyang. Translated by Lucas Baker, Thomas Hubert, and Thore Graepel. Invention: AlphaGo's victory in the first game stunned the world. Many Go players, however, found the result very difficult to accept. Not only had Lee's play in the first game fallen short of his usual standards, but AlphaGo had not even needed to play any spectacular moves to win. Perhaps the first game was a fluke? Though they proclaimed it less stridently than before, the overwhelming majority of commentators were still betting on Lee to claim victory. Reporters arrived in much greater numbers that morning, and with the increased attention from the media, the pressure on Lee rose. After all, the match had begun with everyone expecting Lee to win either 5-0 or 4-1. I entered the playing room fifteen minutes before the game to find Demis Hassabis already present, looking much more relaxed than the day before. Four minutes before the starting time, Lee came in with his daughter. Perhaps he felt that she would bring him luck? As a father myself, I know that feeling well. By convention, the media is allowed a few minutes to take pictures at the start of a major game. The room was much fuller this time, another reflection of the increased focus on the match. Today, AlphaGo would take Black, and everyone was eager to see what opening it would choose. Whatever it played would represent what AlphaGo believed to be best for Black.

Artificial Neural Networks for Wireless Structural Control Ghazi Binarandi Purdue University

Purdue University, Purdue e-Pubs. Open Access Theses, Theses and Dissertations, 4-2016. Artificial neural networks for wireless structural control. Ghazi Binarandi, Purdue University. Follow this and additional works at: https://docs.lib.purdue.edu/open_access_theses Part of the Civil Engineering Commons. Recommended Citation: Binarandi, Ghazi, "Artificial neural networks for wireless structural control" (2016). Open Access Theses. 748. https://docs.lib.purdue.edu/open_access_theses/748 This document has been made available through Purdue e-Pubs, a service of the Purdue University Libraries. Please contact [email protected] for additional information. Graduate School Form 30 (Updated). Purdue University Graduate School, Thesis/Dissertation Acceptance. This is to certify that the thesis/dissertation prepared by Ghazi Binarandi, entitled Artificial Neural Networks for Wireless Structural Control, for the degree of Master of Science in Civil Engineering, is approved by the final examining committee: Shirley J. Dyke (Chair), Arun Prakash, Julio Ramirez. To the best of my knowledge and as understood by the student in the Thesis/Dissertation Agreement, Publication Delay, and Certification Disclaimer (Graduate School Form 32), this thesis/dissertation adheres to the provisions of Purdue University's "Policy of Integrity in Research" and the use of copyright material. Approved by Major Professor(s): Shirley J. Dyke. Approved by: Dulcy Abraham, Head of the Departmental Graduate Program. Date: 4/20/2016. Artificial Neural Networks for Wireless Structural Control. A Thesis Submitted to the Faculty of Purdue University by Ghazi Binarandi, In Partial Fulfillment of the Requirements for the Degree of Master of Science in Civil Engineering. May 2016, Purdue University, West Lafayette, Indiana. To my Mom; to my Dad.

Bounded Rationality and Artificial Intelligence

No. 2019-04. The Game of Go: Bounded Rationality and Artificial Intelligence. Cassey Lee, ISEAS – Yusof Ishak Institute, [email protected]. May 2019. Abstract: The goal of this essay is to examine the nature and relationship between bounded rationality and artificial intelligence (AI) in the context of recent developments in the application of AI to two-player zero sum games with perfect information such as Go. This is undertaken by examining the evolution of AI programs for playing Go. Given that bounded rationality is inextricably linked to the nature of the problem to be solved, the complexity of Go is examined using cellular automata (CA). Keywords: Bounded Rationality, Artificial Intelligence, Go. JEL Classification: B41, C63, C70. "The rules of Go are so elegant, organic, and rigorously logical that if intelligent life forms exist elsewhere in the universe, they almost certainly play Go." (Edward Lasker) "We have never actually seen a real alien, of course. But what might they look like? My research suggests that the most intelligent aliens will actually be some form of artificial intelligence (or AI)." (Susan Schneider) 1. Introduction: The concepts of bounded rationality and artificial intelligence (AI) do not often appear simultaneously in research articles. Economists discussing bounded rationality rarely mention AI. This is reciprocated by computer scientists writing on AI. This is surprising because Herbert Simon was a pioneer in both bounded rationality and AI. For his contributions to these areas, he is the only person who has received the highest accolades in both economics (the Nobel Prize in 1978) and computer science (the Turing Award in 1975). Simon undertook research on both bounded rationality and AI simultaneously in the 1950s and these interests persisted throughout his research career.

Mastering the Game of Go Without Human Knowledge

Article. doi:10.1038/nature24270. Mastering the game of Go without human knowledge. David Silver*, Julian Schrittwieser*, Karen Simonyan*, Ioannis Antonoglou, Aja Huang, Arthur Guez, Thomas Hubert, Lucas Baker, Matthew Lai, Adrian Bolton, Yutian Chen, Timothy Lillicrap, Fan Hui, Laurent Sifre, George van den Driessche, Thore Graepel & Demis Hassabis. A long-standing goal of artificial intelligence is an algorithm that learns, tabula rasa, superhuman proficiency in challenging domains. Recently, AlphaGo became the first program to defeat a world champion in the game of Go. The tree search in AlphaGo evaluated positions and selected moves using deep neural networks. These neural networks were trained by supervised learning from human expert moves, and by reinforcement learning from self-play. Here we introduce an algorithm based solely on reinforcement learning, without human data, guidance or domain knowledge beyond game rules. AlphaGo becomes its own teacher: a neural network is trained to predict AlphaGo's own move selections and also the winner of AlphaGo's games. This neural network improves the strength of the tree search, resulting in higher quality move selection and stronger self-play in the next iteration. Starting tabula rasa, our new program AlphaGo Zero achieved superhuman performance, winning 100–0 against the previously published, champion-defeating AlphaGo. Much progress towards artificial intelligence has been made using supervised learning systems that are trained to replicate the decisions of human experts [1–4]. However, expert data sets are often expensive, ... trained solely by self-play reinforcement learning, starting from random play, without any supervision or use of human data. Second, it uses only the black and white stones from the board as input features.

Challenge Match Game 4: “Endurance”

Challenge Match, 8-15 March 2016. Game 4: "Endurance". Commentary by Fan Hui. Go expert analysis by Gu Li and Zhou Ruiyang. Translated by Lucas Baker, Thomas Hubert, and Thore Graepel. Endurance: When I arrived in the playing room on the morning of the fourth game, everyone appeared more relaxed than before. Whatever happened today, the match had already been decided. Nonetheless, there were still two games to play, and a true professional like Lee must give his all each time he sits down at the board. When Lee arrived in the playing room, he looked serene, the burden of expectation lifted. Perhaps he would finally recover his composure, forget his surroundings, and simply play his best Go. One thing was certain: it was not in Lee's nature to surrender. There were many fewer reporters in the press room this time. It seemed the media thought the interesting part was finished, and the match was headed for a final score of 5-0. But in a game of Go, although the information is open for all to see, often the results defy our expectations. Until the final stone is played, anything is possible. Moves 1-13: For the fourth game, Lee took White. Up to move 11, the opening was the same as the second game. AlphaGo is an extremely consistent player, and once it thinks a move is good, its opinion will not change. During the commentary for that game, I mentioned that AlphaGo prefers to tenuki with 12 and approach at B. Previously, Lee chose to finish the joseki normally at A.

Details Announced for Google DeepMind Challenge Match

Release: 9am GMT Monday 22nd February. Details announced for Google DeepMind Challenge Match. Landmark five-match tournament between the Grand Master-defeating Go computer program, AlphaGo, and best human player of the last decade, Lee Sedol, will be held from March 9 to March 15 at the Four Seasons Hotel Seoul. February 22, 2016 (Seoul, South Korea): Following breakthrough research on AlphaGo, the first computer program to beat a professional human Go player, Google DeepMind has announced details of the ultimate challenge to play the legendary Lee Sedol, the top Go player in the world over the past decade. AlphaGo will play Lee Sedol in a five-game challenge match to be held from Wednesday, March 9 to Tuesday, March 15 in Seoul, South Korea. The games will be even (no handicap), with $1 million USD in prize money for the winner. If AlphaGo wins, the prize money will be donated to UNICEF, STEM and Go charities. Regarded as the outstanding grand challenge for artificial intelligence, Go has been viewed as one of the hardest games for computers to master due to its sheer complexity, which makes brute-force exhaustive search intractable (for example, there are more possible board configurations than the number of atoms in the universe). DeepMind released details of the breakthrough in a paper published in the scientific journal Nature last month. The matches will be held at the Four Seasons Hotel Seoul, starting at 1pm local time (4am GMT; day before 11pm ET, 8pm PT) on the following days: 1.