Temporal Logic and Model Checking



Recommended publications


Reasoning in the Temporal Logic of Actions

BRICS DS-96-1. U. Engberg: Reasoning in the Temporal Logic of Actions. The design and implementation of an interactive computer system. Urban Engberg. BRICS (Basic Research in Computer Science) Dissertation Series DS-96-1, ISSN 1396-7002, August 1996. Copyright © 1996, BRICS, Department of Computer Science, University of Aarhus. All rights reserved. Reproduction of all or part of this work is permitted for educational or research use on condition that this copyright notice is included in any copy. See back inner page for a list of recent publications in the BRICS Dissertation Series. Copies may be obtained by contacting: BRICS, Department of Computer Science, University of Aarhus, Ny Munkegade, building 540, DK-8000 Aarhus C, Denmark. Telephone: +45 8942 3360. Telefax: +45 8942 3255. Internet: [email protected]. BRICS publications are in general accessible through WWW and anonymous FTP: http://www.brics.dk/ ftp ftp.brics.dk (cd pub/BRICS). Ph.D. Dissertation, Department of Computer Science, University of Aarhus, Denmark. A Dissertation Presented to the Faculty of Science of the University of Aarhus in Partial Fulfillment of the Requirements for the Ph.D. Degree by Urban Engberg, September 1995. Abstract: Reasoning about algorithms stands out as an essential challenge of computer science. Much work has been put into the development of formal methods, within recent years focusing especially on concurrent algorithms.

The Rise and Fall of LTL

The Rise and Fall of LTL. Moshe Y. Vardi, Rice University.

Monadic Logic. Monadic Class: first-order logic with = and monadic predicates; captures syllogisms. • (∀x)P(x), (∀x)(P(x) → Q(x)) ⊨ (∀x)Q(x). [Löwenheim, 1915]: The Monadic Class is decidable. • Proof: bounded-model property: if a sentence is satisfiable, it is satisfiable in a structure of bounded size. • Proof technique: quantifier elimination. Monadic Second-Order Logic: allow second-order quantification on monadic predicates. [Skolem, 1919]: Monadic Second-Order Logic is decidable, via the bounded-model property and quantifier elimination. Question: What about <?

Nondeterministic Finite Automata. A = (Σ, S, S_0, ρ, F) • Alphabet: Σ • States: S • Initial states: S_0 ⊆ S • Nondeterministic transition function: ρ : S × Σ → 2^S • Accepting states: F ⊆ S. Input word: a_0, a_1, ..., a_{n−1}. Run: s_0, s_1, ..., s_n • s_0 ∈ S_0 • s_{i+1} ∈ ρ(s_i, a_i) for i ≥ 0. Acceptance: s_n ∈ F. Recognition: L(A), the words accepted by A. Example: [figure: automaton diagram with transitions labelled 0 and 1] ends with 1's. Fact: NFAs define the class Reg of regular languages.

Logic of Finite Words. View a finite word w = a_0, ..., a_{n−1} over alphabet Σ as a mathematical structure: • Domain: 0, ..., n − 1 • Binary relation: < • Unary relations: {P_a : a ∈ Σ}. First-Order Logic (FO): • Unary atomic formulas: P_a(x) (a ∈ Σ) • Binary atomic formulas: x < y. Example: (∃x)((∀y)(¬(x < y)) ∧ P_a(x)): last letter is a. Monadic Second-Order Logic (MSO): • Monadic second-order quantifier: ∃Q • New unary atomic formulas: Q(x).

NFA vs. MSO. Theorem [Büchi, Elgot, Trakhtenbrot, 1957-8 (independently)]: MSO ≡ NFA • Both MSO and NFA define the class Reg.
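The run and acceptance conditions for an NFA given in the slides can be illustrated with a short simulation. This is a minimal sketch under stated assumptions, not code from the slides: the function `nfa_accepts` and the concrete automaton (states `q0`, `q1`, accepting words over {0, 1} whose last letter is 1) are hypothetical, chosen because the slide's own example diagram did not survive extraction.

```python
from typing import Dict, Iterable, Set, Tuple

State = str
Symbol = str
# Transition function rho : S x Sigma -> 2^S, stored as a dictionary.
Delta = Dict[Tuple[State, Symbol], Set[State]]


def nfa_accepts(initial: Set[State], delta: Delta,
                accepting: Set[State], word: Iterable[Symbol]) -> bool:
    """Return True if some run of the NFA on `word` ends in an accepting state."""
    # Track the set of states reachable after reading each prefix of the word.
    current = set(initial)
    for symbol in word:
        current = {t for s in current for t in delta.get((s, symbol), set())}
    # Acceptance: at least one run ends in F.
    return bool(current & accepting)


# Hypothetical example automaton: accepts words whose last letter is 1.
INITIAL = {"q0"}
DELTA: Delta = {
    ("q0", "0"): {"q0"},
    ("q0", "1"): {"q0", "q1"},  # nondeterministic guess: this 1 is the last letter
}
ACCEPTING = {"q1"}


def ends_with_one(word: str) -> bool:
    return nfa_accepts(INITIAL, DELTA, ACCEPTING, word)
```

Tracking the set of reachable states avoids enumerating individual runs; it is the same idea as the subset construction, applied on the fly to a single input word.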

Model Checking with Mbeddr

Model Checking for State Machines with mbeddr and NuSMV. Abstract: State machines are a powerful tool for modelling software, particularly in the field of embedded software development, where parts of a system can be abstracted as state machines. Temporal logic languages can be used to formulate the desired behaviour of a state machine. NuSMV makes it possible to prove automatically whether a state machine complies with properties given as temporal logic formulas. mbeddr is an integrated development environment for the C programming language. It enhances C with a special syntax for state machines. Furthermore, it can automatically translate the code into the input language for NuSMV. Thus, it is possible to make use of state-of-the-art mathematical proof technologies without the need for error-prone and time-consuming manual translation. This paper gives an introduction to the subject of model checking state machines and how it can be done with mbeddr. It starts with an explanation of temporal logic languages. Afterwards, some features of mbeddr regarding state machines and their verification are discussed, followed by a short description of how NuSMV works. Author: Christoph Rosenberger. Supervising Tutor: Peter Sommerlad. Lecture: Seminar Program Analysis and Transformation. Term: Spring 2013. School: HSR, Hochschule für Technik Rapperswil. Introduction. Model checking: In the words of Cavada et al.: “The main purpose of a model checker is to verify that a model satisfies a set of desired properties specified by the user.” [1] mbeddr: As Ratiu et al. state in their paper “Language Engineering as an Enabler for Incrementally Defined Formal Analyses” [2], the semantic gap between general purpose programming languages and input languages for formal verification tools is too big.

The Complexity of Generalized Satisfiability for Linear Temporal Logic

Logical Methods in Computer Science, Vol. 5 (1:1) 2009, pp. 1–21. Submitted Mar. 28, 2008; published Jan. 26, 2009. www.lmcs-online.org. THE COMPLEXITY OF GENERALIZED SATISFIABILITY FOR LINEAR TEMPORAL LOGIC. MICHAEL BAULAND (a), THOMAS SCHNEIDER (b), HENNING SCHNOOR (c), ILKA SCHNOOR (d), AND HERIBERT VOLLMER (e). (a) Knipp GmbH, Martin-Schmeißer-Weg 9, 44227 Dortmund, Germany, e-mail address: [email protected]. (b) School of Computer Science, University of Manchester, Oxford Road, Manchester M13 9PL, UK, e-mail address: [email protected]. (c) Inst. für Informatik, Christian-Albrechts-Universität zu Kiel, 24098 Kiel, Germany, e-mail address: [email protected]. (d) Inst. für Theoretische Informatik, Universität zu Lübeck, Ratzeburger Allee 160, 23538 Lübeck, Germany, e-mail address: [email protected]. (e) Inst. für Theoretische Informatik, Universität Hannover, Appelstr. 4, 30167 Hannover, Germany, e-mail address: [email protected]. Abstract. In a seminal paper from 1985, Sistla and Clarke showed that satisfiability for Linear Temporal Logic (LTL) is either NP-complete or PSPACE-complete, depending on the set of temporal operators used. If, in contrast, the set of propositional operators is restricted, the complexity may decrease. This paper undertakes a systematic study of satisfiability for LTL formulae over restricted sets of propositional and temporal operators. Since every propositional operator corresponds to a Boolean function, there exist infinitely many propositional operators. In order to systematically cover all possible sets of them, we use Post’s lattice. With its help, we determine the computational complexity of LTL satisfiability for all combinations of temporal operators and all but two classes of propositional functions.

On Finite Domains in First-Order Linear Temporal Logic Denis Kuperberg, Julien Brunel, David Chemouil

On Finite Domains in First-Order Linear Temporal Logic. Denis Kuperberg, Julien Brunel, David Chemouil. To cite this version: Denis Kuperberg, Julien Brunel, David Chemouil. On Finite Domains in First-Order Linear Temporal Logic. 14th International Symposium on Automated Technology for Verification and Analysis, Oct 2016, Chiba, Japan. 10.1007/978-3-319-46520-3_14. hal-01343197. HAL Id: hal-01343197, https://hal.archives-ouvertes.fr/hal-01343197. Submitted on 7 Jul 2016. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Denis Kuperberg (TU Munich, Germany), Julien Brunel and David Chemouil (DTIM, Université fédérale de Toulouse, ONERA, France). Abstract. We consider First-Order Linear Temporal Logic (FO-LTL) over linear time. Inspired by the success of formal approaches based upon finite-model finders, such as Alloy, we focus on finding models with finite first-order domains for FO-LTL formulas, while retaining an infinite time domain. More precisely, we investigate the complexity of the following problem: given a formula φ and an integer n, is there a model of φ with domain of cardinality at most n? We show that depending on the logic considered (FO or FO-LTL) and on the precise encoding of the problem, the problem is either NP-complete, NEXPTIME-complete, PSPACE-complete or EXPSPACE-complete.

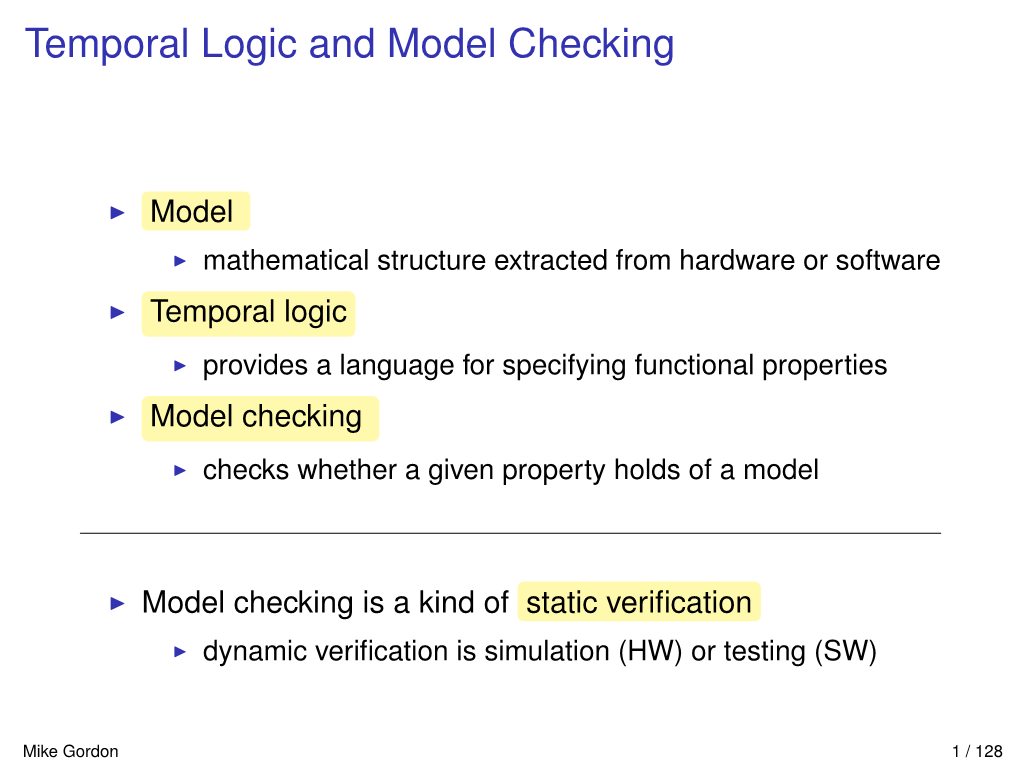

CMPSC 267 Class Notes Introduction to Temporal Logic and Model Checking

CMPSC 267 Class Notes: Introduction to Temporal Logic and Model Checking. Tevfik Bultan, Department of Computer Science, University of California, Santa Barbara. Chapter 1: Introduction. Static analysis techniques uncover properties of computer systems with the goal of improving them. A system can be improved in two ways using static analysis: (1) improving its performance and (2) improving its correctness by eliminating bugs. As an example, consider the static analysis techniques used in compilers. Data flow analysis finds the relationships between the definitions and the uses of variables; this information is then used to generate more efficient executable code to improve the performance. On the other hand, type checking determines the types of the expressions in the source code, which is used to find type errors. Model checking is a static analysis technique used for improving the correctness of computer systems. I refer to model checking as a static analysis technique because it is a technique used to find errors before run-time. For example, run-time checking of assertions is not a static analysis technique; it only reports an assertion failure at run-time, after it occurs. In model checking the goal is more ambitious: we try to verify that the assertions hold on every possible execution of a system (or program or protocol or whatever you call the thing you are verifying). Given its ambitious nature, model checking is computationally expensive. (Actually, it is an uncomputable problem for most types of infinite state systems.) Model checkers have been used in checking correctness of high level specifications of both hardware [CK96] and software [CAB+98] systems.
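The contrast drawn in these notes, between reporting an assertion failure on one execution and verifying an assertion on every possible execution, can be made concrete with a small explicit-state search. The following is only a sketch under stated assumptions and is not taken from the notes: `check_invariant`, `make_successors`, and the two-process toy system are hypothetical names and models, and the approach only terminates for finite state spaces.

```python
from collections import deque
from typing import Callable, Dict, Hashable, Iterable, List, Optional, Tuple


def check_invariant(initial: Iterable[Hashable],
                    successors: Callable[[Hashable], Iterable[Hashable]],
                    invariant: Callable[[Hashable], bool]) -> Optional[List[Hashable]]:
    """Breadth-first search over all reachable states.

    Returns None if the invariant holds in every reachable state,
    otherwise a shortest path from an initial state to a violating state.
    """
    parent: Dict[Hashable, Optional[Hashable]] = {s: None for s in initial}
    queue = deque(parent)
    while queue:
        state = queue.popleft()
        if not invariant(state):
            # Rebuild the counterexample by following parent links backwards.
            path = []
            while state is not None:
                path.append(state)
                state = parent[state]
            return path[::-1]
        for nxt in successors(state):
            if nxt not in parent:
                parent[nxt] = state
                queue.append(nxt)
    return None


def make_successors(guarded: bool):
    """Hypothetical toy system: two processes cycling idle -> trying -> critical."""
    def successors(state: Tuple[str, str]) -> List[Tuple[str, str]]:
        result = []
        for i in (0, 1):
            me, other = state[i], state[1 - i]
            if me == "idle":
                nxt = "trying"
            elif me == "trying":
                if guarded and other == "critical":
                    continue  # blocked until the other process leaves
                nxt = "critical"
            else:
                nxt = "idle"
            new = list(state)
            new[i] = nxt
            result.append(tuple(new))
        return result
    return successors


def mutual_exclusion(state: Tuple[str, str]) -> bool:
    return state != ("critical", "critical")
```

Calling `check_invariant([("idle", "idle")], make_successors(True), mutual_exclusion)` explores every interleaving of the guarded system, while the unguarded variant yields a counterexample path ending in the state where both processes are critical.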

On the Decidability and Complexity of Metric Temporal Logic Over Finite Words

Logical Methods in Computer Science, Vol. 3 (1:8) 2007, pp. 1–27. Submitted May 15, 2006; published Feb. 28, 2007. www.lmcs-online.org. ON THE DECIDABILITY AND COMPLEXITY OF METRIC TEMPORAL LOGIC OVER FINITE WORDS. JOËL OUAKNINE AND JAMES WORRELL. Oxford University Computing Laboratory, Oxford, UK, e-mail address: {joel,jbw}@comlab.ox.ac.uk. Abstract. Metric Temporal Logic (MTL) is a prominent specification formalism for real-time systems. In this paper, we show that the satisfiability problem for MTL over finite timed words is decidable, with non-primitive recursive complexity. We also consider the model-checking problem for MTL: whether all words accepted by a given Alur-Dill timed automaton satisfy a given MTL formula. We show that this problem is decidable over finite words. Over infinite words, we show that model checking the safety fragment of MTL, which includes invariance and time-bounded response properties, is also decidable. These results are quite surprising in that they contradict various claims to the contrary that have appeared in the literature. 1. Introduction. In the linear-temporal-logic approach to verification, an execution of a system is modelled by a sequence of states or events. This representation abstracts away from the precise times of observations, retaining only their relative order. Such an approach is inadequate to express specifications of systems whose correct behaviour depends on quantitative timing requirements. To address this deficiency, much work has gone into adapting linear temporal logic to the real-time setting; see, e.g., [6, 7, 9, 10, 24, 27, 32, 35]. Real-time logics feature explicit time references, typically by recording timestamps throughout computations.

A Probabilistic Temporal Logic with Frequency Operators and Its Model Checking

A Probabilistic Temporal Logic with Frequency Operators and Its Model Checking. Takashi Tomita, Shigeki Hagihara, Naoki Yonezaki. Dept. of Computer Science, Graduate School of Information Science and Engineering, Tokyo Institute of Technology, {tomita, hagihara, yonezaki}@fmx.cs.titech.ac.jp. Probabilistic Computation Tree Logic (PCTL) and Continuous Stochastic Logic (CSL) are often used to describe specifications of probabilistic properties for discrete time and continuous time, respectively. In PCTL and CSL, the possibility of executions satisfying some temporal properties can be quantitatively represented by the probabilistic extension of the path quantifiers in their basic Computation Tree Logic (CTL); however, their path formulae are expressed via the same operators as in CTL. For this reason, neither of them can represent formulae with quantitative temporal properties, such as those of the form “some properties hold at more than 80% of time points (in a certain bounded interval) on the path.” In this paper, we introduce a new temporal operator that expresses the notion of frequency of events, and define probabilistic frequency temporal logic (PFTL) based on CTL∗. As a result, we can easily represent the temporal properties of behavior in probabilistic systems. However, it is difficult to develop a model checker for the full PFTL, due to its rich expressiveness. Accordingly, we develop a model-checking algorithm for the CTL-like fragment of PFTL against finite-state Markov chains, and an approximate model-checking algorithm for the bounded Linear Temporal Logic (LTL)-like fragment of PFTL against countable-state Markov chains. 1 Introduction. To analyze probabilistic systems, probabilistic model checking is often used.
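The kind of frequency property quoted in this abstract ("hold at more than 80% of time points in a certain bounded interval") can be illustrated on a single finite path. This is a rough sketch only: it is not the PFTL semantics or the model-checking algorithm from the paper, it ignores probabilities entirely, and the names `frequency` and `holds_more_often_than` are hypothetical.

```python
from fractions import Fraction
from typing import Callable, Sequence, TypeVar

S = TypeVar("S")


def frequency(path: Sequence[S], prop: Callable[[S], bool],
              start: int, end: int) -> Fraction:
    """Fraction of time points in path[start:end] at which `prop` holds."""
    window = path[start:end]
    if not window:
        raise ValueError("the bounded interval must contain at least one time point")
    return Fraction(sum(1 for state in window if prop(state)), len(window))


def holds_more_often_than(path: Sequence[S], prop: Callable[[S], bool],
                          start: int, end: int, threshold: Fraction) -> bool:
    """True if `prop` holds at strictly more than `threshold` of the interval."""
    return frequency(path, prop, start, end) > threshold
```

Exact rational arithmetic is used so that a path meeting the threshold exactly (for example 8 of 10 time points against 80%) is not misclassified by floating-point rounding.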

Linear Time Logic Control of Discrete-Time Linear Systems

LINEAR TIME LOGIC CONTROL OF DISCRETE-TIME LINEAR SYSTEMS. PAULO TABUADA AND GEORGE J. PAPPAS. Abstract. The control of complex systems poses new challenges that fall beyond the traditional methods of control theory. One of these challenges is given by the need to control, coordinate and synchronize the operation of several interacting submodules within a system. The desired objectives are no longer captured by usual control specifications such as stabilization or output regulation. Instead, we consider specifications given by Linear Temporal Logic (LTL) formulas. We show that existence of controllers for discrete-time controllable linear systems and LTL specifications can be decided and that such controllers can be effectively computed. The closed-loop system is of hybrid nature, combining the original continuous dynamics with the automatically synthesized switching logic required to enforce the specification. 1. Introduction. 1.1. Motivation. In recent years there has been an increasing interest in extending the application domain of systems and control theory from monolithic continuous plants to complex systems consisting of several concurrently interacting submodules. Examples range from multi-modal software control systems in the aerospace [DS97, LL00, TH04] and automotive industry [CJG02, SH05] to advanced robotic systems [KBr04, MSP05, DCG+05]. This change in perspective is accompanied by a shift in control objectives. One is no longer interested in the stabilization or output regulation of individual continuous plants, but rather wishes to regulate the global system behavior through the local control of each individual submodule or component. Typical specifications for this class of control problems include coordination and synchronization of individual modules, sequencing of tasks, reconfigurability and adaptability of components, etc.

Temporal Representation and Reasoning

Temporal Representation and Reasoning. Michael Fisher, Department of Computer Science, University of Liverpool, UK. In: F. van Harmelen, B. Porter and V. Lifschitz (eds), Handbook of Knowledge Representation, © Elsevier 2007. This book is about representing knowledge in all its various forms. Yet, whatever phenomenon we aim to represent, be it natural, computational, or abstract, it is unlikely to be static. The natural world is always decaying or evolving. Thus, computational processes, by their nature, are dynamic, and most abstract notions, if they are to be useful, are likely to incorporate change. Consequently, the notion of representations changing through time is vital. And so, we need a clear way of representing both our temporal basis, and the way in which entities change over time. This is exactly what this chapter is about. We aim to provide the reader with an overview of many of the ways temporal phenomena can be modelled, described, reasoned about, and applied. In this, we will often overlap with other chapters in this collection. Some of these topics we will refer to very little, as they will be covered directly by other chapters, for example temporal action logic [84], situation calculus [185], event calculus [209], spatio-temporal reasoning [74], temporal constraint satisfaction [291], temporal planning [84, 271], and qualitative temporal reasoning [102]. Other topics will be described in this chapter, but overlap with descriptions in other chapters, in particular: • automated reasoning, in Section 3.2 and in [290]; • description logics, in Section 4.6 and in [154]; and • natural language, in Section 4.1 and in [250].

On the Satisfiability of Indexed Linear Temporal Logics∗

On the Satisfiability of Indexed Linear Temporal Logics. Taolue Chen (Department of Computer Science, Middlesex University London, UK), Fu Song (Shanghai Key Laboratory of Trustworthy Computing, East China Normal University, China), and Zhilin Wu (State Key Laboratory of Computer Science, Institute of Software, Chinese Academy of Sciences, China; LIAFA, Université Paris Diderot, France). Abstract. Indexed Linear Temporal Logics (ILTL) are an extension of standard Linear Temporal Logics (LTL) with quantifications over index variables which range over a set of process identifiers. ILTL has been widely used in specifying and verifying properties of parameterised systems, e.g., in parameterised model checking of concurrent processes. However, there is still a lack of theoretical investigations on properties of ILTL, compared to the well-studied LTL. In this paper, we start to narrow this gap, focusing on the satisfiability problem, i.e., to decide whether a model exists for a given formula. This problem is in general undecidable. Various fragments of ILTL have been considered in the literature, typically in parameterised model checking, e.g., ILTL formulae in prenex normal form, or containing only non-nested quantifiers, or admitting limited temporal operators. We carry out a thorough study on the decidability and complexity of the satisfiability problem for these fragments. Namely, for each fragment, we either show that it is undecidable, or otherwise provide tight complexity bounds. 1998 ACM Subject Classification: F.4.1 Mathematical Logic. Keywords and phrases: Satisfiability, Indexed linear temporal logic, Parameterised systems. Digital Object Identifier: 10.4230/LIPIcs.CONCUR.2015.254. 1 Introduction. Many concurrent systems are comprised of a finite, but arbitrarily large, number of processes running in parallel.

Introduction to Temporal Logic

EECS 294-98: Introduction to Temporal Logic. Sanjit A. Seshia, EECS, UC Berkeley.

Plan for Today’s Lecture • Linear Temporal Logic • Signal Temporal Logic (by Alex Donze).

Behavior, Run, Computation Path • Define in terms of states and transitions • A sequence of states, starting with an initial state: s_0 s_1 s_2 … such that R(s_i, s_{i+1}) is true • Also called “run”, or “(computation) path” • Trace: sequence of observable parts of states, i.e. a sequence of state labels.

Safety vs. Liveness • Safety property: “something bad must not happen”. E.g.: system should not crash. Finite-length error trace. • Liveness property: “something good must happen”. E.g.: every packet sent must be received at its destination. Infinite-length error trace.

Examples: Safety or Liveness? 1. “No more than one processor (in a multi-processor system) should have a cache line in write mode” 2. “The grant signal must be asserted at some time after the request signal is asserted” 3. “Every request signal must receive an acknowledge and the request should stay asserted until the acknowledge signal is received”

Temporal Logic • A logic for specifying properties over time. E.g., behavior of a finite-state system • Basic: propositional temporal logic. Other temporal logics are also useful, e.g., real-time temporal logic, metric temporal logic, signal temporal logic, …

Atomic State Property (Label): a Boolean formula over state variables. We will denote each unique Boolean formula by • a distinct color • a name such as p, q, … (e.g. req, req & !ack).
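The slides' remark that a safety violation has a finite-length error trace can be sketched as a simple scan over a trace of state labels. This is an illustrative sketch, not material from the lecture: `shortest_error_prefix`, `two_writers`, and the label naming scheme (`write_p0`, `write_p1`, ...) are hypothetical, loosely modelled on example 1 (at most one processor holding a cache line in write mode).

```python
from typing import Callable, List, Optional, Sequence, Set

# A state is represented only by its observable labels, as in the slides' notion of a trace.
State = Set[str]


def shortest_error_prefix(trace: Sequence[State],
                          is_bad: Callable[[State], bool]) -> Optional[List[State]]:
    """Return the shortest prefix of `trace` ending in a bad state, or None.

    For a safety property ("something bad must not happen") such a finite
    prefix is already a complete counterexample.
    """
    for index, state in enumerate(trace):
        if is_bad(state):
            return list(trace[: index + 1])
    return None


def two_writers(state: State) -> bool:
    """Bad state for example 1: more than one processor in write mode."""
    return sum(1 for label in state if label.startswith("write_")) > 1
```

A liveness property such as example 2 cannot be refuted this way: any finite prefix in which the grant has not yet arrived can still be extended by a state where it does, which is why its error traces are infinite.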