Extreme Classification with Label Graph Correlations

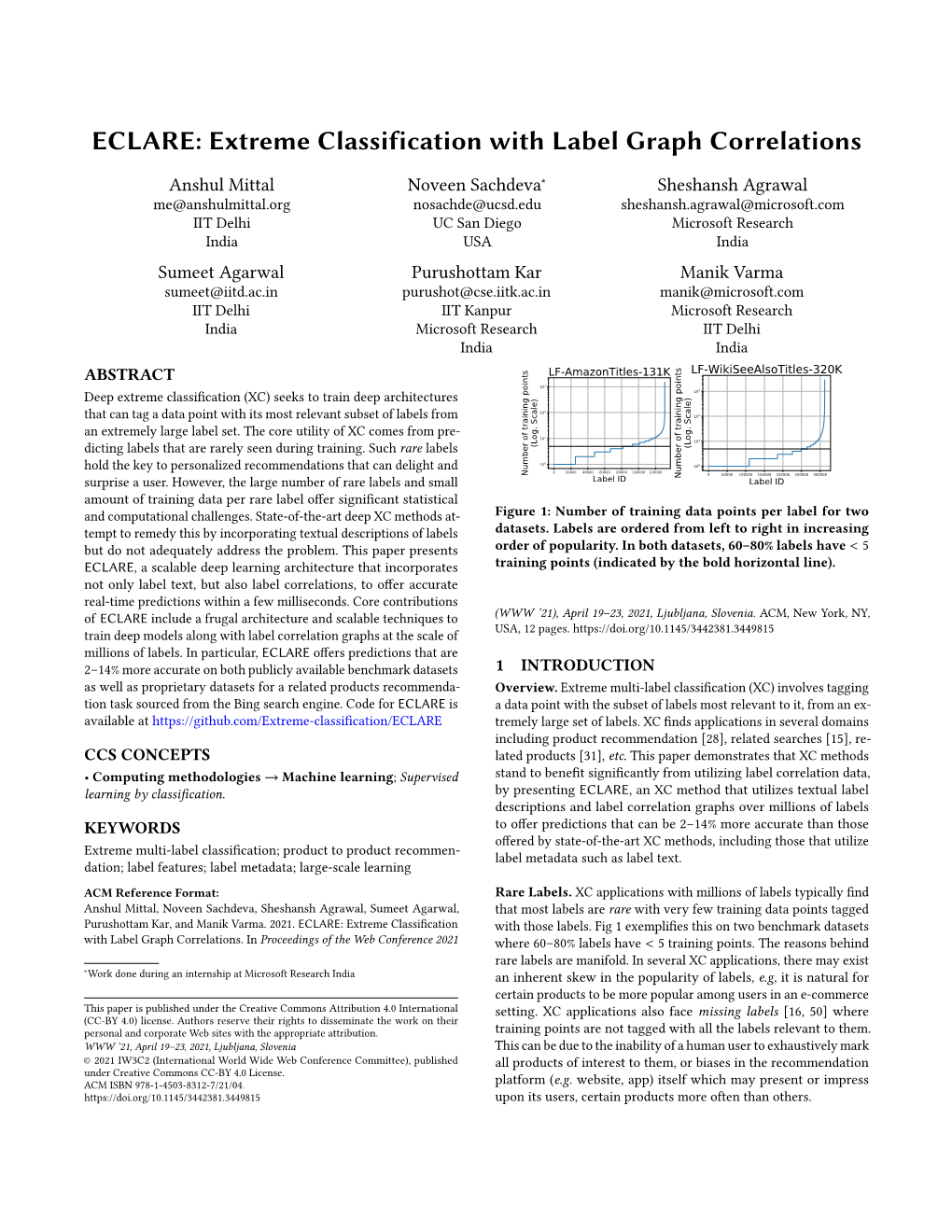

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications

-

Spencer Liff Choreographer Selected Credits Contact: 818 509-0121

TELEVISION: So You Think You Can Dance (Season 6-10) *Emmy Nominated, FOX; How I Met Your Mother (Season 7-9), CBS / Dir. Pam Fryman; 2 Broke Girls, CBS / Dir. Phill Lewis; Parks and Recreation, NBC / Dir. Dean Holland; Mike and Molly, CBS / Dir. Phill Lewis; Happy Land (Pilot), MTV / Dir. Lee Toland Krieger; Dancing With the Stars, ABC; Keeping Up With the Kardashians, E!; The 65th Primetime Emmy Awards, CBS / Dir. Louis Horvitz; 67th Tony Awards Opening Number (Assistant Chor), CBS / Neil Patrick Harris; 81st Academy Awards w/Hugh Jackman, Beyonce (Asst. Choreographer) *Emmy Award Best Choreography, ABC / Dir. Baz Luhrmann; 85th Academy Awards (Asst. Choreographer), ABC / Dir. Rob Ashford. THEATRE: Hedwig and the Angry Inch, Broadway / Dir. Michael Mayer; Sleepless in Seattle (World Premiere), Pasadena Playhouse / Dir. Sheldon Epps; Spring Awakening, Deaf West / Dir. Michael Arden; Oliver!, The Human Race Theatre / Dir. Alan Souza; Two Gentlemen of Verona: A Rock Opera, Shakespeare Theatre Co / Dir. Amanda Dehnert; The Wedding Singer, Musical Theatre West / Dir. Larry Raben; Saturday Night Fever, Royal Caribbean Cruise Lines; Aladdin, Pasadena Playhouse / Lythgoe Productions; A Snow White Christmas, Magical Pictures Ent / Lythgoe Productions; An Unforgettable Journey, Disney Cruise Lines; A Fantasy Come True, Disney Cruise Lines; Trevor Live (w/Neil Patrick Harris), Trevor Project Benefit / Dir. Adam Shankman; Broadway by the Year 2010, Town Hall Theatre, NYC; Gypsy of the Year (w/Daniel Radcliffe), BCEFA Benefit, NYC; Cry-Baby (Assistant Choreo/Dance Captain), Broadway / Dir. Mark Brokaw **2008 Astaire Award Winner: Best Male Dancer, Best Choreography; Equus (Assistant Movement Director/Dance Captain), Broadway / Dir. -

Creative Arts Emmy® Awards for Programs and Individual Achievements at the Nokia Theatre L.A

FOR IMMEDIATE RELEASE August 16, 2014 7:00 PM PT The Television Academy tonight (Saturday, August 16, 2014) presented the 2014 Creative Arts Emmy® Awards for programs and individual achievements at the Nokia Theatre L.A. LIVE in Los Angeles. This first ceremony of the 66th Emmy Awards honored guest performers on television dramas and comedy series, as well as the many talented artists and craftspeople behind the scenes to create television excellence. Produced for the 20th year by Spike Jones, Jr., this year’s Creative Arts Awards featured an array of notable presenters, among them Jane Lynch, Tony Hale, Amy Schumer, Allison Janney, Tim Gunn and Heidi Klum, Comedy Central’s Key & Peele, Fred Armisen and Carrie Brownstein, Morgan Freeman, Tony Goldwyn, Aisha Tyler, Joe Manganiello and Carrie Preston. Highlights included Jon Voight’s moving posthumous presentation of the Academy’s prestigious Governors Award to casting icon, Marion Dougherty. Voight was one of Dougherty’s discoveries. The awards, as tabulated by the independent accounting firm of Ernst & Young LLP, were distributed as follows:

| | Program | Individual | Total |
|---|---|---|---|
| HBO | 4 | 11 | 15 |
| NBC | 1 | 9 | 10 |
| PBS | 2 | 6 | 8 |
| Fox | 1 | 6 | 7 |
| Netflix | - | 7 | 7 |
| CBS | 1 | 5 | 6 |
| ABC | 1 | 4 | 5 |
| Discovery Channel | 2 | 2 | 4 |
| Disney Channel | 1 | 3 | 4 |
| FOX/NatGeo | - | 4 | 4 |
| Showtime | 1 | 3 | 4 |
| Cartoon Network | - | 3 | 3 |
| FX Networks | - | 3 | 3 |
| Comedy Central | - | 2 | 2 |
| Starz | - | 2 | 2 |
| Adult Swim | - | 1 | 1 |
| AMC | - | 1 | 1 |
| CartoonNetwork.com | - | 1 | 1 |
| CNN | 1 | - | 1 |
| comcast.com | 1 | - | 1 |
| ESPN | 1 | - | 1 |
| FunnyOrDie.com | 1 | - | 1 |
| justareflektor.com | 1 | - | 1 |
| Nat Geo WILD | - | 1 | 1 |
| National Geographic Channel | 1 | - | 1 |
| pivot.tv | 1 | - | 1 |
| TNT | 1 | - | 1 |

TELEVISION ACADEMY 2014 CREATIVE ARTS EMMY AWARDS

This year’s Creative Arts telecast partner is FXM; a two-hour edited version of the ceremony will air Sunday, August 24 at 8:00 PM ET/PT with an encore at 10:00 PM ET/PT on FXM. -

67th Annual Gala

The Salvation Army Greater New York Division’s 67th Annual Gala, honoring The Actors Fund (The Pinnacle of Achievement Award) and Jameel McClain (The Community Service Award). Special performances by Tony Yazbeck, Marin Mazzie, and Jason Danieley. Co-hosts: Kathie Lee Gifford and Hoda Kotb. DECEMBER 1, 2014, The New York Marriott Marquis. BCRE Brack Capital Real Estate USA congratulates The Salvation Army for all of their continuing good works. MULTIMEDIA SPONSOR. DECEMBER 5, 2012, THE SALVATION ARMY GREATER NEW YORK DIVISION 65TH ANNUAL GALA. LETTER FROM THE DIVISIONAL LEADERS, LT. COLONELS GUY D. & HENRIETTA KLEMANSKI: Welcome to The Salvation Army’s 67th Annual Christmas Gala! The Salvation Army in Greater New York has a rich history of helping and bringing hope to those who are in need. Thousands of people across the Greater New York area stand united in support of The Salvation Army’s mission as we go about “Doing the Most Good.” We are privileged to serve God by serving others and we fight with conviction to battle against the elements that keep people at the margins. We serve our neighbors, friends, and family in an effort to help rebuild lives with compassionate [...] all have used their God-given talents to touch the hearts of audiences everywhere. Meanwhile, The Actors Fund, this year’s Pinnacle of Achievement honoree, has been serving entertainers and performers in need for over 130 years. In 2015, The Salvation Army will commemorate its worldwide Sesquicentennial anniversary. We will celebrate those who have given to The Salvation Army, as well as the success and triumphs of those who have received. -

Lettre Concierge

SOFITEL NEW YORK, 45 WEST 44TH STREET, NEW YORK, NY 10036. Telephone: 212-354-8844 Concierge: 212-782-4051 HOTEL: WWW.SOFITEL-NEW-YORK.COM RESTAURANT: WWW.GABYNYRESTAURANT.COM FACEBOOK: FACEBOOK.COM/SOFITELNEWYORKCITY INSTAGRAM: SOFITELNYC SOFITELNORTHAMERICA SOFITEL NEW YORK TWITTER: @SOFITELNYC. MONTHLY NEWSLETTER, JANUARY 2015. GABY EVENT: NATIONAL CHEESE LOVER’S DAY, Tuesday January 20th 5PM – 9PM. We invite you to join us for a cheese and wine tasting in celebration of national cheese lover’s day. Please ask our restaurant for more details. HOTEL EVENT: STAY LONGER AND SAVE. This January, take a break and indulge in one of our unique Sofitel hotels in North America and Save! Enjoy the magnificent views from the Sofitel Los Angeles at Beverly Hills, experience the energizing atmosphere of Manhattan while staying at the Sofitel New York, soak in the warm sun by the pool of the Sofitel Miami and much more… Chicago, Philadelphia, Washington DC, San Francisco, Montreal, New York, Los Angeles and Miami. Discover all our Magnifique addresses. Book your reservation by Saturday, February 28th, 2015 and save up to 20% on your stay in all the Sofitel hotels in the US and Canada. With Sofitel Luxury Hotels, the longer you stay, the more you save. CITY EVENT: A SLICE OF BROOKLYN BUS TOURS. BROADWAY MUSICAL THEATER SHOWS: “Hamilton,” opens January 20th, 2015. The brilliant Lin-Manuel Miranda opens his latest show, “Hamilton,” a rap musical about the life of American Founding Father Alexander Hamilton. “Honeymoon in Vegas,” opens January 15th. In previews now, a new musical comedy starring Tony Danza, famous for the TV show “Who’s the Boss,” based on the 1992 -

A Self-Reflection on the Creation and Practice of Audition Technique in the Business of Live Theatre

East Tennessee State University Digital Commons @ East Tennessee State University Undergraduate Honors Theses Student Works 5-2021 Who, What, Why: A Self-Reflection on the Creation and Practice of Audition Technique in the Business of Live Theatre Kenneth Thomas Follow this and additional works at: https://dc.etsu.edu/honors Part of the Acting Commons, Fine Arts Commons, Other Theatre and Performance Studies Commons, and the Performance Studies Commons Recommended Citation Thomas, Kenneth, "Who, What, Why: A Self-Reflection on the Creation and Practice of Audition Technique in the Business of Live Theatre" (2021). Undergraduate Honors Theses. Paper 633. https://dc.etsu.edu/ honors/633 This Honors Thesis - Open Access is brought to you for free and open access by the Student Works at Digital Commons @ East Tennessee State University. It has been accepted for inclusion in Undergraduate Honors Theses by an authorized administrator of Digital Commons @ East Tennessee State University. For more information, please contact [email protected]. Who, What, Why: A Self-Reflection on the Creation and Practice of Audition Technique in the Business of Live Theatre By Kenneth Hunter Thomas An Undergraduate Thesis Submitted in Partial Fulfillment of the Requirements for the University Honors Scholars Program The Honors College University Honors Program East Tennessee State University ___________________________________________ Hunter Thomas. Date ___________________________________________ Cara Harker, Thesis Mentor Date ___________________________________________ -

Concert Masters Oktoberfest Broadway

NYC Monthly, OCTOBER 2015, NYCMONTHLY.COM, VOL. 5 NO. 10. OKTOBERFEST: CELEBRATE AT THESE BEER HALLS. BROADWAY SHOWS: CLASSICS, REVIVALS, NEW HITS, & HOLIDAY. CONCERT MASTERS: WHO'S PLAYING AT THE CITY'S TOP MUSIC VENUES. OYSTER PERPETUAL SKY-DWELLER rolex oyster perpetual and sky-dweller are ® trademarks. Contents. COVER IMAGE: New Amsterdam Theater © Gary Burke. The magic of live theater on Broadway is something only New York can offer. Productions on a scale unlike anywhere else in the world with critically acclaimed talent, elaborate sets, and stunning costumes fill the historic playhouses of the Great White Way night after night. The New Amsterdam is the oldest working Broadway theatre, first raising its curtain in 1903. In 1995 it underwent extensive renovations and is now the flagship theatre for Disney Theatrical Productions. BURBERRY / COACH / CHLOÉ / PRADA / SALVATORE FERRAGAMO / TORY BURCH / SANDRO / DIANE VON FURSTENBERG / GUCCI / MICHAEL KORS / CANALI / VINCE / LA MER / ESTÉE LAUDER / HELMUT LANG: The world’s most coveted names, all in one place. FEATURES: Top 10 Things to Do in October; Fall Revue: Your comprehensive guide to Broadway's most exciting productions; Theater Spotlight Interview: Duncan Sheik; Theater Spotlight Interview; Fitted on 57th Street: For luxury wares, there's one street in midtown that tops the rest; Concert Masters: An October to remember at the city's top music venues; Concert Spotlight Interview: Zedd -

Academy of Television Arts & Sciences

67 YEARS OF EMMY® 1948 The Emmy Awards are conceived. The Television Academy’s founding fathers struggle to name the award: Television Academy founder Syd Cassyd suggests “Ike,” the nickname for the television iconoscope tube. Pioneer television engineer and future (1949) Academy president Harry Lubcke suggests “Immy,” a nickname for the image-orthicon camera tube instrumental in the technical development of television. “Immy” is feminized as “Emmy” because the statuette, designed by engineer Louis McManus (who enlisted his wife Dorothy to model for it) depicts the winged “muse of art uplifting the electron of science.” 1949 First Emmy Awards – given to Los Angeles area programming – take place at the Hollywood Athletic Club on January 25. Tickets are $5.00. It is broadcast on local station KTSL. There are less than a million television sets in the U.S. The master of ceremonies was popular TV host Walter O’Keefe. Six awards are given: Most Outstanding Television Personality: Twenty-year-old Shirley Dinsdale and her puppet sidekick Judy Splinters for “The Judy Splinters Show.” The Station Award for Outstanding Overall Achievement: KTLA (the first commercial television station west of the Mississippi River). Technical Award: Engineer Charles Mesak of Don Lee Television for the introduction of TV camera technology. The Best Film Made for Television: “The Necklace” (a half hour adaptation of Guy de Maupassant's classic short story). Most Popular Television Program: “Pantomime Quiz.” A special Emmy is presented to Louis McManus for designing the statuette. 1950 Second Emmy Awards (January 27, Ambassador Hotel) KFI-TV broadcasts, the six other Los Angeles area stations share expense of the telecast. -

Fall 2017 (PDF)

Giving @ 2017. Richard Gilder and Peggy Tirschwell. A BROTHER’S GIFT: Hunter’s Newest Dance Studio Honors Our Dear Peggy. In the halls of Hunter College, there is no more beloved presence than Peggy Tirschwell. She started here in 2002 after a distinguished CUNY career, and retired in 2013 as assistant provost (although we are fortunate that she still comes in on a part-time basis). To honor her stellar service to Hunter and celebrate her love of dance, Tirschwell’s brother, Richard Gilder, donated $500,000 to renovate The Peggy, the newly dedicated state-of-the-art dance studio on the sixth floor of Thomas Hunter Hall. Gilder is one of Hunter’s most generous supporters, most recently donating $400,000 to support the public policy programs at Roosevelt House. The Peggy is just the latest jewel in Hunter’s world-class Dance Department, CUNY’s only free-standing dance department. Under the leadership of acting chair Carol Walker, former dean of dance at SUNY Purchase, the department offers a BA in dance and, starting this fall, an MFA. The department also houses the Arnhold Graduate Dance Education Program, which offers a BA/MA in dance education, funded by Hunter Foundation Trustee Jody Arnhold, chair of the Dance Advisory Board. The Peggy sports a professional sprung floor, a cyclorama curtain, performance (continued on page 2). FROM THE 17TH FLOOR: A Message From President Jennifer J. Raab. We ended an already stellar year at Hunter with our first Rhodes Scholar, Thamara Jean ’18, who [...] HUNTER’S GOT TALENT: A $1 Million Gift Helps Find the Best and Brightest. Determined to give Hunter students the access to top-flight scholarships enjoyed by Ivy League students, Harold (Hal) and [...] stars are spotted by faculty, then nurtured, mentored, and given help writing application essays and preparing for interviews. -

Park Avenue Armory Announces Return of Recital Series and Artists Studio for In-Person Performances in Fall 2021

Park Avenue Armory Announces Return of Recital Series and Artists Studio for In-Person Performances in Fall 2021 Recitals Spotlight Tenor Paul Appleby, Baritone Will Liverman, and Mezzo-Soprano Jamie Barton in Intimate Salon Setting Artists Studio Debuts Dynamic Collaboration Between Trumpeter jaimie branch and Sculptor Carol Szymanski New York, NY – August 11, 2021 – Park Avenue Armory announced today that its celebrated Recital Series and Artists Studio programs will return this fall with a slate of virtuosic performances by world- class artists and musicians. Presented within two of the Armory’s historic period rooms, these performances offer audiences the opportunity to experience the work of exceptional artists at a level of intimacy that is unprecedented in conventional concert halls and performance spaces today. The Fall 2021 Recital Series and Artists Studio season launches on September 20 with the Armory debuts of tenor Paul Appleby and pianist Conor Hanick, and will also feature programs by mezzo-soprano Jamie Barton with pianist Warren Jones and baritone Will Liverman with pianist Myra Huang, as well as a cross-disciplinary collaboration between trumpeter jaimie branch and sculptor Carol Szymanski. “We are thrilled to welcome artists and audiences back to our Recital Series and Artists Studio performances this fall. These two longstanding, vital dimensions of the Armory’s program offer a more intimate complement to the large-scale productions presented in the Drill Hall, allowing artists and audiences to commune through performance in a salon-like setting.” said Rebecca Robertson, Founding President and Executive Producer at Park Avenue Armory. 
“With a sweeping range of programs that delve into German Lieder, spotlight on Black and female composers, and meditate on the shape of sound, this season’s Recital Series and Artists Studio performances showcases a depth of talent across artistic genres,” added Pierre Audi, the Armory’s Marina Kellen French Artistic Director. -

MAY 7, 1992 35¢ PER COPY Schechter Selects Director the Ruth and Max Alperin Ms

Mother's Day pp.12 & 13. VOLUME LXXVIII, NUMBER 24, IYAR 3, 5752 / THURSDAY, MAY 7, 1992, 35¢ PER COPY. Schechter Selects Director. The Ruth and Max Alperin Schechter Day School has taken great pleasure in announcing the appointment of its new Director, Myrna Rubel. According to Sam Shamoon, chair of the Search Committee, the appointment of Ms. Rubel was unanimously endorsed by the Director Search Committee, which initiated its search last August, soon after the School's first Director, Rabbi Alvan H. Kaunfer, resigned to accept the position of Rabbi at Temple Emanu-El in Providence. After months of reviewing twenty three candidate applications [...] Ms. Rubel has had ten years of teaching and administrative experience at the Charles E. Smith School in Rockville, Md., one of the largest day schools in the country. There her special expertise was to coordinate the International Student Program which provided both Judaic and secular educational opportunities to new Americans and visiting Israelis. She earned a reputation as an indefatigable problem solver and able administrator. She is credited by senior level administrators, teachers and parents with fostering a [...] Chestnut Hill, Mass. She holds degrees in Elementary Education and an advanced degree in School Administration. Ms. Rubel and her husband, Gene Rubel, have four children and currently reside in Wellesley, Mass. The Search Committee found in Myrna Rubel the rare combination of professional and personal traits that include excellent teaching and administrative skills, a warm and caring personality, a deep and lifelong commitment to Judaism, and the concept of K'lal Yisrael. Photo caption: (L. to R.) Sidney Goldstein, Ph.D, Ritual Committee co-chair, Warwick Mayor Charles Donovan, President Stephen Sholes -

The Top 20 Arts-Vibrant Large Communities

Arts Vibrancy 2020: The Top 20 Arts-Vibrant Large Communities. Here you will find details and profiles on the top 20 arts-vibrant communities with population of 1,000,000 or more. The rankings on the metrics and measures range from a high of 1 to a low of 947 since there are 947 unique MSAs and Metro Divisions. We offer insights into each community’s arts and cultural scene and report rankings for Arts Providers, Arts Dollars, and Government Support, as well as the rankings of the underlying measures. Subtle distinctions often emerge that illuminate particular strengths. Again, in determining the ranking, we weight Arts Providers and Arts Dollars at 45% each and Government Support at 10%. The two Metro Divisions that make up the larger Washington-Arlington-Alexandria, DC-VA-MD-WV, MSA (Washington-Arlington-Alexandria, DC-VA-MD-WV and Frederick-Gaithersburg-Rockville, MD) made the list for the sixth year in a row. By contrast, Chicago-Naperville-Arlington Heights, IL, was the only one of four Metro Divisions of the Chicago-Naperville-Elgin, IL-IN-WI, MSA, to make the list each of the past six years. Chicago appears to have high arts vibrancy in the urban core that is less prevalent in the surrounding areas. The dispersion of arts vibrancy has increased over the years for the larger MSAs of Philadelphia-Camden-Wilmington, PA-NJ-DE-MD, New York-Newark-Jersey City, NY-NJ-PA, and San Francisco-Oakland-Berkeley, CA. More of the Metropolitan Divisions that constitute these three large MSAs have made the list over time. -

What Makes Or Breaks a Broadway Run Jack Stucky

Show-Stopping Numbers: What Makes or Breaks a Broadway Run Jack Stucky Advisor: Scott Ogawa Northwestern University MMSS Senior Thesis June 15, 2018 Acknowledgements I would like to thank my advisor, Professor Scott Ogawa, for his advice and support throughout this process. Next, I would like to thank the MMSS program for giving me the tools to complete this thesis. In particular, thank you to Professor Joseph Ferrie for coordinating the thesis seminars and to Nicole Schneider and Professor Jeff Ely for coordinating the program. Finally, thank you to my sister, Ellen, for leading me to this topic. Abstract This paper seeks to determine the effect that Tony Awards have on the longevity and gross of a Broadway show. To do so, I examine week-by-week data collected by Broadway League on shows that have premiered on Broadway from 2000 to 2017. Much literature already exists on finding the effect of a Tony Award on a show’s success; however, this paper seeks to take this a step further. In particular, I look specifically at the differing effects of nominations versus wins for Tony Awards and the effect of single versus multiple nominations and wins. Additionally, I closely examine the differing effects that Tony Awards have on musicals versus plays. I find that while all nominated musicals see a boost in weekly gross as a result of being nominated, only plays that are able to go on to win multiple awards experience a boost because of their nomination. The most significant improvement in expected longevity that can be caused by the Tony Awards occurs for a musical that wins at least three awards, while plays need additional wins in order to see a significant increase in their longevity.