


Recommended publications


APPENDIX Lesson 1.: Introduction

The Academy Awards, informally known as The Oscars, are a set of awards given annually for excellence of cinematic achievements. The Oscar statuette is officially named the Academy Award of Merit and is one of nine types of Academy Awards. Organized and overseen by the Academy of Motion Picture Arts and Sciences (AMPAS), the awards are given each year at a formal ceremony. The AMPAS was originally conceived by Metro-Goldwyn-Mayer studio executive Louis B. Mayer as a professional honorary organization to help improve the film industry’s image and help mediate labor disputes. The awards themselves were later initiated by the Academy as awards "of merit for distinctive achievement" in the industry. The awards were first given in 1929 at a ceremony created for the awards, at the Hotel Roosevelt in Hollywood. Over the years that the award has been given, the categories presented have changed; currently Oscars are given in more than a dozen categories, and include films of various types. As one of the most prominent award ceremonies in the world, the Academy Awards ceremony is televised live in more than 100 countries annually. It is also the oldest award ceremony in the media; its equivalents, the Grammy Awards (for music), the Emmy Awards (for television), and the Tony Awards (for theater), are modeled after the Academy Awards. The 85th Academy Awards were held on February 24, 2013 at the Dolby Theatre in Los Angeles, California. Source: http://en.wikipedia.org/wiki/Academy_Award (time of downloading: 10th January, 2013).

Spencer Liff Choreographer Selected Credits Contact: 818 509-0121

Spencer Liff, Choreographer. Selected Credits. Contact: 818 509-0121

TELEVISION: So You Think You Can Dance (Season 6-10) *Emmy Nominated, FOX; How I Met Your Mother (Season 7-9), CBS / Dir. Pam Fryman; 2 Broke Girls, CBS / Dir. Phill Lewis; Parks and Recreation, NBC / Dir. Dean Holland; Mike and Molly, CBS / Dir. Phill Lewis; Happy Land (Pilot), MTV / Dir. Lee Toland Krieger; Dancing With the Stars, ABC; Keeping Up With the Kardashians, E!; The 65th Primetime Emmy Awards, CBS / Dir. Louis Horvitz; 67th Tony Awards Opening Number (Assistant Chor), CBS / Neil Patrick Harris; 81st Academy Awards w/Hugh Jackman, Beyonce (Asst. Choreographer) *Emmy Award Best Choreography, ABC / Dir. Baz Luhrmann; 85th Academy Awards (Asst. Choreographer), ABC / Dir. Rob Ashford.

THEATRE: Hedwig and the Angry Inch, Broadway / Dir. Michael Mayer; Sleepless in Seattle (World Premiere), Pasadena Playhouse / Dir. Sheldon Epps; Spring Awakening, Deaf West / Dir. Michael Arden; Oliver!, The Human Race Theatre / Dir. Alan Souza; Two Gentlemen of Verona: A Rock Opera, Shakespeare Theatre Co / Dir. Amanda Dehnert; The Wedding Singer, Musical Theatre West / Dir. Larry Raben; Saturday Night Fever, Royal Caribbean Cruise Lines; Aladdin, Pasadena Playhouse / Lythgoe Productions; A Snow White Christmas, Magical Pictures Ent / Lythgoe Productions; An Unforgettable Journey, Disney Cruise Lines; A Fantasy Come True, Disney Cruise Lines; Trevor Live (w/Neil Patrick Harris), Trevor Project Benefit / Dir. Adam Shankman; Broadway by the Year 2010, Town Hall Theatre, NYC; Gypsy of the Year (w/Daniel Radcliffe), BCEFA Benefit, NYC; Cry-Baby (Assistant Choreo/Dance Captain), Broadway / Dir. Mark Brokaw **2008 Astaire Award Winner: Best Male Dancer, Best Choreography; Equus (Assistant Movement Director/Dance Captain), Broadway / Dir.

Creative Arts Emmy® Awards for Programs and Individual Achievements at the Nokia Theatre L.A

FOR IMMEDIATE RELEASE August 16, 2014 7:00 PM PT

The Television Academy tonight (Saturday, August 16, 2014) presented the 2014 Creative Arts Emmy® Awards for programs and individual achievements at the Nokia Theatre L.A. LIVE in Los Angeles. This first ceremony of the 66th Emmy Awards honored guest performers on television dramas and comedy series, as well as the many talented artists and craftspeople behind the scenes to create television excellence. Produced for the 20th year by Spike Jones, Jr., this year’s Creative Arts Awards featured an array of notable presenters, among them Jane Lynch, Tony Hale, Amy Schumer, Allison Janney, Tim Gunn and Heidi Klum, Comedy Central’s Key & Peele, Fred Armisen and Carrie Brownstein, Morgan Freeman, Tony Goldwyn, Aisha Tyler, Joe Manganiello and Carrie Preston. Highlights included Jon Voight’s moving posthumous presentation of the Academy’s prestigious Governors Award to casting icon, Marion Dougherty. Voight was one of Dougherty’s discoveries. The awards, as tabulated by the independent accounting firm of Ernst & Young LLP, were distributed as follows:

| Network | Program | Individual | Total |
|---|---|---|---|
| HBO | 4 | 11 | 15 |
| NBC | 1 | 9 | 10 |
| PBS | 2 | 6 | 8 |
| Fox | 1 | 6 | 7 |
| Netflix | - | 7 | 7 |
| CBS | 1 | 5 | 6 |
| ABC | 1 | 4 | 5 |
| Discovery Channel | 2 | 2 | 4 |
| Disney Channel | 1 | 3 | 4 |
| FOX/NatGeo | - | 4 | 4 |
| Showtime | 1 | 3 | 4 |
| Cartoon Network | - | 3 | 3 |
| FX Networks | - | 3 | 3 |
| Comedy Central | - | 2 | 2 |
| Starz | - | 2 | 2 |
| Adult Swim | - | 1 | 1 |
| AMC | - | 1 | 1 |
| CartoonNetwork.com | - | 1 | 1 |
| CNN | 1 | - | 1 |
| comcast.com | 1 | - | 1 |
| ESPN | 1 | - | 1 |
| FunnyOrDie.com | 1 | - | 1 |
| justareflektor.com | 1 | - | 1 |
| Nat Geo WILD | - | 1 | 1 |
| National Geographic Channel | 1 | - | 1 |
| pivot.tv | 1 | - | 1 |
| TNT | 1 | - | 1 |

This year’s Creative Arts telecast partner is FXM; a two-hour edited version of the ceremony will air Sunday, August 24 at 8:00 PM ET/PT with an encore at 10:00 PM ET/PT on FXM.

Improvisation and Birdman; Or, the Unexpected Virtue of Irony

Mark Kaethler. Leading up to the 87th Academy Awards, reporters launched a critique on the Oscars for only nominating white actors. Headlines included The Huffington Post’s “Why It Should Bother Everyone That The Oscars Are So White,” The Guardian’s “Oscars whitewash: why have 2015’s red carpets been so overwhelmingly white?”, TIME magazine’s “Almost All The Oscar Nominees Are White,” and US Magazine’s “Oscars 2015 Nominations: Only White Actors Received Academy Award Nods and the Internet Is Outraged,” to name a few. The International Business Times went so far as to provide an infographic in their piece, “Oscars 2015 Infographic: How White Are the 87th Academy Awards?” Even Oscar host of that year, Neil Patrick Harris, remarked upon this racism early on in the show by making the following joke: “Today we honor Hollywood’s best and whitest. Sorry, brightest” (qtd. in THR staff). It is ironic in itself that Alejandro González Iñárritu’s satire of Hollywood, superhero culture, and the unbearable whiteness that proliferates them went on to win best director, best cinematography, best screenplay, and best picture, but there is a further irony embedded in a film that mocks its white cast’s desperate efforts to receive recognition, often labelling them “children” for this selfish desire. As Samantha, daughter to Riggan (Birdman’s antihero), puts it to her father in her wonderful monologue lambasting the egotism of celebrity culture: Riggan is adapting Raymond Carver’s work for the stage “for 1000 old, rich, white people” in a desperate effort to regain publicity after his fifteen minutes of fame in the early ’90s when he played the superhero Birdman.

67th Annual Gala

The Salvation Army Greater New York Division’s 67th Annual Gala. Honoring The Actors Fund (The Pinnacle of Achievement Award) and Jameel McClain (The Community Service Award). Special Performances by Tony Yazbeck, Marin Mazzie and Jason Danieley. Co-Hosts Kathie Lee Gifford and Hoda Kotb. December 1, 2014, The New York Marriott Marquis.

BCRE Brack Capital Real Estate USA congratulates The Salvation Army for all of their continuing good works.

LETTER FROM THE DIVISIONAL LEADERS, LT. COLONELS GUY D. & HENRIETTA KLEMANSKI

Welcome to The Salvation Army’s 67th Annual Christmas Gala! The Salvation Army in Greater New York has a rich history of helping and bringing hope to those who are in need. Thousands of people across the Greater New York area stand united in support of The Salvation Army’s mission as we go about “Doing the Most Good.” We are privileged to serve God by serving others and we fight with conviction to battle against the elements that keep people at the margins. We serve our neighbors, friends, and family in an effort to help rebuild lives with compassionate [...]

[...] all have used their God-given talents to touch the hearts of audiences everywhere. Meanwhile, The Actors Fund, this year’s Pinnacle of Achievement honoree, has been serving entertainers and performers in need for over 130 years. In 2015, The Salvation Army will commemorate its worldwide Sesquicentennial anniversary. We will celebrate those who have given to The Salvation Army, as well as the success and triumphs of those who have received.

Dramaturgies of Power a Dissertat

UNIVERSITY OF CALIFORNIA, SAN DIEGO; UNIVERSITY OF CALIFORNIA, IRVINE. The Master of Ceremonies: Dramaturgies of Power. A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in Drama and Theatre by Laura Anne Brueckner. Committee in charge: University of California, San Diego: Professor James Carmody, Chair; Professor Judith Dolan; Professor Manuel Rotenberg; Professor Ted Shank; Professor Janet Smarr. University of California, Irvine: Professor Ian Munro. 2014. © Laura Anne Brueckner, 2014. All rights reserved. The Dissertation of Laura Anne Brueckner is approved, and it is acceptable in quality and form for publication on microfilm and electronically. University of California, San Diego; University of California, Irvine. 2014. DEDICATION: This study is dedicated in large to the sprawling, variegated community of variety performers, whose work (rarely recorded and rarely examined, so often vanishing into thin air) reveals so much about the workings of our heads and hearts; and my committee chair Jim Carmody, whose astonishing insight, intelligence, pragmatism, and support has given me a model for the kind of teacher I hope to be; and Christopher

Lettre Concierge

SOFITEL NEW YORK, 45 WEST 44TH STREET, NEW YORK, NY 10036. Telephone: 212-354-8844; Concierge: 212-782-4051; HOTEL: WWW.SOFITEL-NEW-YORK.COM; RESTAURANT: WWW.GABYNYRESTAURANT.COM; FACEBOOK: FACEBOOK.COM/SOFITELNEWYORKCITY; INSTAGRAM: SOFITELNYC, SOFITELNORTHAMERICA; TWITTER: @SOFITELNYC. MONTHLY NEWSLETTER, JANUARY 2015

GABY EVENT: NATIONAL CHEESE LOVER’S DAY. Tuesday January 20th, 5PM – 9PM. We invite you to join us for a cheese and wine tasting in celebration of national cheese lover’s day. Please ask our restaurant for more details.

HOTEL EVENT: STAY LONGER AND SAVE. This January, take a break and indulge in one of our unique Sofitel hotels in North America and Save! Enjoy the magnificent views from the Sofitel Los Angeles at Beverly Hills, experience the energizing atmosphere of Manhattan while staying at the Sofitel New York, soak in the warm sun by the pool of the Sofitel Miami and much more… Chicago, Philadelphia, Washington DC, San Francisco, Montreal, New York, Los Angeles and Miami. Discover all our Magnifique addresses. Book your reservation by Saturday, February 28th, 2015 and save up to 20% on your stay in all the Sofitel hotels in the US and Canada. With Sofitel Luxury Hotels, the longer you stay, the more you save.

CITY EVENT: BROADWAY MUSICAL THEATER SHOWS; A SLICE OF BROOKLYN BUS TOURS

“Hamilton”: Opens January 20th, 2015. The brilliant Lin-Manuel Miranda opens his latest show, “Hamilton,” a rap musical about the life of American Founding Father Alexander Hamilton.

“Honeymoon in Vegas”: Opens January 15th. In previews now, a new musical comedy starring Tony Danza, famous for the TV show “Who’s the Boss,” based on the 1992 [...]

Academy Awards

Analysis of the digital conversation for the 2018 ACADEMY AWARDS. March, 2018

CONTEXT: ACADEMY AWARDS, 2018. The 90th Oscar ceremony took place on March 4th, 2018. The prize is given by the AMPAS to the cream of the crop in movies, recognizing the excellence of the industry’s professionals, and is considered the greatest honor in movies worldwide.

THE AWARDS: THE PRIDE OF MEXICO. Lately, it has become common to see Mexicans participating in the Oscars. During Oscars 86, 87 & 88, a Mexican took 2 statues, both as best director & best movie. This is why it is not surprising to see Mexico’s participation this year; however, we had never seen such a Mexican presence as this one. Last year, the current president of the US, Donald Trump, said while being sworn in that he would put up a wall between the US and Mexico so as to avoid immigration by illegal aliens from Latin America. Stemming from this fact and others, such as the renegotiation of NAFTA, the relationship between the countries has suffered. This situation has generated friction between the countries, yet “The Academy” has shown itself to be against these remarks and has done so in every ceremony, appealing to equality and inclusion for all cultures, preferences and genders.

DONALD TRUMP VS. MÉXICO. After the 87th Academy Awards, when Mexican national Alejandro González Iñárritu was awarded 3 Oscars for his film “Birdman”, current US president Donald Trump tweeted a series of messages of disapproval:

THE MOST MEXICAN ACADEMY AWARDS. At the 90th Academy Awards Mexico was more present than ever. “Coco”, a Disney Pixar film centered on the Mexican tradition of the Day of the Dead, was nominated and awarded as best animated movie & best [...] “The Shape of Water”, written and directed by filmmaker Guillermo del Toro, was nominated for 14 awards, of which it got 4.

GCSE Sources and Links for the Spoken Language

GCSE Sources and Links for the Spoken Language. English Language Spoken Language Task Support: Sources and Links for: Interviews and Dialogue (2014) Themes. N.B. Many of these sources and links have cross-over and are applicable for use as spoken language texts for: Formal v Informal (2015) Themes. As far as possible this feature has been identified in the summary and content explanation in the relevant section below. Newspaper Sources: The Daily Telegraph (British broadsheet newspaper) website link: www.telegraph.co.uk. This site/source has an excellent archive of relevant, accessible clips and interviews. The Guardian (British broadsheet newspaper) website and link to their section dedicated to Great Interviews of the 20th Century. Link: http://www.theguardian.com/theguardian/series/greatinterviews This cites iconic interviews, such as the Nixon interview, an excellent example of a formal spoken language text that can be used alongside an informal political text (see link under ‘Political Speech’) such as Barack Obama chatting informally in a pub or his interview at home with his wife Michelle. Richard Nixon interview with David Frost. Link: Youtube http://www.youtube.com/watch?v=2c4DBXFDOtg&list=PL02A5A9ACA71E35C6 Another iconic interview can be found at the link below: Dennis Potter interview with Melvyn Bragg. Link: Youtube http://www.youtube.com/watch?v=oAYckQbZWbU Sources/Archive for Television Interviews: The Radio Times (media source with archive footage of television and radio clips). There is a chronological timeline of iconic and significant television interviews dating from 1959–2011. Link: http://www.radiotimes.com/news/2011-08-16/video-the-greatest-broadcast-interviews-of-all-time Fern Britton Meets (BBC, 2009).

A Self-Reflection on the Creation and Practice of Audition Technique in the Business of Live Theatre

East Tennessee State University, Digital Commons @ East Tennessee State University. Undergraduate Honors Theses, Student Works, 5-2021. Who, What, Why: A Self-Reflection on the Creation and Practice of Audition Technique in the Business of Live Theatre. Kenneth Thomas. Follow this and additional works at: https://dc.etsu.edu/honors Part of the Acting Commons, Fine Arts Commons, Other Theatre and Performance Studies Commons, and the Performance Studies Commons. Recommended Citation: Thomas, Kenneth, "Who, What, Why: A Self-Reflection on the Creation and Practice of Audition Technique in the Business of Live Theatre" (2021). Undergraduate Honors Theses. Paper 633. https://dc.etsu.edu/honors/633 This Honors Thesis - Open Access is brought to you for free and open access by the Student Works at Digital Commons @ East Tennessee State University. It has been accepted for inclusion in Undergraduate Honors Theses by an authorized administrator of Digital Commons @ East Tennessee State University. For more information, please contact [email protected]. Who, What, Why: A Self-Reflection on the Creation and Practice of Audition Technique in the Business of Live Theatre. By Kenneth Hunter Thomas. An Undergraduate Thesis Submitted in Partial Fulfillment of the Requirements for the University Honors Scholars Program, The Honors College, University Honors Program, East Tennessee State University. Hunter Thomas, Date. Cara Harker, Thesis Mentor, Date.

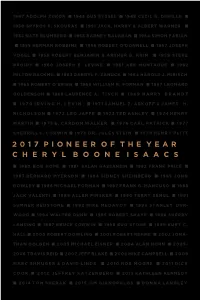

2017 Pioneer of the Year Cheryl Boone Isaacs

1947 ADOLPH ZUKOR • 1948 GUS EYSSEL • 1949 CECIL B. DEMILLE • 1950 SPYROS P. SKOURAS • 1951 JACK, HARRY & ALBERT WARNER • 1952 NATE BLUMBERG • 1953 BARNEY BALABAN • 1954 SIMON FABIAN • 1955 HERMAN ROBBINS • 1956 ROBERT O’DONNELL • 1957 JOSEPH VOGEL • 1958 ROBERT BENJAMIN & ARTHUR B. KRIM • 1959 STEVE BROIDY • 1960 JOSEPH E. LEVINE • 1961 ABE MONTAGUE • 1962 MILTON RACKMIL • 1963 DARRYL F. ZANUCK • 1964 HAROLD J. MIRISCH • 1965 ROBERT O’BRIEN • 1966 WILLIAM R. FORMAN • 1967 LEONARD GOLDENSON • 1968 LAURENCE A. TISCH • 1969 HARRY BRANDT • 1970 IRVING H. LEVIN • 1971 SAMUEL Z. ARKOFF & JAMES H. NICHOLSON • 1972 LEO JAFFE • 1973 TED ASHLEY • 1974 HENRY MARTIN • 1975 E. CARDON WALKER • 1976 CARL PATRICK • 1977 SHERRILL C. CORWIN • 1978 DR. JULES STEIN • 1979 HENRY PLITT • 1980 BOB HOPE • 1981 SALAH HASSANEIN • 1982 FRANK PRICE • 1983 BERNARD MYERSON • 1984 SIDNEY SHEINBERG • 1985 JOHN ROWLEY • 1986 MICHAEL FORMAN • 1987 FRANK G. MANCUSO • 1988 JACK VALENTI • 1989 ALLEN PINSKER • 1990 TERRY SEMEL • 1991 SUMNER REDSTONE • 1992 MIKE MEDAVOY • 1993 STANLEY DURWOOD • 1994 WALTER DUNN • 1995 ROBERT SHAYE • 1996 SHERRY LANSING • 1997 BRUCE CORWIN • 1998 BUD STONE • 1999 KURT C. HALL • 2000 ROBERT DOWLING • 2001 ROBERT REHME • 2002 JONATHAN DOLGEN • 2003 MICHAEL EISNER • 2004 ALAN HORN • 2005–2006 TRAVIS REID • 2007 JEFF BLAKE • 2008 MIKE CAMPBELL • 2009 MARC SHMUGER & DAVID LINDE • 2010 ROB MOORE • 2011 DICK COOK • 2012 JEFFREY KATZENBERG • 2013 KATHLEEN KENNEDY • 2014 TOM SHERAK • 2015 JIM GIANOPULOS • DONNA LANGLEY. 2017 PIONEER OF THE YEAR: CHERYL BOONE ISAACS. Welcome. On behalf of the Board of Directors of the Will Rogers Motion Picture Pioneers Foundation, thank you for attending the 2017 Pioneer of the Year Dinner.

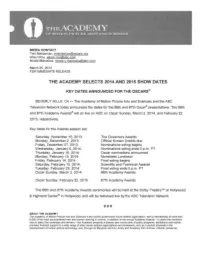

The Academy Selects 2014 and 2015 Show Dates

MEDIA CONTACT: Teni Melidonian, [email protected]; Alison Rou , [email protected]; Nicole Marostica, [email protected]. March 25, 2013. FOR IMMEDIATE RELEASE. THE ACADEMY SELECTS 2014 AND 2015 SHOW DATES: KEY DATES ANNOUNCED FOR THE OSCARS®. BEVERLY HILLS, CA - The Academy of Motion Picture Arts and Sciences and the ABC Television Network today announced the dates for the 86th and 87th Oscar® presentations. The 86th and 87th Academy Awards® will air live on ABC on Oscar Sunday, March 2, 2014, and February 22, 2015, respectively. Key dates for the Awards season are: Saturday, November 16, 2013: The Governors Awards; Monday, December 2, 2013: Official Screen Credits due; Friday, December 27, 2013: Nominations voting begins; Wednesday, January 8, 2014: Nominations voting ends 5 p.m. PT; Thursday, January 16, 2014: Oscar nominations announced; Monday, February 10, 2014: Nominees Luncheon; Friday, February 14, 2014: Final voting begins; Saturday, February 15, 2014: Scientific and Technical Awards; Tuesday, February 25, 2014: Final voting ends 5 p.m. PT; Oscar Sunday, March 2, 2014: 86th Academy Awards; Oscar Sunday, February 22, 2015: 87th Academy Awards. The 86th and 87th Academy Awards ceremonies will be held at the Dolby Theatre™ at Hollywood & Highland Center® in Hollywood, and will be televised live by the ABC Television Network. ### ABOUT THE ACADEMY: The Academy of Motion Picture Arts and Sciences is the world's preeminent movie-related organization, with a membership of more than 6,000 of the most accomplished men and women working in cinema.
In addition to the annual Academy Awards - in which the members vote to select the nominees and winners - the Academy presents a diverse year-round slate of public programs, exhibitions and events; provides financial support to a wide range of other movie-related organizations and endeavors; acts as a neutral advocate in the advancement of motion picture technology; and, through its Margaret Herrick Library and Academy Film Archive, collects, preserves, ...