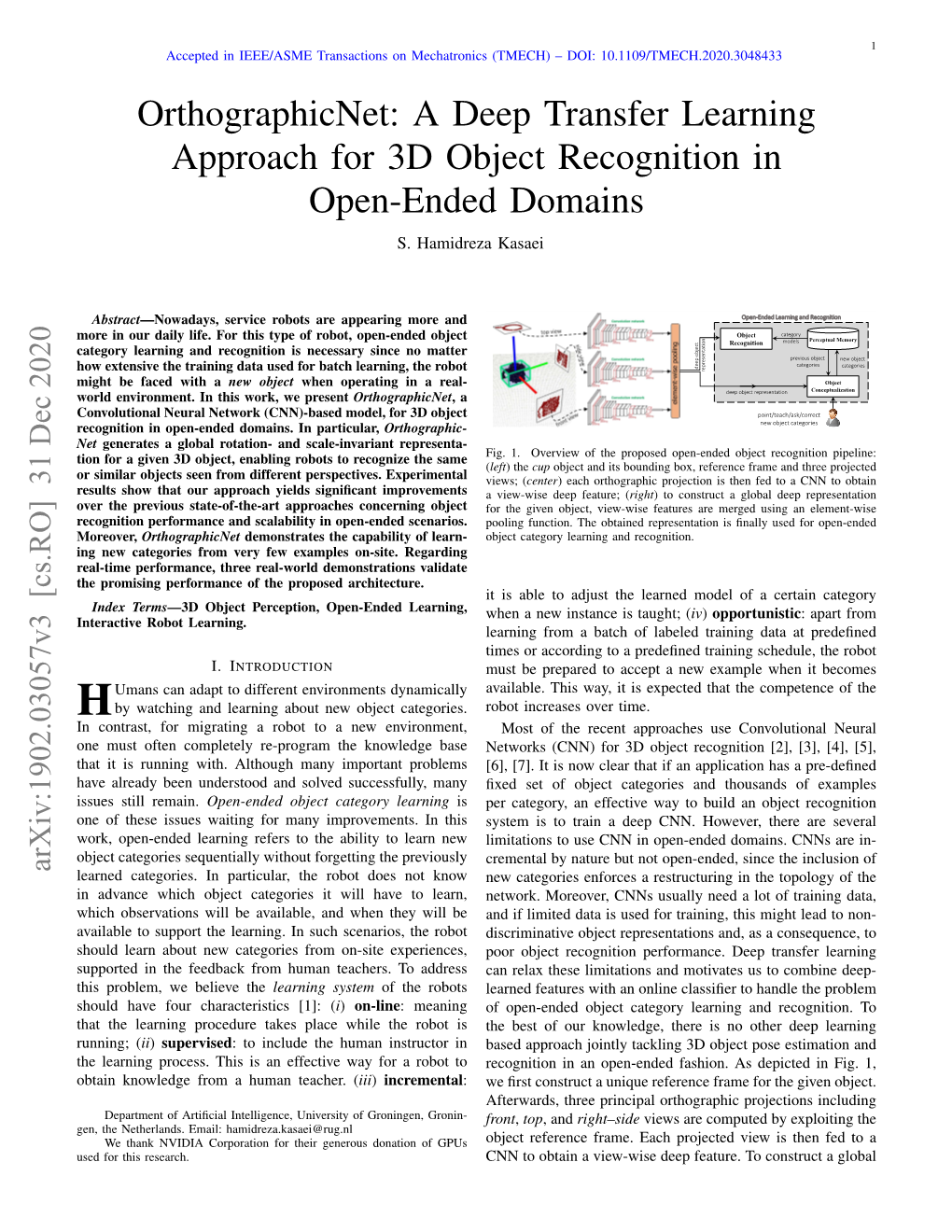

A Deep Transfer Learning Approach for 3D Object Recognition in Open-Ended Domains S

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Vol 39 No 48 November 26

Kansas Register. State of Kansas, Secretary of State. Notice of Forfeiture.

In accordance with Kansas statutes, the following business entities organized under the laws of Kansas and the foreign business entities authorized to do business in Kansas were forfeited during the month of October 2020 for failure to timely file an annual report and pay the annual report fee.

Please Note: The following list represents business entities forfeited in October. Any business entity listed may have filed for reinstatement and be considered in good standing. To check the status of a business entity go to the Kansas Business Center’s Business Entity Search Station at https://www.kansas.gov/bess/flow/main?execution=e2s4 (select Business Entity Database) or contact the Business Services Division at 785-296-4564.

Notice of Forfeiture - Domestic: 2AMD, LLC, Leawood, KS; 2H Properties, LLC, Winfield, KS; 2jake’s Jaylin & Jojo, L.L.C., Kansas City, KS; 2JCO, LLC, Wichita, KS; 2JFK, LLC, Wichita, KS; 2JK, LLC, Overland Park, KS; 2M, LLC, Dodge City, KS; 2nd Chance Lawn and Landscape, LLC, Wichita, KS; 2nd to None, LLC, Wichita, KS; 2nd 2 None, LLC, Wichita, KS; 2shutterbugs, LLC, Frontenac, KS; 2U Farms, L.L.C., Oberlin, KS; 2u4less, LLC, Frontenac, KS; 20 Angel 15, LLC, Westmoreland, KS; 2000 S 10th St, LLC, Leawood, KS; 2007 Golden Tigers, LLC, Wichita, KS; 21/127, L.C., Wichita, KS; 21st Street Metal Recycling, LLC, Wichita, KS; 210 Lecato Ventures, LLC, Mullica Hill, NJ; 2111 Property, L.L.C., Lawrence, KS; 21650 S Main, LLC, Colorado Springs, CO; 217 Media, LLC, Hays, KS -

Thermal Processing Outline

Thermal Processing Outline
1. Classification of Foods based on pH and aw
2. Microbiology
3. Blanching, Pasteurization, ESL (incl. Ultra-Pasteurization), UHT, Hot Fill, Minimal processing
4. Canning Operations
5. Thermal Processing Equipment
6. Kinetics (D & z values)
7. Process Safety (F value) and Product Quality (C value)
8. Process Optimization
9. Shelf Life
10. Time Temperature Integrator (TTI)

1. Classification of Foods Based on pH & aw

Classification of Foods based on pH
• Low acid: pH ≥ 4.6; Acid: pH < 4.6 (C. botulinum)
• More specific classification
  – Low acid: pH > 5.3
    • Red meat, poultry, seafood, milk, corn, peas, lima beans, potatoes, cauliflower
  – Medium acid: 4.5 < pH < 5.3
    • Spaghetti, soups, sauces, asparagus, beets, pumpkin, spinach, green beans, turnip, cabbage
  – Acid: 3.7 < pH < 4.5
    • Tomato, pear, fig, pineapple, apricot, yogurt, white cheese, beer
  – High acid: pH < 3.7
    • Sauerkraut, pickles, berries, citrus, rhubarb, wine, vinegar, plums, currants, apples, strawberries, peaches

Classification of Foods Based on mc or aw
• High moisture foods (50+% -- 70-99%)
  – Fruits, vegetables, juices, raw meat, fish
• Intermediate moisture foods (15-50%)
  – Bread, hard cheeses, sausages
• Low moisture foods (0-15%)
  – Dehydrated vegetables, grains, milk powder, dry soup mixes

Importance of aw: Honey at 20% mc is shelf stable, while potato at 20% is not

2. Microbiology

Classification of Bacteria
• Based on Oxygen
  – Aerobes (Need oxygen for growth)
    • Microaerophile: Need only small amount of oxygen for growth
  – Anaerobes
    • Obligate: -

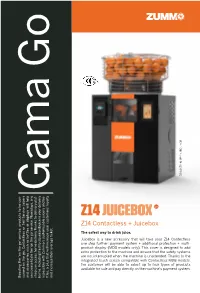

Technical Data Sheet Z14 Juice Box English

Boosting the ‘on the go’ service: products to be consumed on the go. Contactless or Self-Service juicers and accessories such as bottles or displays will help you consolidate the ‘on the go’ service. Nowadays, any shop can access home delivery thanks to delivery platforms. Including freshly squeezed juice in your delivery is possible with Zummo’s customisable juicers and bottles, a plus that will increase your customers’ loyalty and increase their average ticket.

Go range. Z14 Contactless + Juicebox: the safest way to drink juice. Juicebox is a new accessory that will take your Z14 Contactless one step further: payment system + additional protection + multi-product display (MDB models only). This cover is designed to add extra protection to the machine and ensure that the safety systems are not interrupted when the machine is unattended. Thanks to the integrated touch screen compatible with Contactless MDB models, the customer will be able to select up to four types of products available for sale and pay directly on the machine’s payment system.

Z14 juicebox GO ZI14x16TA-N-M** + JBDL-NOR

1. EVS®Advanced: Unique vertical juice extraction system; a Zummo exclusive. Based on the manual juice extraction system, it achieves maximum profitability, obtaining the purest possible juice with the greatest operational functionality.

2. Juice extraction kits: The different-sized juice extraction kits help to maximise the yield of the juice extracted from the fruit depending on its diameter. Easily identifiable thanks to the characteristic colour of each range: Medium (M): Orange; Large (L): Maroon. A system developed by Zummo to assemble and disassemble the balls of the kit in just one movement. -

Matchstick Dollhouses

MATCHSTICK DOLLHOUSES. A Thesis Presented to The Graduate Faculty of The University of Akron In Partial Fulfillment of the Requirements for the Degree Master of Fine Arts. Nicholas Reali. May, 2015.

Thesis Approved: Advisor, Mr. Christopher Barzak; Faculty Reader, Dr. Mary Biddinger; Faculty Reader, Mr. Eric Wasserman; Department Chair, Dr. William Thelin. Accepted: Dean of the College, Dr. Chand Midha; Interim Dean of the Graduate School, Dr. Rex Ramsier.

TABLE OF CONTENTS
I. COTTONMOUTH KISS
II. SOMEONE’S GOT TO HELP ME DIG
III. THE UNIVERSE IS SHAPED EXACTLY LIKE THE EARTH
IV. WHEN I GROW UP
V. MY BROTHER THE ASTRONAUT
VI. THE FIDDLE
VII. THE VILLAGE
VIII. LIMBO
IX. CEREAL BOX BEAR TRAPS
X. BIGFOOT’S WIFE
XI. WHAT THE CAT DRAGGED IN
XII. THE COLONEL
XIII. DISAPPEARING ACT
XIV. PROSPERPINA
XV. FOWL -

Elsa Dorfman, Portraitist

Criminal Injustice • Presidential Transition • Microbial Earth. SEPTEMBER-OCTOBER 2017 • $4.95. Elsa Dorfman, Portraitist. Reprinted from Harvard Magazine. For more information, contact Harvard Magazine, Inc. at 617-495-5746. SEPTEMBER-OCTOBER 2017, VOLUME 120, NUMBER 1.

FEATURES
The Portraitist | by Sophia Nguyen. Elsa Dorfman’s Polaroid photographs captured generations, uniquely
Vita: Carl Thorne-Thomsen | by Bonnie Docherty. Brief life of a man of principle: 1946-1967
Life Beyond Sight | by Jonathan Shaw. Tiny organisms loom large in shaping Planet Earth
Criminal Injustice | by Michael Zuckerman. Alec Karakatsanis v. “money-based detention” in America

JOHN HARVARD’S JOURNAL
President Faust sets her departure, campus building booms, making a MOOC at HarvardX, around and across the Bay of Bengal, proposed final-club finis, back-to-school bookshelf, crossing the Channel the hard way, a business-engineering matchup, new Press director, College friendship, new Undergraduate Fellows, and field hockey’s finely tuned coach

DEPARTMENTS
Cambridge 02138 | Letters from our readers, and a comment on presidential-search priorities
The View from Mass Hall
Right Now | Enhanced amputation, analyzing anti-aging agents, why cities thrive
Harvard2 | Autumn events, river views of Boston, a Shaker village’s enduring appeal, World’s End, Watertown bistro
Montage | Perfecting the pop song, leadership, American bards, Malka Older’s sci-fi thrillers, seeing the color in art, Adam and Eve, and more
Alumni | Precise bird painter, new alumni president
The College Pump | Crimson satire, memorial-minute meanings, Memorial Hall aflame -

Way to Grow News for Urban Gardeners

way to grow: news for urban gardeners. AUGUST/SEPTEMBER 2015 | VOLUME 38 | NUMBER 10

Gardening for Climate Change
Laura Matter, Garden Hotline Coordinator

The past two summers’ high temperatures broke records. Tomatoes loved it. Before that, the warmest summer on record was in 1967. So far, June and July have hit record highs. Our familiar Northwest summers are changing. What’s wrong with early tomatoes? Nothing! But our warming summers indicate more changes to come. Climatologists’ research indicates an upward trend in global temperatures caused by carbon dioxide emissions. These gasses accumulate in the atmosphere and warm the planet, leading to deforestation and loss of reflective glaciers, warming the planet further. This heat may benefit the Northwest gardener for a few decades, increasing plant diversity and offering a longer growing season. But the benefit might be mitigated by challenges such as the increase in pests that accompany warmer and longer seasons. Severe weather like hail, wind storms and wildfires will increasingly take their toll. The Northwest will see increasing humidity while summers may get drier.

Photo caption: Matthew McDermott, Jess Bitting and Emma Shorr (left to right) begin building the walk-in cooler at our new warehouse in Rainier Beach neighborhood.

Hello Food and Farm Hub
Chris Iberle, Food Hub Manager

What is cool as a walk-in cooler, attracts local farmers, delivers CSA boxes, makes juice and sells chicken feed? A new Food and Farm Hub in Rainier Beach, that’s what! Seattle Tilth Produce has been looking for space to pack our 300 CSA shares and 150 Good Food Bags, increase our cold storage and receive deliveries from local farmers. We’ve found our new home! Seattle’s Southeast Effective Development (SEED) signed a master lease on the 5000 -

Ordinary Council Meeting

ORDINARY COUNCIL MEETING MINUTES. The Ordinary Meeting of Council was held in the Council Chambers, Welcome Road, Karratha on Monday, 19 May 2014. CHRIS ADAMS, CHIEF EXECUTIVE OFFICER.

No responsibility whatsoever is implied or accepted by the Shire of Roebourne for any act, omission or statement or intimation occurring during Council or Committee Meetings. The Shire of Roebourne disclaims any liability for any loss whatsoever and howsoever caused arising out of reliance by any person or legal entity on any such act, omission or statement or intimation occurring during Council or Committee Meetings. Any person or legal entity who acts or fails to act in reliance upon any statement, act or omission made in a Council or Committee Meeting does so at that person’s or legal entity’s own risk. In particular and without derogating in any way from the broad disclaimer above, in any discussion regarding any planning application or application for a license, any statement or intimation of approval made by any member or Officer of the Shire of Roebourne during the course of any meeting is not intended to be and is not taken as notice of approval from the Shire of Roebourne. The Shire of Roebourne warns that anyone who has any application lodged with the Shire of Roebourne must obtain and should only rely on WRITTEN CONFIRMATION of the outcome of the application, and any conditions attaching to the decision made by the Shire of Roebourne in respect of the application. Signed: Chris Adams - Chief Executive Officer

DECLARATION OF INTERESTS (NOTES FOR YOUR GUIDANCE) (updated 13 March 2000). A member who has a Financial Interest in any matter to be discussed at a Council or Committee Meeting, which will be attended by the member, must disclose the nature of the interest: (a) In a written notice given to the Chief Executive Officer before the Meeting or; (b) At the Meeting, immediately before the matter is discussed. -

Abstracts Listed by the Project Identification Number by the Title

Abstracts listed by the project identification number by the title

1. Domestic Violence Myths and Neutralizing Attitudes of Society Towards Victims. Molly Hartsough

This research project intends to investigate the relationship between societal beliefs in domestic violence myths and neutralizing attitudes that serve to minimize the experiences of domestic violence victims. Data will be collected from a mid-size urban university in northeastern Ohio. A purposive sample of 800-1,000 graduate and undergraduate students will be representative of gender, age, racial/ethnic backgrounds, and majors of the student population. The domestic violence myths utilized in this study are those previously defined by other researchers, notably Peters (2003), Westbrook (2009), and Policastro et al. (2013). Neutralizing attitudes are to be defined as actions performed or refused to be performed by members of society towards domestic violence victims (Scott 2007). Examples may include actions such as refusing to call 911 for victims of repeated abuse episodes, not taking a victim’s admittance of being abused seriously, or refusing to testify in court on behalf of a victim. The proposed research will test hypotheses of a positive relationship between beliefs in the domestic violence myths and neutralizing attitudes, and whether gender, race/ethnicity and student major may influence beliefs in the domestic violence myths and neutralizing attitudes. If supported, the research findings will have profound real-world implications, especially for those students pursuing specific majors and careers related to victim services, such as criminal justice, social work, psychology, and pre-med majors.

2. Capital Punishment. Monica Woolensack & Haley Lapcevich

We are going to compare states with and without the death penalty. -

“Beyond the Balloon” and Other Stories

City University of New York (CUNY). CUNY Academic Works. Dissertations and Theses, City College of New York, 2012. “Beyond the Balloon” and Other Stories. Mary F. Sharkey, CUNY City College. How does access to this work benefit you? Let us know! More information about this work at: https://academicworks.cuny.edu/cc_etds_theses/444 Discover additional works at: https://academicworks.cuny.edu This work is made publicly available by the City University of New York (CUNY). Contact: [email protected]

“Beyond the Balloon” and Other Stories by Mary F. Sharkey. Advisor: Linsey Abrams. May 1, 2012. Submitted in partial fulfillment of the requirements for the degree Master of Fine Arts of the City College of the City University of New York.

Preface. “Beyond the Balloon” and Other Stories is a collection of writing that explores family interaction around themes of disability and illness, race, and marital status. This body of work presents realistic characters who are navigating ordinary lives that present unexpected challenges. “Beyond the Balloon” is the first seven chapters of a novel that explores the family and community of a boy with autism. It highlights the communication deficits that exist within autism as well as the wider world around it. A couple, already existing within a troubled marriage, must confront their son’s atypical behavior.
The remaining collection of stories includes a coming-of-age story of two young adolescents who share most intimacies but cannot share a racist incident in the same way; a story of a father and son exploring what is left of their lives after a difficult divorce; an adolescent boy confronted with the death of his infant sister at the hands of his fanatically anti-medical establishment parents; a woman in her thirties who thinks she has decided she doesn’t want to have children but is now confronted with two close friends who are pregnant; and a story of a family with two divergent sons, one who grows away to independence and another who continues to live as an adult child with his aging mother. -

Cooking and Eating Vegan Can Be Easy If You Have a Few Basic Recipes

Easy Vegan Meals and Recipes

Cooking and eating vegan can be easy if you have a few basic recipes, with variations, that you can rotate through. Here are a few suggestions. Words in all caps indicate a recipe!

Ethnic choices can provide variety and interest.
Chinese: tofu, tempeh or seitan STIR-FRY with veggies.
Japanese: store-bought sushi (vegan options)
Mexican: CHILI; BURRITOS or TACOS with bean or vegan “meat” filling
Middle Eastern: falafels, HUMMUS and TABOULI with pita bread; stuffed grape leaves
Indian: CURRY
Italian: PASTA and PIZZA
Thai: STIR-FRY with a different flavor profile than Chinese, often using coconut, peanuts, basil and chilis

Tip for Oil-Free Cooking: When the instructions for a recipe call for sautéing onions and garlic in oil, substitute water or wine.

BEANS AND RICE (Meal #2) (One of the two easiest meals in the world)

Make some BROWN RICE (Meal # 4) or better yet, have some left over from another meal. Add a can of beans, drained and rinsed, or some leftover BEANS FROM SCRATCH (Meal #3) from another meal. Add salsa, olives, veggies, and fresh or dried herbs and spices to achieve any ethnic flavor profile (cumin, coriander and chili powder for Mexican; curry powder for Indian; basil and oregano for Italian). All 4 of Wegmans flavored canned beans are vegan: Caribbean, Chana Masala, Southwest, and Tuscan.

BURRITOS OR TACOS (Meal #3)

1 package burrito shells (soft) or taco shells (hard)*
1 or 2 cans fat-free refried beans, or make your own**
Condiments: salsa, canned ripe olives, vegan sour cream (Tofutti brand is one kind), chopped red onion, shredded -

Urbandale Community School District Chris Gunnare

URBANDALE COMMUNITY SCHOOL DISTRICT BOARD OF DIRECTORS’ MEETING. MONDAY, SEPTEMBER 12, 2016. BOARD MEETING, ORGANIZATIONAL MEETING AND WORK SESSION – 6:00 P.M. KAREN ACRES ELEMENTARY, 3500 74TH STREET. CHRIS GUNNARE, PRESIDENT.

Our Mission: teach all/reach all. Our Vision: Urbandale will be a school district that brings learning to life for everyone. UCSD School Board Mission: To partner with stakeholders to teach all and reach all through governance of Board Policy and Operating Protocol. Urbandale is a national leader in CHARACTER COUNTS!, endeavoring at all times to promote and model the principles of trustworthiness, respect, responsibility, fairness, caring and citizenship. In conducting tonight’s meeting, we expect that all participants will act in a respectful manner consistent with these principles.

AGENDA
I. Call To Order and Roll Call
II. Motion to Appoint a Secretary Pro Tempore of the Board for the Evening’s Meetings
III. Approval of Agenda
IV. Communication from the Public – School Community Relations (1001)
V. Report of the Superintendent of Schools
A. Policy Update Proposal for Board Policy 306 Succession of Authority
VI. Report of the President
A.
VII. Consent Agenda Items Business Procedures (801)
A. Approval of August 15, 2016 Board Meeting Minutes
B. Approval of Open Enrollment
C. Approval of Personnel Report
D. Approval of Job Description and Position
E. Approval of Field Trips, Overnight Travel, or Out of State Travel
F. Approval of Contracts and Agreements
a. Facility Use Agreement with Aldersgate United Methodist Church for the temporary use by Karen Acres during the completion of the renovation project. -

Minutes for the Conservation Commission, April 24, 2019

MINUTES FOR THE CONSERVATION COMMISSION. Wednesday, April 24, 2019. Siskiyou Room, 51 Winburn Way.

1. Call to Order
The April meeting of the Conservation Commission was called to order at 6:00 p.m. by Vice Chair Roxane Beigel-Coryell. Commissioners Risa Buck, Larry Cooper, Marion Moore, Jamie Rosenthal, and Bryan Sohl were present. Commissioners Marni Koopman, James McGinnis, and David Sommer were absent for the beginning of the meeting. Staff members Stu Green, Julie Smitherman, and Kevin Caldwell were present. Councilor Julie Akins and staff member Adam Hanks were absent. As this was Cooper’s first meeting since being appointed, each Commissioner and staff member introduced themselves.

2. Consent Agenda
2.1. March 27, 2019 Meeting Minutes
Beigel-Coryell asked for any corrections on the March 27, 2019 meeting minutes. Buck moved to approve the minutes as written with Moore providing a second. After no discussion Beigel-Coryell called for a vote. Cooper abstained from voting as he was absent from the previous meeting. All other present members voted in favor of approving the minutes and the motion passed.

3. Announcements
3.1. Next Regular Meeting: May 22, 2019
Beigel-Coryell announced that the next meeting will be held on May 22, 2019 and asked the Commission to let the Chair and staff know if anyone would be absent. Sohl and Green stated that they will not be able to attend the next meeting. Rosenthal might be absent for the next meeting as well.
3.2. Upcoming Sub-committee meetings
No Sub-committee meetings are currently scheduled.