El-Haj, M. . "Habibi

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

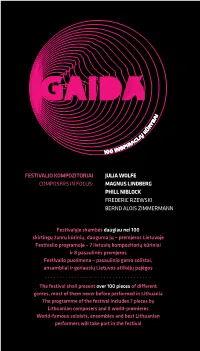

Julia Wolfe Magnus Lindberg Phill Niblock Frederic

FESTIVAL COMPOSERS: JULIA WOLFE. COMPOSERS IN FOCUS: MAGNUS LINDBERG, PHILL NIBLOCK, FREDERIC RZEWSKI, BERND ALOIS ZIMMERMANN. The festival will present over 100 pieces of different genres, most of them never before performed in Lithuania. The programme of the festival includes 7 pieces by Lithuanian composers and 8 world premieres. World-famous soloists, ensembles and the best Lithuanian performers will take part in the festival. PROGRAMME | CONTENTS. In Focus: Julia Wolfe. Also in focus: Magnus Lindberg, Phill Niblock, Frederic Rzewski, Bernd Alois Zimmermann. October 20, Saturday, 8 p.m., Vilnius Congress Hall: LAURIE ANDERSON (USA), THE LANGUAGE OF THE FUTURE. October 21, Sunday, 8 p.m., MO Museum: SYNAESTHESIS IN CELSIUS. Panayiotis Kokoras, Conscious Sound (2014); Alexander Schubert, Sugar, Maths and Whips (2011); Tomas Kutavičius, Ritus rhythmus (2018, premiere)*; Louis Andriessen, Workers Union (1975). October 24, Wednesday, 7 p.m., Contemporary Art Centre: CHORDOS string quartet, with DAUMANTAS KIRILAUSKAS (piano). Laurie Anderson. October 26, Friday, 7 p.m., Contemporary Art Centre: SYNAESTHESIS IN FAHRENHEIT. Florent Ghys, An Open Cage (2012); Frederic Rzewski, Les Moutons de Panurge (1969); Meredith Monk, Double Fiesta (1986); Julia Wolfe, Stronghold (2008); Julia Wolfe, Reeling (2012); Julia Wolfe, Big Beautiful Dark and Scary (2002). October 27, Saturday, 7 p.m., Lithuanian National Philharmonic: LITHUANIAN NATIONAL SYMPHONY ORCHESTRA; RŪTA RIKTERĖ and ZBIGNEVAS IBELHAUPTAS (piano duo); COLIN CURRIE (body percussion, United Kingdom).

SEM 62 Annual Meeting

SEM 62nd Annual Meeting, Denver, Colorado, October 26 – 29, 2017. Hosted by University of Denver, University of Colorado Boulder, and Colorado College. SEM 2017 Annual Meeting Table of Contents: Sponsors; Committees, Board, Staff, and Council; Welcome Messages; Exhibitors and Advertisers; General Information; Charles Seeger Lecture; Schedule at a Glance.

I Silenti the Next Creation of Fabrizio Cassol and Tcha Limberger Directed by Lisaboa Houbrechts

I SILENTI: THE NEXT CREATION OF FABRIZIO CASSOL AND TCHA LIMBERGER, DIRECTED BY LISABOA HOUBRECHTS. Notre Dame de la détérioration © The Estate of Erwin Blumenfeld, photo Erwin Blumenfeld, c. 1930. THE GOAL For several years Fabrizio Cassol has been concentrating on this form, said to be innovative, between concert, opera, dance and theatre. It brings together several artistic necessities and commitments, with the starting point of previously composed music that follows its own narrative. His recent projects, such as Coup Fatal and Requiem pour L. with Alain Platel and Macbeth with Brett Bailey, are examples of this. “I Silenti proposes to be the poetical expression of those who are reduced to silence, the voiceless, those who grow old or have disappeared with time, the blank pages of non-written letters, blindness, emptiness and ruins that could be catalysts to other ends: those of comfort, recovery, regeneration and beauty.” THE MUSIC Monteverdi’s Madrigals, the first vocal music of our written tradition to express human emotions with its dramas, passions and joys. These Madrigals, composed between 1587 and 1638, are mainly grouped around three themes: love, separation and war. The music is rooted, for the first time, in the words and their meaning, using poems by Petrarch, Tasso or Marino. It was during the evolution of this form, at the very heart of these polyphonies, that Monteverdi participated in the creation of opera as a new genre. Little by little the voices become individualized, giving birth to “arias and recitatives”, like suspended songs prolonging the narrative of languorous grievances.

Saudi Arabia.Pdf

A Saudi man with his horse (Flickr / Charles Roffey). Performance of Al Ardha, the Saudi national dance, in Riyadh (Flickr / Abraham Puthoor). SAUDI ARABIA, Dec. 2019. Table of Contents. Chapter 1 | Geography. Introduction. Geographical Divisions: Asir, the Southern Region; Rub al-Khali and the Southern Region; Hejaz, the Western Region; Nejd, the Central Region; The Eastern Region. Topographical Divisions: Deserts and Mountains. Climate. Bodies of Water: Red Sea; Persian Gulf; Wadis. Major Cities: Riyadh; Jeddah; Mecca

Song, State, Sawa Music and Political Radio Between the US and Syria

Song, State, Sawa Music and Political Radio between the US and Syria Beau Bothwell Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the Graduate School of Arts and Sciences COLUMBIA UNIVERSITY 2013 © 2013 Beau Bothwell All rights reserved ABSTRACT Song, State, Sawa: Music and Political Radio between the US and Syria Beau Bothwell This dissertation is a study of popular music and state-controlled radio broadcasting in the Arabic-speaking world, focusing on Syria and the Syrian radioscape, and a set of American stations named Radio Sawa. I examine American and Syrian politically directed broadcasts as multi-faceted objects around which broadcasters and listeners often differ not only in goals, operating assumptions, and political beliefs, but also in how they fundamentally conceptualize the practice of listening to the radio. Beginning with the history of international broadcasting in the Middle East, I analyze the institutional theories under which music is employed as a tool of American and Syrian policy, the imagined youths to whom the musical messages are addressed, and the actual sonic content tasked with political persuasion. At the reception side of the broadcaster-listener interaction, this dissertation addresses the auditory practices, histories of radio, and theories of music through which listeners in the sonic environment of Damascus, Syria create locally relevant meaning out of music and radio. Drawing on theories of listening and communication developed in historical musicology and ethnomusicology, science and technology studies, and recent transnational ethnographic and media studies, as well as on theories of listening developed in the Arabic public discourse about popular music, my dissertation outlines the intersection of the hypothetical listeners defined by the US and Syrian governments in their efforts to use music for political ends, and the actual people who turn on the radio to hear the music. -

Us Military Assistance to Saudi Arabia, 1942-1964

DANCE OF SWORDS: U.S. MILITARY ASSISTANCE TO SAUDI ARABIA, 1942-1964. DISSERTATION Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in the Graduate School of The Ohio State University. By Bruce R. Nardulli, M.A. The Ohio State University, 2002. Dissertation Committee: Professor Allan R. Millett, Adviser; Professor Peter L. Hahn; Professor David Stebenne. Approved by: Adviser, History Graduate Program. UMI Number: 3081949. UMI Microform 3081949. Copyright 2003 by ProQuest Information and Learning Company. All rights reserved. This microform edition is protected against unauthorized copying under Title 17, United States Code. ProQuest Information and Learning Company, 300 North Zeeb Road, PO Box 1346, Ann Arbor, MI 48106-1346. ABSTRACT The United States and Saudi Arabia have a long and complex history of security relations. These relations evolved under conditions in which both countries understood and valued the need for cooperation, but also were aware of its limits and the dangers of too close a partnership. U.S. security dealings with Saudi Arabia are an extreme, perhaps unique, case of how security ties unfolded under conditions in which sensitivities to those ties were always a central, oftentimes dominating, consideration. This was especially true in the most delicate area of military assistance. Distinct patterns of behavior by the two countries emerged as a result, patterns that continue to this day. This dissertation examines the first twenty years of the U.S.-Saudi military assistance relationship. It seeks to identify the principal factors responsible for how and why the military assistance process evolved as it did, focusing on the objectives and constraints of both U.S.

Iran 2019 Human Rights Report

IRAN 2019 HUMAN RIGHTS REPORT EXECUTIVE SUMMARY The Islamic Republic of Iran is an authoritarian theocratic republic with a Shia Islamic political system based on velayat-e faqih (guardianship of the jurist). Shia clergy, most notably the rahbar (supreme leader), and political leaders vetted by the clergy dominate key power structures. The supreme leader is the head of state. The members of the Assembly of Experts are nominally directly elected in popular elections. The assembly selects and may dismiss the supreme leader. The candidates for the Assembly of Experts, however, are vetted by the Guardian Council (see below) and are therefore selected indirectly by the supreme leader himself. Ayatollah Ali Khamenei has held the position since 1989. He has direct or indirect control over the legislative and executive branches of government through unelected councils under his authority. The supreme leader holds constitutional authority over the judiciary, government-run media, and other key institutions. While mechanisms for popular election exist for the president, who is head of government, and for the Islamic Consultative Assembly (parliament or majles), the unelected Guardian Council vets candidates, routinely disqualifying them based on political or other considerations, and controls the election process. The supreme leader appoints half of the 12-member Guardian Council, while the head of the judiciary (who is appointed by the supreme leader) appoints the other half. Parliamentary elections held in 2016 and presidential elections held in 2017 were not considered free and fair. The supreme leader holds ultimate authority over all security agencies. Several agencies share responsibility for law enforcement and maintaining order, including the Ministry of Intelligence and Security and law enforcement forces under the Interior Ministry, which report to the president, and the Islamic Revolutionary Guard Corps (IRGC), which reports directly to the supreme leader. -

Page 01 May 16.Indd

ISO 9001:2008 CERTIFIED NEWSPAPER. Thursday 16 May 2013, 6 Rajab 1434, Volume 18 Number 5701. Price: QR2. www.thepeninsulaqatar.com [email protected] | [email protected] Editorial: 4455 7741 | Advertising: 4455 7837 / 4455 7780. QE keen to take part in region’s activities: CEO (Business | 22). Chelsea win Europa League (Sport | 32). Sheikh Tamim meets UN official. Rate of inflation 3.7pc in a year. DOHA: The rate of inflation in Qatar was 3.7 percent in a year until last April, according to the latest Consumer Price Index released by Qatar Statistics Authority yesterday. The CPI for April 2013 reached 114, showing an increase of 0.1 percent compared to March this year and an increase of 3.7 percent compared to April 2012. The highest price increase, 8.2pc, was recorded in the category “entertainment, recreation and culture” followed by “rent, fuel and energy” which was 6.2 percent. Furniture, textiles and home appliances saw an increase of 3.1pc. Price increases have been recorded in all the categories QR360bn fund soon for health and education. Biggest fund for any sector in Qatar. DOHA: Qatar has immediate plans to set up a QR360bn ($98.9bn) fund for sustainable financing of health and education projects and make these sectors and services world class. This is the biggest corpus announced for any targeted sec- Al Thani. “It’s the Emir’s initiative,” Qatar News Agency said yesterday. The corpus is to be set up vide a law whose draft was approved by the State Cabinet at its weekly meeting yesterday. The draft is to be referred to the Advisory Council, QNA said.

Petroleum – Cooperation for a Sustainable Future

Petroleum – cooperation for a sustainable future. 20–21 June 2018, Hofburg Palace, Vienna, Austria. www.opec.org Commentary: Dialogue is alive and well. In an increasingly globalized, complex and interdependent energy industry, dialogue has become essential for any stakeholder to accomplish its goals. In times such as these, no one can go the distance alone. OPEC has been at the forefront of international energy dialogue since the early 1990s, when it joined forces with the International Energy Agency to begin a platform for producer-consumer dialogue through the establishment of the International Energy Forum (IEF). Since its founding in July of 1991 in Paris, the IEF has evolved to become the world’s preeminent venue for dialogue between global oil and gas producing and consuming countries. Today, its 72 Member Countries, representing all six con- ity in the market and risks for future investment,” he stated. “While some ups and downs are endemic to the oil industry, we can certainly lessen their impact by sharing information and moving towards a common goal.” A week later, the Secretary General was in New Delhi to take part in the first-ever India Energy Forum. Again here, he underlined the prominent role of dialogue between OPEC and one of the world’s largest consumers. “This premier energy forum marks a new stage in the growing strategic relationship between OPEC and India, and builds on previous meetings and interactions that we have had so far,” he said. “My friend, Honourable Minister Pradhan, reminded me on Sunday, during our OPEC-India bilateral meeting, that within less than a year, we have already met five times.

Page 01 Nov 17.Indd

ISO 9001:2008 CERTIFIED NEWSPAPER. Tuesday 18 November 2014 • 25 Muharram 1436 • Volume 19 Number 6253. www.thepeninsulaqatar.com [email protected] | [email protected] Editorial: 4455 7741 | Advertising: 4455 7837 / 4455 7780. 2019 IAAF: D-Day for Doha today (Sport | 26). PM meets Palestinian prime minister. Business and visit visas via Metrash2. DOHA: Companies can now apply for business visas and truck drivers’ visas online through Metrash2 and the Ministry of Interior website, the Ministry said yesterday. Metrash2 is a smartphone application provided by the Ministry. Tourist visas will also be available through these channels very soon, said a ministry statement. The General Directorate of Nationality, Borders and Expatriate Affairs at the Ministry has launched the service to stop dealing with paper-based transactions. The Director General of General Directorate of Nationality, Borders and Expatriate Affairs Brigadier Abdullah Salim Al Ali said that the new service will be available on condition that the Rising house rents push up inflation to 3pc. Rentals climb 8.2pc in October: CPI. DOHA: House rents continue to jump undeterred due to galloping demand for residential units driven by the exploding population. Latest consumer price index (CPI) details show that house rents climbed 8.2 percent year-on-year in October, pushing the overall rate of inflation to three incidentally, have together the largest share of 32.2 percent in the CPI basket. In simple words, a household is expected to spend 32.2 percent of its monthly income on house rent and fuel and electricity on average. The second largest head of expenses of a family is transport and communications.

US Rights Catalog Beijing and Frankfurt Book Fair, 2014

ROUTLEDGE US Rights Catalog, Beijing and Frankfurt Book Fair, 2014. www.taylorandfrancis.com Welcome to Taylor & Francis’ US Books Rights Catalogue! Dear Publisher, Thank you for taking an interest in this year’s most important titles. Our Rights Catalogue is here for you to review. Our dedicated Subsidiary Rights Manager has a wealth of experience in licensing translation rights worldwide and in-depth knowledge of our books and international publishing markets. To request further information or an evaluation copy of any of the publications in this catalogue please contact: Christina M. Taranto | [email protected] | Tel: 561 361 2539. Our history: Taylor & Francis has been established in academic publishing since Richard Taylor founded the company in the City of London in 1798. Our subsequent growth has seen us acquire many highly respected publishers and Taylor & Francis Group now publishes more than 1,700 journals and over 3,600 new books each year, with a books backlist in excess of 50,000 specialist titles. THE EASY WAY TO ORDER: Ordering online is fast and efficient, simply follow the on-screen instructions. Alternatively, you can call, fax, or see ordering information at the back of this catalog. UK and Rest of World: Call: +44 (0)1235 400524, Fax: +44 (0)20 7107 6699. US, Canada and Latin America: Call: 1-800-634-7064, Fax: 1-800-248-4724. Contacts: EBOOK AND ONLINE SALES. UK and Rest of World: Email: [email protected], Call: +44 (0)20 3377 3804. US, Canada and Latin America: Email: [email protected], Call: Toll free: 1-888-318-2367, Call: Overseas: 1-561-998-2505. JOURNALS

David A. Mcdonald 800 E. Third St. Bloomington, in 47405 (812) 855-0396 [email protected]

David A. McDonald, Curriculum Vitae. 800 E. Third St., Bloomington, IN 47405, (812) 855-0396, [email protected]. Education: 2006 Ph.D., Ethnomusicology, School of Music, University of Illinois at Urbana-Champaign, Urbana, Illinois. 2001 M.M., Ethnomusicology, School of Music, University of Illinois at Urbana-Champaign, Urbana, Illinois. 1998 B.M., Music Performance, School of Music, Theatre, and Dance, Colorado State University, Ft. Collins, Colorado. Minor in History, with concentration in the Arab Middle East. Professional Experience: 2017-21 Chair, Department of Folklore and Ethnomusicology, Indiana University. 2017– Series Editor, Activist Encounters in Folklore and Ethnomusicology, Indiana University Press. 2017– Associate Editor for Performing Arts, Review of Middle East Studies. 2016– Associate Editor for Ethnomusicology, Journal of Folklore Research. 2014-15, 2016-20 Director, Ethnomusicology Institute, Indiana University. 2008-present Associate Professor of Ethnomusicology, Department of Folklore and Ethnomusicology, Indiana University, Bloomington, Indiana; Adjunct Associate Professor, Dept. of Anthropology; Adjunct Associate Professor, Institute for European Studies (Irish); Adjunct Associate Professor, Dept. Near Eastern Languages and Cultures; Adjunct Associate Professor, Dept. Gender Studies; Adjunct Associate Professor, Center for the Study of the Middle East. 2007-08 Visiting Assistant Professor of Ethnomusicology, College of Musical Arts, Bowling Green State University, Bowling Green, Ohio. 2006-07 Visiting Assistant Professor of Anthropology, Department of Anthropology, University of Illinois Urbana-Champaign. 2006-07 Program Coordinator, Ethnography of the University Initiative (EUI), Department of Anthropology, University of Illinois Urbana-Champaign. 2003-04 Visiting Professor, National Music Conservatory of Jordan, Queen Noor Al-Hussein Foundation, Amman, Jordan. Publications: Refereed / Peer-reviewed Books: 2013 My Voice is My Weapon: Music, Nationalism, and the Poetics of Palestinian Resistance.