Lecture 17: Color

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Impact of Properties of Thermochromic Pigments on Knitted Fabrics

International Journal of Scientific & Engineering Research, Volume 7, Issue 4, April-2016, p. 1693, ISSN 2229-5518. Impact of Properties of Thermochromic Pigments on Knitted Fabrics. Dr. Jassim M. Abdulkarim, Alaa K. Khsara, Hanin N. Al-Kalany, Reham A. Alresly. Abstract: Thermal dye is one of the important indicators when temperature is changed. It is used in medical, domestic and electronic applications. It indicates the change in chemical and thermal properties. In this work it is used to indicate the change in human body temperature, where the change in temperature between 30 and 41 °C is studied. The change in color begins to be clear at 30 °C. From this study it is clear that heat flux increased (81%) between printed and non-printed clothes, which is due to the increase in heat transfer between body and the printed cloth. The temperature has been increased to the maximum level that the human body can reach and a gradual change in color is observed, which allows the use of this dye on baby clothes to indicate the change in the baby's body temperature and to monitor his medical situation. An additional experiment has been made to explore the change in physical properties of the cloth used after the printing process, such as air permeability, which shows a clear reduction on the printed region compared with the unprinted region; the reduction can reach 70%, and in some types of printing it can reach 100%, which gives a non-permeable surface. Dye fitness can also be increased by using binders and thickeners, and the reduction in dyes on the surface of the cloth reached 15% after 100 washing cycles.

Optical Illusions “What You See Is Not What You Get”

Optical Illusions “What you see is not what you get” The purpose of this lesson is to introduce students to basic principles of visual processing. Much of the lesson revolves around the use of visual illusions and interactive demonstrations with the students. The overarching theme of this lesson is that perception and sensation are not necessarily the same, and that optical illusions are a way for us to study the way that our visual system works. Furthermore, there are cells in the visual system that specifically respond to particular aspects of visual stimuli, and these cells can become fatigued. Grade Level: 3-12. Presentation time: 15-30 minutes, depending on which activities are chosen. Lesson plan organization: Each lesson plan is divided into three sections: Introducing the lesson, Conducting the lesson, and Concluding the lesson. Each lesson has specific principles with associated figures, class discussion (D), and learning activities (A). This lesson plan is provided by the Neurobiology and Behavior Community Outreach Team at the University of Washington: http://students.washington.edu/watari/neuroscience/k12/LessonPlans.html Materials: Computer to display some optical illusions (optional). Checkerboard illusion: Provided on page 8 or available online with explanation at http://web.mit.edu/persci/people/adelson/checkershadow_illusion.html Lilac chaser movie: http://www.scientificpsychic.com/graphics/ as an animated gif or http://www.michaelbach.de/ot/col_lilacChaser/index.html as Adobe Flash and including scientific explanation

Color Gamut of Halftone Reproduction*

Color Gamut of Halftone Reproduction* Stefan Gustavson†‡ Department of Electrical Engineering, Linköping University, S-581 83 Linköping, Sweden. Abstract: Color mixing by a halftoning process, as used for color reproduction in graphic arts and most forms of digital hardcopy, is neither additive nor subtractive. Halftone color reproduction with a given set of primary colors is heavily influenced not only by the colorimetric properties of the full-tone primaries, but also by effects such as optical and physical dot gain and the halftone geometry. We demonstrate that such effects not only distort the transfer characteristics of the process, but also have an impact on the size of the color gamut. In particular, a large dot gain, which is commonly regarded as an unwanted distortion, expands the color gamut quite considerably. […]tern then gets attenuated once more by the pattern of ink that resides on the surface, and the finally reflected light is the result of these three effects combined: transmission through the ink film, diffused reflection from the substrate, and transmission through the ink film again. The left-hand side of Fig. 2 shows an exploded view of the ink layer and the substrate, with the diffused reflected pattern shown on the substrate. The final viewed image is a view from the top of these two layers, as shown to the right in Fig. 2. The dots do not really increase in size, but they have a shadow around the edge that makes them appear larger, and the image is darker than what would have been the case without optical dot gain.

Painting Part 3 1

International Correspondence Schools, Scranton, Pa. Painting, Part 3. By Durward E. Nicholson, Technical Writer, International Correspondence Schools, and David T. Jones, B.Arch., Director, School of Architecture and the Building Trades, International Correspondence Schools; Member, American Institute of Architects; Member, Construction Specifications Institute. Serial 6227C, Part 3, Edition 1. © 1981 by International Textbook Company. Printed in the United States of America. All rights reserved. International Correspondence Schools, Scranton, Pennsylvania; International Correspondence Schools Canadian, Ltd., Montreal, Canada. “I find in life that most affairs that require serious handling are distasteful. For this reason, I have always believed that the successful man has the hardest battle with himself rather than with the other fellow. To bring one’s self to a frame of mind and to the proper energy to accomplish things that require plain hard work continuously is the one big battle that everyone has. When this battle is won for all time, then everything is easy.” (Thomas A. Buckner) What This Text Covers: 1. PlGX OLORS, Pages 1 to 14. The color of paint depends on the colors of the pigments that are mixed with the vehicle.

Color Mixing Ratios

Colour Mixing: Ratios. Color Theory with Tracy Moreau. Learn more at DecoArt’s Art For Everyone Learning Center, www.tracymoreau.net Primary Colours: In painting, the three primary colours are yellow, red, and blue. These colours cannot be created by mixing other colours. They are called primary because all other colours are derived from them. Mixing Primary Colours Creates Secondary Colours: If you combine two primary colours you get a secondary colour. For example, red and blue make violet, yellow and red make orange, and blue and yellow make green. If you mix all of the primary colours together you get black. The Mixing Ratio for Primary Colours: To get orange, you mix the primary colours red and yellow. The mixing ratio of these two colours determines which shade of orange you will get after mixing. For example, if you use more red than yellow you will get a reddish-orange. If you add more yellow than red you will get a yellowish-orange. Experiment with the shades you have to see what you can create. Try out different combinations and mixing ratios and keep a written record of your results so that you can mix the colours again for future paintings. Tertiary Colours: By mixing a primary and a secondary colour or two secondary colours you get a tertiary colour. Tertiary colours such as blue-lilac, yellow-green, green-blue, orange-yellow, red-orange, and violet-red are all created by combining a primary and a secondary colour. The Mixing Ratios of Light and Dark Colours: If you want to darken a colour, you only need to add a small amount of black or another dark colour.

Chapter 2 Fundamentals of Digital Imaging

Chapter 2 Fundamentals of Digital Imaging Part 4 Color Representation © 2016 Pearson Education, Inc., Hoboken, NJ. All rights reserved. In this lecture, you will find answers to these questions • What is RGB color model and how does it represent colors? • What is CMY color model and how does it represent colors? • What is HSB color model and how does it represent colors? • What is color gamut? What does out-of-gamut mean? • Why can't the colors on a printout match exactly what you see on screen? Color Models • Used to describe colors numerically, usually in terms of varying amounts of primary colors. • Common color models: – RGB – CMYK – HSB – CIE and their variants. RGB Color Model • Primary colors: – red – green – blue • Additive Color System. Additive Color System of RGB • Full intensities of red + green + blue = white • Full intensities of red + green = yellow • Full intensities of green + blue = cyan • Full intensities of red + blue = magenta • Zero intensities of red, green, and blue = black • Same intensities of red, green, and blue = some kind of gray. Color Display: From a standard CRT monitor screen. Color Display: From a SONY Trinitron monitor screen.
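
The additive rules listed in this excerpt can be checked with a few lines of Python. This is only an illustrative sketch: the 0-255 channel range and the helper names `add_rgb` and `is_gray` are assumptions made here, not something taken from the slides.

```python
# Illustrative sketch of additive RGB mixing (8-bit channels assumed).

def add_rgb(*colors):
    """Add RGB triples channel by channel, clamping each channel at 255."""
    return tuple(min(255, sum(c[i] for c in colors)) for i in range(3))

def is_gray(color):
    """Equal intensities of red, green and blue give some kind of gray."""
    r, g, b = color
    return r == g == b

RED, GREEN, BLUE = (255, 0, 0), (0, 255, 0), (0, 0, 255)

yellow = add_rgb(RED, GREEN)        # full red + full green
cyan = add_rgb(GREEN, BLUE)         # full green + full blue
magenta = add_rgb(RED, BLUE)        # full red + full blue
white = add_rgb(RED, GREEN, BLUE)   # all three at full intensity
black = add_rgb()                   # no light at all
```

Black and white both satisfy `is_gray`, which matches the slide's "some kind of gray" wording for equal intensities.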

Mean Green Interpreting the Emotion of Color (Art + Language)

Mean Green: Interpreting the Emotion of Color (art + language). Is there such a thing as an all-black painting, all-green or all-red painting? Yes, there is! American artists around the 1950s turned from abstract expressionism to a movement labeling them colorists. Colorists painted with a monochromatic color palette using variations of one color. Their process was made easier with the introduction of acrylics and acrylic mediums in 1953. Painters like Barnett Newman, Morris Louis, Frank Stella and Ad Reinhardt created paintings in all black. They believed art was art and should be created without rules. This break in approach to art was not popular with art critics. The critics found that a closer look at the monochromatic paintings exhibited details and required more thought. Artists added texture and surface variations to enhance the monochromatic paintings. American colorists changed the artist's image from that of realistic human behavior to the use of color for feelings. They worked to make color and color changes the total emphasis of their art. This lesson is great fun, combining one color and fun words to describe emotions and meanings to that color. These titles could be wonderful white, riot red or cool blue. Students' paintings turn from monochromatic paintings to 3D collage paintings when textures and found objects are added. Materials: Blick Canvas Panels 11" x 14" (07008-1114), need one per student; Blickrylic Student Acrylics, need one basic set of six pints (00711-1049) and one pint each Fluorescent Green (00711-7266) and Magenta (00711-3046), share across classroom; Round 10-Well Trays (03041-1010), share one tray between two students; Dynasty® Fine Ruby Synthetic Brushes, canister set of 72 assorted (05198-0729), share across

Color Mixing Challenge

COLOR MIXING CHALLENGE Target age group: any age. Purpose of activity: to experiment with paint and discover color combinations that will make many different shades of the basic colors. Materials needed: copies of the pattern page printed onto heavy card stock paper, small paint brushes, paper towels, paper plates to use as palettes (or half-sheets of card stock), a bowl of water to rinse brushes, acrylic paints in these colors: red, blue, yellow, and white (NOTE: Try to purchase the most “true” colors you can: a royal blue, a true red, a medium yellow.) Time needed to complete activity: about 30 minutes (not including set-up and clean-up time). How to prepare: Copy (or print out) a pattern page for each student. Give each student a paper plate containing a marble-sized blob of red, blue, yellow and white. (Have a few spare plates available in case they run out of mixing space on their first plate.) Also provide a paper towel and a bowl of rinse water. If a student runs out of a particular color of paint, give them a dab more. This will avoid wasting a lot of paint. (If you let the students fill their own paints, they will undoubtedly waste a lot of paint. In my experience, students almost always over-estimate how much paint they need.) What to do: It’s up to you (the adult in charge) how much instruction to give ahead of time. You may want to discuss color theory quite a bit, or you may want to emphasize the experimental nature of this activity and let the students discover color combinations for themselves.

Josiah Willard Gibbs

GENERAL ARTICLE Josiah Willard Gibbs, by V Kumaran. V Kumaran is a professor of chemical engineering at the Indian Institute of Science, Bangalore. His research interests include statistical mechanics and fluid mechanics. The foundations of classical thermodynamics, as taught in textbooks today, were laid down in nearly complete form by Josiah Willard Gibbs more than a century ago. This article presents a portrait of Gibbs, a quiet and modest man who was responsible for some of the most important advances in the history of science. Thermodynamics, the science of the interconversion of heat and work, originated from the necessity of designing efficient engines in the late 18th and early 19th centuries. Engines are machines that convert heat energy obtained by combustion of coal, wood or other types of fuel into useful work for running trains, ships, etc. The efficiency of an engine is determined by the amount of useful work obtained for a given amount of heat input. There are two laws related to the efficiency of an engine. The first law of thermodynamics states that heat and work are inter-convertible, and it is not possible to obtain more work than the amount of heat input into the machine. The formulation of this law can be traced back to the work of Leibniz, Dalton, Joule, Clausius, and a host of other scientists in the late 17th and early 18th century. The more subtle second law of thermodynamics states that it is not possible to convert all heat into work; all engines have to ‘waste’ some of the heat input by transferring it to a heat sink. The second law also established the minimum amount of heat that has to be wasted based on the absolute temperatures of the heat source and the heat sink.

Calculation of CCT and Duv and Practical Conversion Formulae

CORM 2011 Conference, Gaithersburg, MD, May 3-5, 2011. Calculation of CCT and Duv and Practical Conversion Formulae. Yoshi Ohno, Group Leader, NIST Fellow, Optical Technology Division, National Institute of Standards and Technology, Gaithersburg, Maryland USA. White Light Chromaticity: Duv, CCT. Duv often missing (Lighting Facts Label). CCT and CRI do not tell the whole story of color quality. Figure labels: Triphosphor FL simulation; Not acceptable; Not preferred; Neodymium (Duv=-0.005). Duv is another important dimension of chromaticity. Duv defined in ANSI standard: Closest distance from the Planckian locus on the (u', 2/3 v') diagram, with + sign for above and - sign for below the Planckian locus (ANSI C78.377-2008). Symbol: Duv. CCT and Duv can specify the chromaticity of light sources just like (x, y). The two numbers (CCT, Duv) provide color information intuitively; (x, y) does not. Duv needs to be defined by CIE. ANSI C78.377-2008: Specifications for the chromaticity of SSL products. CCT-Duv chart (CCT in log scale): ANSI C78.377 quadrangles and 7-step MacAdam ellipses at 2700 K, 3000 K, 3500 K, 4000 K, 4500 K, 5000 K, 5700 K and 6500 K. Correlated Color Temperature (CCT): Temperature [K] of a Planckian radiator whose chromaticity is closest to that of a given stimulus on the CIE (u', 2/3 v') coordinate (CIE 15:2004). CCT is based on the CIE 1960 (u, v) diagram, which is now obsolete.
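
Both definitions in this excerpt work in the (u', 2/3 v') plane, which is the same as the CIE 1960 (u, v) diagram. As a rough sketch, the Python below converts an (x, y) chromaticity to (u, v) with the standard CIE 1960 formulas and measures the distance between two points in that plane. The function names are hypothetical, and the sketch does not compute the Planckian locus: Duv would be this distance taken to the closest Planckian point, which is assumed to come from elsewhere.

```python
import math

def xy_to_uv1960(x, y):
    """Convert CIE 1931 (x, y) to CIE 1960 (u, v), i.e. (u', 2/3 v')."""
    d = -2.0 * x + 12.0 * y + 3.0
    return 4.0 * x / d, 6.0 * y / d

def uv_distance(p, q):
    """Euclidean distance between two (u, v) chromaticity points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])

# Example input: the commonly quoted D65 white point, x = 0.3127, y = 0.3290.
u_d65, v_d65 = xy_to_uv1960(0.3127, 0.3290)
```

Per the ANSI definition quoted above, the sign of Duv would then be chosen positive for points above the Planckian locus and negative for points below it.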

Massimo Olivucci Director of the Laboratory for Computational Photochemistry and Photobiology

Massimo Olivucci, Director of the Laboratory for Computational Photochemistry and Photobiology. September 12, 2012. Center for Photochemical Sciences & Department of Chemistry, Bowling Green State University, Bowling Green, OH. The Last Frontier of Sensitivity: The research group working at the Laboratory for Computational Photochemistry and Photobiology (LCPP) at the Center for Photochemical Sciences, Bowling Green State University (Ohio) has been investigating the so-called Purkinje effect: the blue-shift in the perceived color under the decreasing levels of illumination that are experienced at dusk. Their results have appeared in the September 7 issue of Science Magazine. By constructing sophisticated computer models of rod rhodopsin, the dim-light visual “sensor” of vertebrates, the group has provided a first-principle explanation for this effect in atomic detail. The effect can now be understood as a result of quantum mechanical effects that may some day be used to design the ultimate sub-nanoscale light detector. The retina of vertebrate eyes, including humans, is the most powerful light detector that we know. In the human eye, light coming through the lens is projected onto the retina where it forms an image on a mosaic of photoreceptor cells that transmits information on the surrounding environment to the brain visual cortex, both during daytime and nighttime. Night (dim-light) vision represents the last frontier of light detection. In extremely poor illumination conditions, such as those of a star-studded night or of ocean depths, the retina is able to perceive intensities corresponding to only a few photons, the indivisible units of light. Such high sensitivity is due to specialized sensors called rod rhodopsins that appeared more than 250 million years ago on the retinas of vertebrate animals.

OSHER Color 2021

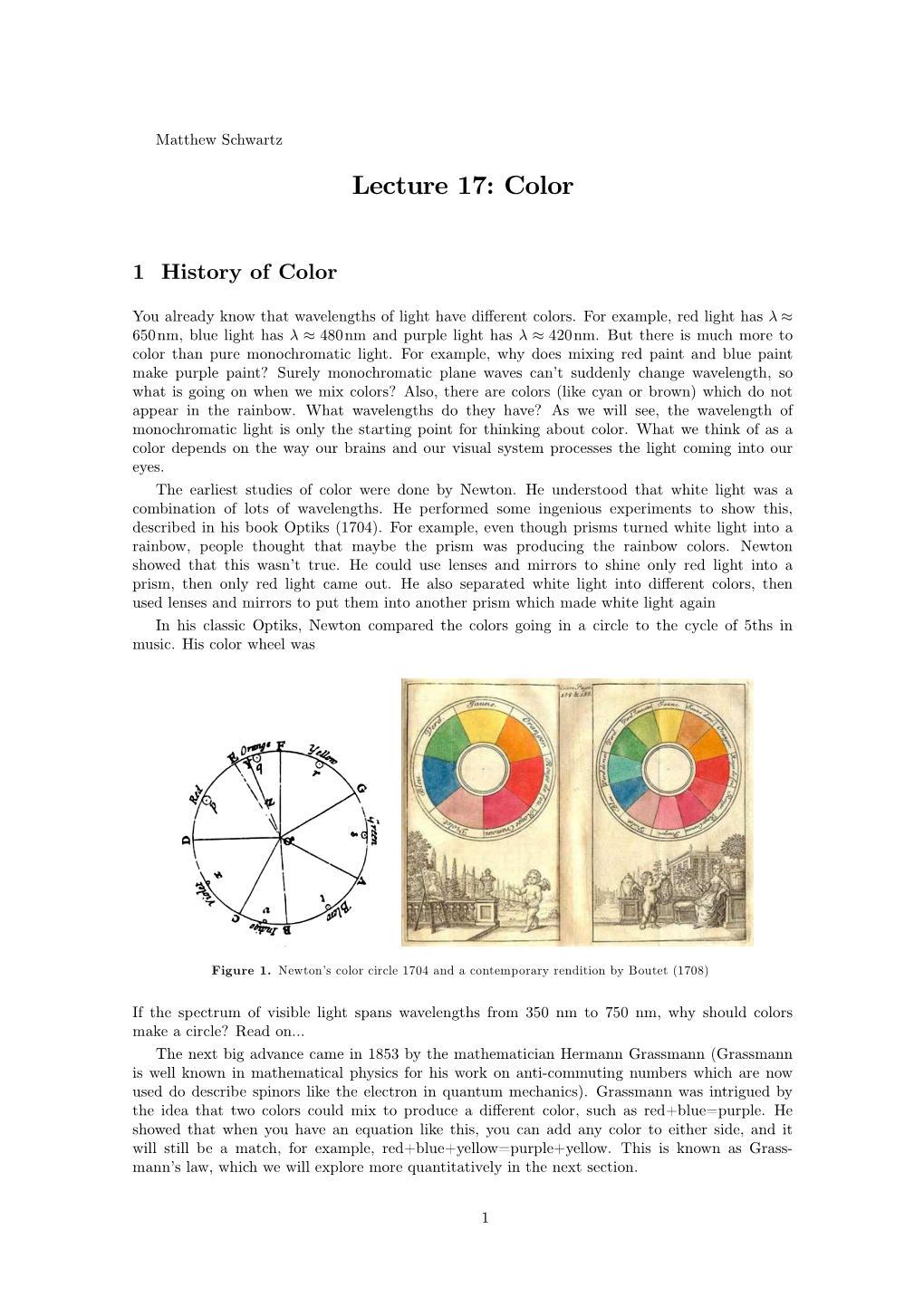

OSHER Color 2021, Presentation 1: Mysteries of Color. Color Foundation. Q: Why is color? A: Color is a perception that arises from the responses of our visual systems to light in the environment. We probably have evolved with color vision to help us in finding good food and healthy mates. One of the fundamental truths about color that's important to understand is that color is something we humans impose on the world. The world isn't colored; we just see it that way. A reasonable working definition of color is that it's our human response to different wavelengths of light. The color isn't really in the light: we create the color as a response to that light. Remember: The different wavelengths of light aren't really colored; they're simply waves of electromagnetic energy with a known length and a known amount of energy. It's our perceptual system that gives them the attribute of color. Our eyes contain two types of sensors, rods and cones, that are sensitive to light. The rods are essentially monochromatic; they contribute to peripheral vision and allow us to see in relatively dark conditions, but they don't contribute to color vision. (You've probably noticed that on a dark night, even though you can see shapes and movement, you see very little color.) The sensation of color comes from the second set of photoreceptors in our eyes, the cones. We have 3 different types of cones, which are sensitive to light of long wavelength, medium wavelength, and short wavelength.