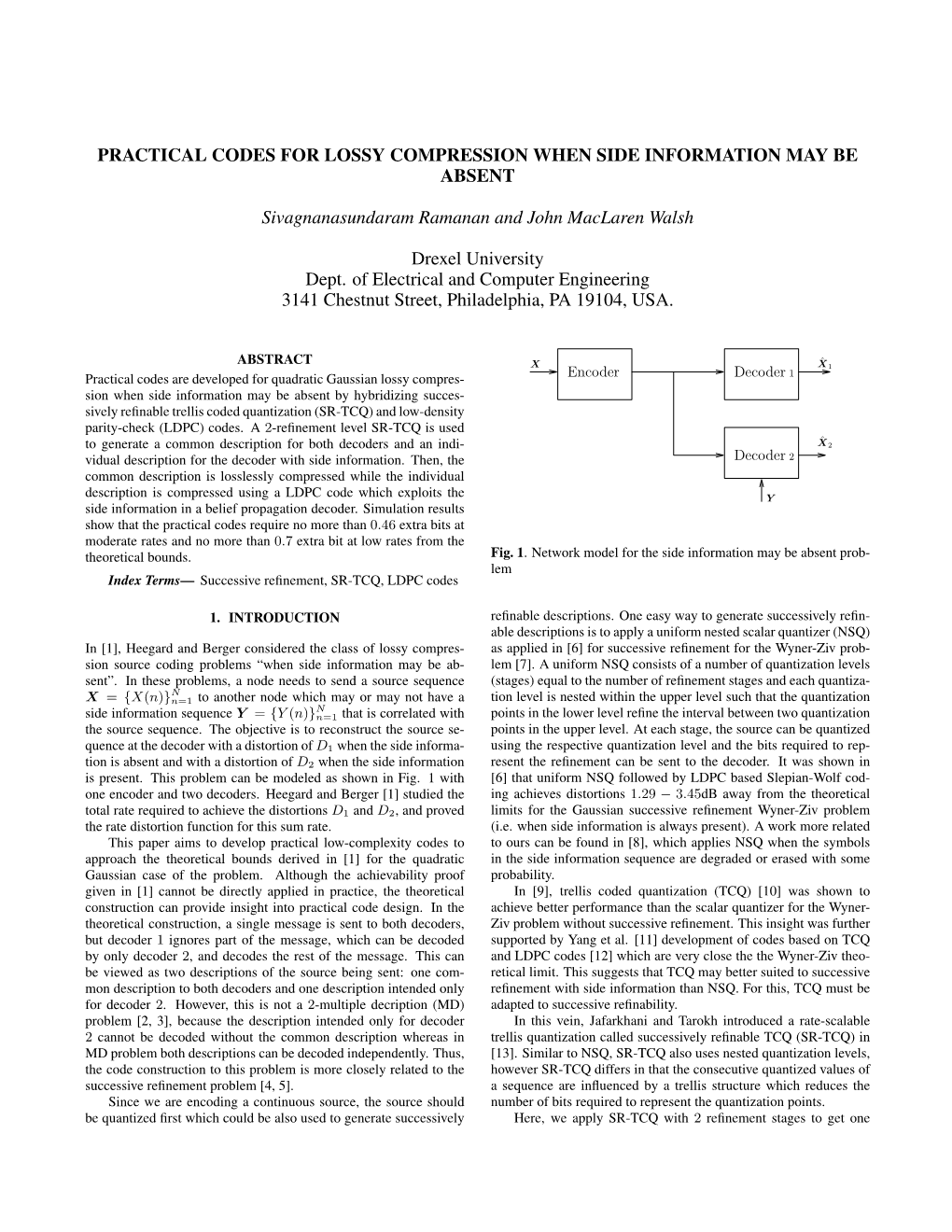

Practical Codes for Lossy Compression When Side Information May Be Absent

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Principles of Communications ECS 332

Principles of Communications ECS 332 Asst. Prof. Dr. Prapun Suksompong (ผศ.ดร.ประพันธ ์ สขสมปองุ ) [email protected] 1. Intro to Communication Systems Office Hours: Check Google Calendar on the course website. Dr.Prapun’s Office: 6th floor of Sirindhralai building, 1 BKD 2 Remark 1 If the downloaded file crashed your device/browser, try another one posted on the course website: 3 Remark 2 There is also three more sections from the Appendices of the lecture notes: 4 Shannon's insight 5 “The fundamental problem of communication is that of reproducing at one point either exactly or approximately a message selected at another point.” Shannon, Claude. A Mathematical Theory Of Communication. (1948) 6 Shannon: Father of the Info. Age Documentary Co-produced by the Jacobs School, UCSD- TV, and the California Institute for Telecommunic ations and Information Technology 7 [http://www.uctv.tv/shows/Claude-Shannon-Father-of-the-Information-Age-6090] [http://www.youtube.com/watch?v=z2Whj_nL-x8] C. E. Shannon (1916-2001) Hello. I'm Claude Shannon a mathematician here at the Bell Telephone laboratories He didn't create the compact disc, the fax machine, digital wireless telephones Or mp3 files, but in 1948 Claude Shannon paved the way for all of them with the Basic theory underlying digital communications and storage he called it 8 information theory. C. E. Shannon (1916-2001) 9 https://www.youtube.com/watch?v=47ag2sXRDeU C. E. Shannon (1916-2001) One of the most influential minds of the 20th century yet when he died on February 24, 2001, Shannon was virtually unknown to the public at large 10 C. -

SHANNON SYMPOSIUM and STATUE DEDICATION at UCSD

SHANNON SYMPOSIUM and STATUE DEDICATION at UCSD At 2 PM on October 16, 2001, a statue of Claude Elwood Shannon, the Father of Information Theory who died earlier this year, will be dedicated in the lobby of the Center for Magnetic Recording Research (CMRR) at the University of California-San Diego. The bronze plaque at the base of this statue will read: CLAUDE ELWOOD SHANNON 1916-2001 Father of Information Theory His formulation of the mathematical theory of communication provided the foundation for the development of data storage and transmission systems that launched the information age. Dedicated October 16, 2001 Eugene Daub, Sculptor There is no fee for attending the dedication but if you plan to attend, please fill out that portion of the attached registration form. In conjunction with and prior to this dedication, 15 world-renowned experts on information theory will give technical presentations at a Shannon Symposium to be held in the auditorium of CMRR on October 15th and the morning of October 16th. The program for this Symposium is as follows: Monday Oct. 15th Monday Oct. 15th Tuesday Oct. 16th 9 AM to 12 PM 2 PM to 5 PM 9 AM to 12 PM Toby Berger G. David Forney Jr. Solomon Golomb Paul Siegel Edward vanderMeulen Elwyn Berlekamp Jacob Ziv Robert Lucky Shu Lin David Neuhoff Ian Blake Neal Sloane Thomas Cover Andrew Viterbi Robert McEliece If you are interested in attending the Shannon Symposium please fill out the corresponding portion of the attached registration form and mail it in as early as possible since seating is very limited. -

Admissibility Properties of Gilbert's Encoding for Unknown Source

216 IEEE TRANSACTIONS ONINFORMATION THEORY,JANLJARY 1912 Admissibility Properties of Gilbert’s Encoding for Unknown We now wish to show that U*(b) minimizes Source Probabilities E{U@,P) I x1,x2,. .,X”h (7) THOMAS M. COVER the expected average code length conditioned on the data, if in fact p is drawn according to a Dirichlet prior distribution with parameters ,&), defined by the density function Abstract-Huffman coding provides optimal source encoding for (&A,. events of specified source probabilities. Gilbert has proposed a scheme &dPl,PZ,. ,PN) = C&J,,. .,a,)p;1-‘p;i-‘. .p;N-l, for source encoding when these source probabilities are imperfectly known. This correspondence shows that Gilbert’s scheme is a Bayes pi 1 0, zpp1 = 1, I* r 1, (8) scheme against a certain natural prior distribution on the source where C(1i,. J,) is a suitable normalization constant. This follows probabilities. Consequently, since this prior distribution has nonzero from application of Bayes’ rule, from which it follows that the posterior mass everywhere, Gilbert’s scheme is admissible in the sense that no distribution of p given X is again a Dirichlet distribution, this time source encoding has lower average code length for every choice of source with parameters Izl + nl, given by probabilities. APP,,P2,. ‘,PN I x1,x2,. .9X”) I. INTRODUCTION = C(Il + n1, a2 + Q,‘. ., a&l + nN)p;‘+“‘-‘p:2+“2-1.. ., CODINGFOR KNOWN~ ++Q- 1 Consider the random variable X, where Pr {X = xi} = pi, i = PN 3 p*ro,zppi=1. (9) 1,2,. ,N,pi 2 0, Xpi = 1. -

Andrew J. and Erna Viterbi Family Archives, 1905-20070335

http://oac.cdlib.org/findaid/ark:/13030/kt7199r7h1 Online items available Finding Aid for the Andrew J. and Erna Viterbi Family Archives, 1905-20070335 A Guide to the Collection Finding aid prepared by Michael Hooks, Viterbi Family Archivist The Andrew and Erna Viterbi School of Engineering, University of Southern California (USC) First Edition USC Libraries Special Collections Doheny Memorial Library 206 3550 Trousdale Parkway Los Angeles, California, 90089-0189 213-740-5900 [email protected] 2008 University Archives of the University of Southern California Finding Aid for the Andrew J. and Erna 0335 1 Viterbi Family Archives, 1905-20070335 Title: Andrew J. and Erna Viterbi Family Archives Date (inclusive): 1905-2007 Collection number: 0335 creator: Viterbi, Erna Finci creator: Viterbi, Andrew J. Physical Description: 20.0 Linear feet47 document cases, 1 small box, 1 oversize box35000 digital objects Location: University Archives row A Contributing Institution: USC Libraries Special Collections Doheny Memorial Library 206 3550 Trousdale Parkway Los Angeles, California, 90089-0189 Language of Material: English Language of Material: The bulk of the materials are written in English, however other languages are represented as well. These additional languages include Chinese, French, German, Hebrew, Italian, and Japanese. Conditions Governing Access note There are materials within the archives that are marked confidential or proprietary, or that contain information that is obviously confidential. Examples of the latter include letters of references and recommendations for employment, promotions, and awards; nominations for awards and honors; resumes of colleagues of Dr. Viterbi; and grade reports of students in Dr. Viterbi's classes at the University of California, Los Angeles, and the University of California, San Diego. -

Digital Communication Systems ECS 452

Digital Communication Systems ECS 452 Asst. Prof. Dr. Prapun Suksompong (ผศ.ดร.ประพันธ ์ สขสมปองุ ) [email protected] 1. Intro to Digital Communication Systems Office Hours: BKD, 6th floor of Sirindhralai building Monday 10:00-10:40 Tuesday 12:00-12:40 1 Thursday 14:20-15:30 “The fundamental problem of communication is that of reproducing at one point either exactly or approximately a message selected at another point.” Shannon, Claude. A Mathematical Theory Of Communication. (1948) 2 Shannon: Father of the Info. Age Documentary Co-produced by the Jacobs School, UCSD-TV, and the California Institute for Telecommunications and Information Technology Won a Gold award in the Biography category in the 2002 Aurora Awards. 3 [http://www.uctv.tv/shows/Claude-Shannon-Father-of-the-Information-Age-6090] [http://www.youtube.com/watch?v=z2Whj_nL-x8] C. E. Shannon (1916-2001) 1938 MIT master's thesis: A Symbolic Analysis of Relay and Switching Circuits Insight: The binary nature of Boolean logic was analogous to the ones and zeros used by digital circuits. The thesis became the foundation of practical digital circuit design. The first known use of the term bit to refer to a “binary digit.” Possibly the most important, and also the most famous, master’s thesis of the century. It was simple, elegant, and important. 4 C. E. Shannon: Master Thesis 5 Boole/Shannon Celebration Events in 2015 and 2016 centered around the work of George Boole, who was born 200 years ago, and Claude E. Shannon, born 100 years ago. Events were scheduled both at the University College Cork (UCC), Ireland and the Massachusetts Institute of Technology (MIT) 6 http://www.rle.mit.edu/booleshannon/ An Interesting Book The Logician and the Engineer: How George Boole and Claude Shannon Created the Information Age by Paul J. -

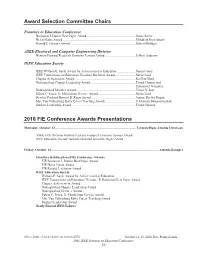

Awards Program

Award Selection Committee Chairs Frontiers in Education Conference Benjamin J. Dasher Best Paper Award ........................................................... Diane Rover Helen Plants Award ........................................................................................ Elizabeth Eschenbach Ronald J. Schmitz Award ............................................................................... Robert Hofinger ASEE Electrical and Computer Engineering Division Hewlett-Packard Frederick Emmons Terman Award ..................................... Jeffrey Andrews IEEE Education Society IEEE William E. Sayle Award for Achievement in Education ....................... Susan Conry IEEE Transactions on Education Theodore Bachman Award ........................ Susan Lord Chapter Achievement Award ......................................................................... Kai Pan Mark Distinguished Chapter Leadership Award ...................................................... Trond Clausen and Emmanuel Gonzalez Distinguished Member Award ........................................................................ Victor Nelson Edwin C. Jones, Jr. Meritorious Service Award .............................................. Susan Lord Hewlett-Packard/Harriett B. Rigas Award ..................................................... Joanne Bechta Dugan Mac Van Valkenburg Early Career Teaching Award... ................................... S. Hossein Mousavinezhad Student Leadership Award ............................................................................. -

Uwaterloo Latex Thesis Template

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by University of Waterloo's Institutional Repository On Causal Video Coding with Possible Loss of the First Encoded Frame by Mahshad Eslamifar A thesis presented to the University of Waterloo in fulfillment of the thesis requirement for the degree of Master of Applied Science in Electrical and Computer Engineering Waterloo, Ontario, Canada, 2013 c Mahshad Eslamifar 2013 I hereby declare that I am the sole author of this thesis. This is a true copy of the thesis, including any required final revisions, as accepted by my examiners. I understand that my thesis may be made electronically available to the public. ii Abstract Multiple Description Coding (MDC) was first formulated by A. Gersho and H. Witsen- hausen as a way to improve the robustness of telephony links to outages. Lots of studies have been done in this area up to now. Another application of MDC is the transmission of an image in different descriptions. If because of the link outage during transmission, any one of the descriptions fails, the image could still be reconstructed with some quality at the decoder side. In video coding, inter prediction is a way to reduce temporal redundancy. From an information theoretical point of view, one can model inter prediction with Causal Video Coding (CVC). If because of link outage, we lose any I-frame, how can we recon- struct the corresponding P- or B-frames at the decoder? In this thesis, we are interested in answering this question and we call this scenario as causal video coding with possible loss of the first encoded frame and we denote it by CVC-PL as PL stands for possible loss. -

Human Space Flight

HUMAN SPACE FLIGHT APOLLO 50 YEARS ON 2019 ANNUAL MEETING October 6-7, 2019 Washington, DC NATIONAL ACADEMY OF ENGINEERING 2019 ANNUAL MEETING October 6–7, 2019 Washington, DC CONTENTS Sunday, October 6 Public Program . 2 Chair’s Remarks . 2 President’s Address . 4 Induction Ceremony . 5 Awards Program. 5 Plenary Session . .10 Monday, October 7 Business Session . .12 Public Forum . .12 Reception & Dinner Dance . .17 Section Meetings . .18 General Information Meeting Services . 18 Section Meetings . 18 Registration . 19 Shuttle Bus Service . 19 Guest Tour Bus Service . .19 Guest Program . .20 Section Chairs . 21 NAS Building Map . .22 Area Map . 23 2018 Honor Roll of Donors . 24 Cover photo credit: William Anders/NASA/AP, Dec. 24, 1968 10:00 am–4:00 pm Registration NAS 120 10:30 am–11:45 am Brunch Buffet West Lawn 10:30 am–11:45 am Planned Giving in the Current Tax Environment Members’ Room (advance registration required; brunch included) Led by Alan L. Cates, JD Partner, Husch Blackwell Alan L. Cates focuses his practice on trust and estate issues and related private wealth matters and frequently presents on financial topics such as taxation, estate planning, business succession planning and probate and trust issues across the country. With decades of comprehensive legal experience, he has guided individuals and their businesses in estate planning and business succession planning. Additionally, he has represented individual and institutional executors and trustees in all sorts of litigation matters, both as defense counsel and in initiating action. He has also represented taxpayers in administrative proceedings and in courtrooms, and provided critical services to tax-exempt organizations. -

IEEE Information Theory Society Newsletter

IEEE Information Theory Society Newsletter Vol. 53, No. 1, March 2003 Editor: Lance C. Pérez ISSN 1059-2362 LIVING INFORMATION THEORY The 2002 Shannon Lecture by Toby Berger School of Electrical and Computer Engineering Cornell University, Ithaca, NY 14853 1 Meanings of the Title Information theory has ... perhaps The title, ”Living Information Theory,” is a triple enten- ballooned to an importance beyond dre. First and foremost, it pertains to the information its actual accomplishments. Our fel- theory of living systems. Second, it symbolizes the fact low scientists in many different that our research community has been living informa- fields, attracted by the fanfare and by tion theory for more than five decades, enthralled with the new avenues opened to scientific the beauty of the subject and intrigued by its many areas analysis, are using these ideas in ... of application and potential application. Lastly, it is in- biology, psychology, linguistics, fun- tended to connote that information theory is decidedly damental physics, economics, the theory of the orga- alive, despite sporadic protestations to the contrary. nization, ... Although this wave of popularity is Moreover, there is a thread that ties together all three of certainly pleasant and exciting for those of us work- these meanings for me. That thread is my strong belief ing in the field, it carries at the same time an element that one way in which information theorists, both new of danger. While we feel that information theory is in- and seasoned, can assure that their subject will remain deed a valuable tool in providing fundamental in- vitally alive deep into the future is to embrace enthusias- sights into the nature of communication problems tically its applications to the life sciences. -

2017 Annual Report

2017 Annual Report NATIONAL ACADEMY OF ENGINEERING ENGINEERING THE FUTURE 1 Letter from the President 3 In Service to the Nation 3 Mission Statement 4 NAE Strategic Plan Implementation 6 NAE Annual Meeting 6 2017 NAE Annual Meeting Forum: Autonomy on Land and Sea and in the Air and Space 7 Program Reports 7 Postsecondary Engineering Education Understanding the Engineering Education–Workforce Continuum Engagement of Engineering Societies in Undergraduate Engineering Education The Supply Chain for Middle-Skill Jobs: Education, Training, and Certification Pathways Engineering Technology Education 8 PreK–12 Engineering Education LinkEngineering Educator Capacity Building in PreK–12 Engineering Education 9 Media Relations 10 Grand Challenges for Engineering NAE Grand Challenges Scholars Program Global Grand Challenges Summit 12 Center for Engineering Ethics and Society (CEES) Becoming the Online Resource Center for Ethics in Engineering and Science Workshop on Overcoming Challenges to Infusing Ethics in the Development of Engineers Integrated Network for Social Sustainability 14 Diversity of the Engineering Workforce EngineerGirl Program 15 Frontiers of Engineering Armstrong Endowment for Young Engineers—Gilbreth Lectures 17 Manufacturing, Design, and Innovation Adaptability of the Engineering and Technical Workforce 18 A New Vision for Center-Based Engineering Research 18 Connector Reliability for Offshore Oil and Natural Gas Operations 19 Microbiomes of the Built Environment 20 2017 NAE Awards Recipients 22 2017 New Members and Foreign Members -

Multiterminal Source-Channel Coding: Rate and Outage Analysis

Multiterminal Source-ChannelCoding Rate and Outage Analysis olf W Technische Universität Dresden Multiterminal Source-Channel Coding: Rate and Outage Analysis Albrecht Wolf der Fakultät Elektrotechnik und Informationstechnik der Technischen Universität Dresden zur Erlangung des akademischen Grades Doktoringenieur (Dr.-Ing.) genehmigte Dissertation Vorsitzender: Prof. Dr.-Ing. habil. Frank Ellinger Gutachter: Prof. Dr.-Ing. Dr. h.c. Gerhard Fettweis Prof. Dr. Markku Juntti Tag der Einreichung: 04.02.2019 Tag der Verteidigung: 04.06.2019 Albrecht Wolf Multiterminal Source-Channel Coding: Rate and Outage Analysis Dissertation, 04. Juni 2017 Vodafone Chair Mobile Communications Systems Institut für Nachrichtentechnik Fakultät Elektrotechnik und Informationstechnik Technische Universität Dresden 01062 Dresden, Germany Abstract Cooperative communication is seen as a key concept to achieve ultra-reliable communication in upcoming fifth-generation mobile networks (5G). A promis- ing cooperative communication concept is multiterminal source-channel coding, which attracted recent attention in the research community. This thesis lays theoretical foundations for understanding the performance of multiterminal source-channel codes in a vast variety of cooperative communi- cation networks. To this end, we decouple the multiterminal source-channel code into a multiterminal source code and multiple point-to-point channel codes. This way, we are able to adjust the multiterminal source code to any cooperative communication network without modification of the channel codes. We analyse the performance in terms of the outage probability in two steps: at first, we evaluate the instantaneous performance of the multi- terminal source-channel codes for fixed channel realizations; and secondly, we average the instantaneous performance over the fading process. Based on the performance analysis, we evaluate the performance of multiterminal source-channel codes in three cooperative communication networks, namely relay, wireless sensor, and multi-connectivity networks. -

Ieee Richard W. Hamming Medal Recipients

IEEE RICHARD W. HAMMING MEDAL RECIPIENTS 2020 CYNTHIA DWORK “For foundational work in privacy, cryptography, and Gordon McKay Professor of distributed computing, and for leadership in developing Computer Science, Harvard differential privacy.” University, Cambridge, Massachusetts, USA 2019 DAVID TSE “For seminal contributions to wireless network Professor, Stanford University, information theory and wireless network systems.” Stanford, California, USA 2018 ERDAL ARIKAN “For contributions to information and communications Professor, Department of theory, especially the discovery of polar codes and Electrical Engineering, Bilkent polarization techniques.” University, Ankara, Turkey 2017 SHLOMO SHAMAI “For fundamental contributions to information theory Professor, Technion-Israel and wireless communications.” Institute of Technology, Haifa, Israel 2016 ABBAS EL GAMAL “For contributions to network multi-user information Professor and Department theory and for wide ranging impact on programmable Chair, Department of Electrical circuit architectures.” Engineering, Stanford University, Stanford, California, USA 2015 IMRE CSISZAR “For contributions to information theory, information- Research Professor, A. Rényi theoretic security, and statistics.” Institute of Mathematics, Hungarian Academy of Sciences, Budapest, Hungary 2014 THOMAS RICHARDSON “For fundamental contributions to coding theory, Vice President, Engineering, iterative information processing, and Qualcomm, Bridgewater, applications.” New Jersey, USA AND RÜDIGER URBANKE Professor,