Gnomad Lof Paper Supp Reformat Refs

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

A Computational Approach for Defining a Signature of β-Cell Golgi Stress in Diabetes Mellitus

A Computational Approach for Defining a Signature of β-Cell Golgi Stress in Diabetes Mellitus Robert N. Bone1,6,7, Olufunmilola Oyebamiji2, Sayali Talware2, Sharmila Selvaraj2, Preethi Krishnan3,6, Farooq Syed1,6,7, Huanmei Wu2, Carmella Evans-Molina1,3,4,5,6,7,8* Departments of 1Pediatrics, 3Medicine, 4Anatomy, Cell Biology & Physiology, 5Biochemistry & Molecular Biology, the 6Center for Diabetes & Metabolic Diseases, and the 7Herman B. Wells Center for Pediatric Research, Indiana University School of Medicine, Indianapolis, IN 46202; 2Department of BioHealth Informatics, Indiana University-Purdue University Indianapolis, Indianapolis, IN 46202; 8Roudebush VA Medical Center, Indianapolis, IN 46202. *Corresponding Author(s): Carmella Evans-Molina, MD, PhD ([email protected]) Indiana University School of Medicine, 635 Barnhill Drive, MS 2031A, Indianapolis, IN 46202, Telephone: (317) 274-4145, Fax (317) 274-4107 Running Title: Golgi Stress Response in Diabetes Word Count: 4358 Number of Figures: 6 Keywords: Golgi apparatus stress, Islets, β cell, Type 1 diabetes, Type 2 diabetes Diabetes Publish Ahead of Print, published online August 20, 2020 ABSTRACT The Golgi apparatus (GA) is an important site of insulin processing and granule maturation, but whether GA organelle dysfunction and GA stress are present in the diabetic β-cell has not been tested. We utilized an informatics-based approach to develop a transcriptional signature of β-cell GA stress using existing RNA sequencing and microarray datasets generated using human islets from donors with diabetes and islets where type 1 (T1D) and type 2 diabetes (T2D) had been modeled ex vivo. To narrow our results to GA-specific genes, we applied a filter set of 1,030 genes accepted as GA associated.

Characterization of a 7.6-Mb Germline Deletion Encompassing the NF1 Locus and About a Hundred Genes in an NF1 Contiguous Gene Syndrome Patient

European Journal of Human Genetics (2008) 16, 1459–1466 © 2008 Macmillan Publishers Limited All rights reserved 1018-4813/08 $32.00 www.nature.com/ejhg ARTICLE Characterization of a 7.6-Mb germline deletion encompassing the NF1 locus and about a hundred genes in an NF1 contiguous gene syndrome patient Eric Pasmant*,1,2, Aurélie de Saint-Trivier2, Ingrid Laurendeau1, Anne Dieux-Coeslier3, Béatrice Parfait1,2, Michel Vidaud1,2, Dominique Vidaud1,2 and Ivan Bièche1,2 1UMR745 INSERM, Université Paris Descartes, Faculté des Sciences Pharmaceutiques et Biologiques, Paris, France; 2Service de Biochimie et de Génétique Moléculaire, Hôpital Beaujon AP-HP, Clichy, France; 3Service de Génétique Clinique, Hôpital Jeanne de Flandre, Lille, France We describe a large germline deletion removing the NF1 locus, identified by heterozygosity mapping based on microsatellite markers, in an 8-year-old French girl with a particularly severe NF1 contiguous gene syndrome. We used gene-dose mapping with sequence-tagged site real-time PCR to locate the deletion end points, which were precisely characterized by means of long-range PCR and nucleotide sequencing. The deletion is located on chromosome arm 17q and is exactly 7 586 986 bp long. It encompasses the entire NF1 locus and about 100 other genes, including numerous chemokine genes, an attractive in silico-selected cerebrally expressed candidate gene (designated NUFIP2, for nuclear fragile X mental retardation protein interacting protein 2; NM_020772) and four microRNA genes. Interestingly, the centromeric breakpoint is located in intron 4 of the PIPOX gene (pipecolic acid oxidase; NM_016518) and the telomeric breakpoint in intron 5 of the GGNBP2 gene (gametogenetin binding protein 2; NM_024835) coding a transcription factor.

Identification of Potential Core Genes in Sevoflurance Induced Myocardial

Identification of Potential core genes in Sevoflurance induced Myocardial Energy Metabolism in Patients Undergoing Off-pump Coronary Artery Bypass Graft Surgery using Bioinformatics analysis Hua Lin ( [email protected] ) Tianjin Medical University General Hospital Airport Site Research article Keywords: sevoflurane, Myocardial Energy Metabolism, Off-pump Coronary Artery Bypass Graft Surgery Posted Date: November 18th, 2019 DOI: https://doi.org/10.21203/rs.2.17434/v1 License: This work is licensed under a Creative Commons Attribution 4.0 International License. Abstract Background: Myocardial ischemia-reperfusion injury always happened after Off-pump coronary artery bypass graft (OPCABG), and this can not be avoided altogether. In this study, we tried to detect potential genes of sevoflurane-induced myocardial energy metabolism in patients undergoing OPCABG using bioinformatics analysis. Methods: We download and analyze the gene expression profile data from the Gene Expression Omnibus (GEO) database using bioinformatics methods. We downloaded the gene expression data from the Gene Expression Omnibus (GEO) database using bioinformatics methods. Gene Ontology (GO) functional annotation analysis and Kyoto Encyclopedia of Genes and Genomes (KEGG) pathway enrichment analysis were used to analyze the screened differentially expressed genes (DEGs). Then, we established a protein–protein interaction (PPI) network to find hub genes associated with myocardial energy metabolism. Results: Through PPI network, we find ten hub genes, including JUN, EGR1, ATF3, FOSB, JUNB, DUSP1, EGR2, NR4A1, BTG2, NR4A2. Conclusions: In conclusion, the proteins encoded by EGR1, ATF3, c-Fos, Btg2, JunB, DUSP1, NR4A1, BTG2 and NR4A2 were related to cardiac function. ATF3, FOSB, JUNB, DUSP1, NR4A1, NR4A2 are related to apoptosis of cardiomyocytes. The protein encoded by BTG2 is related to hypertrophy.

A Synthetic TLR3 Ligand Mitigates Profibrotic Fibroblast Responses by Inducing Autocrine IFN Signaling

The Journal of Immunology, 2013; 191:2956-2966; prepublished online 16 August 2013; doi: 10.4049/jimmunol.1300376 http://www.jimmunol.org/content/191/6/2956 A Synthetic TLR3 Ligand Mitigates Profibrotic Fibroblast Responses by Inducing Autocrine IFN Signaling Feng Fang,* Kohtaro Ooka,* Xiaoyong Sun,† Ruchi Shah,* Swati Bhattacharyya,* Jun Wei,* and John Varga* Copyright © 2013 by The American Association of Immunologists, Inc. All rights reserved. Print ISSN: 0022-1767 Online ISSN: 1550-6606. Activation of TLR3 by exogenous microbial ligands or endogenous injury-associated ligands leads to production of type I IFN.

Gnomad Lof Supplement

gnomAD supplement. Contents: Data processing; Alignment and read processing; Variant Calling; Coverage information; Data processing; Sample QC; Hard filters; Supplementary Table 1 | Sample counts before and after hard and release filters; Supplementary Table 2 | Counts by data type and hard filter; Platform imputation for exomes; Supplementary Table 3 | Exome platform assignments; Supplementary Table 4 | Confusion matrix for exome samples with Known platform labels; Relatedness filters; Supplementary Table 5 | Pair counts by degree of relatedness; Supplementary Table 6 | Sample counts by relatedness status; Population and subpopulation inference; Supplementary Figure 1 | Continental ancestry principal components; Supplementary Table 7 | Population and subpopulation counts; Population- and platform-specific filters; Supplementary Table 8 | Summary of outliers per population and platform grouping; Finalizing samples in the gnomAD v2.1 release; Supplementary Table 9 | Sample counts by filtering stage; Supplementary Table 10 | Sample counts for genomes and exomes in gnomAD subsets; Variant QC; Hard filters; Random Forest model; Features; Supplementary Table 11 | Features used in final random forest model; Training; Supplementary Table 12 | Random forest training examples; Evaluation and threshold selection; Final variant counts; Supplementary Table 13 | Variant counts by filtering status; Comparison of whole-exome and whole-genome coverage in coding regions; Variant annotation; Frequency and context annotation; Functional annotation; Supplementary Table 14 | Variants observed by category in 125,748 exomes; Supplementary Figure 5 | Percent observed by methylation.

Association of Hepatocyte Gene Expression and DNA

bioRxiv preprint doi: https://doi.org/10.1101/491225; this version posted December 9, 2018. The copyright holder for this preprint (which was not certified by peer review) is the author/funder. All rights reserved. No reuse allowed without permission. Uncovering the role of admixture in health disparities: Association of hepatocyte gene expression and DNA methylation to African Ancestry in African-Americans. Park CS1, De T1, Xu Y1,2, Zhong Y1, Smithberger E1,3, Alarcon C1, Perera MA1* 1. Department of Pharmacology, Center for Pharmacogenomics, Feinberg School of Medicine, Northwestern University, Chicago IL 2. Center for Translational Data Science, University of Chicago, Chicago IL 3. Department of Pathology and Laboratory Medicine, University of North Carolina School of Medicine, Chapel Hill, NC. * corresponding author: [email protected] ABSTRACT In African Americans (AA), the proportion of West African ancestry (WAA) may explain the genetic drivers of health disparities in disease. Analysis of RNA sequencing data from sixty AA-derived primary hepatocytes identified 32 gene expression profiles associated with WAA (FDR <0.05) with enrichment in angiogenesis and inflammatory pathways (FDR <0.1). Association of DNA methylation to WAA identified 1037 differentially methylated regions (FDR <0.05), with hypomethylated genes enriched for drug response pathways. Within the PharmGKB pharmacogene, VDR, PTGIS, ALDH1A1, CYP2C19 and P2RY1 were associated with WAA (p <0.05) with replication of CYP2C19 and VDR in the GTEx liver cohort. For every 1% increment in WAA, P2RY1 gene expression increased by 1.6% and CYP2C19 gene expression decreased by 1.4%, suggesting effects on clopidogrel response and platelet aggregation. We conclude that WAA contributes to variability in hepatic gene expression and DNA methylation with identified genes indicative of health disparities prevalent in AAs.

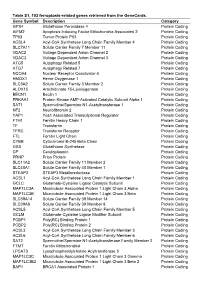

Table S1. 103 Ferroptosis-Related Genes Retrieved from the Genecards

Table S1. 103 ferroptosis-related genes retrieved from the GeneCards.

| Gene Symbol | Description | Category |
| --- | --- | --- |
| GPX4 | Glutathione Peroxidase 4 | Protein Coding |
| AIFM2 | Apoptosis Inducing Factor Mitochondria Associated 2 | Protein Coding |
| TP53 | Tumor Protein P53 | Protein Coding |
| ACSL4 | Acyl-CoA Synthetase Long Chain Family Member 4 | Protein Coding |
| SLC7A11 | Solute Carrier Family 7 Member 11 | Protein Coding |
| VDAC2 | Voltage Dependent Anion Channel 2 | Protein Coding |
| VDAC3 | Voltage Dependent Anion Channel 3 | Protein Coding |
| ATG5 | Autophagy Related 5 | Protein Coding |
| ATG7 | Autophagy Related 7 | Protein Coding |
| NCOA4 | Nuclear Receptor Coactivator 4 | Protein Coding |
| HMOX1 | Heme Oxygenase 1 | Protein Coding |
| SLC3A2 | Solute Carrier Family 3 Member 2 | Protein Coding |
| ALOX15 | Arachidonate 15-Lipoxygenase | Protein Coding |
| BECN1 | Beclin 1 | Protein Coding |
| PRKAA1 | Protein Kinase AMP-Activated Catalytic Subunit Alpha 1 | Protein Coding |
| SAT1 | Spermidine/Spermine N1-Acetyltransferase 1 | Protein Coding |
| NF2 | Neurofibromin 2 | Protein Coding |
| YAP1 | Yes1 Associated Transcriptional Regulator | Protein Coding |
| FTH1 | Ferritin Heavy Chain 1 | Protein Coding |
| TF | Transferrin | Protein Coding |
| TFRC | Transferrin Receptor | Protein Coding |
| FTL | Ferritin Light Chain | Protein Coding |
| CYBB | Cytochrome B-245 Beta Chain | Protein Coding |
| GSS | Glutathione Synthetase | Protein Coding |
| CP | Ceruloplasmin | Protein Coding |
| PRNP | Prion Protein | Protein Coding |
| SLC11A2 | Solute Carrier Family 11 Member 2 | Protein Coding |
| SLC40A1 | Solute Carrier Family 40 Member 1 | Protein Coding |
| STEAP3 | STEAP3 Metalloreductase | Protein Coding |
| ACSL1 | Acyl-CoA Synthetase Long Chain Family Member 1 | Protein |

Systematic Detection of Brain Protein-Coding Genes Under Positive Selection During Primate Evolution and Their Roles in Cognition

Downloaded from genome.cshlp.org on October 7, 2021 - Published by Cold Spring Harbor Laboratory Press Title: Systematic detection of brain protein-coding genes under positive selection during primate evolution and their roles in cognition Short title: Evolution of brain protein-coding genes in humans Guillaume Dumas (a, b), Simon Malesys (a), and Thomas Bourgeron (a) (a) Human Genetics and Cognitive Functions, Institut Pasteur, UMR3571 CNRS, Université de Paris, Paris, (75015) France (b) Department of Psychiatry, Université de Montreal, CHU Ste Justine Hospital, Montreal, QC, Canada. Corresponding author: Guillaume Dumas Human Genetics and Cognitive Functions Institut Pasteur 75015 Paris, France Phone: +33 6 28 25 56 65 [email protected] Abstract The human brain differs from that of other primates, but the genetic basis of these differences remains unclear. We investigated the evolutionary pressures acting on almost all human protein-coding genes (N=11,667; 1:1 orthologs in primates) based on their divergence from those of early hominins, such as Neanderthals, and non-human primates. We confirm that genes encoding brain-related proteins are among the most strongly conserved protein-coding genes in the human genome. Combining our evolutionary pressure metrics for the protein-coding genome with recent datasets, we found that this conservation applied to genes functionally associated with the synapse and expressed in brain structures such as the prefrontal cortex and the cerebellum. Conversely, several genes presenting signatures commonly associated with positive selection appear as causing brain diseases or conditions, such as micro/macrocephaly, Joubert syndrome, dyslexia, and autism.

The Role of Co-Deleted Genes in Neurofibromatosis Type 1

Genes Article The Role of Co-Deleted Genes in Neurofibromatosis Type 1 Microdeletions: An Evolutive Approach Larissa Brussa Reis 1,2, Andreia Carina Turchetto-Zolet 2, Maievi Fonini 1, Patricia Ashton-Prolla 1,2,3 and Clévia Rosset 1,* 1 Laboratório de Medicina Genômica, Centro de Pesquisa Experimental, Hospital de Clínicas de Porto Alegre, Porto Alegre, Rio Grande do Sul 90035-903, Brazil; [email protected] (L.B.R.); [email protected] (M.F.); [email protected] (P.A.-P.) 2 Programa de Pós-Graduação em Genética e Biologia Molecular, Departamento de Genética, UFRGS, Porto Alegre, Rio Grande do Sul 91501-970, Brazil; [email protected] 3 Serviço de Genética Médica do Hospital de Clínicas de Porto Alegre (HCPA), Porto Alegre, Rio Grande do Sul 90035-903, Brazil * Correspondence: [email protected]; Tel.: +55-51-3359-7661 Received: 28 June 2019; Accepted: 16 September 2019; Published: 24 October 2019 Abstract: Neurofibromatosis type 1 (NF1) is a cancer predisposition syndrome that results from dominant loss-of-function mutations mainly in the NF1 gene. Large rearrangements are present in 5–10% of affected patients, generally encompass NF1 neighboring genes, and are correlated with a more severe NF1 phenotype. Evident genotype–phenotype correlations and the importance of the co-deleted genes are difficult to establish. In our study we employed an evolutionary approach to provide further insights into the understanding of the fundamental function of genes that are co-deleted in subjects with NF1 microdeletions. Our goal was to access the ortholog and paralog relationship of these genes in primates and verify if purifying or positive selection are acting on these genes.

Huntington's Disease Biomarker Progression Profile Identified by Transcriptome

European Journal of Human Genetics (2015) 23, 1349–1356 © 2015 Macmillan Publishers Limited All rights reserved 1018-4813/15 www.nature.com/ejhg ARTICLE Huntington’s disease biomarker progression profile identified by transcriptome sequencing in peripheral blood Anastasios Mastrokolias1, Yavuz Ariyurek2, Jelle J Goeman3,4, Erik van Duijn5,6, Raymund AC Roos7, Roos C van der Mast5, GertJan B van Ommen1, Johan T den Dunnen1,2, Peter AC ’t Hoen1 and Willeke MC van Roon-Mom*,1 With several therapeutic approaches in development for Huntington’s disease, there is a need for easily accessible biomarkers to monitor disease progression and therapy response. We performed next-generation sequencing-based transcriptome analysis of total RNA from peripheral blood of 91 mutation carriers (27 presymptomatic and 64 symptomatic) and 33 controls. Transcriptome analysis by DeepSAGE identified 167 genes significantly associated with clinical total motor score in Huntington’s disease patients. Relative to previous studies, this yielded novel genes and confirmed previously identified genes, such as H2AFY, an overlap in results that has proven difficult in the past. Pathway analysis showed enrichment of genes of the immune system and target genes of miRNAs, which are downregulated in Huntington’s disease models. Using a highly parallelized microfluidics array chip (Fluidigm), we validated 12 of the top 20 significant genes in our discovery cohort and 7 in a second independent cohort. The five genes (PROK2, ZNF238, AQP9, CYSTM1 and ANXA3) that were validated independently in both cohorts present a candidate biomarker panel for stage determination and therapeutic readout in Huntington’s disease. Finally we suggest a first empiric formula predicting total motor score from the expression levels of our biomarker panel.

Discovering Pathways in Benign Prostate Hyperplasia: a Functional Genomics Pilot Study

EXPERIMENTAL AND THERAPEUTIC MEDICINE 21: 242, 2021 Discovering pathways in benign prostate hyperplasia: A functional genomics pilot study ZHELING CHEN1 and MINYAO GE2 1Department of Traditional Chinese Medicine, Zhenxin Community Health Service Center, Shanghai 201824; 2Department of Urology Surgery, Shuguang Hospital Affiliated to Shanghai University of Traditional Chinese Medicine, Shanghai 201203, P.R. China Received February 24, 2020; Accepted October 13, 2020 DOI: 10.3892/etm.2021.9673 Abstract. Benign prostate hyperplasia (BPH) is one of the well-known urological neoplasms common in males with an increasing number of associated deaths in aging males. It causes uncomfortable urinary symptoms, including urine flow blockage, and may cause bladder, urinary tract or kidney problems. The histopathological and clinical knowledge regarding BPH is limited. In the present study, an in silico approach was applied that uses genome-scale microarray expression data to discover a wide range of protein-protein interactions in addition to focusing on specific genes responsible for BPH to develop prognostic biomarkers. [...] prevalent in aging males, usually starting at 50-61 years of age (1), and causing lower urinary tract symptoms (LUTS), such as urine flow blockage as a result of the urethra being compressed by the enlarged gland. Other potential complications may include bladder, urinary tract or kidney problems (2). Most males have continued prostate growth throughout their life (2). After the age of 30 years, males exhibit a 1% drop in testosterone production per year and an increase in the level of dihydrotestosterone, possibly due to the age-related increase in 5α reductase (SRD5A2) activity. This rising level of dihy-

Sheet1 Page 1 Gene Symbol Gene Description Entrez Gene ID

Sheet1

| RefSeq ID | ProbeSets | Gene Symbol | Gene Description | Entrez Gene ID | Sequence annotation | Seed matches location(s) | Ago-2 binding specific enrichment (replicate 1) | Ago-2 binding specific enrichment (replicate 2) | OE lysate log2 fold change (replicate 1) | OE lysate log2 fold change (replicate 2) | Probability | Pulled down in Karginov? |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| NM_005646 | 202813_at | TARBP1 | Homo sapiens TAR (HIV-1) RNA binding protein 1 (TARBP1), mRNA. | 6894 | TR(1..5130)CDS(1..4866) | 4868..4874,5006..5013 | 3.73 | 2.53 | -1.54 | -0.44 | 1 | Yes |
| NM_001665 | 203175_at | RHOG | Homo sapiens ras homolog gene family, member G (rho G) (RHOG), mRNA. | 391 | TR(1..1332)CDS(159..734) | 810..817,782..788,790..796,873..879 | 3.56 | 2.78 | -1.62 | -1 | 1 | Yes |
| NM_002742 | 205880_at | PRKD1 | Homo sapiens protein kinase D1 (PRKD1), mRNA. | 5587 | TR(1..3679)CDS(182..2920) | 3538..3544,3202..3208 | 4.15 | 1.83 | -2.55 | -0.42 | 1 | Yes |
| NM_003068 | 213139_at | SNAI2 | Homo sapiens snail homolog 2 (Drosophila) (SNAI2), mRNA. | 6591 | TR(1..2101)CDS(165..971) | 1410..1417,1814..1820,1610..1616 | 3.5 | 2.79 | -1.38 | -0.31 | 1 | Yes |
| NM_006270 | 212647_at | RRAS | Homo sapiens related RAS viral (r-ras) oncogene homolog (RRAS), mRNA. | 6237 | TR(1..1013)CDS(46..702) | 871..877 | 3.82 | 2.27 | -1.54 | -0.55 | 1 | Yes |
| NM_025188 | 219923_at,242056_at | TRIM45 | Homo sapiens tripartite motif-containing 45 (TRIM45), mRNA. | 80263 | TR(1..3584)CDS(589..2331) | 3408..3414,2437..2444,3425..3431,2781..2787 | 3.87 | 1.89 | -0.62 | -0.09 | 1 | Yes |
| NM_024684 | 221600_s_at,221599_at | C11orf67 | Homo sapiens chromosome 11 open reading frame 67 (C11orf67), mRNA. | | | | | | | | | |