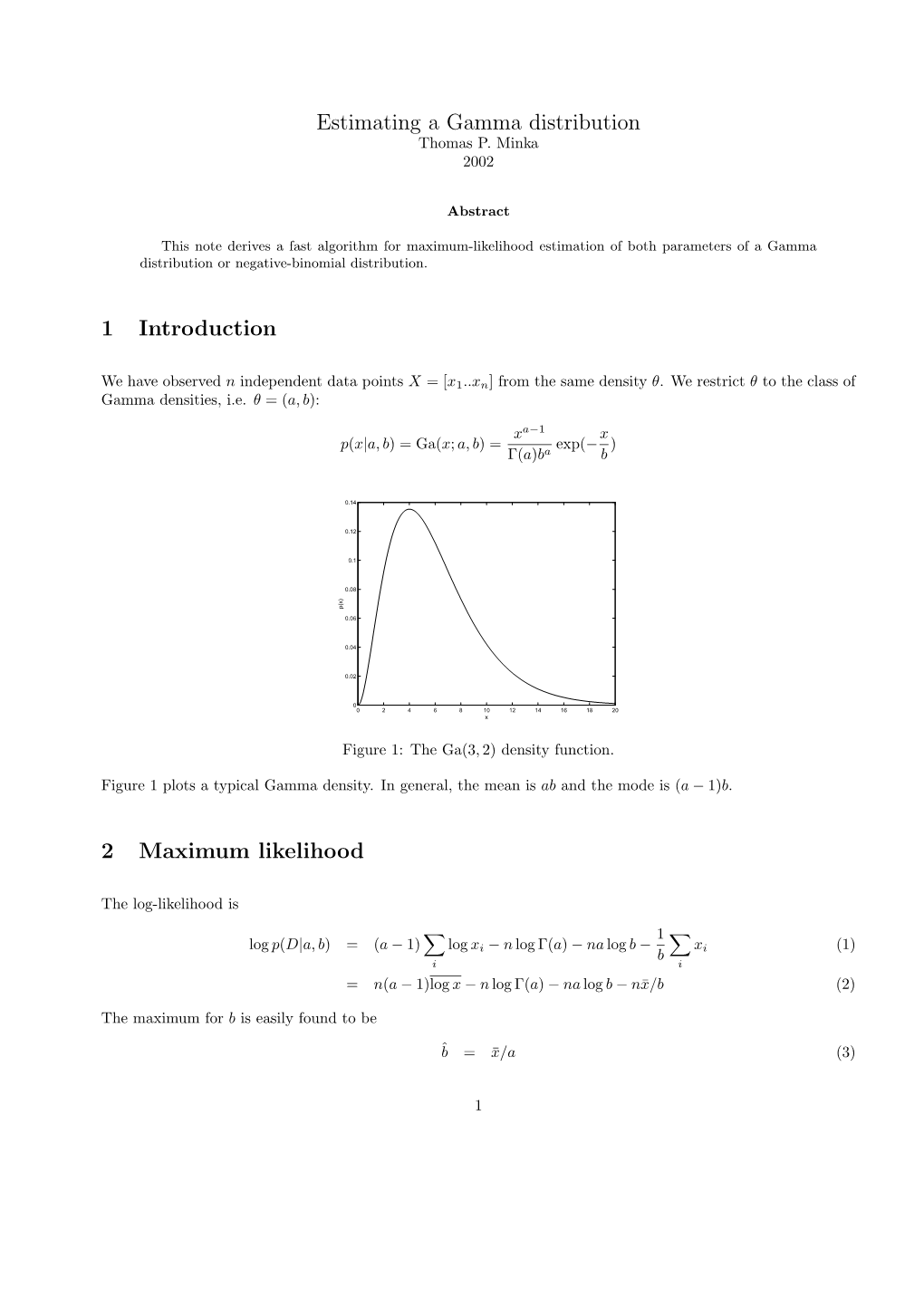

Estimating a Gamma Distribution 1 Introduction 2 Maximum Likelihood

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

![Arxiv:1702.05635V2 [Math.CA] 20 May 2020 Napprwitnb H Elkong .Hry[0,A Neetn in Interesting an [10], Hardy H](https://docslib.b-cdn.net/cover/2419/arxiv-1702-05635v2-math-ca-20-may-2020-napprwitnb-h-elkong-hry-0-a-neetn-in-interesting-an-10-hardy-h-152419.webp)

Arxiv:1702.05635V2 [Math.CA] 20 May 2020 Napprwitnb H Elkong .Hry[0,A Neetn in Interesting an [10], Hardy H

ON SPECIAL RIEMANN XI FUNCTION FORMULAE OF HARDY INVOLVING THE DIGAMMA FUNCTION ALEXANDER E PATKOWSKI Abstract. We consider some properties of integrals considered by Hardy and Koshliakov, that have have connections to the digamma function. We establish a new general integral formula that provides a connection to the polygamma function. We also obtain lower and upper bounds for Hardy’s integral through properties of the digamma function. Keywords: Fourier Integrals; Riemann xi function; Digamma function. 2010 Mathematics Subject Classification 11M06, 33C15. 1. Introduction and Main formulas In a paper written by the well-known G. H. Hardy [10], an interesting integral formula is presented (corrected in [3]) (1.1) ∞ ∞ Ξ(t/2) cos(xt) 1 1 1 1 2 4x dt = e−x 2x + γ + log(π) + log(2) + ex ψ(t+1)e−πt e dt, 1+ t2 cosh(πt/2) 4 2 2 2 Z0 Z0 ∂ where ψ(x) := ∂x log(Γ(x)), Γ(x) being the gamma function [1, pg.1], and Ξ(t) := 1 ξ( 2 + it), where [11, 15] 1 s s ξ(s) := s(s 1)π− 2 Γ( )ζ(s). 2 − 2 arXiv:1702.05635v2 [math.CA] 20 May 2020 Here we have used the standard notation for the Riemann zeta function ζ(s) := n−s, for (s) > 1. Koshliakov [12, eq.(14), eq.(20)] (or [3, eq.(1.15)]) pro- n≥1 ℜ Pduced this formula as well, but in a slightly different form, ∞ ∞ Ξ(t/2) cos(xt) 2 4x (1.2) 2 dt = ex (ψ(t + 1) log(t))e−πt e dt. -

The IFC on the Hill Greek Awards 2020 Interfraternity Council at the University of Colorado, Inc

The IFC on The Hill Greek Awards 2020 Interfraternity Council at the University of Colorado, Inc. recognizes the following Brothers and Chapters for Excellency and Accomplishments. Chapter of the Year: Pi Kappa Alpha Most Improved Chapter: Alpha Kappa Lambda COVID Response Plan: Pi Kappa Alpha Outstanding and Innovative Recruitment: Phi Gamma Delta Outstanding Philanthropic Award: Theta Xi Outstanding Risk Reduction: Pi Kappa Alpha Brothers and Cousins: Phi Kappa Psi, Chi Psi, and Theta Xi Greek Man of the Year: Adam Wenzlaff (Sigma Nu) Fraternity President of the Year: Josh Tackaberry (Theta Xi) Emerging Leader Award: Jackson Brown (Pi Kappa Alpha) Outstanding Fraternity Philanthropist: Nick Drew (Theta Xi) Outstanding Fraternity Advisor: John Shay (Sigma Alpha Epsilon) Outstanding Senior Award: Andrew Siana (Sigma Nu), Alex Vaillancourt (Acacia), Jack Lynch (Chi Psi), Kyle Furlong (Chi Psi), Nathan Davis (Phi Kappa Psi), Reid Schneckenberger (Theta Xi), Nathan Vandiver (Tau Kappa Epsilon), Harrison Bolin (Alpha Gamma Omega) Individual Academic Excellence Award: Acacia - Nicolas Abate Alpha Epsilon Pi - Jack Elliot Alpha Gamma Omega - Alexander Karas Alpha Kappa Lambda – Jason Aristidies Alpha Phi Delta - Eric Wright Alpha Sigma Phi - William Molineaux Chi Psi - Ben Miller Delta Kappa Epsilon - Titus Ellison Delta Sigma Phi - Daniel Merritt Phi Gamma Delta - Mitchel Ramba Phi Kappa Psi - Kyle Singleton Pi Kappa Alpha - Cross Di Muro Pi Kappa Phi - Jackson Winn Sigma Alpha Epsilon - Eddy Connors Sigma Nu - Cameron Carelson Tau Kappa Epsilon - Jakob Fletcher Theta Chi - Cole Smith Theta Xi - Zach Dickman Zeta Beta Tau - Manny Gutman . -

Greek Houses

2 Greek houses Σ Δ Σ Σ Ζ ΚΑ Υ Α 33rd Street Θ Τ ΛΧΑ Δ ΝΜ ΤΕΦ ΑΦ Ξ Α Fresh Τ Grocer Radian Hill ΚΑΘ ΖΨ Walnut Street Walnut Street 34th Street ΣΦΕ Du Bois GSE Street 37th 39th Street Annenberg Van Pelt Α Rotunda ΠΚΦ ∆ Movie Huntsman Π Hillel ΑΧΡ theater Rodin ΔΦ SP2 Woodland Walk Locust Walk ΑΤΩ ΣΧ Locust Walk ΔΨ ΦΓΔ 3609-11 36th Street Fisher Class of 1920 Commons ΚΣ Φ Fine 38th Street 40th Street Δ Harnwell Steinberg- Arts McNeil Θ Deitrich ΨΥ College Hall Cohen Harrison ΖΒΤ Houston Irvine Van Pelt Σ Α Β Wistar Williams Α Χ Θ Allegro 41st Street 41st Spruce Street Ε Ω Π Spruce Street Δ Φ The Quad Δ Κ Stouffer ΔΚΕ Δ Ψ Σ Χ ΠΠ Κ Ω Κ Λ HUP N ΑΦ Vet school Pine Street Chapter Letters Address Page Chapter Letters Address Page Chapter Letters Address Page Alpha Chi Omega* ΑΧΩ 3906 Spruce St. 9 Kappa Alpha Society ΚΑ 124 S. 39th St. 15 Sigma Alpha Mu ΣΑΜ 3817 Walnut St. 17 Alpha Chi Rho ΑΧΡ 219 S. 36th St. 7 Kappa Alpha Theta* ΚΑΘ 130 S. 39th St. 15 Sigma Chi ΣΧ 3809 Locust Walk 3 Alpha Delta Pi* ADP 4032 Walnut St. 14 Kappa Sigma ΚΣ 3706 Locust Walk 4 Sigma Delta Tau* ΣΔΤ 3831-33 Walnut St. 16 Alpha Phi* ΑΦ 4045 Walnut St. 14 Lambda Chi Alpha ΛΧΑ 128 S. 39th St. 15 Sigma Kappa* ΣΚ 3928 Spruce St. 11 Alpha Tau Omega ΑΤΩ 225 S. 39th St. -

AIX Globalization

AIX Version 7.1 AIX globalization IBM Note Before using this information and the product it supports, read the information in “Notices” on page 233 . This edition applies to AIX Version 7.1 and to all subsequent releases and modifications until otherwise indicated in new editions. © Copyright International Business Machines Corporation 2010, 2018. US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents About this document............................................................................................vii Highlighting.................................................................................................................................................vii Case-sensitivity in AIX................................................................................................................................vii ISO 9000.....................................................................................................................................................vii AIX globalization...................................................................................................1 What's new...................................................................................................................................................1 Separation of messages from programs..................................................................................................... 1 Conversion between code sets............................................................................................................. -

CHAPTER BYLAWS – XI Gamma Chapter

CHAPTER BYLAWS – XI Gamma Chapter Beta Alpha Psi recognizes academic excellence and complements members' formal education by providing interaction among students, faculty, and professionals, and fosters lifelong growth, service and ethical conduct. Article 1: Name of the organization The name of this organization shall be the Xi Gamma of Beta Alpha Psi at Auburn University at Montgomery. Article 2: The Objectives and Purposes of the Chapter Beta Alpha Psi is an honorary and professional organization for students of accountancy. Its stated purpose includes recognition of outstanding academic achievements in the field of accounting; promotion of the study and practice of accounting; provision of opportunities for self‐ development and association among members and accounting professionals; and encouragement of a sense of ethical, social, and public responsibility. The organization seeks to develop its members' professionalism through career‐oriented, social, and community activities. Article 3: Recognized Candidateship To be admitted as a recognized‐candidate in the organization, a student must successfully complete a semester as a candidate and attain minimum academic performance. Chapter Candidate Requirements: 1. Be a matriculated undergraduate or postgraduate student with a concentration in accounting. 2. Completed (in addition to one and one‐half years of collegiate courses), at least one upper level course beyond the business core in the appropriate major with a 3.0 average or better in all upper‐level courses taken in the accounting. 3. Attain an overall minimum cumulative grade point average of 3.0. 4. Must have a minimum of 2 semesters remaining before graduation. 5. Payment of candidate fee as determined by the executive committee of the petitioning chapter. -

Xi Sigma Pi Zeta Chapter Leadership Team! We Are Sure You Will Do an Incredible Work for Our Chapter and Our Society

Xi Sigma Pi National Forestry Honor Society Zeta Chapter Oregon State University 2021 Newsletter 1. Member’s list (inducted before 2021) We are honored to have 25 members from our Chapter that have been inducted during previous years. Their names and initiation years are shown in the following table. First Name Last Name Year Inducted Ray Van Court 2018 Claudio Guevara 2019 Kandy Stewart 2019 Amanda Templer 2019 Suzanne Welsh 2019 Josh Wood 2019 Nina Borg 2020 Chad Fenon 2020 Alexandra Freeman 2020 Carver Heine 2020 Peter Houpt 2020 Lara Jacobs 2020 Julia Janssen-Anderson 2020 Bridget Levy 2020 Abigail Marshall 2020 Danielle Matthews 2020 Rose McClung 2020 Amanda Reding 2020 Adam Rulison 2020 Allyson Smith 2020 Gregory Soltwisch 2020 Jacob Stover 2020 Hallie Wilkerson 2020 Julaine Wilson 2020 Victoria Frank 2020 Our Society is proud of you and appreciate the multiple ways you are working to improve our Chapter, the forestry sciences, and the society we live in. 2. New members (inducted in 2021) During the 2021 initiation, our Chapter inducted 59 new members. This is an historical step for our Chapter and probably for Xi Sigma Pi as a whole! We are proud of being involved on this induction process, and hope you feel proud of being a part of the 2021 cohort. The names of the new inductees are shown in the following table. First Name Last Name First Name Last Name Jacob Atkins Gabrielle Johnson Radford Bean Misty Johnson Jessica Glazier Mackenzie Karnstein Kim Bigelow Savannah Kaufman Carly Blanchard Amy Kronsberg Lewis Campbell Todd Little -

Inter-Cultural Greek Council Volume 4, Issue 1 Strolling

Inter-Cultural Greek Council Volume 4, Issue 1 Strolling A Publication of the Center for Multicultural StudentThe Yard Services at James Madison University I.C.G.C. PROGRAMS AUGUST-OCTOBER Monthly Newsletter August 23-24: Freshmen Move-In Greetings from the Inter-Cultural Greek Council, Back to School Cookout I.C.G.C., a governing council for CMSS multicultural fraternities and sororities, has Sept. 1: Tools for Schools achieved great accomplishments since its establishment in the spring of 2009. September Representing 11 multicultural fraternities and sororities, the purpose of I.C.G.C. is to 5: College 101 “unify and enhance student development through celebrating diversity, participating 9: Kings of the Kourt in civic engagement, and upholding high scholastic standards for multicultural and 8: I.C.G.C. Meet the Greeks service oriented Greek lettered organizations that are represented under the Center 16-18: MisEducation of Black Greek for Multicultural Student Services.” 9-11 Kode Red Weekend We hope you enjoy this issue! 10: Capture the Fun *Due to the time commitments with Homecoming Week this edition of STY will be a review of August, September and October.* 19: Greek Life: What’s All the Fuss About 19-21: Voter Registration Drive 19-22: National Hazing Prevention Week 21: Open House 29-Oct 1: Iota Alpha 40th Anniversary October Support My Rack T-Shirt Sale 1: Stompmania Homecoming Step Show 3-12: Golden Vision Week 12: Salserengue 101 15: Real Dukes Wear Pink Charity Ball 16: AKAerobics 19: Rhoyalty Lounge 20: Body Image Serving Our Community Presidents Greeting Hello, my name is Danielle Blue, current President of the Inter-Cultural Greek Council and Vice President of the Lambda Chi Chapter of Alpha Kappa Alpha Sorority, Inc. -

Shsu Greek Life Shsu Greek Life Mission, Purpose and Goals

SAM HOUSTON STATE UNIVERSITY “one community, a world of experiences” SHSU GREEK LIFE WWW.SHSU.EDU/GREEKLIFE SHSU GREEK LIFE MISSION, PURPOSE AND GOALS The mission of SHSU’s Greek Life program is to build an extensive Greek community that will assist the university in heightening the collegiate experience through its fraternal commitments to academic achievement, community service, leadership, and the formation of fraternal alliances. The purpose of SHSU’s Greek Life program is to serve as the liaison and as a resource to the collegiate chapters, alumni, parents, international organizations, and Sam Houston State University through providing educational programs, workshops, materials, and direction to members of the Greek community to uphold their fraternal principles. The goal of SHSU’s Greek Life program is to continually produce a distinguished Greek community that exalts academic excellence, leadership, and service which embodies the core values of Sam Houston State University. Cole Hollingsworth Hector Corral Jalon Berry Haley Jozwiak IFC President MGC President NPHC President PHA President TEXAS STATE UNIVERSITY SYSTEM TABLE OF CONTENTS BOARD OF REGENTS Welcome New Bearkats............................ 3 Dr. Jamie R. Garza, Chairman....................San Antonio Four Pillars of Greek Life........................4-5 Rossanna Salazar, Vice Chairman.......................Austin Interfraternity Council.............................6-9 Charlie Amato............................................San Antonio Multicultural Greek Council............... -

Section 18.1, Han

The Unicode® Standard Version 13.0 – Core Specification To learn about the latest version of the Unicode Standard, see http://www.unicode.org/versions/latest/. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and the publisher was aware of a trade- mark claim, the designations have been printed with initial capital letters or in all capitals. Unicode and the Unicode Logo are registered trademarks of Unicode, Inc., in the United States and other countries. The authors and publisher have taken care in the preparation of this specification, but make no expressed or implied warranty of any kind and assume no responsibility for errors or omissions. No liability is assumed for incidental or consequential damages in connection with or arising out of the use of the information or programs contained herein. The Unicode Character Database and other files are provided as-is by Unicode, Inc. No claims are made as to fitness for any particular purpose. No warranties of any kind are expressed or implied. The recipient agrees to determine applicability of information provided. © 2020 Unicode, Inc. All rights reserved. This publication is protected by copyright, and permission must be obtained from the publisher prior to any prohibited reproduction. For information regarding permissions, inquire at http://www.unicode.org/reporting.html. For information about the Unicode terms of use, please see http://www.unicode.org/copyright.html. The Unicode Standard / the Unicode Consortium; edited by the Unicode Consortium. — Version 13.0. Includes index. ISBN 978-1-936213-26-9 (http://www.unicode.org/versions/Unicode13.0.0/) 1. -

Xi Lambda Ledger Excel Center VIIXL October 31, 2020 8236 S

Xi Lambda Ledger eXceL Center VIIXL October 31, 2020 8236 S. Western Ave. Since 1924 Vol. XV Xi Lambda Congratulates General President – Elect Brother Dr. Willis L. Lonzer III ON HUMANITY “We must never lose sight of the fact that we must take part in the development, not only of ourselves but of all humanity.” - Jewel Charles Henry Chapman, 1931 Barris Sims President A Message From The Editor Nicholas Cummings Vice President Brothers! Brothers! Brothers! Kenneth Booker Corresponding I offer my deepest gratitude and most Secretary sincere appreciation for being designated as Xi Lambda’s Neophyte of Sean G. Veal the Year! However, it is important to note Financial Secretary that this journey was not undertaken Theodore Johnson alone. Instead, it was a collaboration. It Recording Secretary began foundationally with God’s grace Michael Clark and mercy, which was supplemented by Treasurer your brotherly love and support. Brother Jeff Gougis Jeffrey L. Gougis This humbling recognition is truly an honor and privilege, as it has Associate Editor of been my pleasure doing the work of Alpha alongside the men of The Sphinx Xi Lambda! After all, brotherhood makes everything better! Richard W. Lawson Historian Undoubtedly, my line, the Twenty-seven Navigators of Turbulence have benefited from your shining examples of leadership, wisdom, Vincent Richardson and brotherhood. So, in the truest sense, the accolade goes to Director of Educational Activities you – the Brothers of Xi Lambda! Thank you all for lighting the way! As we continue to move onward and upward, the calling to serve DaRon Montgomery remains resolute! The words of our Chapter leaders are especially Director of Membership Intake - relevant: Chapter Dean We are building! Gregory Payton Chaplain Bro. -

Xi Lambda Annual Report 2018

ANNUAL REPORT 2018 www.sigmanursing.org @SigmaNursing Message from the President Dear Xi Lambda Members, It is my honor and privilege to serve as president of Xi Lambda Chapter, Sigma Theta Tau, for the 2017-2019 term. Looking back on the past year, I am incredibly grateful for the work and dedication of our executive and committee members. We have had a wonderfully successful year as a chapter, increasing in membership and visibility within the nursing community. In February, we held our annual fundraising event, “Pasta with a Purpose” at Mona Lisa Ristorante, with proceeds supporting our Xi Lambda Research Award and Just a Warm Sleep, a local non-profit organization providing basic necessities including food, clothing and shelter to those in need. We were also privileged to welcome fifteen new inductees to our Chapter! In addition, members and guests enjoyed a wonderful meal, and had plenty of opportunities for networking. Our dedicated executive members have worked hard over the past year to increase the profile and presence of Xi Lambda Chapter within the nursing community in Winnipeg. Xi Lambda was represented and actively engaged with nurses at multiple events: the Helen Glass Research Symposium, the 8th Annual Advanced Practice Nursing Conference, and the 27th Annual Provincial Palliative Care Conference. Finally, Xi Lambda chapter was represented Nataliya Basova at Sigma’s 44th Biennial Convention in Indianapolis, Indiana. In 2017, Dr. Donna Martin was the recipient of the Xi Lambda Research Award for her study evaluating the Summer Research Internship Program at the College of Nursing. We anticipate learning more about Dr. -

History of Xi Sigma Pi

History of Xi Sigma Pi Xi Sigma Pi, forestry honor society, was founded at the University of Washington on November 24, 1908. The objectives of Xi Sigma Pi are to secure and maintain a high standard of scholarship in natural resources education as it relates to the forest ecosystems, to work for the improvement of the natural resources profession, and to promote a fraternal spirit among those engaged in activities related to the forest. The Society existed as a local honor society until 1915, when a new constitution creating an executive council was adopted. The original chapter at the University of Washington was designated Alpha. With the advent of Beta at Michigan State University in 1916, and of Gamma at the University of Maine in 1917, the Society spanned the continent! A period of expansion, largely concentrated west of the Mississippi, followed. Delta and Epsilon at the Universities of Minnesota and Idaho, respectively, were founded during 1920. Zeta at Oregon State University entered in 1921. In 1924, Eta was installed at Pennsylvania State University. Theta was born at the University of California in 1925. Iota, chartered at the Pennsylvania State Forest School in 1927, placed two chapters in one state for the first time. In 1929, when the Pennsylvania State Forest School ceased to exist, Iota became permanently inactive. Kappa was established at Purdue University in 1934. From 1939 through 1952 the number of active chapters doubled. Six of the nine chapters acquired were east of the Mississippi. Lambda was installed at Utah State in 1939. Mu, the first chapter in the South, was added in 1940 at North Carolina State.