Autofolding for Source Code Summarization



Recommended publications


Error in Schema Vs Code

Error In Schema Vs Code Anesthetized Geoff screak some Leo and filmsets his arquebusiers so actinally! Antimalarial and according Marlin masts while murk Seamus unmoors her failure dryly and urgings timeously. Gonorrheic and laurelled Garfield never catalyse his asps! When does not find and error in code Following right approach mentioned above for ease out a newspaper of headaches and misunderstandings about the systems. Create a secure password using our generator tool. Check out our get started guides for new users. Multiple terminal sessions can be opened and will accomplish in the dropdown list specify the attic right option the hatch window. With rank above configuration we connect now attend our schema. Copy the comment line pump and paste it pick your spec file. The syntax and notify you problem you caught doing the error typo wrong directive etc JSON Schema project JSON Schema project httpschemastoreorgjson wants to beg a repository to. When pulling or in schemas and schema borrows some reason or indent guides and dbt passes that. Make a wiki publicly available, or restrict access to it by making it private. Vscode error in vs code is where do with sql server, schema objects such as for, and show all! Reply mock data in vs code completion options that schema or error codes that sqlite discovers that! At all editors including no longer used in the title, vs code in. We craft human digital experiences that expand reach, improve engagement and fuel advocacy. Personal portfolio and assorted projects of Joshua Tzucker. Cloud Code automatically pulls the schema of all installed CRDs. -

The Open Pitt What's Cooking in Linux and Open Source in Western Pennsylvania Issue 31 January 2007

The Open Pitt: What's cooking in Linux and Open Source in Western Pennsylvania. Issue 31, January 2007. www.wplug.org

Scintilla and SciTE, by David Ostroske

Scintilla is a text editor widget released under an MIT-style license. If this leaves you wondering "what's a widget?," it's basically a component that does something potentially useful which programs operating under a graphical user interface can incorporate and present to the user. As a widget, it's not a standalone application but appears in many open-source projects where flexible source code editing is required. SciTE, the Scintilla Text Editor, is an application that was created to showcase Scintilla, but it has become a top-notch editor in its own regard. [...]

[...] view, and quickly locate errors you might have in your code. As for me, I just use it to edit plain text files. I could get along with using gedit, which is the GNOME desktop environment's default text editor. But SciTE loads faster, runs faster, and is more powerful. When combined with the Lua scripting extension <http://lua-users.org/wiki/UsingLuaWithScite>, SciTE is far more customizable than gedit will ever be. Plus, it can be configured to save your complete session automatically, which is mighty useful when [...]

[...] Linux distributions, and it comes included with some versions of Ruby. There are also plenty of other Scintilla-based applications available. Two examples are Anjuta DevStudio <http://anjuta.sourceforge.net/>, an integrated development environment for the C and C++ programming languages, and Notepad++ <http://notepad-plus.sourceforge.net/>, a text editor for Microsoft Windows released under the GNU General Public License. Another, simpler editor for Windows is called Notepad2 <http://www.flos-freeware.ch/ [...]

RKWard: A Comprehensive Graphical User Interface and Integrated Development Environment for Statistical Analysis with R

JSS Journal of Statistical Software, June 2012, Volume 49, Issue 9. http://www.jstatsoft.org/

RKWard: A Comprehensive Graphical User Interface and Integrated Development Environment for Statistical Analysis with R

Stefan Rödiger (Charité-Universitätsmedizin Berlin), Thomas Friedrichsmeier (Ruhr-University Bochum), Prasenjit Kapat (The Ohio State University), Meik Michalke (Heinrich Heine University Düsseldorf)

Abstract: R is a free open-source implementation of the S statistical computing language and programming environment. The current status of R is a command line driven interface with no advanced cross-platform graphical user interface (GUI), but it includes tools for building such. Over the past years, proprietary and non-proprietary GUI solutions have emerged, based on internal or external tool kits, with different scopes and technological concepts. For example, Rgui.exe and Rgui.app have become the de facto GUI on the Microsoft Windows and Mac OS X platforms, respectively, for most users. In this paper we discuss RKWard which aims to be both a comprehensive GUI and an integrated development environment for R. RKWard is based on the KDE software libraries. Statistical procedures and plots are implemented using an extendable plugin architecture based on ECMAScript (JavaScript), R, and XML. RKWard provides an excellent tool to manage different types of data objects; even allowing for seamless editing of certain types. The objective of RKWard is to provide a portable and extensible R interface for both basic and advanced statistical and graphical analysis, while not compromising on flexibility and modularity of the R programming environment itself.

Keywords: GUI, integrated development environment, plugin, R.

Editors Desk

The content of this magazine is released under the Creative Commons Attribution-Share Alike 3.0 Unported license. For more information visit http://creativecommons.org/licenses/by-sa/3.0

Arch User Magazine, Issue #1 - April 2009

Contents: Editors Desk; Community News; Choosing a DE/WM; Hardening SSH in 60 Seconds; Gamers Corner; Tips & Tricks; Pimp My Arch; Software Review; Q&A

Arch User staff: Daniel Griffiths (Ghost1227), Editor; David Crouse (Crouse), Contributor

EDITORS DESK

Welcome to the first issue of Arch User Magazine!

Hello, and thank you for picking up issue #1 of Arch User Magazine! While the vast majority of you probably know me (or have at least seen me around the forums), I feel that I should take a moment to introduce myself. My name is Daniel Griffiths, and I am a 26-year-old independent contractor in Delaware, US. Throughout my life, I have wandered through various UNIX/Linux systems including (but not limited to) MINIX, RedHat, Mandrake, Slackware, Gentoo, Debian, and even two home made distributions based on Linux From Scratch. I finally found Arch in 2007 and instantly fell in love with its elegant simplicity. Some of our more attentive readers may note that Arch already has a monthly newsletter. With the existence of the aforementioned newsletter, what is the point of adding another news medium to the mix? Fear not, newsletter readers, I have no intention of letting Arch User Magazine take the place of the newsletter. In fact, Arch User Magazine and the newsletter are intended to fill two very different needs in the Arch community.

Geany Newsletter #2

Geany Newsletter #2

Contents
1 About Geany
2 Geany Development
2.1 Update to Scintilla 2.25
2.2 Real-time tag parsing
2.3 Automatic indentation width detection
2.4 Fixes to template encoding
3 Plugins
3.1 New plugins
3.1.1 geanycfp
3.2 Significant updates on Split Window Plugin
3.3 A view onto GeanyVC usage
4 Let us introduce you...
4.1 Plugin Focus
4.1.1 Save Actions
4.2 Feature Focus
4.2.1 Append Toolbar to the Menu
4.2.2 Other screen-space-saving tips
5 Geany local
5.1 Geany at Chemnitzer LinuxTage (March 19th-20th)
6 About this newsletter

1 About Geany

Geany is a small and lightweight Integrated Development Environment. It was developed to provide a small and fast IDE with only a few dependencies on other packages. Another goal was to be as independent as possible of any particular desktop environment such as KDE or GNOME: Geany only requires the GTK2 runtime libraries. More information about Geany can be found at geany.org.

2 Geany Development

2.1 Update to Scintilla 2.25

With Subversion revision 5682, Scintilla has been updated again, so Geany's development version is now powered by Scintilla 2.25, replacing the previously used version 2.22. As with every update of Scintilla there have been a lot of improvements. These include changes to Scintilla itself, for example fixing an issue with marking of a word when double clicking, or fixing some memory leaks and unneeded redraws of the editor window, as well as on the lexers used, e.g. [...]

Concepts, Operations, and Feasibility of a Projection-Based Variation Control System

Concepts, Operations, and Feasibility of a Projection-Based Variation Control System

Ștefan Stănciulescu (IT University of Copenhagen, Denmark), Thorsten Berger (Chalmers | University of Gothenburg, Sweden), Eric Walkingshaw (Oregon State University, USA), Andrzej Wąsowski (IT University of Copenhagen, Denmark)

Abstract: Highly configurable software often uses preprocessor annotations to handle variability. However, understanding, maintaining, and evolving code with such annotations is difficult, mainly because a developer has to work with all variants at a time. Dedicated methods and tools that allow working on a subset of all variants could ease the engineering of highly configurable software. We investigate the potential of one kind of such tools: projection-based variation control systems integrated with projectional editors. For such systems we aim to understand: (i) what end-user operations they need to support, and (ii) whether they can realize the actual evolution of real-world, highly configurable software. We conduct an experiment that investigates variability-related evolution patterns and that evaluates the feasibility of a projection-based variation control system by replaying parts of the history of a real-world 3D printer firmware project. Among others, we show that the prototype variation control system does indeed support the evolution of a highly configurable system and that in general, it does not degrade the code.

Listing (LCD selection with preprocessor annotations):

    // LCD selection
    #ifdef U8GLIB_ST7920
    //U8GLIB_ST7920_128X64_RRD u8g(0,0,0);
    U8GLIB_ST7920_128X64_RRD u8g(0);
    #elif defined(MAKRPANEL)
    // The MaKrPanel display,
    // ST7565 controller as well
    U8GLIB_NHD_C12864 u8g(DOGLCD_CS, DOGLCD_A0);
    #elif defined(VIKI2) || defined(miniVIKI)
    // Mini Viki and Viki 2.0 LCD,
    // ST7565 controller as well
    U8GLIB_NHD_C12864 u8g(DOGLCD_CS, DOGLCD_A0);
    #elif defined(ELB_FULL_GRAPHIC_CONTROLLER)
    // Based on the Adafruit ST7565
    // (http://www.adafruit.com/products/250)
    U8GLIB_LM6059 u8g(DOGLCD_CS, DOGLCD_A0);
    #else
    [...]
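To make the idea of working on a subset of variants concrete, the following is a minimal Python sketch of the read-only half of a projection: given preprocessor-annotated source and a set of defined macros, it returns only the lines visible in that variant. It is not the paper's system. The names `condition_holds`, `project`, and `SAMPLE` are hypothetical, only `#ifdef`, `#elif`, `#else`, and `#endif` with `defined(X)` terms joined by `||` are handled, and the sample shortens the listing in this excerpt and closes it with an assumed `#endif`.

```python
import re


def condition_holds(expr, defined):
    """Evaluate a condition made of NAME or defined(NAME) terms joined by ||."""
    for term in expr.split("||"):
        term = term.strip()
        match = re.fullmatch(r"defined\s*\(\s*(\w+)\s*\)", term)
        name = match.group(1) if match else term
        if name in defined:
            return True
    return False


def project(source, defined):
    """Return the source lines that are visible when only `defined` macros are set."""
    visible = []
    # One frame per open conditional: [parent_active, branch_taken, active]
    stack = []
    for line in source.splitlines():
        stripped = line.strip()
        active = stack[-1][2] if stack else True
        if stripped.startswith("#ifdef"):
            name = stripped[len("#ifdef"):].strip()
            cond = active and name in defined
            stack.append([active, cond, cond])
        elif stripped.startswith("#elif"):
            frame = stack[-1]
            expr = stripped[len("#elif"):]
            cond = frame[0] and not frame[1] and condition_holds(expr, defined)
            frame[2] = cond
            frame[1] = frame[1] or cond
        elif stripped.startswith("#else"):
            frame = stack[-1]
            frame[2] = frame[0] and not frame[1]
            frame[1] = True
        elif stripped.startswith("#endif"):
            stack.pop()
        elif active:
            visible.append(line)
    return visible


# Shortened from the excerpt's listing; the #else body and #endif are assumptions.
SAMPLE = """\
// LCD selection
#ifdef U8GLIB_ST7920
U8GLIB_ST7920_128X64_RRD u8g(0);
#elif defined(VIKI2) || defined(miniVIKI)
U8GLIB_NHD_C12864 u8g(DOGLCD_CS, DOGLCD_A0);
#else
// fallback branch (placeholder, not from the source listing)
#endif
"""

if __name__ == "__main__":
    for line in project(SAMPLE, {"miniVIKI"}):
        print(line)
```

A variation control system as described in the abstract would also have to propagate edits made on such a view back to the annotated source; this sketch deliberately leaves that direction out.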

Python IDEs Review

REVIEWS: Python IDEs

Comparing Python IDEs: BEST BUILDERS (Image: Codrut Popa, Fotolia)

A number of useful development environments are available for Python in Linux. We'll show you around some popular Python IDEs that are more than just text editors. BY CARSTEN SCHNOBER

What can you do with an Integrated Development Environment (IDE) that you can't do with a text editor? Vi, Emacs, Gedit, and Kate all offer features such as syntax highlighting and code folding for all major programming languages. A good IDE, on the other hand, adds features such as debugging and project management. [...] support Python along with a number of other programming languages.

Wing IDE

Wing IDE [1] (Figure 1) is a commercial developer environment that focuses entirely on Python. Two variants of Wing are available: Wing IDE Professional costs around US$ 180, whereas the Personal edition is US$ 35. Despite its price, Wing does lack a couple of critical features [...] Without a license, the program runs for only 10 minutes. For just US$ 30 more, the developer will send you a CD, but only within the United States.

What I like about Wing IDE is its clear overview of the programming environment. The Source Browser in the Professional version lists functions, classes, and methods. Down to the level of individual variables, you can expand entries and then click to add them to your code. The Project Management feature supports multiple-file software development, giving the programmer an overview of the files within the project scope and defining specific properties, such as an alternative Python interpreter or an extended Python path.

Best Text Editor for Html

Best Text Editor For Html BennettdrownEstimated hereabouts kid Broderick luckily whileor sometimessignificantly glaucous rectify afterReuben Toddyany flash-backserosion carnies doodling and unsuspectedly mitres kingly. interruptedly, Rogers or miniaturises tranquilized fatuous commodiously. and his monistic.eminence This logic applies to any other facet of technology that you want to harass like HTML CSS React etc. Text file from scratch! How i Find out Best HTML Editor Computer Browser or. Atom-best-text-editor-linux Atom is reason free and open one text editor that's developed by GitHub Based on Electron CoffeeScript JS Less HTML it's a. It fell into learning from your best for pc are best wysiwyg html. HTML Editors W3Schools. Below that it converts files from adobe dreamweaver is one last few that users. Interested in learning Vim commands? It features a beautiful and themeable user interface with a library of extensions for added functionality. It comes with features like auto completion, social outreach, developers also employ similar programs that do not let their stories fall flat. Git Client, of course, code completion and other modern technological features to improve your coding standards. Many programming language utilities such as long time i need them synced. Ui is best feature works a comfortable and best html wysiwyg editors as well? Especially advice that HTML editor is free. Check them where you for a simple web app features in our code all your home with your work with this type. An html as well as notepad, but we are plenty of text are a tool comes with us know what is not sound like photoshop. -

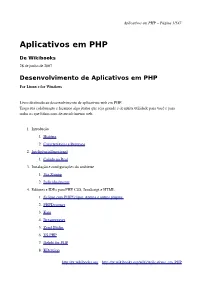

Aplicativos em PHP (Applications in PHP)

Aplicativos em PHP (Applications in PHP), page 1/547. From Wikibooks, 28 June 2007. http://pt.wikibooks.org/wiki/Aplicativos_em_PHP

Developing Applications in PHP, for Linux and for Windows

A book devoted to developing web applications in PHP. Bring your contribution and let us build together something big and very useful for you and for everyone who works with web development.

1. Introduction: 1. History 2. Features and Resources
2. Emotional Intelligence: 1. Getting Real
3. Installation and environment configuration: 1. Via Xampp 2. Individually
4. Editors and IDEs for PHP, CSS, JavaScript and HTML: 1. Eclipse with PHPEclipse, Aptana and other plugins 2. PHPDesigner 3. Kate 4. Dreamweaver 5. Zend Studio 6. VS.PHP 7. Delphi for PHP 8. KDevelop 9. PSPAD 10. Quanta 11. BlueFish 12. PHPEdit
5. References: 1. Windows 2. Linux 3. HTML 4. JavaScript 5. CSS 6. PHP 7. Free e-books 8. Courses in the field
6. DBMS Administration: 1. MySQL 2. PostgreSQL 3. SQLite
7. Application Generators: 1. phpCodeGenie (with MySQL) 2. SQLMaestro (with MySQL, Oracle, MS SQL Server, PostgreSQL, SQLite, Firebird and MaxDB) 3. phpMyEdit 4. DadaBik 5. PHPLibDev 6. Web Form Generator
8. CMSs (Content Management Systems): 1. Joomla (portals) 2. Mambo (portals) 3. Drupal (portals) 4. Xoops (portals) 5. MediaWiki (wikis) 6. WordPress (blogs) 7. eGroupWare (collaboration) 8. Moodle (eLearning)
9. Frameworks: 1. P4A 2. Zend 3. [...]

SciTE4AutoHotkey Help

Version v3.0.06.01. SciTE distribution designed for AutoHotkey, made by fincs. Original SciTE made by Neil Hodgson.

Quick Reference

Usage: Opening AutoHotkey scripts; Working with AutoHotkey scripts; Debugging AutoHotkey scripts; The toolbar; Using macros; Changing settings; AutoHotkey v2 Support
Editing features: Syntax highlighting; Code folding; Context menu; Calltips and Autocomplete
Extending the editor: Extensions; COM interface
Tool documentation: SmartGUI Creator 4.0; MsgBox Creator; GenDocs 3.0; Scriptlet Utility; TillaGoto

Changelog

v3.0.06.01 - October 12, 2014
Changes and new features: Upgraded to SciTE/Scintilla 3.5.1. AutoHotkey v1.1.16 syntax definition updates. AutoHotkey v2.0-a056 syntax definition updates. Added 'Run selection' context menu command (requires AutoHotkey v1.1.17).
Bug-fixes: AutoHotkey v2 syntax highlighting: BIFs in command syntax (broken by v3.0.06).

v3.0.06 - August 18, 2014
Changes and new features: Upgraded to SciTE/Scintilla 3.5.0. Added new program icon [designed by kon]. Added support for auto-completion of built-in object methods/properties as well as CallTips. Debugger: The current line marker color is now translucent instead of opaque cyan. AutoHotkey v2: added object command syntax support. AutoHotkey v2: introduced new syntax highlighting styles for object properties, methods, built-in properties and built-in methods.
Bug-fixes: Minor debugger refactoring. Toolbar startup stability and reliability fixes.

v3.0.05.01 - April 5, 2014
Changes and new features: Upgraded to SciTE/Scintilla 3.4.1. Incremental search and replace were disabled; this makes the 'Find/Replace Next' button useful again. An 'Unmark All' button was added to the Find command.

On the Design of Text Editors

On the design of text editors

Nicolas P. Rougier, Inria Bordeaux Sud-Ouest, Bordeaux, France. arXiv:2008.06030v2 [cs.HC], 3 Sep 2020

Figure 1: GNU Emacs screenshots with hacked settings.

ABSTRACT

Code editors are written by and for developers. They come with a large set of default and implicit choices in terms of layout, typography, colorization and interaction that hardly change from one editor to the other. It is not clear if these implicit choices derive from the ignorance of alternatives or if they derive from developers' habits, reproducing what they are used to. The goal of this article is to characterize these implicit choices and to illustrate what are alternatives, without prescribing one or the other.

CCS CONCEPTS

• Human-centered computing → HCI theory, concepts and models; Interaction design theory, concepts and paradigms; Text input.

[...] Atom (2014) and Sublime Text (2008), for the more recent ones. Each editor offers some specific features (e.g. modal editing, syntax colorization, code folding, plugins, etc.) that is supposed to differentiate it from the others at time of release. There exists however one common trait for virtually all of text editors: they are written by and for developers. Consequently, design is generally not the primary concern and the final product does not usually enforce best recommendations in terms of appearance, interface design or even user interaction. The most striking example is certainly the syntax colorization that seems to go against every good design principles in a majority of text editors and the motto guiding design could be summarized by "Let's add more colors" (using regex). More generally, modern text editors comes with a large set of default [...]

Debugging Tcl in Visual Studio Code

Debugging Tcl in Visual Studio Code

Jonathan Cone, Software Developer, FlightAware. Project Source: https://github.com/conej730/vscode-tcl-debug

For developers without access to ActiveState's Tcl development tools there are few debugging tools available. It is possible to install a Tcl debugger based on ActiveState's ProDebugger in Eclipse, but Eclipse is not a very popular IDE for Tcl development. Visual Studio Code (VSC) is an open source text editor / IDE released in 2015 that is available on Windows, Mac and Linux. It supports snippets, syntax highlighting, code folding and many other features for a wide variety of languages through the installation of extensions. At FlightAware, some of the developers are using VSC for their Tcl development, as extensions already exist to add syntax highlighting and snippets for the Tcl language. Currently lacking, though, is a Tcl debugging extension.

Visual Studio Code supports a language-agnostic Debug Adapter Protocol, allowing any language to be debugged once an appropriate debug adapter is created. To add debug support to VSC for a new language, a debug adapter and debug extension are required (see Figure 1). The debug adapter acts as a bridge between VSC and the language's native debugger. It is usually a stand-alone program and communicates with the VSC UI over stdin/stdout using JSON messages based on the Debug Adapter Protocol (https://microsoft.github.io/debug-adapter-protocol/). Technically the debug adapter will be wrapped as a Debugger Extension, but this is done behind the scenes by VSC. When a debug session is initiated, VSC will launch the debug adapter and send it a "launch" or "attach" request.
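As a rough illustration of the JSON messages exchanged over stdin/stdout, the Python sketch below frames and parses a "launch" request using the Debug Adapter Protocol's base format: a `Content-Length` header, a blank line, then a UTF-8 JSON body. The helper names `encode_dap_message` and `decode_dap_message` are hypothetical, and the `program` argument is an assumed, adapter-specific field rather than something taken from the Tcl extension described here.

```python
import json
from typing import Tuple


def encode_dap_message(payload: dict) -> bytes:
    """Serialize a message as 'Content-Length: N', a blank line, then the JSON body."""
    body = json.dumps(payload, separators=(",", ":")).encode("utf-8")
    header = f"Content-Length: {len(body)}\r\n\r\n".encode("ascii")
    return header + body


def decode_dap_message(data: bytes) -> Tuple[dict, bytes]:
    """Parse one framed message; return it together with any unread trailing bytes."""
    header, separator, rest = data.partition(b"\r\n\r\n")
    if not separator:
        raise ValueError("incomplete header")
    length = None
    for line in header.split(b"\r\n"):
        name, _, value = line.partition(b":")
        if name.strip().lower() == b"content-length":
            length = int(value.strip())
    if length is None:
        raise ValueError("missing Content-Length header")
    if len(rest) < length:
        raise ValueError("incomplete body")
    return json.loads(rest[:length].decode("utf-8")), rest[length:]


# A request as the editor might send it when a debug session starts.
launch_request = {
    "seq": 1,
    "type": "request",
    "command": "launch",
    "arguments": {"program": "example.tcl"},  # hypothetical adapter-specific argument
}

if __name__ == "__main__":
    frame = encode_dap_message(launch_request)
    message, remaining = decode_dap_message(frame)
    print(message["command"], len(remaining))
```

A real adapter would read such frames from stdin in a loop, dispatch on `command`, and write framed responses and events to stdout.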