Trustworthy Privacy Indicators: Grades, Labels, Certifications, and Dashboards

Total Pages: 16

File Type: pdf, Size: 1020 Kb

Recommended publications

-

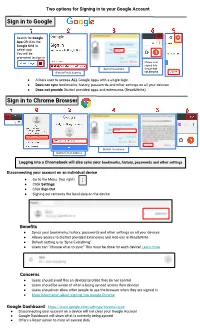

Signing in to Your Google Account File

Two options for signing in to your Google Account. Sign in to Google: Search for Google App OR click the Google Grid to select app. You will be prompted to sign in (District Username, District Email Address). Shows user signed into Google but not Chrome ● Allows user to access ALL Google Apps with a single login ● Does not sync bookmarks, history, passwords and other settings on all your devices ● Does not provide District provided apps and extensions (Read&Write). Sign in to Chrome Browser (District Username, District Email Address): Logging into a Chromebook will also sync your bookmarks, history, passwords and other settings. Disconnecting your account on an individual device ● Go to the Menu (top right) ● Click Settings ● Click Sign Out ● Signing out removes the local data on the device. Benefits ● Syncs your bookmarks, history, passwords and other settings on all your devices ● Allows access to District provided Extensions and Add-ons, i.e. Read&Write ● Default setting is to “Sync Everything” ● Users can “Choose what to sync”. This must be done for each device! Concerns ● Users should avoid this on devices/profiles they do not control ● Users should be aware of what is being synced across their devices ● Users should not allow other people to use the browser when they are signed in ● More information about signing into Google Chrome. Google Dashboard: https://www.google.com/settings/chrome/sync ● Disconnecting your account on a device will not clear your Google Account ● Google Dashboard will show what is currently being synced ● Offers a Reset option to clear all synced data. -

Noor Kharrat

Google and your Privacy By: Noor Kharrat Many take Google.com for granted. The most-used search website and the second most-visited site has recently come under criticism from many individuals and groups over its privacy practices. Google is based in Mountain View, California and is regulated according to US laws. This means that Google has to follow the USA Patriot Act, which is a controversial Act of Congress that U.S. President George Bush signed into law on October 26, 2001. All of Google's applications and services that it opens to the public are bound by that act. Google.ca, Google.co.uk, Google.cn all fall under google.com. The servers that hold international sites for Google are still located on US soil. The rights group "Privacy International" rated the search giant as "hostile" to privacy in a report ranking web firms by how they handle personal data. The group said Google was leading a "race to the bottom" among Internet firms, many of which had policies that did little to substantially protect users. (BBC news channel) Here are some of the reasons why Google was declared "hostile to privacy": • IP addresses are not considered personal information. They do not believe that they collect sensitive information. (privacyinternational.org, 2007) • Google maintains records of all search strings and the associated IP-addresses and time stamps for at least 18 to 24 months and does not provide users with an option. (privacyinternational.org, 2007) • Google has access to additional personal information, including hobbies, employment, address, and phone number, contained within user profiles in Orkut, which is a social network similar to the very popular Facebook. -

Google Sync Integration Guide

Google Sync Integration Guide VMware Workspace ONE UEM. You can find the most up-to-date technical documentation on the VMware website at: https://docs.vmware.com/ VMware, Inc. 3401 Hillview Ave. Palo Alto, CA 94304 www.vmware.com © Copyright 2020 VMware, Inc. All rights reserved. Copyright and trademark information. Contents: 1 Introduction to Google Sync Integration (Understanding Google Sync Integration; Requirements for Google Sync Deployment). 2 Google Sync Integration (Integrate Secure Email Gateway (V2) Proxy with Google; Configure Secure Email Gateway V2 With Workspace ONE UEM console; Configure Advanced Settings; Configure IP Restriction on Google Admin Console; Integrate Direct Model using Password Management; Configure Gmail Deployment With Password Retention; Configure Gmail Deployment Without Password Retention; Integrate Direct Model Using Directory APIs). 3 Create an Admin Role for Gmail Integration (Enable the Google API; Create a Service Account Certificate). 4 Email Management through Google Sync Integration (Device Management with Direct Password Management Integration; Device Management with Directory APIs Integration; Device Management with SEG Proxy Integration). Introduction to Google Sync Integration: Organizations using the Gmail infrastructure may be familiar with the challenge of securing email endpoints for Gmail and preventing mail from circumventing the secure endpoint. Workspace ONE UEM powered by AirWatch provides you with an end-to-end solution that enables you to fully integrate your Gmail infrastructure without compromising security. Integrating your Gmail infrastructure with Workspace ONE provides the following benefits: • Flexible configuration while maintaining tight integration • Email monitoring and management • Customizable access control • Google Sync support. For more information on Google Sync, see https://support.google.com/a/answer/135937?hl=en. -

Google Overview Created by Phil Wane

Google Overview Created by Phil Wane PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Tue, 30 Nov 2010 15:03:55 UTC Contents Articles Google 1 Criticism of Google 20 AdWords 33 AdSense 39 List of Google products 44 Blogger (service) 60 Google Earth 64 YouTube 85 Web search engine 99 User:Moonglum/ITEC30011 105 References Article Sources and Contributors 106 Image Sources, Licenses and Contributors 112 Article Licenses License 114 Google 1 Google [1] [2] Type Public (NASDAQ: GOOG , FWB: GGQ1 ) Industry Internet, Computer software [3] [4] Founded Menlo Park, California (September 4, 1998) Founder(s) Sergey M. Brin Lawrence E. Page Headquarters 1600 Amphitheatre Parkway, Mountain View, California, United States Area served Worldwide Key people Eric E. Schmidt (Chairman & CEO) Sergey M. Brin (Technology President) Lawrence E. Page (Products President) Products See list of Google products. [5] [6] Revenue US$23.651 billion (2009) [5] [6] Operating income US$8.312 billion (2009) [5] [6] Profit US$6.520 billion (2009) [5] [6] Total assets US$40.497 billion (2009) [6] Total equity US$36.004 billion (2009) [7] Employees 23,331 (2010) Subsidiaries YouTube, DoubleClick, On2 Technologies, GrandCentral, Picnik, Aardvark, AdMob [8] Website Google.com Google Inc. is a multinational public corporation invested in Internet search, cloud computing, and advertising technologies. Google hosts and develops a number of Internet-based services and products,[9] and generates profit primarily from advertising through its AdWords program.[5] [10] The company was founded by Larry Page and Sergey Brin, often dubbed the "Google Guys",[11] [12] [13] while the two were attending Stanford University as Ph.D. -

Google Chrome

What Google Knows About You and Your Devices AND HOW WE CAN GET IT Google Account Acquisition Extracting evidence from users’ Google Account: challenges, approaches and tools Vladimir Katalov ElcomSoft Ltd. www.elcomsoft.com Illuminating the Black Art of Security - October 19-21, 2015-Toronto. Who We Are • Privately held company, established in 1990 • 100% in‐house development • Microsoft Certified Partner • Intel Software Partner (Premier Elite) • Trusted NVIDIA developer • Over 300 partners/resellers on all continents • Six US patents (incl. GPU acceleration) • Corporate, government, military & forensic customers • Over 300,000 installations worldwide. Our Customers. Research Motivation • Curiosity • Privacy • The right to know • Government surveillance • Forensics • Backup and recovery. Top 10 fears of 2015 (Source: http://www.livescience.com/52535-american-fear-survey-2015.html). What This Presentation is NOT About • Hacking • Accessing someone else’s account • Compromising Google • Criminal activities • Profit. Most information used for this research is public -

It's Not Your Father's Funeral Home

Chapter 4: It’s Not Your Father’s Funeral Home 3 CE Hours By Staff Writer Objectives: Identify preplanning activities and guidelines to meet changing consumer demands. Identify components of personalization and strategies to achieve them. Discuss consumer demands and list options for funeral services and celebrations outside of the funeral home. Discuss the changing roles of women in the industry. Explain the changes in embalming and cremation, including alternative methods that are growing in popularity. Compare and contrast the services offered by publically-owned corporations and private family businesses. Define five technology strategies to expand market coverage and meet client needs. List innovative commemorative products for the disposition of remains. Overview: The funeral industry today is rapidly evolving to meet the changing demands of consumers. Businesses must stay current with industry changes to survive and this involves embracing technology and social media to expand the geographic area of service that was traditionally confined to a small local territory. Consumers today rely heavily on technology to compare and choose services and share information on social media. Consumers are seeking innovative ways to personalize services, celebrations, and memorials for themselves and their loved ones. … “one size fits all” approach, where the funeral director takes the lead in planning and offers traditional embalming, casket selection, viewing, memorial service, and burial options. Many of the new requests by consumers may seem controversial to some directors and communities, while seeming perfectly acceptable to others. It is important for the business to develop a spectrum of services to meet the diverse needs of the community and reflect the dynamic nature of the industry. -

RFP 1819-IT4 TV Setup Purchase & Deployment

HOLLISTER SCHOOL DISTRICT RFP 1819-IT4 TV Setup Purchase & Deployment Notice Inviting Proposals RFP DUE DATE: Friday, July 20, 2018 at 10:00:00 A.M. SUBMIT PROPOSALS TO: Technology Department HOLLISTER SCHOOL DISTRICT 2690 CIENEGA ROAD HOLLISTER, CA 95023 ATTN: DIRECTOR OF TECHNOLOGY NOTICE INVITING PROPOSALS RFP Number – 1819-IT4 – TV Setup Purchase & Deployment NOTICE IS HEREBY GIVEN that Hollister School District of San Benito County, California, will receive up to, but not later than 10:00:00 A.M., on July 20, 2018, Sealed Proposals for TV Setup Purchase & Deployment Proposals shall be received at: Hollister School District 2690 Cienega Road. Hollister, CA 95023 ATTN: Director of Technology RFP 1819-IT4 TV Setup Purchase & Deployment 1 Proposals will be received in the office of the Technology Department of the District at 2690 Cienega Rd, Hollister CA 95023, in accordance with this RFP. Each proposal must conform and be responsive to the requirements of this RFP, a copy of which is now on the District website, at www.hesd.org. Interested proposers should direct questions in writing to the District’s Director of Technology & Innovation, Jr Rayas, at [email protected] before July 13, 2018 at 2:00 pm. Answers to questions and any addenda, as needed, will be posted on the District website on the date specified in the Schedule, provided herein. The District reserves the right to waive any informalities or irregularities in received submittals. Also, District reserves the right to reject any or all submittals and to negotiate contract terms with one or more proposers for one or more work items. -

Logging Into Classlink Using the Quickcard (QR Code) and Chromebook

Parents, Here are a few instructions on how to log into your child’s Canvas and Classlink accounts from home. Canvas is our learning management system that supports online learning and teaching. Teachers will share information from class, including newsletters, assignments and upcoming information. Classlink houses Lexia Core 5, Splash Math, AR, McGraw Hill Wonders (reading program), Envision and other programs used at school. Logging into Classlink using the quickcard (QR Code) and Chromebook: 1. Open the Chromebook. 2. When the camera comes up, hold up your quickcard (pictured below). 3. Your child’s Classlink page should automatically load (pictured below). If you don’t have a quickcard/ chromebook, here is an alternative- (Logging into your student’s Google Account): 1. Click on your internet browser (Google Chrome is the best for this). 2. Open a new tab. 3. Click on the profile picture in the upper right corner. 4. Click on add another account. 5. Type in your child’s email address: _______________________________ 6. Type in your child’s password: _______________________________ Part 2 (Logging into ClassLink): 1. Enter the following URL: https://launchpad.classlink.com/tpsd 2. Once the page loads click “Log in with Google” 3. You can access Savvas EasyBridge (Envision Math), LEXIA: CORE 5, Canvas, Wonders, and other resources from this page. 4. It may be a good idea to bookmark this page, so you can find your way back quickly. (You can do this by clicking CTRL + D on Windows or CMD + D on Mac) Part 3 (Logging into McGraw-Hill/Wonders Reading): 1. In Classlink, click on the red McGraw Hill Education button 2. -

2018-08 Internet/Email SIG

2018-08 Internet/Email SIG GMAIL HAS CHANGED ITS WEBSITE! They have completely redone the site and added many new features. There will be a workshop that will cover all of the changes in detail. Here is a sneak preview. Open and sign into your Gmail account. Click on the Settings gear icon and choose the top option: “Try the new Gmail”. Why you may want to update your browser in the next 9 days - or NOW! It’s time for early Transport Layer Security (TLS) versions to die, die, die… which means that it’s time for all of us, if we haven’t already, to take our browsers, our projects and/or our organizations and upgrade, upgrade, upgrade. What happens on 30 June 2018? 30 June 2018 is the deadline for disabling SSL/early TLS and implementing a more secure encryption protocol – TLS 1.1 or higher (TLS v1.2 is strongly encouraged) – in order to meet the PCI Data Security Standard (PCI DSS) for safeguarding payment data. What is SSL/early TLS? Transport Layer Security (TLS) is a cryptographic protocol used to establish a secure communications channel between two systems. It is used to authenticate one or both systems, and protect the confidentiality and integrity of information that passes between systems. It was originally developed as Secure Sockets Layer (SSL) by Netscape in the early 1990s. Online merchants have until 30 June to support TLS 1.2 and HTTP/1.1: a kill date that was extended for these security sadsack protocols from the original June 2016 deadline, which the PCI Council decided that retailers weren’t going to make. -

Google Sync Integration Guide

Google Sync Integration Guide VMware Workspace ONE UEM. You can find the most up-to-date technical documentation on the VMware website at: https://docs.vmware.com/ VMware, Inc. 3401 Hillview Ave. Palo Alto, CA 94304 www.vmware.com © Copyright 2021 VMware, Inc. All rights reserved. Copyright and trademark information. Contents: 1 Introduction to Google Sync Integration; 2 Google Sync Integration; 3 Create an Admin Role for Gmail Integration; 4 Email Management through Google Sync Integration. Introduction to Google Sync Integration: Organizations using the Gmail infrastructure may be familiar with the challenge of securing email endpoints for Gmail and preventing mail from circumventing the secure endpoint. Workspace ONE UEM powered by AirWatch provides you with an end-to-end solution that enables you to fully integrate your Gmail infrastructure without compromising security. Integrating your Gmail infrastructure with Workspace ONE provides the following benefits: • Flexible configuration while maintaining tight integration • Email monitoring and management • Customizable access control • Google Sync support. For more information on Google Sync, see https://support.google.com/a/answer/135937?hl=en. Understanding Google Sync Integration Methods: Integrate Google Sync infrastructure using the Workspace ONE UEM end-to-end solution to securely integrate, access, and manage your email. To suit your business needs, you can integrate Google Sync in different ways by using the different deployment models offered by Workspace ONE UEM. The integration methods you choose require configuring certain settings on the UEM console. To learn more about these integration methods, see Chapter 2, Google Sync Integration. -

What Google Knows About You and Your Devices (And How to Get It) Vladimir Katalov ElcomSoft Ltd.

What Google knows about you and your devices (and how to get it) Vladimir Katalov ElcomSoft Ltd. www.elcomsoft.com Academia Sinica, Nankang, Taipei AUG 28-29, 2015. Research motivations • Curiosity • Privacy • The right to know • Government surveillance • Forensics • Backup and recovery. What this presentation is NOT about • Hacking • Accessing someone else’s account • Compromising Google • Criminal activities • Profit. Most information used for this research is public. Desktop vs Mobile forensics. Smartphone sales (Source: Gartner, August 2015). Enterprise tablet market (Source: Q2’2015 Mobility Index Report). Mobile vs Cloud forensics. Google mail • 900 million users (May’2015) • Monthly unique users: 90 million (2014) • Percentage of Americans using Gmail: 24% (2013) • Gmail app downloads from Google Play: 1 billion (2014) • Percentage of Gmail users working on mobile device: 75% (2015). Google Chrome • Google Chrome users: 1 billion (2015) • Percentage of web browser usage: 35% (2013). Android • Number of Android devices: 1 billion (2013). Apple iCloud • Introduced in Oct 2011 with iOS 5 -

Defending Mobile Devices for High Level Officials and Decision-Makers

Authors: Teemu Väisänen, Alexandria Farar, Nikolaos Pissanidis, Christian Braccini, Bernhards Blumbergs, and Enrique Diez. Defending mobile devices for high level officials and decision-makers. Tallinn 2015. This publication is a product of the NATO Cooperative Cyber Defence Centre of Excellence (the Centre). It does not necessarily reflect the policy or the opinion of the Centre or NATO. The Centre may not be held responsible for any loss or harm arising from the use of information contained in this publication and is not responsible for the content of the external sources, including external websites referenced in this publication. Digital or hard copies of this publication may be produced for internal use within NATO and for personal or educational use when for non‐profit and non‐commercial purpose, provided that copies bear a full citation. www.ccdcoe.org [email protected] 1. Abstract: High‐level officials and decision‐makers handle and store sensitive data with their own or with their organisations’ mobile devices. The sensitive data may be owned by the person him/herself or by the organisation. These users do not always follow security policies, creating a risk of leaking this sensitive data. It is often impossible to assess all the places where data is accessed and/or stored. The purpose of this study is to find mitigation mechanisms for a number of risks resulting from the usage of such systems without obeying security policies. The study was done by analysing usage scenarios; their actors and the assets to be secured; related mobile threats; suitable mitigation mechanisms; and threats lacking good enough mitigation mechanisms.