Pdf (April 8, 2009) Hahn, J., & Subramani, M

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

2016 FSFF Leaflet

Special thanks to… Paul Alderson and Joe Long from Fighters Inc. and the Combat and Strength Show, Milt at Asia Releasing, Mark at Park Circus, 20th Century Fox, Zee West, Charlotte Grogan, Mimi Devenish, Esmond Francis, Nick and Cineasia, Robert at Kaleidoscope, Marlon at Kush Films Online, Terracotta Films, Raj and the Kung Fu Kingdom crew, Will at WoMA, Time Out Online, Eastern Kicks, Impact online, Andrew Staton and the team at Martial Arts Illustrated, Kung Fu Movie Guide, Kung Fu Drive In Podcast, Rotten Tomatoes, Paul Bowman at Martial Studies, Spencer Murphy from East Winds Film Festival, Joshua Smith from London East Asian Film Festival, Bey Logan, Rick Baker and Toby Russell at Eastern Heroes, Alex Reid, Shifu Yanzi and the team at Shaolin Temple UK, Barry McGinlay, Lotus, Chris O’Connor and O2 Cineworld North Greenwich, Jay Cruz, Carl Powell, Paul Greer, Sally Ellis, Danny Aindow, Nic Ognibene, Parkour Generations, Paul Phear from Smooth FM, Chocolate Films, London Symphony, Jino Kang Films, Jingan Young, Marama Corlett, Jason Newell, Elliot Murray, Zaid, the Burleys, and my Dad Cole. Finally, a big big thanks to all the film makers, martial artists and those of you who supported the event. You can find us on Facebook: https://www.facebook.com/FightingSpiritFilmFestival/ Instagram: https://www.instagram.com/FightingSpiritFilmFestival/ Twitter: @FSFilmFestival

The 1st Fighting Spirit Film Festival. Saturday 16th July 2016, 10am-6pm, O2 Cineworld, SE10 0DX. £10 session, £25 day.

Welcome to the first… The 1st Fighting Spirit Film Festival: All Martial is proud to be presenting 3 main feature-length films, 7 short fiction films and 5 short documentary films.

Annual Report 2009/2010

I.T LIMITED ANNUAL REPORT 2009/2010

TABLE OF CONTENTS: Corporate Profile; I.T Positioning; Message from the Chairman; Financial Highlights; Management Discussion and Analysis; Biographies of Directors and Senior Management Team; Corporate Governance Report; Social Responsibilities; Report of the Directors; Independent Auditor’s Report; Financial Statements; Five Year Financial Summary.

CORPORATE PROFILE. I.T is well established as a TREND SETTER in the fashion apparel retail market in Hong Kong, with stores in the PRC, Taiwan, Macau, Thailand, Saudi Arabia, Australia, the Philippines, France and Germany. The Group has an extensive self-managed retail network extending to nearly 350 stores across Greater China, with around 3,700 staff.

I.T is not just a fashion icon. WE ACTUALLY LIVE FOR FASHION. Through the multi-brand and multi-layer business model, we offer a wide range of fashion apparel and accessories with different fashion concepts, sold at varying retail price points and targeted at different customer groups. I.T carries apparel from established and up-and-coming international designers’ brands, in-house brands and licensed brands.

IP MAN 3: Spectacular Martial Arts Movie with Donnie Yen and Boxing Legend Mike Tyson

Press release on the cinema release of IP MAN 3, the spectacular martial arts movie with Donnie Yen and boxing legend Mike Tyson » Third part of the famous IP MAN series » From 7 April 2016 in exclusive cinema screenings

Wiesbaden, March 2016. Spectacular martial arts, breathless suspense and fierce fight scenes: with the third part of the famous IP MAN movie series, the successful trio of Edmond Wong (screenwriter), Wilson Yip (director) and Raymond Wong Pak-ming (producer) once again ignite an explosive fight spectacle of superlatives. In gripping and unsparing fight scenes, the two leads, martial arts icon Donnie Yen (“Iceman”, “The Monkey King”) and boxing legend and two-time heavyweight boxing world champion “Iron Mike” Tyson, fight a brutal duel in a class of its own. With further top casting such as Lynn Hung (“All’s Well, Ends Well”, “IP MAN 2”), Zhang Jin (“The Grandmaster”, “Rise of the Legend”) and martial arts legend Bruce Lee, who is brought back to life as a CGI version, director Wilson Yip has delivered a spectacular continuation of the legendary IP MAN series. The film has its German premiere on 7 April 2016. Advance ticket sales start immediately.

Hong Kong, 1959: The legendary Wing Chun martial artist Ip Man leads a quiet life with his wife Wing-sing, who is in poor health, and his son Ip Ching. When the unscrupulous US property developer Frank turns up with his gang of thugs, the idyll ends abruptly. The cold-blooded street fighter Frank does everything he can to seize the land on which the school of Ip's son Ip Ching is built, whatever the cost. Together with the gifted Wing Chun competitor Cheung Tin-chi and the father of Ip Ching's classmate Cheung Fong, Master Ip Man fights for justice and tries by every means to save his son's school from the greedy Frank.

Further information: Deutscher Pressestern®, Bierstadter Straße 9 a, 65189 Wiesbaden, Sandra Hemmerling

Commencement 1991-2000

The Johns Hopkins University. Conferring of Degrees at the Close of the 119th Academic Year, May 25, 1995. Celebrating the 200th Anniversary of the Births of Johns Hopkins, 17 May 1795, and George Peabody, 18 February 1795.

For those wishing to take photographs of graduates receiving their diplomas, we request that you do so only in the area designated for this purpose, located just to the left of the stage. To reach this area, please walk around the outside of the tent, where ushers and security officers will assist you. Do not walk in the three center aisles as these are needed for the graduates. We appreciate your cooperation.

CONTENTS: Order of Procession; Order of Events; Divisional Diploma Ceremonies Information; Johns Hopkins Society of Scholars; Honorary Degree Citations; Academic Regalia; Awards; Honor Societies; Student Honors; Degree Candidates.

Digitized by the Internet Archive in 2012 with funding from LYRASIS Members and Sloan Foundation http://archive.org/details/commencement1995

Order of Procession. Marshals: Ronald A. Berk, Radoslaw Michalowski, Kyle Cunningham, Ann Marie Morgan, Robert E. Green, Jr., Nancy R. Norris, Bernard Guyer, Peter B. Petersen, H. Franklin Herlong, Robert Reid-Pharr, Frederick L. Holborn, Edyth H. Schoenrich, Thomas Lectka, Kathleen J. Stebe, Violaine Marie Melancon, Susan Wolf. The Graduates. Marshals: Edward John Bouwer, Katrina B. McDonald. The Faculties. Marshals: Wilda Anderson, Edward Scheinerman. The Deans. Members of the Society of Scholars. Officers of the University. The Trustees. Chief Marshal: Milton Cummings, Jr. The President of the Johns Hopkins University Alumni Association. The Chaplain. The Faculty Presenter of the Doctoral Candidates. The Honorary Degree Candidates. The Provost of the University. The Chairman of the Board of Trustees. The United States Senator from Maryland. The Queen of Thailand. The President of the University.

Order of Events. Greetings: Douglas A.

WEEK 4: Sunday, 19 January

WEEK 4: Sunday, 19 January - Saturday, 25 January 2020. ALL MARKETS

Columns: Date; Start Time; Genre; Title; TV Guide Text; Country of Origin; Language; Year; Repeat; Classification; Consumer Advice; Subtitles; Closed Captions

2020-01-19, 0555, Comedy, Asterix And Obelix In Britain (FRANCE; French-100; 2013; RPT; PG; Y): Edouard Baer and Gerard Depardieu star as comic book duo Asterix and Obelix in this zany adventure, which sees Asterix cross the channel to help his second-cousin Anticlimax face Caesar and the invading Romans.

2020-01-19, 0800, Drama, Dil Dhadakne Do (INDIA; Hindi-60; English-40; 2015; RPT; PG; a l v; Y): On a cruise to celebrate their parents' 30th wedding anniversary, a brother and sister deal with the impact of family considerations on their romantic lives.

2020-01-19, 1110, Drama, Viceroy's House (INDIA; English-100; 2017; RPT; PG; a; Y; Y): In 1947, Lord Mountbatten assumes the post of last Viceroy, charged with handing India back to its people, living upstairs at the house which was the home of British rulers, whilst 500 Hindu, Muslim and Sikh servants lived downstairs.

2020-01-19, 1310, History, Loving Vincent (POLAND; English-100; 2017; RPT; M; a): Loving Vincent, the world’s first fully painted feature film, brings the paintings of Vincent van Gogh to life to tell his remarkable story. Every frame of the film, totalling around 65,000, is an oil painting hand-painted by 125 professional oil painters who travelled from around the world to the Loving Vincent studios in Poland and Greece to be a part of the production.

SMI Culture Group Holdings Limited 星美文化集團控股

Hong Kong Exchanges and Clearing Limited and The Stock Exchange of Hong Kong Limited take no responsibility for the contents of this announcement, make no representation as to its accuracy or completeness and expressly disclaim any liability whatsoever for any loss howsoever arising from or in reliance upon the whole or any part of the contents of this announcement. SMI Culture Group Holdings Limited 星美文化集團控股有限公司 (Incorporated in the Cayman Islands and continued in Bermuda with limited liability) (Stock Code: 2366) VOLUNTARY ANNOUNCEMENT IN RELATION TO THE DISTRIBUTION INVESTMENT AGREEMENT The board (the “Board”) of directors (the “Directors”) of SMI Culture Group Holdings Limited (the “Company”) is pleased to announce that on 4 March 2016, SMI Culture Workshop Company Limited (“SMI Culture Workshop”), a wholly-owned subsidiary of the Company, entered into a distribution investment agreement (the “Agreement”) with an independent third party (the “Investor”) pursuant to which SMI Culture Workshop and the Investor agreed to jointly invest in the distribution of the movie《葉問3》(Ip Man 3) (the “Movie”) in the People’s Republic of China (the “PRC”). The Investor has acquired the joint distribution rights of the Movie in the PRC in the principal amount of RMB117 million. The Movie was released in the PRC on 4 March 2016. The Movie is directed by Mr. Wilson Yip (葉偉信) and stars Mr. Donnie Yen (甄子丹), Mr. Zhang Jin (張晉), Ms. Lynn Hung (熊黛林), Mr. Patrick Tam (譚耀文) and Mr. Mike Tyson. Pursuant to the Agreement, SMI Culture Workshop will invest HK$29.5 million in the distribution of the Movie and will be entitled to the income generated from the distribution of the Movie in proportion to its investment amount under the Agreement.

Movie Museum FEBRUARY 2011 COMING ATTRACTIONS

THURSDAY 3: Hawaii Premiere! ECHOES OF THE RAINBOW (2010-Hong Kong), in Cantonese with English subtitles & in widescreen, with Sandra Ng Kwan Yue, Simon Yam, Aarif Lee, Buzz Chung, Paul Chun. Written and Directed by Alex Law. 12:15, 2:30, 4:45, 7:00 & 9:15pm

FRIDAY 4: Chinese New Year. IP MAN (2008-Hong Kong), in Cantonese with English subtitles & in widescreen, with Donnie Yen, Simon Yam, Lynn Hung, Siu-Wong Fan, Ka Tung Lam, Yu Xing. Directed by Wilson Yip. 12:30, 2:30, 4:30, 6:30 & 8:30pm

SATURDAY 5: YOUSSOU N'DOUR: RETURN TO GORÉE (2007-Switzerland/Luxembourg), in English/French/Wolof w/ English subtitles, widescreen, with Youssou N'Dour, Moncef Genoud, Boubacar Joseph Ndiaye, Idris Muhammad. Directed and Co-written by Pierre-Yves Borgeaud. 12:30, 2:30, 4:30, 6:30 & 8:30pm

SUNDAY 6: IP MAN (2008-Hong Kong), in Cantonese with English subtitles & in widescreen, with Donnie Yen, Simon Yam, Lynn Hung, Siu-Wong Fan, Ka Tung Lam, Yu Xing. Directed by Wilson Yip. 12:30, 2:30, 4:30, 6:30 & 8:30pm

MONDAY 7: Hawaii Premiere! DOGTOOTH aka Kynodontas (2009-Greece), in Greek with English subtitles & in widescreen, with Christos Stergioglou, Michele Valley, Aggeliki Papoulia, Mary Tsoni. Directed and Co-written by Giorgos Lanthimos. 12:30, 2:30, 4:30, 6:30 & 8:30pm

Hawaii Premiere! Hawaii Premiere! Valentine's Day 4:30 MONA LISA IT'S KIND OF A NIGHTJOHN (1996) MIGRATORY BIRD (2005-Singapore) (1986-UK) in widescreen with Bob Hoskins, FUNNY STORY with Beau Bridges, Allison aka Hou Niao in Mandarin with English Jones, Carl Lumbly.

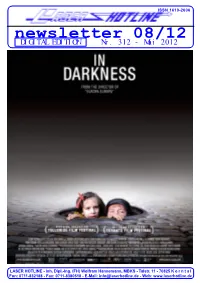

Newsletter 08/12 DIGITAL EDITION Nr

ISSN 1610-2606 newsletter 08/12 DIGITAL EDITION No. 312 - May 2012 Michael J. Fox Christopher Lloyd LASER HOTLINE - Proprietor: Dipl.-Ing. (FH) Wolfram Hannemann, MBKS - Talstr. 11 - 70825 Korntal Phone: 0711-832188 - Fax: 0711-8380518 - E-Mail: [email protected] - Web: www.laserhotline.de

Editorial. Hello Laserdisc and DVD fans, dear film friends! Once again it was simply grand! And the emphasis is definitely on “grand”, because after an entire weekend at the Pictureville Cinema in Bradford, the pictures in Stuttgart's cinemas look small by comparison. Those are the risks and side effects that we feel most keenly when we have just returned home from the “Widescreen Weekend”. As always, the days at one of the best cinemas in the world were breathtaking and great fun. A few weeks ago the technology there was also brought up to date. The “Pictureville” can now not only project digitally at 4K resolution but also use the new Dolby Surround 7.1 sound. The new technology was demonstrated with a screening of Ron Fricke's spiritual knockout SAMSARA. Shot on 65mm negative film, scanned at 8K resolution, and mastered and projected at the maximum possible resolution of 4K, SAMSARA is likely to be the new reference in…

…would like to experience the feeling of Cinerama, Dave Strohmaier and Randy Gitsch had the fitting answer.

Anyone who believed that, with the sound technology in their own home cinema, they were already at the…

Ip Man 2 (2010): Second Chapter in the Saga of Bruce Lee's Master, This Time Up Against the Boxing of the English Colonialists

Ip Man 2 (2010). Second chapter in the saga of Bruce Lee's master, this time up against the boxing of the English colonialists. A film by Wilson Yip with Donnie Yen, Lynn Hung, Simon Yam, Sammo Kam-Bo Hung, Xiaoming Huang, Siu-Wong Fan, Kent Cheng, Darren Shahlavi, Amber Chia, Jiang Dai-Yan. Genre: action. Running time: 109 minutes. Production: Hong Kong, 2010. Despite countless financial difficulties and the hostile attitude of the other kung fu schools, Ip Man perseveres with his plan and founds a school to spread the Wing Chun style.

Emanuele Sacchi - www.mymovies.it

It feels like a return to the good old days of Hong Kong cinema of the '70s and '80s, crude to the point of ignorance but inexhaustibly creative, the cinema that, with unmistakable racism, sometimes portrayed the English (or Westerners in general) as troglodytes of feral ignorance, usually addressing them as "devils". Imagining something like that mixed with quotations from none other than 'Rocky IV' can only produce results whose taste borders on trash, but it is also on this healthy coarseness that a project like the 'Ip Man' saga feeds. The saga of Ip Man, Bruce Lee's master and reinventor of the Wing Chun martial arts style, reaches its second chapter in a riot of duels, brawls and displays of martial arts skill. And if the matches in the second half of the film between the English boxer and the kung fu 'sifu' should be viewed in the light described above, the first half, centred on Ip Man's laborious efforts to spread Wing Chun and on the duels between him and the other masters, belongs to the state of the art of the genre.

NOTHING but KLEZMER a Klezmer Concert Sings and Swings, Laughs and Cries

Taipei, and the Children Orchestra Summer Concert in Changhua city. Chien-I is currently pursuing her Master’s degree at Lynn University Conservatory of Music, where she is studying with Dr. Roberta Rust.

Dongfang Zhang, pianist, was born in Suzhou, Jiangsu Province, China in 1984. Mr. Zhang started to learn piano at the age of 4. At 15 he studied with Professor Chuan Qin at a high school affiliated to Nanjing Arts Institute. In 2003 he entered Shanghai Normal University as an undergraduate, studying with Professor Meige Lee for all four years and earning his Bachelor's degree in 2007. He has participated in various concerts and competitions since the age of 12. Mr. Zhang obtained the Shanghai Musicians’ Association’s Piano Performance Level 10 for Amateurs (Highest Level) at 13. He has performed many famous pieces in various concerts at the prestigious Lyceum Theatre, Shanghai. As a young pianist, Mr. Zhang has won many prizes, such as at the 4th Pearlriver Cup National Music Contest for Music Major Students in China. Presently, Mr. Zhang is a PPC (Professional Performance Certificate) student at Lynn University Conservatory, studying collaborative piano with Tao Lin.

Upcoming Events. Dean’s Showcase No. 3. Friday & Saturday, December 12 and 13. NOTHING BUT KLEZMER. A Klezmer concert sings and swings, laughs and cries. Paul Green and his band Klezmer East will make you do all of that as they share with you the great works of this rapidly expanding art form. Classics of the Klezmer tradition will be performed along with new pieces from the Klezmer repertoire.

48Th Annual ISCSC Conference

Comparative Civilizations Review, Volume 79, Number 79, Fall 2018, Article 8 (10-2018). 48th Annual ISCSC Conference. Follow this and additional works at: https://scholarsarchive.byu.edu/ccr Part of the Comparative Literature Commons, History Commons, International and Area Studies Commons, Political Science Commons, and the Sociology Commons. Recommended Citation: (2018) "48th Annual ISCSC Conference," Comparative Civilizations Review: Vol. 79: No. 79, Article 8. Available at: https://scholarsarchive.byu.edu/ccr/vol79/iss79/8 This Article is brought to you for free and open access by the Journals at BYU ScholarsArchive. It has been accepted for inclusion in Comparative Civilizations Review by an authorized editor of BYU ScholarsArchive. For more information, please contact [email protected], [email protected].

48th Annual ISCSC Conference. Soochow University, Suzhou, China. June 15-17, 2018. Lynn Rhodes, Introductory Comments.

Thank you for the warm welcome and to all our very special hosts here in Suzhou for the 48th Annual ISCSC Conference. I’d like to share a little history of the ISCSC as we begin this conference. In October 1961 an extraordinary group of scholars gathered in Salzburg, Austria to create the International Society for the Comparative Study of Civilizations (ISCSC). There were 26 founding members from Austria, Germany, France, Switzerland, the Netherlands, Spain, Italy, England, Russia, the United States, China and Japan. Among them were brilliant

Poof : : Caleb Nelson University of Massachusetts Boston

University of Massachusetts Boston, ScholarWorks at UMass Boston. Graduate Masters Theses; Doctoral Dissertations and Masters Theses. 6-1-2015. : : poof : : Caleb Nelson, University of Massachusetts Boston. Follow this and additional works at: http://scholarworks.umb.edu/masters_theses Part of the American Literature Commons, Communication Commons, and the Fine Arts Commons. Recommended Citation: Nelson, Caleb, ": : poof : :" (2015). Graduate Masters Theses. Paper 325. This Open Access Thesis is brought to you for free and open access by the Doctoral Dissertations and Masters Theses at ScholarWorks at UMass Boston. It has been accepted for inclusion in Graduate Masters Theses by an authorized administrator of ScholarWorks at UMass Boston. For more information, please contact [email protected].

: : POOF : : SHORT STORIES. Master’s Thesis by CALEB NELSON. Submitted to the Office of Graduate Studies, University of Massachusetts Boston, in partial fulfillment of the requirements for the degree of Master of Arts in English. June 2015. Creative Writing Program. Copyright © 2015 Caleb Nelson. All rights reserved.

Approved as to style and content by: Jill McDonough, Assistant Professor of English; John Fulton, Associate Professor of English; Daphne Kalotay, Visiting Writer; Elizabeth Klimasmith, English Graduate Program Director; Cheryl Nixon, Department Chair of English.

ABSTRACT. : : POOF : : SHORT STORIES. June 2015. Caleb Nelson, B.A., University of Massachusetts Boston; M.A., University of Massachusetts Boston. Directed by Professor Jill McDonough.

Storytellers have an interdependent relationship with their narratives. If you have ever told a lie, you understand.
Stories take on a life of their own, as you consider the potential ramifications of each contingent piece.