Unit 4 Indexing Systems and Techniques

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Natural Language Processing Technique for Information Extraction and Analysis

International Journal of Research Studies in Computer Science and Engineering (IJRSCSE), Volume 2, Issue 8, August 2015, pp. 32-40. ISSN 2349-4840 (Print), ISSN 2349-4859 (Online). www.arcjournals.org

Authors: T. Sri Sravya (1), T. Sudha (2), M. Soumya Harika (3)
(1) M.Tech (C.S.E), Sri Padmavati Mahila Visvavidyalayam (Women's University), School of Engineering and Technology, Tirupati. [email protected]
(2) Head (I/C) of C.S.E & IT, Sri Padmavati Mahila Visvavidyalayam (Women's University), School of Engineering and Technology, Tirupati. [email protected]
(3) M.Tech C.S.E, Assistant Professor, Sri Padmavati Mahila Visvavidyalayam (Women's University), School of Engineering and Technology, Tirupati. [email protected]

Abstract: In the current internet era, a large number of systems and sensors generate data continuously and inform users about their own status and the status of the devices and surroundings they monitor. Examples include web cameras at traffic intersections and key government installations, seismic activity measurement sensors, tsunami early warning systems and many others. The current project, a Natural Language Processing based activity, aims to extract entities from data collected from various sources such as social media, internet news articles and other websites, to integrate this data into contextual information, to visualize it on a map, and to perform co-reference analysis that establishes links among the entities.

Keywords: Apache Nutch, Solr, crawling, indexing

1. INTRODUCTION
In today's harsh global business arena, the pace of events has increased rapidly, with technological innovations occurring at ever-increasing speed and with considerably shorter life cycles.

United States Patent 6,094,649 (Bowen et al.)

US006094649A
United States Patent [19], Bowen et al.
[11] Patent Number: 6,094,649
[45] Date of Patent: Jul. 25, 2000
[54] KEYWORD SEARCHES OF STRUCTURED DATABASES
[75] Inventors: Stephen J Bowen, Sandy; Don R Brown, Salt Lake City, both of Utah
[73] Assignee: PartNet, Inc., Salt Lake City, Utah
[21] Appl. No.: 08/995,700
[22] Filed: Dec. 22, 1997
[51] Int. Cl.: G06F 17/30
[52] U.S. Cl.: 707/3; 707/5; 707/4
[58] Field of Search: 707/1, 2, 3, 4, 5, 531, 532, 500
[56] References Cited, U.S. PATENT DOCUMENTS
5,375,235 12/1994 Berry et al. ...
5,469,354 11/1995 Hatakeyama et al. 707/3
5,546,578 8/1996 Takada 707/5
5,685,003 11/1997 Peltonen et al. 707/531
5,787,295 7/1998 Nakao 707/500
5,787,421 7/1998 Nomiyama ...
Other publications: "Charles Schwab Broadens Deployment of Fulcrum-Based Corporate Knowledge Library Application", Unknown, Fulcrum Technologies Inc., Mar. 3, 1997, pp. 1-3. (List continued on next page.)
Primary Examiner: Hosain T. Alam
Assistant Examiner: Thuy Pardo
Attorney, Agent, or Firm: Computer Law----

[57] ABSTRACT
Methods and systems are provided for supporting keyword searches of data items in a structured database, such as a relational database. Selected data items are retrieved using an SQL query or other mechanism. The retrieved data values are documented using a markup language such as HTML. The documents are indexed using a web crawler or other indexing agent. Data items may be selected for indexing by identifying them in a data dictionary. The indexing agent
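The pipeline in the patent abstract (retrieve rows with SQL, write them out as HTML documents, index the documents) can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the patented method: the `parts` table, its columns, and the function names are hypothetical, the `columns` argument stands in for the data dictionary, and a plain in-memory word index stands in for the web crawler or indexing agent.

```python
import html
import re
import sqlite3
from collections import defaultdict


def build_keyword_index(conn, table, columns):
    """Render each selected row as a small HTML document and index its words.

    `table` and `columns` are trusted, hypothetical names that play the role
    of the data dictionary entries selecting what gets indexed.
    """
    documents = {}            # row id -> HTML document text
    index = defaultdict(set)  # word -> set of row ids
    query = f"SELECT rowid, {', '.join(columns)} FROM {table}"
    for rowid, *values in conn.execute(query):
        cells = "".join(
            f"<dt>{html.escape(col)}</dt><dd>{html.escape(str(val))}</dd>"
            for col, val in zip(columns, values)
        )
        documents[rowid] = f"<html><body><dl>{cells}</dl></body></html>"
        for val in values:
            for word in re.findall(r"[a-z0-9]+", str(val).lower()):
                index[word].add(rowid)
    return documents, index


def keyword_search(index, text):
    """Return the ids of rows whose documents contain every query word."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    if not words:
        return set()
    result = set(index.get(words[0], set()))
    for word in words[1:]:
        result &= index.get(word, set())
    return result


# Hypothetical sample data, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE parts (name TEXT, description TEXT)")
conn.executemany(
    "INSERT INTO parts VALUES (?, ?)",
    [("hex bolt", "steel bolt, 10 mm"), ("wing nut", "brass nut, 8 mm")],
)
documents, index = build_keyword_index(conn, "parts", ["name", "description"])
```

With this sample data, `keyword_search(index, "steel bolt")` matches only the first row, while `keyword_search(index, "mm")` matches both. The search never touches the database itself, only the index built from the generated documents.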

1.3.4 Web Technologies Concise Notes

OCR Computer Science A Level 1.3.4 Web Technologies Concise Notes
www.pmt.education

Specification
1.3.4 a) ● HTML ● CSS ● JavaScript
1.3.4 b) ● Search engine indexing
1.3.4 c) ● PageRank algorithm
1.3.4 d) ● Server and Client side processing

Web Development

HTML
● HTML is the language/script that web pages are written in.
● It allows a browser to interpret and render a webpage for the viewer by describing the structure and order of the webpage.
● The language uses tags written in angle brackets (<tag>, </tag>). A webpage has two sections, a head and a body.

HTML Tags
● <html> : All code written within these tags is interpreted as HTML.
● <body> : Defines the content in the main browser content area.
● <link> : Links to a CSS stylesheet (explained later in the notes).
● <head> : Defines the browser tab or window heading area.
● <title> : Defines the text that appears in the tab or window heading area.
● <h1>, <h2>, <h3> : Heading styles in decreasing sizes.
● <p> : A paragraph separated by a line space above and below.
● <img> : Self-closing image tag with parameters (img src = location, height = x, width = y).
● <a> : Anchor tag defining a hyperlink with location parameters (<a href= location> link text </a>).
● <ol> : Defines an ordered list.
● <ul> : Defines an unordered list.
● <li> : Defines an individual list item.
● <div> : Divides a page into separate areas, each of which can be referred to uniquely by name (<div id= "page">).

Classes and Identifiers
● Class and identifier selectors are the names that styles are attached to, which means that groups of items can be styled together. In HTML, the selectors are usually applied to div tags.
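To make the tag list concrete, the short Python sketch below holds a minimal page in a string and walks it with the standard-library `html.parser` module, much as a browser or an indexer would before doing anything else with it. The page content, the `TagLister` class and the `https://example.com` link are made up for illustration and are not part of the original notes.

```python
from html.parser import HTMLParser

# A minimal, hypothetical page using several of the tags listed above.
PAGE = """<html>
<head><title>Demo page</title></head>
<body>
<div id="page">
<h1>Heading</h1>
<p>A paragraph with a <a href="https://example.com">link</a>.</p>
<ul><li>first</li><li>second</li></ul>
</div>
</body>
</html>"""


class TagLister(HTMLParser):
    """Record every opening tag and every id attribute, in document order."""

    def __init__(self):
        super().__init__()
        self.tags = []
        self.ids = []

    def handle_starttag(self, tag, attrs):
        self.tags.append(tag)
        for name, value in attrs:
            if name == "id":
                self.ids.append(value)


lister = TagLister()
lister.feed(PAGE)
print(lister.tags)  # opening tags in the order they appear
print(lister.ids)   # identifiers that a stylesheet could select on
```

The `ids` list is the hook that the "Classes and Identifiers" section relies on: a stylesheet refers to the division by the name given in `<div id="page">`.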

Exploring Search Engine Optimization (SEO) Techniques for Dynamic Websites

Master Thesis, Computer Science. Thesis no: MCS-2011-10. March 2011.

Wasfa Kanwal
School of Computing, Blekinge Institute of Technology, SE-371 39 Karlskrona, Sweden

This thesis is submitted to the School of Computing at Blekinge Institute of Technology in partial fulfillment of the requirements for the degree of Master of Science in Computer Science. The thesis is equivalent to 20 weeks of full time studies.

Contact Information:
Author: Wasfa Kanwal. E-mail: [email protected]
University advisor: Martin Boldt, PhD, School of Computing
School of Computing, Blekinge Institute of Technology, SE-371 39 Karlskrona, Sweden. Internet: www.bth.se/com. Phone: +46 455 38 50 00. Fax: +46 455 38 50 57.

ABSTRACT
Context: With the growing number of online businesses, Search Engine Optimization (SEO) has become vital because it is a key factor in marketing an online business. SEO is the process of optimizing a website so that it ranks well on Search Engine Result Pages (SERPs). Dynamic websites are commonly used for e-commerce because they are easier to update and expand; however, they are subject to indexing-related problems.
Objectives: This research aims to examine and address the indexing-related issues of dynamic websites. To achieve these aims and objectives, I intend to explore indexing considerations for dynamic websites, investigate SEO tools for carrying out an SEO campaign on three major search engines (Google, Yahoo and Bing), experiment with SEO techniques, and determine to what extent dynamic websites can be made search engine friendly on these major search engines.

Check out Our Initial Report Example Here

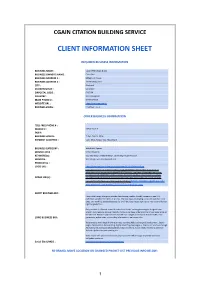

CGAIN CITATION BUILDING SERVICE: CLIENT INFORMATION SHEET

REQUIRED BUSINESS INFORMATION
BUSINESS NAME : CGain Web Design & SEO
BUSINESS OWNER'S NAME : Chris Giles
BUSINESS ADDRESS 1 : Millennium House
BUSINESS ADDRESS 2 : 161 Mowbray Drive
CITY : Blackpool
STATE/PROVINCE : Lancashire
ZIP/POSTAL CODE : FY3 7UN
COUNTRY : United Kingdom
MAIN PHONE # : 01253 675593
WEBSITE URL : https://www.cgain.co.uk
BUSINESS EMAIL : [email protected]

OTHER BUSINESS INFORMATION
TOLL FREE PHONE # :
MOBILE # : 07518 931616
FAX # :
BUSINESS HOURS : 7 days, 9am to 10pm
PAYMENT ACCEPTED : Cash, BACS, Paypal, Visa, Mastercard
BUSINESS CATEGORY : Web & SEO Agency
SERVICE AREA : United Kingdom
KEYWORD(s) : seo, web design, website design, search engine optimisation
SERVICES : Web design, web development, seo
PRODUCT(s) :
LOGO URL : https://www.cgain.co.uk/wp-content/uploads/2019/10/650x520.jpg
IMAGE URL(s) : https://www.cgain.co.uk/wp-content/uploads/2020/06/owllegal-website.jpg, https://www.cgain.co.uk/wp-content/uploads/2020/06/weelz_.jpg, https://www.cgain.co.uk/wp-content/uploads/2020/06/mobility-scooters-blackpool.jpg, https://www.cgain.co.uk/wp-content/uploads/2020/06/quickcrepesburnley.jpg, https://www.cgain.co.uk/wp-content/uploads/2019/03/fleet-equestrian-website-2018.jpg, https://www.cgain.co.uk/wp-content/uploads/2019/03/magellan.jpg
SHORT BUSINESS BIO : CGain Web Design Blackpool provide class-leading, mobile-friendly, responsive and fully optimised websites for clients of all sizes. We have been developing successful websites since 1995. Our work has covered websites for small local businesses right up to international human rights organisations. Every website is different, honed to reflect our clients' existing branding or designed from scratch.

How Does Google Work?

With Genealogy Links: http://www.ci.oswego.or.us/library/genealogy-presentation

Google's Computers: Google uses many computers, known as "servers", to administer (serve) Google services. Google likely has around one million servers for different functions, including analyzing search queries and delivering search results. One of its largest "server farms" is located in The Dalles, Oregon. Google is very secretive about how many servers it actually has. When you run a search with Google, you'll notice that Google brags that it brings back your results in a fraction of a second, for example: About 11,700,000 results (0.27 seconds). This speed is the result of many of Google's servers working together on your search.

Indexing the Web: Google browses the visible web and creates and re-creates an index of the websites it browses. When you run a Google search you aren't searching the Web itself, but instead Google's index of sites, with links to the live pages, as it stood when Google last indexed the web. Google has the largest index of web pages of any search engine, indexing over 55 billion web pages! When Google indexes the web it makes a copy of each site for its "cache". When you search with Google and come across a website that is currently down, you can hover over the site in the search results, then over the double arrows, and then click "cached" to see what the site looked like when it was last indexed, before it went down. This is useful for finding information that "was just there yesterday!".
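The two ideas in this passage, searching an index rather than the live web and keeping a cached copy of each page, can be illustrated with a toy Python class. This is a minimal sketch and says nothing about how Google is actually built; the class name, its methods and the `example.org` URL are all hypothetical.

```python
import re


class TinySearchIndex:
    """Toy index: a word -> URLs map plus a cached copy of each indexed page."""

    def __init__(self):
        self.postings = {}  # word -> set of URLs containing that word
        self.cache = {}     # URL -> page text as it was when last indexed

    def index_page(self, url, text):
        # Re-indexing a page first drops its old entries, then stores the new copy.
        for urls in self.postings.values():
            urls.discard(url)
        self.cache[url] = text
        for word in set(re.findall(r"[a-z0-9]+", text.lower())):
            self.postings.setdefault(word, set()).add(url)

    def search(self, word):
        # Only the index is consulted; the live web is never contacted here.
        return sorted(self.postings.get(word.lower(), set()))

    def cached(self, url):
        return self.cache.get(url)


# A one-page stand-in for the live web (hypothetical URL and text).
live_web = {"http://example.org/a": "Genealogy records for Oswego"}

idx = TinySearchIndex()
for url, text in live_web.items():
    idx.index_page(url, text)

del live_web["http://example.org/a"]  # the site "goes down" after indexing
hits = idx.search("genealogy")
snapshot = idx.cached("http://example.org/a")
```

Even though the page has been removed from `live_web`, the search still returns its URL and `snapshot` still holds the text as it was at indexing time, which is the behaviour the "cached" link exposes.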

Metadata Statistics for a Large Web Corpus

Peter Mika, Yahoo! Research, Diagonal 177, Barcelona, Spain. [email protected]
Tim Potter, Yahoo! Research, Diagonal 177, Barcelona, Spain. [email protected]

ABSTRACT
We provide an analysis of the adoption of metadata standards on the Web based on a large crawl of the Web. In particular, we look at what forms of syntax and vocabularies publishers are using to mark up data inside HTML pages. We also describe the process that we have followed and the difficulties involved in web data extraction.

1. INTRODUCTION
Embedding metadata inside HTML pages is one of the ways to publish structured data on the Web, often preferred by publishers and consumers over other methods of exposing structured data, such as publishing data feeds, SPARQL endpoints or RDF/XML documents.

[...] are a number of factors that complicate the comparison of results. First, different studies use different web corpora. Our earlier study used a corpus collected by Yahoo!'s web crawler, while the current study uses a dataset collected by the Bing crawler. Bizer et al. analyze the data collected by http://www.commoncrawl.org, which has the obvious advantage that it is publicly available. Second, the extraction methods may differ. For example, there are a multitude of microformats (one for each object type) and although most search engines and extraction libraries support the popular ones, different processors may recognize a different subset. Unlike the specifications of microdata and RDFa published by the W3C, the microformat specifications are also rather informal and thus different processors may extract different
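As a rough illustration of what measuring metadata adoption involves, the Python sketch below counts elements that carry microdata, RDFa or microformat markup in a single HTML page. It is not the authors' extraction pipeline: the attribute checks are deliberately simplistic, the `MICROFORMAT_CLASSES` set is a small hypothetical sample of root class names, and the sample markup is invented.

```python
from collections import Counter
from html.parser import HTMLParser

# A small, illustrative sample of microformat root class names (an assumption).
MICROFORMAT_CLASSES = {"vcard", "vevent", "hreview"}


class MetadataSyntaxCounter(HTMLParser):
    """Count elements that carry microdata, RDFa or microformat markup."""

    def __init__(self):
        super().__init__()
        self.counts = Counter()

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if "itemscope" in attr or "itemprop" in attr:
            self.counts["microdata"] += 1
        if "typeof" in attr or "property" in attr or "vocab" in attr:
            self.counts["rdfa"] += 1
        classes = set((attr.get("class") or "").split())
        if classes & MICROFORMAT_CLASSES:
            self.counts["microformat"] += 1


def count_syntaxes(html_text):
    parser = MetadataSyntaxCounter()
    parser.feed(html_text)
    return parser.counts


SAMPLE = """
<div itemscope itemtype="http://schema.org/Person"><span itemprop="name">Ann</span></div>
<div vocab="http://schema.org/" typeof="Person"><span property="name">Bob</span></div>
<div class="vcard"><span class="fn">Cy</span></div>
"""

counts = count_syntaxes(SAMPLE)
```

Changing which attributes or class names count as a hit changes the totals, which is one small instance of the point made above: different processors recognize different subsets, so their statistics are hard to compare.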

SEO Prices- Start-Up SEO :- Free SEO Step-1 Payment SEO Step-2

www.ITsupportseo.co.uk

SEO Investments: As Google continues to dominate as the world's biggest search engine, companies are increasing their spending on digital marketing services in order to reach the first page of Google's search results. If you are considering investing in search engine optimization (SEO), it may be hard to decide where to spend money, especially when marketing budgets are always tight. Still, SEO firms can potentially bring a lot of value to your business, but not every company can deliver the result you need. We have a specialist SEO team who can give you the best result. We believe every business needs to know what happens to its investment and how it will get that investment back. We use a three-step strategy: the investment is made in two steps, and the return starts from the 5th month.

SEO Prices
Start-up SEO : Free
SEO Step-1 Payment : £1500, first immediate payment, completion in 10 days
SEO Step-2 Payment : £1500, 2nd payment after 30 days, completion in 30+80 days
SEO Step-3 Payment : £350 per month, continues

Payment schedule
SEO Step-1 : first immediate payment
SEO Step-2 : payment after the first month
SEO Step-3 : payment in the first week of the 3rd month

Start-up SEO
Initial Website Analysis
Homepage Analysis
Keyword Research
Internal Page Analysis
Backlink Check

Extending Full Text Search Engine for Mathematical Content

Jozef Mišutka and Leo Galamboš
Charles University in Prague, Ke Karlovu 3, 121 16 Prague, Czech Republic
E-mail: [email protected]

Abstract. The WWW has become the main resource of mathematical knowledge. Currently available full text search engines can be used on these documents, but they are deficient in almost all cases. By applying axioms and equivalent transformations, and by using different notation, each formula can be expressed in numerous ways. Most of these documents do not contain semantic information; therefore, precise mathematical interpretation is impossible. On the other hand, semantic information can help to give more precise results. In this work we address these issues and present a new technique for searching for mathematical formulae in real-world mathematical documents, while still offering an extensible level of mathematical awareness. It exploits the advantages of a full text search engine and stores each formula not only once but in several generalised representations. Because it is designed as an extension, any full text search engine can adopt it. Based on the proposed theory we developed EgoMath, a new mathematical search engine. Experiments with EgoMath over two document sets containing semantic information showed that this technique can be used to build a fully-fledged mathematical search engine.

Key words: mathematical discourse, language processing, mathematical searching, full text search engine, indexing

1 Introduction
There are several ways to create and publish semantically annotated mathematical content. However, these documents are still a minority of the mathematical content on the WWW. Among the commonly used document formats for exchanging mathematics (LaTeX, MathML, PDF, PS, Word), only MathML contains support for semantics.
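The idea of storing each formula "in several generalised representations" can be sketched as follows. This is a hypothetical simplification for illustration, not EgoMath's actual algorithm: formulas are plain strings, and the only generalisations are replacing numbers with a `CONST` placeholder and names with an `ID` placeholder (which also swallows function names such as `sin`).

```python
import re

# Numbers, runs of letters, or any other single non-space character.
TOKEN = re.compile(r"\d+(?:\.\d+)?|[A-Za-z]+|\S")


def generalisations(formula):
    """Return the formula as token strings, from most to least specific."""
    tokens = TOKEN.findall(formula)

    def rewrite(replace_numbers, replace_names):
        out = []
        for tok in tokens:
            if replace_numbers and tok[0].isdigit():
                out.append("CONST")
            elif replace_names and tok.isalpha():
                out.append("ID")
            else:
                out.append(tok)
        return " ".join(out)

    return [
        rewrite(False, False),  # exact tokens
        rewrite(True, False),   # numbers generalised
        rewrite(True, True),    # numbers and names generalised
    ]


def index_formulas(formulas):
    """Map every representation of every formula to the formula ids using it."""
    index = {}
    for formula_id, formula in formulas.items():
        for rep in generalisations(formula):
            index.setdefault(rep, set()).add(formula_id)
    return index


index = index_formulas({"f1": "x^2 + 1", "f2": "a*b"})
query_reps = generalisations("y^3+7")
matches = index.get(query_reps[-1], set())
```

Here the query `y^3+7` shares no exact tokens with the stored `x^2 + 1`, yet the two agree on their most general representation, so `matches` contains `f1`. Because each representation is just a string of terms, an ordinary full text engine could store and match it, which is the sense in which the approach works as an extension.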

Degree Project (Examensarbete)

IT Forensics and Information Security
Degree project: Detection of Suspected Grooming (Detektering av misstänkt grooming)
Ewa Gärdström and Devran Öncü
Computer Engineering, 15 credits
Halmstad, 2015-07-23

Bachelor's thesis, June 2015
Authors: Devran Öncü & Ewa Gärdström
Supervisor: Eric Järpe
Examiner: Mattias Wecksten
School of Information Science, Computer and Electrical Engineering, Halmstad University (Högskolan i Halmstad), Box 823, 301 18 HALMSTAD
© Copyright Devran Öncü & Ewa Gärdström 2015. All rights reserved.

Preface
We would like to take this opportunity to thank everyone who contributed to this degree project. First and foremost, we thank our supervisor Eric Järpe for his great patience, his willingness to cooperate, and all his mathematical advice. We also thank Mats Andersson for taking the time to meet us for an interview and for sharing his experience of grooming and of his work. Finally, we thank Per-Åke Irskog, who agreed to a meeting at which he shared his legal knowledge of the crime of grooming and of the Swedish legislation on it.
Ewa Gärdström, Devran Öncü

Abstract
The thesis primarily deals with detecting grooming through experiments on two different programs. The experiments are based on two different word lists and are carried out on a text analysis tool, Overview, and on an IT forensics tool, EnCase. Because the two tools use different search methods and are designed for different purposes, the study also reviews various data mining methods and forensic methods. Datasets in the form of chat conversations were collected for the study's experiments and were then categorised as harmful or harmless data.

PART II Finding Needles in the World's Biggest Haystack

PART II: Finding Needles in the World's Biggest Haystack, or, The Page Match & Page Rank Problems

We will look at how a search engine carries out an impossible task:
• read your search word or phrase;
• search all 40 billion pages on the Internet;
• list all the pages that contain some or all of your phrase;
• rank these matches so the best matches are first;
• and do this in less than two seconds.

Topics for Part II
1. The PageMatch Problem investigates how your browser takes your internet search string and passes it to a search engine, which returns an answer incredibly fast. We will see that this process involves a number of hidden tricks and indexes.
2. The PageRank Problem considers how it is possible, once many matching web pages have been found, to select the very few that correspond most closely to your search string.

Goals for this lecture
1. To realize that a search engine doesn't wait for your search string and then start checking one page after another on the web;
2. To realize that it's possible to create an index of the web, just like the index of a book;
3. To understand how Web crawling is done to create an index;
4. To recognize how information searches were done before the Internet;
5. To watch a video from a Google engineer explaining in general terms how Web searches work at Google.

Reading Assignment
Read Chapter 2, pages 10-23, "9 Algorithms that Changed the Future".

What's the Internet?
Once, computers were simply stand-alone devices.
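Goals 1 to 3 above can be sketched with a toy example: a crawler follows links through a tiny made-up web, building a book-style index along the way, and a query is then answered from that index alone. The three pages in `TOY_WEB` and both function names are hypothetical, and a real crawler and index are of course far more elaborate.

```python
from collections import deque

# A made-up three-page web: page name -> (page text, outgoing links).
TOY_WEB = {
    "a.html": ("the cat sat on the mat", ["b.html", "c.html"]),
    "b.html": ("the dog stood on the mat", ["a.html"]),
    "c.html": ("a cat and a dog", []),
}


def crawl_and_index(web, start):
    """Follow links breadth-first from `start`, indexing each page once."""
    index = {}       # word -> set of pages containing it
    seen = {start}   # pages already queued, so no page is visited twice
    queue = deque([start])
    while queue:
        page = queue.popleft()
        text, links = web[page]
        for word in text.split():
            index.setdefault(word, set()).add(page)
        for link in links:
            if link in web and link not in seen:
                seen.add(link)
                queue.append(link)
    return index


def pages_with_all(index, query):
    """Page match: pages that contain every word of the query."""
    words = query.split()
    if not words:
        return []
    pages = set(index.get(words[0], set()))
    for word in words[1:]:
        pages &= index.get(word, set())
    return sorted(pages)


index = crawl_and_index(TOY_WEB, "a.html")
print(pages_with_all(index, "cat dog"))
print(pages_with_all(index, "the mat"))
```

The crawl happens once, ahead of time; each query afterwards is a handful of set lookups and intersections, which is why the answer does not require reading every page again. Ranking the matches is the separate PageRank problem and is not attempted here.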

The Weaknesses of Full-Text Searching

by Jeffrey Beall

Abstract: This paper provides a theoretical critique of the deficiencies of full-text searching in academic library databases. Because full-text searching relies on matching words in a search query with words in online resources, it is an inefficient method of finding information in a database. This matching fails to retrieve synonyms, and it also retrieves unwanted homonyms. Numerous other problems also make full-text searching an ineffective information retrieval tool. Academic libraries purchase and subscribe to numerous proprietary databases, many of which rely on full-text searching for access and discovery. An understanding of the weaknesses of full-text searching is needed to evaluate the search and discovery capabilities of academic library databases.

INTRODUCTION

Definition of Full-Text Searching
Full-text searching is the type of search a computer performs when it matches terms in a search query with terms in individual documents in a database and ranks the results algorithmically. This type of searching is ubiquitous on the Internet and includes the type of natural language search we typically find in commercial search engines, Web site search boxes, and in many proprietary databases. The term full-text searching has several synonyms and variations, including keyword searching, algorithmic searching, stochastic searching, and probabilistic searching.

Metadata-Enabled Searching
There is one other main type of online searching. This is metadata-enabled searching, which is also called deterministic searching. In this type of search, searchers pre-select and search individual facets of an information resource, such as author, title, and subject. In this type of search, the system matches terms in the search with terms in structured metadata and
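The contrast between the two kinds of searching can be shown with a toy example in Python. The three records, their field values and both function names are invented for illustration; the sketch only demonstrates the synonym and homonym problem named in the abstract and does not model ranking.

```python
# Hypothetical catalogue records: structured metadata plus a snippet of full text.
RECORDS = [
    {"id": 1, "author": "A. Author", "title": "River Journeys", "subject": "rivers",
     "text": "the pilot steered past the river bank at dawn"},
    {"id": 2, "author": "B. Author", "title": "Retail Finance", "subject": "banking",
     "text": "the bank raised its lending rate"},
    {"id": 3, "author": "C. Author", "title": "Credit Unions", "subject": "banking",
     "text": "a financial institution owned by its members"},
]


def full_text_search(records, term):
    """Match the query word against the words of each document's text."""
    term = term.lower()
    return [r["id"] for r in records if term in r["text"].lower().split()]


def metadata_search(records, **facets):
    """Match pre-selected facets (author, title, subject) exactly."""
    return [
        r["id"]
        for r in records
        if all(r.get(field) == value for field, value in facets.items())
    ]


full_text_hits = full_text_search(RECORDS, "bank")
metadata_hits = metadata_search(RECORDS, subject="banking")
```

The full-text search for "bank" returns records 1 and 2: it picks up the river bank (an unwanted homonym) and misses record 3, which discusses banking using a synonym. The metadata search on the subject facet returns records 2 and 3, at the cost of someone having assigned those subject terms in the first place.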