Exploring Search Engine Optimization (SEO) Techniques for Dynamic Websites

Recommended publications

The Study of Open Source CMSs, by Chetan Gopilal Jain (Thesis)

The Study of Open Source CMSs. By Chetan Gopilal Jain. A thesis submitted to the Graduate School-New Brunswick, Rutgers, The State University of New Jersey, in partial fulfillment of the requirements for the degree of Master of Science, Graduate Program in Electrical and Computer Engineering, written under the direction of Prof. Deborah Silver. New Brunswick, New Jersey, May 2010. 2010 Chetan Gopilal Jain. All rights reserved.

Abstract of the thesis (Thesis Director: Professor Deborah Silver): In this thesis, we evaluate different open source content management systems (CMSs) and determine their appropriateness for scientific research laboratories’ website content management. We describe different CMSs and evaluate them based on the following criteria: ease of installation, usability, maintenance and updates, scalability, community strength and support, user roles and workflow, security, and Web 2.0 features. We then choose one of these systems, Drupal, and demonstrate its effectiveness for two different scientific websites, Bio-1 and Vizlab. Drupal allows new features to be integrated through community-contributed modules and makes future upgrades easy. The successful implementation of both projects using Drupal highlights the importance of open source CMSs.

Acknowledgement: I would like to thank my advisor, Prof. Deborah Silver, for her support and encouragement while writing this thesis. I would also like to thank my parents and family, who provided me with a strong educational foundation and supported me in all my academic pursuits. I also acknowledge the help of VIZLAB at Rutgers.

Natural Language Processing Technique for Information Extraction and Analysis

International Journal of Research Studies in Computer Science and Engineering (IJRSCSE), Volume 2, Issue 8, August 2015, pp. 32-40. ISSN 2349-4840 (Print), ISSN 2349-4859 (Online). www.arcjournals.org

T. Sri Sravya (1), T. Sudha (2), M. Soumya Harika (3)
(1) M.Tech (C.S.E), Sri Padmavati Mahila Visvavidyalayam (Women’s University), School of Engineering and Technology, Tirupati.
(2) Head (I/C) of C.S.E & IT, Sri Padmavati Mahila Visvavidyalayam (Women’s University), School of Engineering and Technology, Tirupati.
(3) M.Tech C.S.E, Assistant Professor, Sri Padmavati Mahila Visvavidyalayam (Women’s University), School of Engineering and Technology, Tirupati.

Abstract: In the current internet era, there are a large number of systems and sensors which generate data continuously and inform users about their status and the status of the devices and surroundings they monitor. Examples include web cameras at traffic intersections and key government installations, seismic activity measurement sensors, tsunami early warning systems and many others. The current project, a Natural Language Processing based activity, aims to extract entities from data collected from various sources such as social media, internet news articles and other websites; to integrate this data into contextual information; to visualize it on a map; and to perform co-reference analysis to establish linkage amongst the entities.

Keywords: Apache Nutch, Solr, crawling, indexing

1. INTRODUCTION

In today’s harsh global business arena, the pace of events has increased rapidly, with technological innovations occurring at ever-increasing speed and considerably shorter life cycles.

United States Patent 6,094,649, Bowen et al.

United States Patent [19], Bowen et al. US006094649A
[11] Patent Number: 6,094,649
[45] Date of Patent: Jul. 25, 2000
[54] KEYWORD SEARCHES OF STRUCTURED DATABASES
[75] Inventors: Stephen J Bowen, Sandy; Don R Brown, Salt Lake City, both of Utah
[73] Assignee: PartNet, Inc., Salt Lake City, Utah
[21] Appl. No.: 08/995,700
[22] Filed: Dec. 22, 1997
[51] Int. Cl.: G06F 17/30
[52] U.S. Cl.: 707/3; 707/5; 707/4
[58] Field of Search: 707/1, 2, 3, 4, 5, 531, 532, 500

[56] References Cited, U.S. PATENT DOCUMENTS
5,375,235 12/1994 Berry et al.
5,469,354 11/1995 Hatakeyama et al. 707/3
5,546,578 8/1996 Takada 707/5
5,685,003 11/1997 Peltonen et al. 707/531
5,787,295 7/1998 Nakao 707/500
5,787,421 7/1998 Nomiyama ...

Other publications: “Charles Schwab Broadens Deployment of Fulcrum-Based Corporate Knowledge Library Application”, Unknown, Fulcrum Technologies Inc., Mar. 3, 1997, pp. 1-3. (List continued on next page.)

Primary Examiner: Hosain T. Alam
Assistant Examiner: Thuy Pardo
Attorney, Agent, or Firm: Computer Law----

[57] ABSTRACT
Methods and systems are provided for supporting keyword searches of data items in a structured database, such as a relational database. Selected data items are retrieved using an SQL query or other mechanism. The retrieved data values are documented using a markup language such as HTML. The documents are indexed using a web crawler or other indexing agent. Data items may be selected for indexing by identifying them in a data dictionary. The indexing agent ...
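The three steps named in the patent abstract (retrieve with SQL, document the values in HTML, index the documents) can be illustrated with a minimal sketch. This is not the patented method: the `parts` table, its rows, and the helper names `row_to_html`, `build_index` and `keyword_search` are all hypothetical, and a plain in-memory inverted index stands in for the web crawler or indexing agent.

```python
import re
import sqlite3
from collections import defaultdict
from html import escape

# Hypothetical table and rows, used only for illustration.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE parts (id INTEGER PRIMARY KEY, name TEXT, description TEXT)"
)
conn.executemany(
    "INSERT INTO parts (id, name, description) VALUES (?, ?, ?)",
    [
        (1, "Hex bolt", "Steel bolt with hexagonal head"),
        (2, "Wing nut", "Brass nut tightened by hand"),
        (3, "Lock washer", "Steel washer that resists loosening"),
    ],
)


def row_to_html(name, description):
    """Document one retrieved row as a small HTML page."""
    return (
        f"<html><head><title>{escape(name)}</title></head>"
        f"<body><p>{escape(description)}</p></body></html>"
    )


def build_index(docs):
    """Stand-in for the indexing agent: map each word to the ids of the
    documents that contain it, ignoring the HTML tags themselves."""
    index = defaultdict(set)
    for doc_id, html_text in docs.items():
        text = re.sub(r"<[^>]+>", " ", html_text)
        for word in re.findall(r"[a-z0-9]+", text.lower()):
            index[word].add(doc_id)
    return index


# Step 1: select data items with an SQL query.
rows = conn.execute("SELECT id, name, description FROM parts").fetchall()
# Step 2: document each retrieved row in HTML.
documents = {row_id: row_to_html(name, desc) for row_id, name, desc in rows}
# Step 3: index the generated documents.
index = build_index(documents)


def keyword_search(word):
    """Return the ids of rows whose generated document contains the word."""
    return sorted(index.get(word.lower(), set()))
```

Calling `keyword_search("steel")` returns the ids of the rows whose generated page mentions steel, so a keyword query reaches structured rows without the user writing any SQL.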

Vol.11, No. 2, 2011

Applied Computing Review

SIGAPP FY’11 Semi-Annual Report, July 2010 - February 2011
Sung Y. Shin

Mission
To further the interests of the computing professionals engaged in the development of new computing applications and to transfer the capabilities of computing technology to new problem domains.

Officers
Chair: Sung Y. Shin, South Dakota State University, USA
Vice Chair: Richard Chbeir, Bourgogne University, Dijon, France
Secretary: W. Eric Wong, University of Texas, USA
Treasurer: Lorie Liebrock, New Mexico Institute of Mining and Technology, USA
Web Master: Hisham Haddad, Kennesaw State University, USA
ACM Program Coordinator: Irene Frawley, ACM HQ

Notice to Contributing Authors to SIG Newsletters
By submitting your article for distribution in this Special Interest Group publication, you hereby grant to ACM the following non-exclusive, perpetual, worldwide rights:
• To publish in print on condition of acceptance by the editor
• To digitize and post your article in the electronic version of this publication
• To include the article in the ACM Digital Library
• To allow users to copy and distribute the article for noncommercial, educational, or research purposes
However, as a contributing author, you retain copyright to your article, and ACM will make every effort to refer requests for commercial use directly to you.

Status Update
SIGAPP's main event for this year will be the Symposium on Applied Computing (SAC) 2011 in Taichung, Taiwan, from March 21-24, which will carry on the tradition from Switzerland's SAC 2010. This year's SAC preparation has been very successful. More details about the upcoming SAC 2011 follow in the next section.

1.3.4 Web Technologies Concise Notes

OCR Computer Science A Level
1.3.4 Web Technologies
Concise Notes
www.pmt.education

Specification
1.3.4 a)
● HTML
● CSS
● JavaScript
1.3.4 b)
● Search engine indexing
1.3.4 c)
● PageRank algorithm
1.3.4 d)
● Server and client side processing

Web Development

HTML
● HTML is the language that web pages are written in.
● It allows a browser to interpret and render a webpage for the viewer by describing the structure and order of the webpage.
● The language uses tags written in angle brackets (<tag>, </tag>). A webpage has two sections: a head and a body.

HTML Tags
● <html>: all code written within these tags is interpreted as HTML
● <body>: defines the content in the main browser content area
● <link>: used to link to a CSS stylesheet (explained later in the notes)
● <head>: defines the browser tab or window heading area
● <title>: defines the text that appears in the tab or window heading area
● <h1>, <h2>, <h3>: heading styles in decreasing sizes
● <p>: a paragraph separated with a line space above and below
● <img>: self-closing image tag with parameters (img src = location, height = x, width = y)
● <a>: anchor tag defining a hyperlink with location parameters (<a href= location> link text </a>)
● <ol>: defines an ordered list
● <ul>: defines an unordered list
● <li>: defines an individual list item
● <div>: divides a page into separate areas, each of which can be referred to uniquely by name (<div id= “page”>)

Classes and Identifiers
● Class and identifier selectors are the names which you style; this means groups of items can be styled. The selectors for HTML are usually the div tags.
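To make the tag list above concrete, here is a small sketch that reads a page built from those tags and records which tags and links it contains, roughly the first step a browser or search-engine indexer takes before rendering or indexing. The sample page and the `TagCollector` class are made up for illustration; only Python's standard `html.parser` module is used.

```python
from html.parser import HTMLParser

# Hypothetical page using the tags from the notes.
PAGE = """<html>
<head><title>My page</title></head>
<body>
<h1>Welcome</h1>
<p>See <a href="about.html">about us</a>.</p>
<ul><li>One</li><li>Two</li></ul>
</body>
</html>"""


class TagCollector(HTMLParser):
    """Record every opening tag and every hyperlink target, in order."""

    def __init__(self):
        super().__init__()
        self.tags = []
        self.links = []

    def handle_starttag(self, tag, attrs):
        self.tags.append(tag)
        if tag == "a":
            # attrs is a list of (name, value) pairs for this tag.
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


collector = TagCollector()
collector.feed(PAGE)
```

After `feed`, `collector.tags` holds the opening tags in document order and `collector.links` holds the `href` targets, which is the kind of structure an indexer would follow to discover further pages.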

Appximity: a Context-Aware Mobile Application Management Framework

AppXimity: A Context-Aware Mobile Application Management Framework, by Ernest E. Alexander Jr. Aaron. B.Sc., Universiti Tenaga Nasional, 2011. A Thesis Submitted in Partial Fulfillment of the Requirements for the Degree of MASTER OF SCIENCE in the Department of Computer Science. © Ernest E. Alexander Jr. Aaron, 2017, University of Victoria. All rights reserved. This thesis may not be reproduced in whole or in part, by photocopying or other means, without the permission of the author.

Supervisory Committee: Dr. Hausi A. Müller, Supervisor (Department of Computer Science); Dr. Issa Traoré, Outside Member (Department of Electrical and Computer Engineering)

ABSTRACT

The Internet of Things is an emerging technology where everyday devices with sensing and actuating capabilities are connected to the Internet and seamlessly communicate with other devices over the network. The proliferation of mobile devices enables access to unprecedented levels of rich information sources. Mobile app creators can leverage this information to create personalized mobile applications. The number of mobile apps available for download will increase over time, and thus accessing and managing apps can become cumbersome. This thesis presents AppXimity, a mobile-app-management framework that provides personalized app suggestions and recommendations by leveraging user preferences and contextual information to provide relevant apps in a given context. Suggested apps are a subset of the installed apps that match nearby businesses or have been identified by AppXimity as apps of interest to the user; recommended apps are apps that are not installed on the user's device but may be of interest to the user in that location.

Annex I: List of Internet Robots, Crawlers, Spiders, Etc. This Is A

Annex I: List of internet robots, crawlers, spiders, etc. This is a revised list published on 15/04/2016. Please note it is rationalised, removing some previously redundant entries (e.g. the text ‘bot’ – msnbot, awbot, bbot, turnitinbot, etc. – which is now collapsed down to a single entry ‘bot’). COUNTER welcomes updates and suggestions for this list from our community of users. bot spider crawl ^.?$ [^a]fish ^IDA$ ^ruby$ ^voyager\/ ^@ozilla\/\d ^ÆƽâºóµÄ$ ^ÆƽâºóµÄ$ alexa Alexandria(\s|\+)prototype(\s|\+)project AllenTrack almaden appie Arachmo architext aria2\/\d arks ^Array$ asterias atomz BDFetch Betsie biadu biglotron BingPreview bjaaland Blackboard[\+\s]Safeassign blaiz\-bee bloglines blogpulse boitho\.com\-dc bookmark\-manager Brutus\/AET bwh3_user_agent CakePHP celestial cfnetwork checkprivacy China\sLocal\sBrowse\s2\.6 cloakDetect coccoc\/1\.0 Code\sSample\sWeb\sClient ColdFusion combine contentmatch ContentSmartz core CoverScout curl\/7 cursor custo DataCha0s\/2\.0 daumoa ^\%?default\%?$ Dispatch\/\d docomo Download\+Master DSurf easydl EBSCO\sEJS\sContent\sServer ELinks\/ EmailSiphon EmailWolf EndNote EThOS\+\(British\+Library\) facebookexternalhit\/ favorg FDM(\s|\+)\d feedburner FeedFetcher feedreader ferret Fetch(\s|\+)API(\s|\+)Request findlinks ^FileDown$ ^Filter$ ^firefox$ ^FOCA Fulltext Funnelback GetRight geturl GLMSLinkAnalysis Goldfire(\s|\+)Server google grub gulliver gvfs\/ harvest heritrix holmes htdig htmlparser HttpComponents\/1.1 HTTPFetcher http.?client httpget httrack ia_archiver ichiro iktomi ilse -
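As a hedged illustration of how such a list might be applied, the sketch below compiles a handful of the patterns quoted above into one regular expression and tests user-agent strings against it. The subset chosen, the helper name `is_robot`, and the decision to match case-insensitively anywhere in the string are assumptions made for this example, not something the excerpt specifies.

```python
import re

# A small subset of the patterns quoted in the list; the full list is longer.
ROBOT_PATTERNS = [
    r"bot",
    r"spider",
    r"crawl",
    r"^ruby$",
    r"curl\/7",
    r"facebookexternalhit\/",
    r"Fetch(\s|\+)API(\s|\+)Request",
]

# Assumption: each pattern is searched for anywhere in the user-agent string,
# ignoring case. Anchored patterns such as ^ruby$ still match whole strings only.
ROBOT_RE = re.compile(
    "|".join(f"(?:{pattern})" for pattern in ROBOT_PATTERNS),
    re.IGNORECASE,
)


def is_robot(user_agent: str) -> bool:
    """Return True if the user-agent matches any pattern in the subset."""
    return ROBOT_RE.search(user_agent) is not None
```

Because the single entry `bot` is unanchored, it covers names such as msnbot or turnitinbot without listing each one, which is the consolidation the annex describes.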

Check out Our Initial Report Example Here

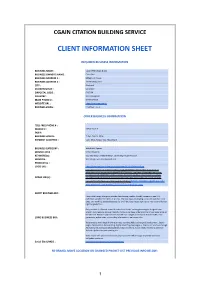

CGAIN CITATION BUILDING SERVICE
CLIENT INFORMATION SHEET

REQUIRED BUSINESS INFORMATION
BUSINESS NAME : CGain Web Design & SEO
BUSINESS OWNER'S NAME : Chris Giles
BUSINESS ADDRESS 1 : Millennium House
BUSINESS ADDRESS 2 : 161 Mowbray Drive
CITY : Blackpool
STATE/PROVINCE : Lancashire
ZIP/POSTAL CODE : FY3 7UN
COUNTRY : United Kingdom
MAIN PHONE # : 01253 675593
WEBSITE URL : https://www.cgain.co.uk
BUSINESS EMAIL : [email protected]

OTHER BUSINESS INFORMATION
TOLL FREE PHONE # :
MOBILE # : 07518 931616
FAX # :
BUSINESS HOURS : 7 days, 9am to 10pm
PAYMENT ACCEPTED : Cash, BACS, Paypal, Visa, Mastercard
BUSINESS CATEGORY : Web & SEO Agency
SERVICE AREA : United Kingdom
KEYWORD(s) : seo, web design, website design, search engine optimisation
SERVICES : Web design, web development, seo
PRODUCT(s) :
LOGO URL : https://www.cgain.co.uk/wp-content/uploads/2019/10/650x520.jpg
IMAGE URL(s) : https://www.cgain.co.uk/wp-content/uploads/2020/06/owllegal-website.jpg, https://www.cgain.co.uk/wp-content/uploads/2020/06/weelz_.jpg, https://www.cgain.co.uk/wp-content/uploads/2020/06/mobility-scooters-blackpool.jpg, https://www.cgain.co.uk/wp-content/uploads/2020/06/quickcrepesburnley.jpg, https://www.cgain.co.uk/wp-content/uploads/2019/03/fleet-equestrian-website-2018.jpg, https://www.cgain.co.uk/wp-content/uploads/2019/03/magellan.jpg
SHORT BUSINESS BIO : CGain Web Design Blackpool provide class-leading, mobile-friendly, responsive and fully optimised websites for clients of all sizes. We have been developing successful websites since 1995. Our work has covered websites for small local businesses right up to international human rights organisations. Every website is different, honed to reflect our clients’ existing branding or designed from scratch.

Chapter 4 Working with Content

CHAPTER 4

Working with Content

WordPress comes with several basic content types: posts, pages, links, and media files. In addition, you can create your own content types, which I’ll talk more about in Chapter 12. Posts and pages make up the heart of your site. You’ll probably add images, audio, video, or other documents like Office files to augment your posts and pages, and WordPress makes it easy to upload and link to these files. WordPress also includes a robust link manager, which you can use to maintain a blogroll or other link directory. WordPress automatically generates a number of different feeds to syndicate your content. I’ll talk about the four feed formats, the common feeds, and the hidden ones that even experienced WordPress users might not know about. Since WordPress is known for its exceptional blogging capabilities, I’ll talk about posts first, and then discuss how pages differ from posts, and how you can modify them to be more alike.

Posts

Collectively, posts make up the blog (or news) section of your site. Posts are generally listed according to date, but can also be tagged or filed into categories. At its most basic, a post consists of a title and some content. In addition, WordPress will add some required metadata to every post: an ID number, an author, a publication date, a category, the publication status, and a visibility setting. There are a number of other things that may be added to posts, but the aforementioned are the essentials.

Cached and Confused: Web Cache Deception in the Wild

Seyed Ali Mirheidari, University of Trento; Sajjad Arshad, Northeastern University; Kaan Onarlioglu, Akamai Technologies; Bruno Crispo, University of Trento, KU Leuven; Engin Kirda and William Robertson, Northeastern University

https://www.usenix.org/conference/usenixsecurity20/presentation/mirheidari

This paper is included in the Proceedings of the 29th USENIX Security Symposium, August 12–14, 2020. ISBN 978-1-939133-17-5. Open access to the Proceedings of the 29th USENIX Security Symposium is sponsored by USENIX.

Abstract

Web cache deception (WCD) is an attack proposed in 2017, where an attacker tricks a caching proxy into erroneously storing private information transmitted over the Internet and subsequently gains unauthorized access to that cached data. Due to the widespread use of web caches and, in particular, the use of massive networks of caching proxies deployed by content distribution network (CDN) providers as a critical component of the Internet, WCD puts a substantial population of Internet users at risk.

In particular, Content Delivery Network (CDN) providers heavily rely on effective web content caching at their edge servers, which together comprise a massively-distributed Internet overlay network of caching reverse proxies. Popular CDN providers advertise accelerated content delivery and high availability via global coverage and deployments reaching hundreds of thousands of servers [5,15]. A recent scientific measurement also estimates that more than 74% of the Alexa Top 1K are served by CDN providers, indicating that ...

How Does Google Work?

With Genealogy Links: http://www.ci.oswego.or.us/library/genealogy-presentation

Google’s Computers: Google utilizes many computers known as “servers” to administer (serve) Google services. Google likely has around one million servers for different functions, including analyzing search queries and delivering search results. One of its largest “server farms” is located in The Dalles, Oregon. Google is very secretive about how many servers it actually has. When you run a search with Google, you’ll notice that Google brags that it brings back your results in a fraction of a second, for example: About 11,700,000 results (0.27 seconds). This speed is the result of many of Google’s servers working together on your search.

Indexing the Web: Google browses the visible web and creates and re-creates an index of the websites it browses. When you run a Google search you aren’t searching the Web itself, but instead Google’s index of sites, with links to live pages, that it created when it last indexed the web. Google has the largest index of web pages of any search engine, indexing over 55 billion web pages! When Google indexes the web it makes a copy of each site for its “cache”. When you search with Google and come across a website which is currently down, you can mouse over the site in the search results, then over the double arrows, and then click on “cached” to see what the site looked like when it was last indexed or before it went down. This is useful for finding information that “was just there yesterday!”.

Metadata Statistics for a Large Web Corpus

Peter Mika, Yahoo! Research, Diagonal 177, Barcelona, Spain
Tim Potter, Yahoo! Research, Diagonal 177, Barcelona, Spain

ABSTRACT

We provide an analysis of the adoption of metadata standards on the Web based on a large crawl of the Web. In particular, we look at what forms of syntax and vocabularies publishers are using to mark up data inside HTML pages. We also describe the process that we have followed and the difficulties involved in web data extraction.

1. INTRODUCTION

Embedding metadata inside HTML pages is one of the ways to publish structured data on the Web, often preferred by publishers and consumers over other methods of exposing structured data, such as publishing data feeds, SPARQL endpoints or RDF/XML documents.

... are a number of factors that complicate the comparison of results. First, different studies use different web corpora. Our earlier study used a corpus collected by Yahoo!'s web crawler, while the current study uses a dataset collected by the Bing crawler. Bizer et al. analyze the data collected by http://www.commoncrawl.org, which has the obvious advantage that it is publicly available. Second, the extraction methods may differ. For example, there are a multitude of microformats (one for each object type) and although most search engines and extraction libraries support the popular ones, different processors may recognize a different subset. Unlike the specifications of microdata and RDFa published by the W3C, the microformat specifications are also rather informal and thus different processors may extract different ...
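A rough sketch of what detecting these markup syntaxes can look like is given below. It is not the authors' extraction pipeline: the `MetadataCounter` class, the sample page, and the detection rules (microdata via `itemscope`/`itemprop`, RDFa via `typeof`/`property`, and microformats via only the `vcard` and `vevent` root class names) are simplifying assumptions. Recognising only two microformats also illustrates the point made above that different processors may support a different subset.

```python
from collections import Counter
from html.parser import HTMLParser

# Assumption: only these two microformat root classes are recognised here.
MICROFORMAT_ROOTS = {"vcard", "vevent"}


class MetadataCounter(HTMLParser):
    """Count elements that carry microdata, RDFa or microformat markup."""

    def __init__(self):
        super().__init__()
        self.counts = Counter()

    def handle_starttag(self, tag, attrs):
        names = {name for name, _ in attrs}
        if names & {"itemscope", "itemprop"}:
            self.counts["microdata"] += 1
        if names & {"typeof", "property"}:
            self.counts["rdfa"] += 1
        # A valueless attribute is reported as None, hence the "or".
        classes = set((dict(attrs).get("class") or "").split())
        if classes & MICROFORMAT_ROOTS:
            self.counts["microformats"] += 1


# Hypothetical page that describes the same person in all three syntaxes.
SAMPLE = """
<div itemscope itemtype="http://schema.org/Person"><span itemprop="name">Ada</span></div>
<div typeof="foaf:Person"><span property="foaf:name">Ada</span></div>
<div class="vcard"><span class="fn">Ada</span></div>
"""

counter = MetadataCounter()
counter.feed(SAMPLE)
```

Running this over a crawl and summing `counter.counts` per page would give a crude adoption count per syntax; a real study would also need to resolve vocabularies and handle malformed markup.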