Microsoft SQL Server 2008 DSS Performance with FCoE, iSCSI, and

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Sun Storage 16 Gb Fibre Channel PCIe Host Bus Adapter, Emulex Installation Guide for HBA Model 7101684

Sun Storage 16 Gb Fibre Channel PCIe Host Bus Adapter, Emulex Installation Guide For HBA Model 7101684 Part No: E24462-10 August 2018 Copyright © 2017, 2018, Oracle and/or its affiliates. All rights reserved. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, delivered to U.S. Government end users are "commercial computer software" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, use, duplication, disclosure, modification, and adaptation of the programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, shall be subject to license terms and license restrictions applicable to the programs.

Dell EMC Storage Compatibility Matrix for SC, PS, and FS Series Arrays

Deployment and Configuration Guide Dell EMC Storage Compatibility Matrix for SC, PS, and FS Series Arrays

Abstract: This document provides guidance on host and switching devices that are validated for use with Dell EMC SC Series, Dell PS Series, and Dell FS Series (FluidFS) storage solutions. May 2021 DSCM

Table of contents: 1 Overview; 1.1 Definitions; 1.2 Abbreviations and Terminology; 1.3 Conventions used in this document; 2 Dell Storage support policy statement; 2.1 Level 1: Full contractual support; 2.2 Level 2: Conditional support; 2.3 Level 3: Commercially reasonable effort

OCm14102B-U5-D Dual-Port 10GbE Converged Network Mezzanine Adapter for Dell PowerEdge™ 13th Generation Servers

DATA SHEET OCm14102B-U5-D Dual-port 10GbE Converged Network Mezzanine Adapter For Dell PowerEdge™ 13th Generation Servers

The Dell OCm14102B-U5-D dual-port 10Gb Ethernet (10GbE) mezzanine adapter is based on Emulex’s fourth-generation OneConnect® Converged Network Adapter (CNA) technology, representing the leading CNA to support Local Area Network (LAN), Storage Area Network (SAN) and Remote Direct Memory Access (RDMA) over Converged Ethernet (RoCEv2) on a single 10GbE adapter.

Dell PowerEdge 13th generation servers, with Dell adapters provided by Emulex, offer multiple benefits for the Dell enterprise customer, including:

- Accelerating workload performance through consolidation of multiple networking services on a single high-speed Ethernet adapter platform
- Increasing data center IT agility and scalability through deployment of a secure, private or hybrid, multi-tenant cloud infrastructure
- Maximizing server hardware utilization through CPU efficient networking

The OCm14102B-U5-D dual-port 10GbE adapters offer more scalable virtualization with support for enhanced Single-Root I/O Virtualization (SR-IOV), SMB Direct RoCEv2, Dell switch independent NIC extended partitioning (NParEP) and next-generation overlay networking technologies that address the requirements of virtual machine (VM) mobility and massive scaling of Layer 2 domains inside private or hybrid cloud infrastructures.

Key features

- Storage, network and RDMA traffic over a common 10GbE infrastructure
  - Reduce adapter and cable costs: one adapter for simultaneous storage and data traffic on a common 10GbE fabric
- SMB Direct RoCEv2 support
- SR-IOV support
- Superior performance and efficiency:
  - Overlay network tunneling offloads (VXLAN and NVGRE)
  - iSCSI and FCoE storage offloads
  - TCP/IP stateless offloads

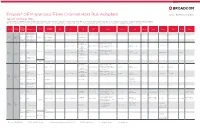

Emulex OEM-Branded Fibre Channel Host Bus Adapters Cross Reference Guide

Emulex® OEM-branded Fibre Channel Host Bus Adapters Cross Reference Guide

Support for Emulex HBAs: Qualified Emulex HBA customers who also have Brocade® Premier Support contracts for their Brocade Storage Networking products are eligible to purchase support for their Emulex HBAs. The deliverables of the support are the same as Brocade Supplemental Support and this allows the existing Brocade account resources to cover the Brocade and Emulex solution.

Fibre Speed I/O Bus Channel Form Factor IOPS Emulex Bull Cisco Dell EMC Fujitsu Hitachi HPE Huawei Inspur Lenovo NEC Oracle Sugon Ports Standard HBA (upgrade- Single LPe35000-M2 able to 64GFC)C PCI Gen 7 HBA (upgrade- Express Dual 5 million LPe35002-M2 LPe35002-D* (32GFC) able to 64GFC)C 4.0 HBA (upgrade- Quad LPe35004-M2 able to 64GFC)C 403-BBLX S26361- F4044-E1 (FH) 403-BBMG (FH) S26361- F4044-E201 (LP) Q0L11A Single HBA LPe32000-M2 UCSC-PCIE-BS32GF LPe32000-M2-E 6030391 7ZT7A00517A 403-BBMC S26361- F4044-L501 (FH & LP) (HPE SN1600E) 403-BBLV (LP) (LPe32000-M2-F) 403-BBLT S26361- F4044-E2 (FH) 1.6 million PCI 403-BBLY (FH) S26361- F4044-E202 (LP) Q0L12A Gen 6 Dual HBA LPe32002-M2 UCSC-PCIE-BD32GF LPe32002-M2-E 6030393 7ZT7A00519A 7335912 Express (32GFC) 403-BBLW S26361- F4044-L502 (FH & LP) (HPE SN1600E) 3.0 403-BBME (LP) (LPe32002-M2-F) Dual Mezzanine Card LPm32002 544-BBCN Quad HBA 3.2 million LPe32004-M2-SIO 403-BBMD* S26361-F5596-E1 (FH)* HBA 403-BBLS (FH)* S26361-F5596-E201 (LP)* Q0L13A* Single (upgradeable to LPe31000-M6 LPe31000-M6-E* Q0L13A* 01CV830A* Q0L13A* S26361-F5596-L501 (FH

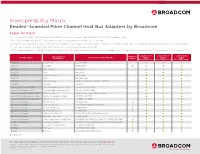

Interoperability Matrix

Interoperability Matrix Emulex®-branded Fibre Channel Host Bus Adapters by Broadcom Faster for Flash

- This document contains the interoperability status for Emulex-branded Fibre Channel (FC) Host Bus Adapters (HBAs).
- Most OEM-branded Emulex FC HBAs are co-listed once the Emulex-branded HBA is qualified.
- Broadcom’s storage partners issue Hardware Compatibility Lists (HCLs) or interoperability matrices for the Emulex FC HBA, server OS and storage array which they have qualified.
- For the most current and detailed information please consult your storage partner HCL.
- *FCP support available for all Fibre Channel storage; NVMe/FC support listed below may not be available on all storage devices within a product family. Please refer to your OEM.

| Storage Partner Brand Name | Storage Device | Storage Series Name/Number | NVMe/FC Support* | Emulex Gen 6 16GFC LPe31000-series | Emulex Gen 6 32GFC LPe32000-series | Emulex Gen 7 32GFC LPe35000-series |
| --- | --- | --- | --- | --- | --- | --- |
| Dell EMC | Compellent | SC, SCv Series | | ● | ● | ● |
| Dell EMC | PowerMax | 2000/8000 | ● | ● | ● | ● |
| Dell EMC | Tape Library | ML3/ML3E | | ● | ● | ● |
| Dell EMC | VNX | VNX Series | | ● | ● | ● |
| Dell EMC | Unified Storage | Unity Series | | ● | ● | ● |
| Dell EMC | VMAX | 10K, 20K, 40K | | ● | ● | ● |
| Dell EMC | VMAX All Flash | 250F/FX, 450F/FX, 850F/FX, 950F/FX | | ● | ● | ● |
| Dell EMC | XtremIO | X2-R/-S | | ● | ● | ● |
| Hitachi Data Systems (HDS) | Virtual Storage Platform (VSP) | G-series (G1x00, Gx00) | | ● | ● | ● |
| Hitachi Data Systems (HDS) | Virtual Storage Platform (VSP) | F-series (F1500, Fx00) | | ● | ● | ● |
| Hewlett Packard Enterprise (HPE) | 3PAR StorServ | 20000, 9000, 8000, 7000, 10000 | | ● | ● | ● |

Hewlett Packard Enterprise (HPE) Nimble

Brocade Fabric OS 8.x Open Systems Compatibility Matrix Version 1.8; September 2021

Brocade Fabric OS 8.x Open Systems Compatibility Matrix Version 1.8; September 2021

This document summarizes equipment that is known to be compatible with the Brocade® Fabric OS® (FOS) 8.x family. Products that are named in the compatibility tables reflect equipment that has been tested at Broadcom or tested externally. NOTE: This information is constantly being updated. For the latest compatibility information, visit vendor websites, some of which are listed in Related Websites. Broadcom FOS-8X-CM-OT109

Copyright © 2018–2021 Broadcom. All Rights Reserved. Broadcom, the pulse logo, Brocade, the stylized B logo, and Fabric OS are among the trademarks of Broadcom in the United States, the EU, and/or other countries. The term “Broadcom” refers to Broadcom Inc. and/or its subsidiaries. Broadcom reserves the right to make changes without further notice to any products or data herein to improve reliability, function, or design. Information furnished by Broadcom is believed to be accurate and reliable. However, Broadcom does not assume any liability arising out of the application or use of this information, nor the application or use of any product or circuit described herein, neither does it convey any license under its patent rights nor the rights of others. The product described by this document may contain open source software covered by the GNU General Public License or other open source license agreements. To find out which open source software is included in Brocade products, to view the licensing terms applicable to the open source software, and to obtain a copy of the programming source code, please download the open source disclosure documents in the Broadcom Customer Support Portal (CSP).

Hitachi Compute Blade Emulex Adapter User's Guide for Utility

Hitachi Compute Blade Emulex Adapter User's Guide for Utility MK-99COM105-08 © 2010-2015 Hitachi, Ltd. All rights reserved. No part of this publication may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying and recording, or stored in a database or retrieval system for any purpose without the express written permission of Hitachi, Ltd. Hitachi, Ltd., reserves the right to make changes to this document at any time without notice and assumes no responsibility for its use. This document contains the most current information available at the time of publication. When new or revised information becomes available, this entire document will be updated and distributed to all registered users. Some of the features described in this document might not be currently available. Refer to the most recent product announcement for information about feature and product availability, or contact Hitachi Data Systems Corporation at https://portal.hds.com. Notice: Hitachi, Ltd., products and services can be ordered only under the terms and conditions of the applicable Hitachi Data Systems Corporation agreements. The use of Hitachi, Ltd., products is governed by the terms of your agreements with Hitachi Data Systems Corporation. Hitachi is a registered trademark of Hitachi, Ltd., in the United States and other countries. Hitachi Data Systems is a registered trademark and service mark of Hitachi, Ltd., in the United States and other countries. Archivas, Essential NAS Platform, HiCommand, Hi-Track, ShadowImage, Tagmaserve, Tagmasoft, Tagmasolve, Tagmastore, TrueCopy, Universal Star Network, and Universal Storage Platform are registered trademarks of Hitachi Data Systems Corporation.

Sun Storage 16 Gb Fibre Channel ExpressModule Host Bus Adapter, Emulex Installation Guide for HBA Model 7101690

Sun Storage 16 Gb Fibre Channel ExpressModule Host Bus Adapter, Emulex Installation Guide For HBA Model 7101690 Part No: E24463-09 August 2018 Copyright © 2017, 2018, Oracle and/or its affiliates. All rights reserved. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, delivered to U.S. Government end users are "commercial computer software" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, use, duplication, disclosure, modification, and adaptation of the programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, shall be subject to license terms and license restrictions applicable to the programs.

Emulex HBA Manager Application Command Line Interface User Guide

Emulex® HBA Manager Application Command Line Interface User Guide Release 12.6 Broadcom HBAManager-CLI-UG126-100 February 4, 2020

Broadcom, the pulse logo, Connecting everything, Avago Technologies, Avago, the A logo, Brocade, ClearLink, Emulex, ExpressLane, OneCommand, and SLI are among the trademarks of Broadcom and/or its affiliates in the United States, certain other countries, and/or the EU. The PowerPC name and logo are registered trademarks of IBM Corp. and used under license therefrom. Copyright © 2003–2020 Broadcom. All Rights Reserved. The term “Broadcom” refers to Broadcom Inc. and/or its subsidiaries. For more information, please visit www.broadcom.com. Broadcom reserves the right to make changes without further notice to any products or data herein to improve reliability, function, or design. Information furnished by Broadcom is believed to be accurate and reliable. However, Broadcom does not assume any liability arising out of the application or use of this information, nor the application or use of any product or circuit described herein, neither does it convey any license under its patent rights nor the rights of others.

Table of Contents: Chapter 1: Introduction; 1.1 Abbreviations; 1.2

OCm14102-U2-D Dual-Port 10GbE Converged Network Daughter Card for Dell PowerEdge 12G Blade Servers

DATA SHEET OCm14102-U2-D Dual-port 10GbE Converged Network Daughter Card For Dell PowerEdge 12G Blade Servers High Performance Virtual Networking and Trusted Storage Interoperability

Overview

As the fourth generation of the Emulex OneConnect® product line, the OCm14102-U2-D dual-port Converged Network Adapter (CNA) daughter card provides high performance 10Gb Ethernet (10GbE) connectivity delivering multiple benefits for the enterprise cloud data center, including:

- Increasing data center IT agility and scalability through deployment of a secure multi-tenant cloud
- Driving scalability and flexibility in space constrained blade infrastructures
- Maximizing server hardware utilization by scaling high density virtualization

The OCm14102-U2-D 10GbE CNA is designed for the high bandwidth and scalability demands of Tier-1 enterprise applications powered by storage protocol (Fibre Channel over Ethernet [FCoE] and iSCSI) and stateless TCP hardware offloads, more scalable virtualization with Single-Root I/O Virtualization (SR-IOV), optimized bandwidth allocation using Dell switch

Key Benefits

- Enable higher virtualization ratios with hardware offloads, critical to getting a higher return from server investments
- Simplify VM mobility in cloud infrastructures eliminating network re-configuration by leveraging on-adapter overlay networking offloads
- Improve storage networking with lossless end-to-end iSCSI-over-Data Center Bridging (DCB) connectivity, from CNA to Dell or other iSCSI storage targets
- Minimize wasted idle bandwidth by optimizing

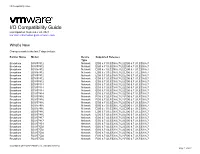

I/O Compatibility Guide

I/O Compatibility Guide I/O Compatibility Guide Last Updated: September 24, 2021 For more information go to vmware.com. What's New Changes made in the last 7 days include: Partner Name Model Device Supported Releases Type Broadcom BCM57412 Network ESXi 6.7 U3,ESXi 6.7 U2,ESXi 6.7 U1,ESXi 6.7 Broadcom BCM57412 Network ESXi 6.7 U3,ESXi 6.7 U2,ESXi 6.7 U1,ESXi 6.7 Broadcom BCM57412 Network ESXi 6.7 U3,ESXi 6.7 U2,ESXi 6.7 U1,ESXi 6.7 Broadcom BCM57412 Network ESXi 6.7 U3,ESXi 6.7 U2,ESXi 6.7 U1,ESXi 6.7 Broadcom BCM57412 Network ESXi 6.7 U3,ESXi 6.7 U2,ESXi 6.7 U1,ESXi 6.7 Broadcom BCM57412 Network ESXi 6.7 U3,ESXi 6.7 U2,ESXi 6.7 U1,ESXi 6.7 Broadcom BCM57412 Network ESXi 6.7 U3,ESXi 6.7 U2,ESXi 6.7 U1,ESXi 6.7 Broadcom BCM57414 Network ESXi 6.7 U3,ESXi 6.7 U2,ESXi 6.7 U1,ESXi 6.7 Broadcom BCM57414 Network ESXi 6.7 U3,ESXi 6.7 U2,ESXi 6.7 U1,ESXi 6.7 Broadcom BCM57414 Network ESXi 6.7 U3,ESXi 6.7 U2,ESXi 6.7 U1,ESXi 6.7 Broadcom BCM57414 Network ESXi 6.7 U3,ESXi 6.7 U2,ESXi 6.7 U1,ESXi 6.7 Broadcom BCM57416 Network ESXi 6.7 U3,ESXi 6.7 U2,ESXi 6.7 U1,ESXi 6.7 Broadcom BCM57416 Network ESXi 6.7 U3,ESXi 6.7 U2,ESXi 6.7 U1,ESXi 6.7 Broadcom BCM57416 Network ESXi 6.7 U3,ESXi 6.7 U2,ESXi 6.7 U1,ESXi 6.7 Broadcom BCM57416 Network ESXi 6.7 U3,ESXi 6.7 U2,ESXi 6.7 U1,ESXi 6.7 Broadcom BCM57416 Network ESXi 6.7 U3,ESXi 6.7 U2,ESXi 6.7 U1,ESXi 6.7 Broadcom BCM57416 Network ESXi 6.7 U3,ESXi 6.7 U2,ESXi 6.7 U1,ESXi 6.7 Broadcom BCM57416 Network ESXi 6.7 U3,ESXi 6.7 U2,ESXi 6.7 U1,ESXi 6.7 Broadcom BCM57417 Network ESXi 6.7 U3,ESXi 6.7 U2,ESXi 6.7 U1,ESXi -

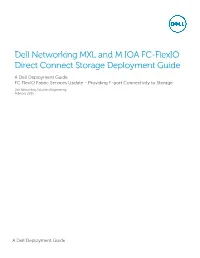

Dell Networking MXL and M IOA FC-FlexIO Direct Connect Storage Deployment Guide

Dell Networking MXL and M IOA FC-FlexIO Direct Connect Storage Deployment Guide. A Dell Deployment Guide. FC FlexIO Fabric Services Update - Providing F-port Connectivity to Storage. Dell Networking Solutions Engineering, February 2015.

Revisions: February 2015, Initial Release. Authors: Jim Slaughter, Kevin Locklear, Curtis Bunch.

©2015 Dell Inc., All rights reserved. Except as stated below, no part of this document may be reproduced, distributed or transmitted in any form or by any means, without express permission of Dell. You may distribute this document within your company or organization only, without alteration of its contents. THIS DOCUMENT IS PROVIDED “AS-IS”, AND WITHOUT ANY WARRANTY, EXPRESS OR IMPLIED. IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE SPECIFICALLY DISCLAIMED. PRODUCT WARRANTIES APPLICABLE TO THE DELL PRODUCTS DESCRIBED IN THIS DOCUMENT MAY BE FOUND AT: http://www.dell.com/learn/us/en/19/terms-of-sale-commercial-and-public-sector Performance of network reference architectures discussed in this document may vary with differing deployment conditions, network loads, and the like. Third party products may be included in reference architectures for the convenience of the reader. Inclusion of such third party products does not necessarily constitute Dell’s recommendation of those products. Please consult your Dell representative for additional information. This document is an independent publication and has not been authorized, sponsored, or otherwise approved by NetApp, Inc., or VMware Inc. Trademarks used in this text: Dell™, the Dell logo, PowerEdge™, PowerVault™, PowerConnect™, OpenManage™, EqualLogic™, Compellent™, KACE™, FlexAddress™, Force10™ and Vostro™ are trademarks of Dell Inc.