Electromagnetism University of Cambridge Part IB Mathematical Tripos

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Glossary Physics (I-Introduction)

1 Glossary Physics (I-introduction) - Efficiency: The percent of the work put into a machine that is converted into useful work output; = work done / energy used [-]. = eta In machines: The work output of any machine cannot exceed the work input (<=100%); in an ideal machine, where no energy is transformed into heat: work(input) = work(output), =100%. Energy: The property of a system that enables it to do work. Conservation o. E.: Energy cannot be created or destroyed; it may be transformed from one form into another, but the total amount of energy never changes. Equilibrium: The state of an object when not acted upon by a net force or net torque; an object in equilibrium may be at rest or moving at uniform velocity - not accelerating. Mechanical E.: The state of an object or system of objects for which any impressed forces cancels to zero and no acceleration occurs. Dynamic E.: Object is moving without experiencing acceleration. Static E.: Object is at rest.F Force: The influence that can cause an object to be accelerated or retarded; is always in the direction of the net force, hence a vector quantity; the four elementary forces are: Electromagnetic F.: Is an attraction or repulsion G, gravit. const.6.672E-11[Nm2/kg2] between electric charges: d, distance [m] 2 2 2 2 F = 1/(40) (q1q2/d ) [(CC/m )(Nm /C )] = [N] m,M, mass [kg] Gravitational F.: Is a mutual attraction between all masses: q, charge [As] [C] 2 2 2 2 F = GmM/d [Nm /kg kg 1/m ] = [N] 0, dielectric constant Strong F.: (nuclear force) Acts within the nuclei of atoms: 8.854E-12 [C2/Nm2] [F/m] 2 2 2 2 2 F = 1/(40) (e /d ) [(CC/m )(Nm /C )] = [N] , 3.14 [-] Weak F.: Manifests itself in special reactions among elementary e, 1.60210 E-19 [As] [C] particles, such as the reaction that occur in radioactive decay. -

Particle Motion

Physics of fusion power Lecture 5: particle motion Gyro motion The Lorentz force leads to a gyration of the particles around the magnetic field We will write the motion as The Lorentz force leads to a gyration of the charged particles Parallel and rapid gyro-motion around the field line Typical values For 10 keV and B = 5T. The Larmor radius of the Deuterium ions is around 4 mm for the electrons around 0.07 mm Note that the alpha particles have an energy of 3.5 MeV and consequently a Larmor radius of 5.4 cm Typical values of the cyclotron frequency are 80 MHz for Hydrogen and 130 GHz for the electrons Often the frequency is much larger than that of the physics processes of interest. One can average over time One can not however neglect the finite Larmor radius since it lead to specific effects (although it is small) Additional Force F Consider now a finite additional force F For the parallel motion this leads to a trivial acceleration Perpendicular motion: The equation above is a linear ordinary differential equation for the velocity. The gyro-motion is the homogeneous solution. The inhomogeneous solution Drift velocity Inhomogeneous solution Solution of the equation Physical picture of the drift The force accelerates the particle leading to a higher velocity The higher velocity however means a larger Larmor radius The circular orbit no longer closes on itself A drift results. Physics picture behind the drift velocity FxB Electric field Using the formula And the force due to the electric field One directly obtains the so-called ExB velocity Note this drift is independent of the charge as well as the mass of the particles Electric field that depends on time If the electric field depends on time, an additional drift appears Polarization drift. -

Electrostatics Vs Magnetostatics Electrostatics Magnetostatics

Electrostatics vs Magnetostatics Electrostatics Magnetostatics Stationary charges ⇒ Constant Electric Field Steady currents ⇒ Constant Magnetic Field Coulomb’s Law Biot-Savart’s Law 1 ̂ ̂ 4 4 (Inverse Square Law) (Inverse Square Law) Electric field is the negative gradient of the Magnetic field is the curl of magnetic vector electric scalar potential. potential. 1 ′ ′ ′ ′ 4 |′| 4 |′| Electric Scalar Potential Magnetic Vector Potential Three Poisson’s equations for solving Poisson’s equation for solving electric scalar magnetic vector potential potential. Discrete 2 Physical Dipole ′′′ Continuous Magnetic Dipole Moment Electric Dipole Moment 1 1 1 3 ∙̂̂ 3 ∙̂̂ 4 4 Electric field cause by an electric dipole Magnetic field cause by a magnetic dipole Torque on an electric dipole Torque on a magnetic dipole ∙ ∙ Electric force on an electric dipole Magnetic force on a magnetic dipole ∙ ∙ Electric Potential Energy Magnetic Potential Energy of an electric dipole of a magnetic dipole Electric Dipole Moment per unit volume Magnetic Dipole Moment per unit volume (Polarisation) (Magnetisation) ∙ Volume Bound Charge Density Volume Bound Current Density ∙ Surface Bound Charge Density Surface Bound Current Density Volume Charge Density Volume Current Density Net , Free , Bound Net , Free , Bound Volume Charge Volume Current Net , Free , Bound Net ,Free , Bound 1 = Electric field = Magnetic field = Electric Displacement = Auxiliary -

Review of Electrostatics and Magenetostatics

Review of electrostatics and magenetostatics January 12, 2016 1 Electrostatics 1.1 Coulomb’s law and the electric field Starting from Coulomb’s law for the force produced by a charge Q at the origin on a charge q at x, qQ F (x) = 2 x^ 4π0 jxj where x^ is a unit vector pointing from Q toward q. We may generalize this to let the source charge Q be at an arbitrary postion x0 by writing the distance between the charges as jx − x0j and the unit vector from Qto q as x − x0 jx − x0j Then Coulomb’s law becomes qQ x − x0 x − x0 F (x) = 2 0 4π0 jx − xij jx − x j Define the electric field as the force per unit charge at any given position, F (x) E (x) ≡ q Q x − x0 = 3 4π0 jx − x0j We think of the electric field as existing at each point in space, so that any charge q placed at x experiences a force qE (x). Since Coulomb’s law is linear in the charges, the electric field for multiple charges is just the sum of the fields from each, n X qi x − xi E (x) = 4π 3 i=1 0 jx − xij Knowing the electric field is equivalent to knowing Coulomb’s law. To formulate the equivalent of Coulomb’s law for a continuous distribution of charge, we introduce the charge density, ρ (x). We can define this as the total charge per unit volume for a volume centered at the position x, in the limit as the volume becomes “small”. -

Electromagnetism What Is the Effect of the Number of Windings of Wire on the Strength of an Electromagnet?

TEACHER’S GUIDE Electromagnetism What is the effect of the number of windings of wire on the strength of an electromagnet? GRADES 6–8 Physical Science INQUIRY-BASED Science Electromagnetism Physical Grade Level/ 6–8/Physical Science Content Lesson Summary In this lesson students learn how to make an electromagnet out of a battery, nail, and wire. The students explore and then explain how the number of turns of wire affects the strength of an electromagnet. Estimated Time 2, 45-minute class periods Materials D cell batteries, common nails (20D), speaker wire (18 gauge), compass, package of wire brad nails (1.0 mm x 12.7 mm or similar size), Investigation Plan, journal Secondary How Stuff Works: How Electromagnets Work Resources Jefferson Lab: What is an electromagnet? YouTube: Electromagnet - Explained YouTube: Electromagnets - How can electricity create a magnet? NGSS Connection MS-PS2-3 Ask questions about data to determine the factors that affect the strength of electric and magnetic forces. Learning Objectives • Students will frame a hypothesis to predict the strength of an electromagnet due to changes in the number of windings. • Students will collect and analyze data to determine how the number of windings affects the strength of an electromagnet. What is the effect of the number of windings of wire on the strength of an electromagnet? Electromagnetism is one of the four fundamental forces of the universe that we rely on in many ways throughout our day. Most home appliances contain electromagnets that power motors. Particle accelerators, like CERN’s Large Hadron Collider, use electromagnets to control the speed and direction of these speedy particles. -

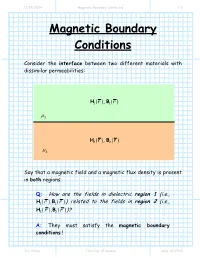

Magnetic Boundary Conditions 1/6

11/28/2004 Magnetic Boundary Conditions 1/6 Magnetic Boundary Conditions Consider the interface between two different materials with dissimilar permeabilities: HB11(r,) (r) µ1 HB22(r,) (r) µ2 Say that a magnetic field and a magnetic flux density is present in both regions. Q: How are the fields in dielectric region 1 (i.e., HB11()rr, ()) related to the fields in region 2 (i.e., HB22()rr, ())? A: They must satisfy the magnetic boundary conditions ! Jim Stiles The Univ. of Kansas Dept. of EECS 11/28/2004 Magnetic Boundary Conditions 2/6 First, let’s write the fields at the interface in terms of their normal (e.g.,Hn ()r ) and tangential (e.g.,Ht (r ) ) vector components: H r = H r + H r H1n ()r 1 ( ) 1t ( ) 1n () ˆan µ 1 H1t (r ) H2t (r ) H2n ()r H2 (r ) = H2t (r ) + H2n ()r µ 2 Our first boundary condition states that the tangential component of the magnetic field is continuous across a boundary. In other words: HH12tb(rr) = tb( ) where rb denotes to any point along the interface (e.g., material boundary). Jim Stiles The Univ. of Kansas Dept. of EECS 11/28/2004 Magnetic Boundary Conditions 3/6 The tangential component of the magnetic field on one side of the material boundary is equal to the tangential component on the other side ! We can likewise consider the magnetic flux densities on the material interface in terms of their normal and tangential components: BHrr= µ B1n ()r 111( ) ( ) ˆan µ 1 B1t (r ) B2t (r ) B2n ()r BH222(rr) = µ ( ) µ2 The second magnetic boundary condition states that the normal vector component of the magnetic flux density is continuous across the material boundary. -

19-8 Magnetic Field from Loops and Coils

Answer to Essential Question 19.7: To get a net magnetic field of zero, the two fields must point in opposite directions. This happens only along the straight line that passes through the wires, in between the wires. The current in wire 1 is three times larger than that in wire 2, so the point where the net magnetic field is zero is three times farther from wire 1 than from wire 2. This point is 30 cm to the right of wire 1 and 10 cm to the left of wire 2. 19-8 Magnetic Field from Loops and Coils The Magnetic Field from a Current Loop Let’s take a straight current-carrying wire and bend it into a complete circle. As shown in Figure 19.27, the field lines pass through the loop in one direction and wrap around outside the loop so the lines are continuous. The field is strongest near the wire. For a loop of radius R and current I, the magnetic field in the exact center of the loop has a magnitude of . (Equation 19.11: The magnetic field at the center of a current loop) The direction of the loop’s magnetic field can be found by the same right-hand rule we used for the long straight wire. Point the thumb of your right hand in the direction of the current flow along a particular segment of the loop. When you curl your fingers, they curl the way the magnetic field lines curl near that segment. The roles of the fingers and thumb can be reversed: if you curl the fingers on Figure 19.27: (a) A side view of the magnetic field your right hand in the way the current goes around from a current loop. -

Transformation Optics for Thermoelectric Flow

J. Phys.: Energy 1 (2019) 025002 https://doi.org/10.1088/2515-7655/ab00bb PAPER Transformation optics for thermoelectric flow OPEN ACCESS Wencong Shi, Troy Stedman and Lilia M Woods1 RECEIVED 8 November 2018 Department of Physics, University of South Florida, Tampa, FL 33620, United States of America 1 Author to whom any correspondence should be addressed. REVISED 17 January 2019 E-mail: [email protected] ACCEPTED FOR PUBLICATION Keywords: thermoelectricity, thermodynamics, metamaterials 22 January 2019 PUBLISHED 17 April 2019 Abstract Original content from this Transformation optics (TO) is a powerful technique for manipulating diffusive transport, such as heat work may be used under fl the terms of the Creative and electricity. While most studies have focused on individual heat and electrical ows, in many Commons Attribution 3.0 situations thermoelectric effects captured via the Seebeck coefficient may need to be considered. Here licence. fi Any further distribution of we apply a uni ed description of TO to thermoelectricity within the framework of thermodynamics this work must maintain and demonstrate that thermoelectric flow can be cloaked, diffused, rotated, or concentrated. attribution to the author(s) and the title of Metamaterial composites using bilayer components with specified transport properties are presented the work, journal citation and DOI. as a means of realizing these effects in practice. The proposed thermoelectric cloak, diffuser, rotator, and concentrator are independent of the particular boundary conditions and can also operate in decoupled electric or heat modes. 1. Introduction Unprecedented opportunities to manipulate electromagnetic fields and various types of transport have been discovered recently by utilizing metamaterials (MMs) capable of achieving cloaking, rotating, and concentrating effects [1–4]. -

Principle and Characteristic of Lorentz Force Propeller

J. Electromagnetic Analysis & Applications, 2009, 1: 229-235 229 doi:10.4236/jemaa.2009.14034 Published Online December 2009 (http://www.SciRP.org/journal/jemaa) Principle and Characteristic of Lorentz Force Propeller Jing ZHU Northwest Polytechnical University, Xi’an, Shaanxi, China. Email: [email protected] Received August 4th, 2009; revised September 1st, 2009; accepted September 9th, 2009. ABSTRACT This paper analyzes two methods that a magnetic field can be generated, and classifies them under two types: 1) Self-field: a magnetic field can be generated by electrically charged particles move, and its characteristic is that it can’t be independent of the electrically charged particles. 2) Radiation field: a magnetic field can be generated by electric field change, and its characteristic is that it independently exists. Lorentz Force Propeller (ab. LFP) utilize the charac- teristic that radiation magnetic field independently exists. The carrier of the moving electrically charged particles and the device generating the changing electric field are fixed together to form a system. When the moving electrically charged particles under the action of the Lorentz force in the radiation magnetic field, the system achieves propulsion. Same as rocket engine, the LFP achieves propulsion in vacuum. LFP can generate propulsive force only by electric energy and no propellant is required. The main disadvantage of LFP is that the ratio of propulsive force to weight is small. Keywords: Electric Field, Magnetic Field, Self-Field, Radiation Field, the Lorentz Force 1. Introduction also due to the changes in observation angle.) “If the electric quantity carried by the particles is certain, the The magnetic field generated by a changing electric field magnetic field generated by the particles is entirely de- is a kind of radiation field and it independently exists. -

Magnetostatics: Part 1 We Present Magnetostatics in Comparison with Electrostatics

Magnetostatics: Part 1 We present magnetostatics in comparison with electrostatics. Sources of the fields: Electric field E: Coulomb’s law Magnetic field B: Biot-Savart law Forces exerted by the fields: Electric: F = qE Mind the notations, both Magnetic: F = qvB printed and hand‐written Does the magnetic force do any work to the charge? F B, F v Positive charge moving at v B Negative charge moving at v B Steady state: E = vB By measuring the polarity of the induced voltage, we can determine the sign of the moving charge. If the moving charge carriers is in a perfect conductor, then we can have an electric field inside the perfect conductor. Does this contradict what we have learned in electrostatics? Notice that the direction of the magnetic force is the same for both positive and negative charge carriers. Magnetic force on a current carrying wire The magnetic force is in the same direction regardless of the charge carrier sign. If the charge carrier is negative Carrier density Charge of each carrier For a small piece of the wire dl scalar Notice that v // dl A current-carrying wire in an external magnetic field feels the force exerted by the field. If the wire is not fixed, it will be moved by the magnetic force. Some work must be done. Does this contradict what we just said? For a wire from point A to point B, For a wire loop, If B is a constant all along the loop, because Let’s look at a rectangular wire loop in a uniform magnetic field B. -

Lecture 8: Magnets and Magnetism Magnets

Lecture 8: Magnets and Magnetism Magnets •Materials that attract other metals •Three classes: natural, artificial and electromagnets •Permanent or Temporary •CRITICAL to electric systems: – Generation of electricity – Operation of motors – Operation of relays Magnets •Laws of magnetic attraction and repulsion –Like magnetic poles repel each other –Unlike magnetic poles attract each other –Closer together, greater the force Magnetic Fields and Forces •Magnetic lines of force – Lines indicating magnetic field – Direction from N to S – Density indicates strength •Magnetic field is region where force exists Magnetic Theories Molecular theory of magnetism Magnets can be split into two magnets Magnetic Theories Molecular theory of magnetism Split down to molecular level When unmagnetized, randomness, fields cancel When magnetized, order, fields combine Magnetic Theories Electron theory of magnetism •Electrons spin as they orbit (similar to earth) •Spin produces magnetic field •Magnetic direction depends on direction of rotation •Non-magnets → equal number of electrons spinning in opposite direction •Magnets → more spin one way than other Electromagnetism •Movement of electric charge induces magnetic field •Strength of magnetic field increases as current increases and vice versa Right Hand Rule (Conductor) •Determines direction of magnetic field •Imagine grasping conductor with right hand •Thumb in direction of current flow (not electron flow) •Fingers curl in the direction of magnetic field DO NOT USE LEFT HAND RULE IN BOOK Example Draw magnetic field lines around conduction path E (V) R Another Example •Draw magnetic field lines around conductors Conductor Conductor current into page current out of page Conductor coils •Single conductor not very useful •Multiple winds of a conductor required for most applications, – e.g. -

Modeling of Ferrofluid Passive Cooling System

Excerpt from the Proceedings of the COMSOL Conference 2010 Boston Modeling of Ferrofluid Passive Cooling System Mengfei Yang*,1,2, Robert O’Handley2 and Zhao Fang2,3 1M.I.T., 2Ferro Solutions, Inc, 3Penn. State Univ. *500 Memorial Dr, Cambridge, MA 02139, [email protected] Abstract: The simplicity of a ferrofluid-based was to develop a model that supports results passive cooling system makes it an appealing from experiments conducted on a cylindrical option for devices with limited space or other container of ferrofluid with a heat source and physical constraints. The cooling system sink [Figure 1]. consists of a permanent magnet and a ferrofluid. The experiments involved changing the Ferrofluids are composed of nanoscale volume of the ferrofluid and moving the magnet ferromagnetic particles with a temperature- to different positions outside the ferrofluid dependant magnetization suspended in a liquid container. These experiments tested 1) the effect solvent. The cool, magnetic ferrofluid near the of bringing the heat source and heat sink closer heat sink is attracted toward a magnet positioned together and using less ferrofluid, and 2) the near the heat source, thereby displacing the hot, optimal position for the permanent magnet paramagnetic ferrofluid near the heat source and between the heat source and sink. In the model, setting up convective cooling. This paper temperature-dependent magnetic properties were explores how COMSOL Multiphysics can be incorporated into the force component of the used to model a simple cylinder representation of momentum equation, which was coupled to the such a cooling system. Numerical results from heat transfer module. The model was compared the model displayed the same trends as empirical with experimental results for steady-state data from experiments conducted on the cylinder temperature trends and for appropriate velocity cooling system.