Image File Formats, Digital Archival and TI/A

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Preferred Formats National Archives of the Netherlands in View of Sustainable Accessibility

Preferred formats National Archives of the Netherlands In view of sustainable accessibility Version 1.0, November 2016 Contents Contents—2 1 Introduction—3 2 Why preferred formats?—6 Archival regulation: ‘open unless...’—6 Management and availability: easier with minimal diversity—6 Open standards and interoperability: as few obstacles as possible—7 3 Preferred formats and acceptable formats—8 Summary of referred formats and acceptable formats—8 Overview preferred formats including substantiation—8 Overview of acceptable formats including substantiation—10 Appendix 1: Archival regulation—12 Appendix 2: Dutch Standardisation Forum and open standards—14 What are open standards?—14 Why open standards?—14 Interoperability and supplier independence—14 Publishing details—15 Page 2 van 15 1 Introduction The National Archives’ e-Depot can receive, sustainably store and make available digital information in a variety of forms and formats. But in light of digital sustainability, the National Archives has a number of preferred formats for the information supplied by the legal caretakers. This document describes those preferred formats and provides a substantiation for their use. This allows custodians to take sustainable accessibility into account from the inception of their information. Context and cause The document Preferred formats of the National Archives is an elaboration of its Preservation Policy. The Preservation Policy describes the overall policy for preservation, i.e. the way in which the National Archives keeps the digital information it manages authentic and useable. In addition to Preferred formats, the Preservation Policy is elaborated upon in other documents.1 Figure 1 depicts the components of the National Archives’ preservation policy. Preservation policy Policy (what) Strategy and Strategies and standards standards (why which choices) Information types Preferred formats Technical Registry Preservation Watch Essential characteristics (Monitor Designated Community & Technology), incl. -

File Formats Slides

Workshop Background Purpose • To provide you with resources and tools to help you know how to handle file format decisions as a researcher. Context • Workshop Series: Preservation and Curation of ETD Research Data and Complex Digital Objects • Other topics: Copyright, Data Organization, Metadata, Storage, Version Control • https://educopia.org/research/etdplus Photo by Dmitri Popov on Unsplash Learning Objectives • Understand you have a range of file format options and that each choice has implications for future use and access. • Gain exposure to tools for archiving particularly challenging file types (e.g., web pages). • Understand how to reduce your risk via using export and “save as” functions. Photo by Christian Fregnan on Unsplash Examples of file formats • Images: jpg, gif, tiff, png, ai, svg, ... • Video: mpeg, m2tvs, flv, dv, ... • GIS: kml, dxf, shp, tiff, ... • CAD: dxf, dwg, pdf, … • Data: csv, mdf, fp, spv, xls, tsv, ... Key concept The file formats you choose will determine how easy (or difficult!) your research outputs are to access and build upon in the future. How to choose • Use software that imports and exports data in common formats to which you know you’ll have long-term access. • Ask advisors and colleagues what formats they use and why. • Choose a format with functions that support your research needs (e.g., collaboration). • Save your content in multiple formats to spread your risk across software platforms (e.g., docx, pdf, & txt; or mp4, avi, & mpg). Photo by Bryan Minear on Unsplash Informing your decision • Sustainability of Digital Formats https://www.loc.gov/preservation/digital/ formats/intro/intro.shtml • Recommended Formats Statement https://www.loc.gov/preservation/resources/rf s/ Photo by Lou Levit on Unsplash Archiving Web-based Resources • Wayback Machine (Internet Archive) https://archive.org/web/ • Robust Links http://robustlinks.mementoweb.org/ • Screen shots Photo by Logan Popoff on Unsplash File Format Conversions • Options include proprietary, freeware, and open source solutions. -

List of File Formats

Nicole Martin November 4, 2007 Digital Preservation - MIAP List of File Formats File Name: Advanced Audio Coding File Extension: .aac Creator: Collaboration between corporations approved by MPEG Creation Date: 1997 Media Type: Sound Format: Lossy Compression Notes: Developed to work as a part of MPEG-4, the AAC file format employs a very efficient form of compression meant to improve on the standard MP3. File Name: Advanced Authoring Format File Extension: .aaf Creator: Advanced Media Workflow Association Creation Date: 2000 Media Type: Moving Image Format: Uncompressed Notes: Created specifically for use in the post-production/editing environment to address interoperability issues. The AAF file format acts as a wrapper, is capable of storing metadata, and was designed to be versatile enough to withstand the myriad changes inherent in a production setting. File Name: Audio Interchange File Format File Extension: .aiff Creator: Electronic Arts Interchange and Apple Computer, Inc. Creation Date: 1988 Media Type: Sound Format: Uncompressed Notes: Standard Macintosh file format that is compatible with Windows (.aif) and often used by digital audio devices. Regular AIFF files are uncompressed, but compressed versions of the format (AIFF-C or AIFC) were developed to function with various codecs. File Name: Audio Video Interleave File Extension: .avi Creator: Microsoft Creation Date: 1992 Media Type: Moving Image Format: Container Notes: File most often created when DV files are imported from a camcorder to computer. File Name: Bitmap File Extension: .bmp Creator: IBM and Microsoft Creation Date: 1988 Media Type: Still Image Format: Compressed or Uncompressed Notes: Originally created for release with the Windows OS/2 operating system, BMPs are raster image files that are able to range from large, high quality files to small files of lesser quality. -

ABBYY® Finereader 14

ABBYY® FineReader 14 User’s Guide © 2017 ABBYY Production LLC. All rights reserved. ABBYY® FineReader 14 User’s Guide Information in this document is subject to change w ithout notice and does not bear any commitment on the part of ABBYY. The softw are described in this document is supplied under a license agreement. The softw are may only be used or copied in strict accordance w ith the terms of the agreement. It is a breach of the "On legal protection of softw are and databases" law of the Russian Federation and of international law to copy the softw are onto any medium unless specifically allow ed in the license agreement or nondisclosure agreements. No part of this document may be reproduced or transmitted in any from or by any means, electronic or other, for any purpose, w ithout the express w ritten permission of ABBYY. Copyrights 262 2 ABBYY® FineReader 14 User’s Guide Contents Introducing ABBYY FineReader ..................................................................................... 8 About ABBYY FineReader ........................................................................................... 9 What's New in ABBYY FineReader .............................................................................. 11 The New Task window ................................................................................................ 13 Viewing and editing PDFs ........................................................................................... 15 Quick conversion .................................................................................................... -

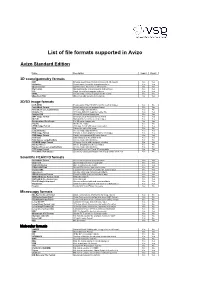

List of File Formats Supported in Avizo

List of file formats supported in Avizo Avizo Standard Edition Name Description Import Export 3D scene/geometry formats DXF Drawing Interchange Format for AutoCAD 3D models Yes Yes HxSurface Avizo's native format for triangular surfaces Yes Yes Open Inventor Open Inventor file format for 3D models Yes Yes Ply Format Stanford triangle format for points and surfaces Yes Yes STL Simple format for triangular surfaces Yes Yes VRML Virtual reality markup language for 3D models Yes Yes Wavefront OBJ Wavefront OBJ generic 3D file format No Yes 2D/3D image formats ACR-NEMA Predecessor of the DICOM format for medical images Yes No AmiraMesh Format Avizo's native general purpose format Yes Yes AmiraMesh as LargeDiskData Access image data blockwise Yes No Analyze 7.5 3D image data with separate header file Yes Yes AnalyzeAVW 2D and 3D medical image data Yes Yes BMP Image Format Uncompressed Windows bitmap format Yes Yes DICOM Standard file format for medical images Yes Yes Encapsulated PostScript For 2D raster images only No Yes Interfile Interfile file reader Yes No JPEG Image Format 2D image format with lossy compression Yes Yes LDA VolumeViz native file format Yes No LargeDiskData Access image data blockwise Yes Yes PNG Image Format Portable network graphics format for 2D images Yes Yes PNM Image Format Simple uncompressed 2D image format Yes Yes Raw Data Binary data as a 3D uniform field Yes Yes Raw Data as LargeDiskData Access image data blockwise Yes No SGI-RGB Image Format 2D image format with run-length encoding Yes Yes Stacked-Slices Info -

2005 3D Viewers Roundup Product Reference Guide

2005 3D Viewers Roundup Product Reference Guide June 2005 As product development becomes increasingly more globalized, more and more players— both inside and outside of design engineering departments—are involved in the product development process. For organizations both large and small, 3D viewing technology is taking on more importance as it enables collaboration within and without the company by letting users view, markup, and print 3D CAD files without the originating CAD software. But how do you know which 3D viewing solution is best for your organization? With the goal of helping our community members address this question, we bring you the 3D Viewers Roundup. The 2005 3D Viewers Roundup consists of feature articles contributed by industry experts and this Product Reference Guide, which lists specifications for products that provide a range of viewing, markup, and collaboration capabilities in CAD neutral and cross-CAD file formats. See the associated articles under Community Features, “A Higher Level View of Enterprise Data Assets” and “Product Visualization across the Extended Enterprise.” These articles provide differing perspectives on selecting and implementing 3D Viewer technologies for collaboration on product development throughout the organization and beyond. The data herein was compiled using submissions from the listed companies. Note that it is not an exhaustive list of companies, nor does it include all the products of each company. However, we have attempted to include all the major software solutions. ConnectPress Ltd. cannot guarantee the accuracy of this data; we suggest you contact specific companies for further information, particularly to confirm technical details regarding their products. Space does not permit us to list all file formats supported, nor all versions of 3D CAD supported. -

Falcongaze Securetower User Guide

Falcongaze SecureTower. User Guide Table of Contents 1 What's............................................................................................................................7 New 2 Program............................................................................................................................8 overview 3 Tips for............................................................................................................................9 Guide 4 Getting............................................................................................................................10 started. Connecting to the server 5 Console............................................................................................................................13 options 5.1 Tips &.........................................................................................................................20 Tricks in the console 6 Information............................................................................................................................24 search 6.1 Full-text.........................................................................................................................26 search 6.2 Search.........................................................................................................................29 by data type 6.3 General.........................................................................................................................30 search parameters 6.4 Additional.........................................................................................................................31 -

11 – 3D Modeling for Games

CSc 165 Lecture Note Slides 11 - 3D Modeling For Games CSc 165 Computer Game Architecture Overview • Model Characteristics 11 – 3D Modeling • 3D Model File Formats for Games • Model Loaders • Digital Content Creation (DCC) Tools • Skinning and UV-unwrapping 2 CSc 165 Lecture Note Slides CSc 165 Lecture Note Slides 11 - 3D Modeling For Games 11 - 3D Modeling For Games Models Static Data o 3D geometry (vertex data) o Polygon (face) data o Rendering attributes o Wireframe / Faceted / Smooth-shaded o Lighting & Materials o Texturing (“skinning”) data Animation Data (sometimes) o Model structure (skeletons, joints) o Model poses o Animation sequences o walk / run / jump / die … 3 4 CSc 165 Lecture Note Slides CSc 165 Lecture Note Slides 11 - 3D Modeling For Games 11 - 3D Modeling For Games Common 3D Model File Formats .msdl – Manchester Scene Description Language .nff & .enff – (Extended) Neutral File Format .3ds – 3D Studio Max format .obj – Alias|Wavefront Object Files .blend – Blender format .off – 3D mesh Object File Format .dae – COLLADA Digital Asset Exchange format .oogl – Object Oriented Graphics Library .dem – USGS Standard for Digital Elevation Models .ply – Stanford Scanning Repository format .dxf – Autodesk's AutoCAD format .pov – Persistence of Vision ray-tracer .hdf – Hierarchical Data Format .qd3d – Apple's QuickDraw 3D metafile format .iges – Initial Graphics Exchange Specification .viz – used by Division's dVS/dVISE .iv – Open Inventor File Format Info .vrml – Virtual Reality Modeling Language .lwlo, .lwob & .lwsc – Lightwave -

JPEG: Currently the Most Important Common File Format

Main Reference • Ze-Nian Li and Mark S Drew, Fundamentals of Multimedia, second edition. 2 Chapter Three Graphics and Image Data Representations 3 Content 3-1: Graphics/Image Data Types 3-2: Popular File Formats. 4 Outlines • This chapter introduces: ▪ how best to represent the graphics and image data since it is of crucial importance in the study of multimedia. ▪ Starting with 1-bit images, then 8-bit gray images, then 24- bit color images and 8-bit versions of color images. • The specifics of file formats for storing such images are also discussed. 5 3.1 Graphics/Image Data Types • Table 3.1 shows a list of file formats used in the popular product Adobe Premiere. • We concentrate on GIF and JPG image file formats, since the GIF file format is one of the simplest and contains several fundamental features, • and the JPG file format is arguably the most important overall. 6 3.1 Graphics/Image Data Types 7 3.1.1 1-Bit Images • Images consist of pixels (picture elements in digital images). • A 1-bit image (also called binary image) consists of on and off bits only and thus is the simplest type of image. • Each pixel is stored as a single bit (0 or 1). • It is also sometimes called a 1-bit monochrome (called Lena image by multimedia scientists) image since it contains no color. See Figures in next two slides. • 1-bit images can be satisfactory for pictures containing only simple graphics and text. • Fax machines use 1-bit data, so in fact 1-bit images are still important, even though storage capacities have increased enough to permit the use of imaging that carries more information. -

Registry Support for Multimedia and Metadata in Emu 3.2.03

Registry support for multimedia and metadata in EMu 3.2.03. • Overview • ImageMagick • Multimedia o Audio o Video o Images • Metadata o EXIF o IPTC o XMP o Embed in derivatives o Extract into Multimedia module o Limiting Colours computation Overview The image, audio and video libraries used to support multimedia have been replaced in KE EMu 3.2.03. The previous libraries were becoming dated and lacked support for newer file formats, in particular 16 bit graphics and CMYK colour spaces, as well as JPEG 2000. The previous libraries also used a simple algorithm for resizing images, which led to loss of clarity and colour. Rather than tie EMu image development to a third party vendor an open source solution was adopted as this provides development for new image formats and metadata standards as they emerge. It was decided that ImageMagick offered the functionally to expand the current image support in EMu. Unfortunately ImageMagick does not provide support for audio or video formats, so it was decided to build this functionality into EMu rather then use third party libraries. Access to metadata stored in image files is made available through ImageMagick. In particular, it has limited support for EXIF, IPTC and XMP metadata profiles. EMu now uses this support to extract metadata from master images and to embed metadata into derived images. This document describes how the new multimedia and metadata features can be configured using the EMu Registry. ImageMagick The ImageMagick libraries distributed with EMu are stored under the same directory as the program executable. If a network installation is performed, the libraries reside on a server machine in a directory accessible to all client machines. -

List of File Formats - Wikipedia, the Free Encyclopedia

List of file formats - Wikipedia, the free encyclopedia http://en.wikipedia.org/w/index.php?title=List_of_file_fo... List of file formats From Wikipedia, the free encyclopedia See also: List of file formats (alphabetical) This is a list of file formats organized by type, as can be found on computers. Filename extensions are usually noted in parentheses if they differ from the format name or abbreviation. In theory, using the basic Latin alphabet (A–Z) and an extension of up to three single-cased letters, 18,279 combinations can be made (263+262+261+260). When other acceptable characters are accepted, the maximum number is increased (very possibly to a number consisting of at least six digits). Many operating systems do not limit filenames to a single extension shorter than 4 characters, like what was common with some operating systems that supported the FAT file system. Examples of operating systems that don't have such a small limit include Unix-like systems. Also, Microsoft Windows NT, 95, 98, and Me don't have a three character limit on extensions for 32-bit or 64-bit applications on file systems other than pre-Windows 95/Windows NT 3.5 versions of the FAT file system. Some filenames are given extensions longer than three characters. Contents 1 Archive and compressed 1.1 Physical recordable media archiving 2 Computer-aided 2.1 Computer-aided design (CAD) 2.2 Electronic design automation (EDA) 2.3 Test technology 3 Database 4 Desktop publishing 5 Document 6 Font file 7 Geographic information system 8 Graphical information organizers -

Appendix B Open Source, Standards, Document | 3 Introduction

Getting Started Guide Appendix B Open Source, Open Standards, OpenDocument Copyright This document is Copyright © 2020 by the LibreOffice Documentation Team. Contributors are listed below. You may distribute it and/or modify it under the terms of either the GNU General Public License (http://www.gnu.org/licenses/gpl.html), version 3 or later, or the Creative Commons Attribution License (http://creativecommons.org/licenses/by/4.0/), version 4.0 or later. All trademarks within this guide belong to their legitimate owners. Contributors To this edition Jean Hollis Weber Steve Fanning Kees Kriek To previous editions Olivier Hallot Valerii Goncharuk Jean Hollis Weber Dave Barton Andrew Jensen Peter Schofield Feedback Please direct any comments or suggestions about this document to the Documentation Team’s mailing list: [email protected] Note Everything you send to a mailing list, including your email address and any other personal information that is written in the message, is publicly archived and cannot be deleted. Publication date and software version Published September 2020. Based on LibreOffice 6.4. Note for macOS users Some keystrokes and menu items are different on macOS from those used in Windows and Linux. The table below gives some common substitutions for the instructions in this chapter. For a more detailed list, see the application Help. Windows or Linux macOS equivalent Effect Tools > Options LibreOffice > Preferences Access setup options Right-click Control+click and/or right-click Open a context menu depending