Building an Intelligent Knowledgebase of Brachiopod Paleontology

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

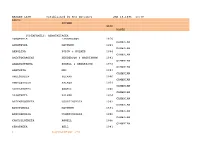

BRAGEN LIST Established by Rex Doescher JAN 19,1996 13:38 GENUS AUTHOR DATE RANGE

BRAGEN LIST established by Rex Doescher, JAN 19, 1996 13:38

SUPERFAMILY: ACROTRETACEA

| GENUS | AUTHOR | DATE | RANGE |
|---|---|---|---|
| ACROTHELE | LINNARSSON | 1876 | CAMBRIAN |
| ACROTHYRA | MATTHEW | 1901 | CAMBRIAN |
| AKMOLINA | POPOV & HOLMER | 1994 | CAMBRIAN |
| AMICTOCRACENS | HENDERSON & MACKINNON | 1981 | CAMBRIAN |
| ANABOLOTRETA | ROWELL & HENDERSON | 1978 | CAMBRIAN |
| ANATRETA | MEI | 1993 | CAMBRIAN |
| ANELOTRETA | PELMAN | 1986 | CAMBRIAN |
| ANGULOTRETA | PALMER | 1954 | CAMBRIAN |
| APHELOTRETA | ROWELL | 1980 | CAMBRIAN |
| APSOTRETA | PALMER | 1954 | CAMBRIAN |
| BATENEVOTRETA | USHATINSKAIA | 1992 | CAMBRIAN |
| BOTSFORDIA | MATTHEW | 1891 | CAMBRIAN |
| BOZSHAKOLIA | USHATINSKAIA | 1986 | CAMBRIAN |
| CANTHYLOTRETA | ROWELL | 1966 | CAMBRIAN |
| CERATRETA | BELL | 1941 | CAMBRIAN |
| CURTICIA | WALCOTT | 1905 | CAMBRIAN |
| DACTYLOTRETA | ROWELL & HENDERSON | 1978 | CAMBRIAN |
| DEARBORNIA | WALCOTT | 1908 | CAMBRIAN |
| DIANDONGIA | RONG | 1974 | CAMBRIAN |
| DICONDYLOTRETA | MEI | 1993 | CAMBRIAN |
| DISCINOLEPIS | WAAGEN | 1885 | CAMBRIAN |
| DISCINOPSIS | MATTHEW | 1892 | CAMBRIAN |
| EDREJA | KONEVA | 1979 | CAMBRIAN |
| EOSCAPHELASMA | KONEVA & AL | 1990 | CAMBRIAN |
| EOTHELE | ROWELL | 1980 | CAMBRIAN |
| ERBOTRETA | HOLMER & USHATINSKAIA | 1994 | CAMBRIAN |
| GALINELLA | POPOV & HOLMER | 1994 | CAMBRIAN |
| GLYPTACROTHELE | TERMIER & TERMIER | 1974 | CAMBRIAN |
| GLYPTIAS | WALCOTT | 1901 | CAMBRIAN |
| HADROTRETA | ROWELL | 1966 | CAMBRIAN |
| HOMOTRETA | BELL | 1941 | CAMBRIAN |
| KARATHELE | KONEVA | 1986 | CAMBRIAN |
| KLEITHRIATRETA | ROBERTS | 1990 | CAMBRIAN |
| KOTUJOTRETA | USHATINSKAIA | 1994 | CAMBRIAN |
| KOTYLOTRETA | KONEVA | 1990 | CAMBRIAN |
| LAKHMINA | OEHLERT | 1887 | CAMBRIAN |
| LINNARSSONELLA | WALCOTT | 1902 | CAMBRIAN |
| LINNARSSONIA | WALCOTT | 1885 | CAMBRIAN |
| LONGIPEGMA | POPOV & HOLMER | 1994 | CAMBRIAN |
| LUHOTRETA | MERGL & SLEHOFEROVA | | |

The Global boundary Stratotype Section and Point (GSSP) of the Drumian Stage (Cambrian) in the Drum Mountains, Utah, USA

by Loren E. Babcock¹, Richard A. Robison², Margaret N. Rees³, Shanchi Peng⁴, and Matthew R. Saltzman¹

The Global boundary Stratotype Section and Point (GSSP) of the Drumian Stage (Cambrian) in the Drum Mountains, Utah, USA

¹ School of Earth Sciences, The Ohio State University, 125 South Oval Mall, Columbus, OH 43210, USA. Email: [email protected] and [email protected]
² Department of Geology, University of Kansas, Lawrence, KS 66045, USA. Email: [email protected]
³ Department of Geoscience, University of Nevada, Las Vegas, Las Vegas, NV 89145, USA. Email: [email protected]
⁴ State Key Laboratory of Palaeobiology and Stratigraphy, Nanjing Institute of Geology and Palaeontology, Chinese Academy of Sciences, 39 East Beijing Road, Nanjing 210008, China. Email: [email protected]

The Global boundary Stratotype Section and Point (GSSP) for the base of the Drumian Stage (Cambrian Series 3) is defined at the base of a limestone (calcisiltite) layer 62 m above the base of the Wheeler Formation in the Stratotype Ridge section, Drum Mountains, Utah, USA. The GSSP level contains the lowest occurrence of the cosmopolitan agnostoid trilobite Ptychagnostus atavus (base of the P.

... correlated with precision through all major Cambrian regions. Among the methods that should be considered in the selection of a GSSP (Remane et al., 1996), biostratigraphic, chemostratigraphic, paleogeographic, facies-relationship, and sequence-stratigraphic information is available (e.g., Randolph, 1973; White, 1973; McGee, 1978; Dommer, 1980; Grannis, 1982; Robison, 1982, 1999; Rowell et al., 1982; Rees, 1986; Langenburg et al., 2002a, 2002b; Babcock et al., 2004; Zhu et al., 2006); that information is summarized here. Voting members of the International Subcommission on Cam-

Bibliography and Index

Bulletin No. 203. Series G, Miscellaneous, 23

DEPARTMENT OF THE INTERIOR, UNITED STATES GEOLOGICAL SURVEY, CHARLES D. WALCOTT, DIRECTOR

BIBLIOGRAPHY AND INDEX FOR THE YEAR 1901 BY FRED BOUGHTON WEEKS

WASHINGTON, GOVERNMENT PRINTING OFFICE, 1902

CONTENTS. Letter of transmittal; Introduction; List of publications examined; Bibliography; Addenda to bibliographies for previous years; Classified key to the index; Index.

LETTER OF TRANSMITTAL. DEPARTMENT OF THE INTERIOR, UNITED STATES GEOLOGICAL SURVEY, Washington, D. C., July % SIR: I have the honor to transmit herewith the manuscript of a Bibliography and Index of North American Geology, Paleontology, Petrology, and Mineralogy for the Year 1901, and to request that it be published as a Bulletin of the Survey. Yours respectfully, F. B. WEEKS. Hon. CHARLES D. WALCOTT, Director United States Geological Survey.

BIBLIOGRAPHY AND INDEX OF NORTH AMERICAN GEOLOGY, PALEONTOLOGY, PETROLOGY, AND MINERALOGY FOR THE YEAR 1901. By FRED BOUGHTON WEEKS.

INTRODUCTION. The preparation and arrangement of the material of the Bibliography and Index for 1901 is similar to that adopted for the previous publications (Bulletins Nos. 130, 135, 146, 149, 156, 162, 172, 188, and 189). Several papers that should have been entered in the previous bulletins are here recorded, and the date of publication is given with each entry.

Bibliography. The bibliography consists of full titles of separate papers, arranged alphabetically by authors' names, an abbreviated reference to the publication in which the paper is printed, and a brief description of the contents, each paper being numbered for index reference.

Smithsonian Miscellaneous Collections

SMITHSONIAN MISCELLANEOUS COLLECTIONS VOLUME 116, NUMBER 5

Charles D. and Mary Vaux Walcott Research Fund

MIDDLE CAMBRIAN STRATIGRAPHY AND FAUNAS OF THE CANADIAN ROCKY MOUNTAINS (With 34 Plates) BY FRANCO RASETTI, The Johns Hopkins University, Baltimore, Maryland

(Publication 4046) CITY OF WASHINGTON, PUBLISHED BY THE SMITHSONIAN INSTITUTION, SEPTEMBER 18, 1951. BALTIMORE, MD., U. S. A.

CONTENTS. PART I. STRATIGRAPHY. Introduction; The problem; Acknowledgments; Summary of previous work; Method of work; Description of localities and sections; Terminology; Bow Lake; Hector Creek; Slate Mountains; Mount Niblock; Mount Whyte—Plain of Six Glaciers; Ross Lake; Mount Bosworth; Mount Victoria; Cathedral Mountain; Popes Peak; Eiffel Peak; Mount Temple; Pinnacle Mountain; Mount Schaffer; Mount Odaray; Park Mountain; Mount Field: Kicking Horse Mine; Mount Field: Burgess Quarry; Mount Stephen; General description; Monarch Creek; Monarch Mine; North Gully and Fossil Gully; Cambrian formations: Lower Cambrian; St. Piran sandstone; Upper boundary of formation; Peyto limestone member; Cambrian formations: Middle Cambrian; Mount Whyte formation; Type section; Lithology and thickness; Mount Whyte-Cathedral contact; Lake Agnes shale lentil; Yoho shale lentil

North American Geology, Paleontology, Petrology, and Mineralogy

Bulletin No. 240. Series G, Miscellaneous, 28

DEPARTMENT OF THE INTERIOR, UNITED STATES GEOLOGICAL SURVEY, CHARLES D. WALCOTT, DIRECTOR

BIBLIOGRAPHY AND INDEX OF NORTH AMERICAN GEOLOGY, PALEONTOLOGY, PETROLOGY, AND MINERALOGY FOR THE YEAR 1903 BY FRED BOUGHTON WEEKS

WASHINGTON, GOVERNMENT PRINTING OFFICE, 1904

CONTENTS. Letter of transmittal; Introduction; List of publications examined; Bibliography; Addenda to bibliographies for previous years; Classified key to the index; Index.

LETTER OF TRANSMITTAL. DEPARTMENT OF THE INTERIOR, UNITED STATES GEOLOGICAL SURVEY, Washington, D. C., June 7, 1904. SIR: I have the honor to transmit herewith the manuscript of a bibliography and index of North American geology, paleontology, petrology, and mineralogy for the year 1903, and to request that it be published as a bulletin of the Survey. Very respectfully, F. B. WEEKS, Librarian. Hon. CHARLES D. WALCOTT, Director United States Geological Survey.

BIBLIOGRAPHY AND INDEX OF NORTH AMERICAN GEOLOGY, PALEONTOLOGY, PETROLOGY, AND MINERALOGY FOR THE YEAR 1903. By FRED BOUGHTON WEEKS.

INTRODUCTION. The arrangement of the material of the Bibliography and Index for 1903 is similar

The Palaeontology Newsletter

The Palaeontology Newsletter 100

Contents: Editorial; Association Business; Annual Meeting 2019; Awards and Prizes AGM 2018; PalAss YouTube Ambassador sought; Association Meetings; News; From our correspondents; A Palaeontologist Abroad; Behind the Scenes: Yorkshire Museum; She married a dinosaur; Spotlight on Diversity; Future meetings of other bodies; Meeting Reports; Obituary: Ralph E. Chapman; Grant Reports; Book Reviews; Palaeontology vol. 62 parts 1 & 2; Papers in Palaeontology vol. 5 part 1.

Reminder: The deadline for copy for Issue no. 101 is 3rd June 2019. On the Web: <http://www.palass.org/> ISSN: 0954-9900

Editorial

This 100th issue continues to put the “new” in Newsletter. Jo Hellawell writes about our new President Charles Wellman, and new Publicity Officer Susannah Lydon gives us her first news column. New award winners are announced, including the first ever PalAss Exceptional Lecturer (Stephan Lautenschlager). (Get your bids for Stephan’s services in now; check out pages 34 and 107.) There are also adverts, courtesy of Lucy McCobb, looking for the face of the Association’s new YouTube channel as well as a call for postgraduate volunteers to join the Association’s outreach efforts.

But of course palaeontology would not be the same without the old. Behind the Scenes at the Museum returns with Sarah King’s piece on The Yorkshire Museum (York, UK). Norman MacLeod provides a comprehensive obituary of Ralph Chapman, and this issue’s palaeontologists abroad (Rebecca Bennion, Nicolás Campione and Paige dePolo) give their accounts of life in Belgium, Australia and the UK, respectively.

Paleoecology of the Greater Phyllopod Bed Community, Burgess Shale

Available online at www.sciencedirect.com

Palaeogeography, Palaeoclimatology, Palaeoecology 258 (2008) 222–256, www.elsevier.com/locate/palaeo

Paleoecology of the Greater Phyllopod Bed community, Burgess Shale

Jean-Bernard Caron*, Donald A. Jackson

Department of Ecology and Evolutionary Biology, University of Toronto, Toronto, Ontario, Canada M5S 3G5

Accepted 3 May 2007

Abstract

To better understand temporal variations in species diversity and composition, ecological attributes, and environmental influences for the Middle Cambrian Burgess Shale community, we studied 50,900 fossil specimens belonging to 158 genera (mostly monospecific and non-biomineralized) representing 17 major taxonomic groups and 17 ecological categories. Fossils were collected in situ from within 26 massive siliciclastic mudstone beds of the Greater Phyllopod Bed (Walcott Quarry, Fossil Ridge). Previous taphonomic studies have demonstrated that each bed represents a single obrution event capturing a predominantly benthic community represented by census- and time-averaged assemblages, preserved within habitat. The Greater Phyllopod Bed (GPB) corresponds to an estimated depositional interval of 10 to 100 KA and thus potentially preserves community patterns in ecological and short-term evolutionary time. The community is dominated by epibenthic vagile deposit feeders and sessile suspension feeders, represented primarily by arthropods and sponges. Most species are characterized by low abundance and short stratigraphic range and usually do not recur through the section. It is likely that these are stenotopic forms (i.e., tolerant of a narrow range of habitats, or having a narrow geographical distribution). The few recurrent species tend to be numerically abundant and may represent eurytopic organisms (i.e., tolerant of a wide range of habitats, or having a wide geographical distribution).

North American Geology, Paleontology Petrology, and Mineralogy

Bulletin No. 221. Series G, Miscellaneous, 25

DEPARTMENT OF THE INTERIOR, UNITED STATES GEOLOGICAL SURVEY, CHARLES D. WALCOTT, DIRECTOR

BIBLIOGRAPHY AND INDEX OF NORTH AMERICAN GEOLOGY, PALEONTOLOGY, PETROLOGY, AND MINERALOGY FOR THE YEAR 1902 BY FRED BOUGHTON WEEKS

WASHINGTON, GOVERNMENT PRINTING OFFICE, 1903

CONTENTS. Letter of transmittal; Introduction; List of publications examined; Bibliography; Addenda to bibliographies for previous years; Classified key to the index; Index.

LETTER OF TRANSMITTAL. DEPARTMENT OF THE INTERIOR, UNITED STATES GEOLOGICAL SURVEY, Washington, D. C., October 20, 1903. SIR: I have the honor to transmit herewith the manuscript of a bibliography and index of North American geology, paleontology, petrology, and mineralogy for the year 1902, and to request that it be published as a bulletin of the Survey. Very respectfully, F. B. WEEKS. Hon. CHARLES D. WALCOTT, Director United States Geological Survey.

BIBLIOGRAPHY AND INDEX OF NORTH AMERICAN GEOLOGY, PALEONTOLOGY, PETROLOGY, AND MINERALOGY FOR THE YEAR 1902. By FRED BOUGHTON WEEKS.

INTRODUCTION. The arrangement of the material of the Bibliography and Index for 1902 is similar to that adopted for the previous publications (Bulletins Nos. 130, 135, 146, 149, 156, 162, 172, 188, 189, and 203). Several papers that should have been entered in the previous bulletins are here recorded, and the date of publication is given with each entry.

Bibliography. The bibliography consists of full titles of separate papers, arranged alphabetically by authors' names, an abbreviated reference to the publication in which the paper is printed, and a brief description of the contents, each paper being numbered for index reference.

Subsurface Facies Analysis of the Cambrian

SUBSURFACE FACIES ANALYSIS OF THE CAMBRIAN CONASAUGA FORMATION AND KERBEL FORMATION IN EAST-CENTRAL OHIO

Bharat Banjade

A Thesis Submitted to the Graduate College of Bowling Green State University in partial fulfillment of the requirements for the degree of MASTER OF SCIENCE, December 2011

Committee: James E. Evans, Advisor; Charles M. Onasch; Jeffrey Snyder

ABSTRACT

James E. Evans, advisor

This study presents a subsurface facies analysis of the Cambrian Conasauga Formation and Kerbel Formation using well core and geophysical logs. Well-2580, drilled in Seneca County (Ohio), was used for facies analysis, and the correlation of facies was based on the gamma-ray (GR) log for three wells from adjacent counties in Ohio (Well-20154 in Erie County, Well-20233 in Huron County, and Well-20148 in Marion County). In Well-2580, the Conasauga Formation is 37-m thick and the Kerbel Formation is 23-m thick. Analysis of the core identified 18 lithofacies. Some of the lithofacies are siliciclastic rocks, including: massive, planar laminated, cross-bedded, and hummocky stratified sandstone with burrows; massive and planar-laminated siltstone; massive mudstone; heterolithic sandstone and silty mudstone with tidal rhythmites showing double mud drapes, flaser-, lenticular-, and wavy-beddings; and heterogeneous siltstone and silty mudstone with rhythmic planar-lamination. Other lithofacies are dolomitized carbonate rocks that originally were massive, oolitic, intraclastic, and fossiliferous limestones. In general, the Conasauga Formation is a mixed siliciclastic-carbonate depositional unit with abundant tidal sedimentary structures consistent with a shallow-marine depositional setting and the Kerbel Formation is a siliciclastic depositional unit consistent with a marginal-marine depositional setting.

Smithsonian Miscellaneous Collections

SMITHSONIAN MISCELLANEOUS COLLECTIONS, PART OF VOLUME LIII

CAMBRIAN GEOLOGY AND PALEONTOLOGY No. 5.—CAMBRIAN SECTIONS OF THE CORDILLERAN AREA (With Ten Plates) BY CHARLES D. WALCOTT

No. 1812. CITY OF WASHINGTON, published by the SMITHSONIAN INSTITUTION, December 10, 1908

Contents: Introduction; Correlation of sections; House Range section, Utah; Waucoba Springs section, California; Barrel Spring section, Nevada; Blacksmith Fork section, Utah; Dearborn River section, Montana; Mount Bosworth section, British Columbia; Bibliography; Index.

Illustrations:
Plate 13. Map of central portion of House Range, Utah
Plate 14. West face of House Range south of Marjum Pass
Plate 15. Northeast face of House Range south of Marjum Pass; ridge east and southeast of Wheeler Amphitheater, House Range
Plate 16. North side of Dome Canyon, House Range
Plate 17. West face of House Range, below Tatow Knob
Plate 18. Cleavage of quartzitic sandstones, Deep Spring Valley, California
Plate 19. Sherbrooke Ridge on Mount Bosworth, British Columbia
Plate 20. Ridge north of Castle Mountain, Alberta; profile of southeast front of Castle Mountain
Plate 21. Mount Stephen, British Columbia
Plate 22. Profile of mountains surrounding Lake Louise, Alberta

INTRODUCTION

My first study of a great section of Paleozoic rocks of the western side of North America was that of the Grand Canyon of the Colorado River, Arizona. In this section the Cambrian strata extend down to the horizon of the central portion of the Middle Cambrian (Acadian) where the Cambrian rests unconformably on the pre-Cambrian formations.*

* See American Jour.

List of Brachiopod Genera in 1995

List of Brachiopod Genera in 1995

List established by Rex Doescher

Aalenirhynchia:Shi&Grant:1993:Rhynchonella:Subdecorata:Davidson1851:Rhynchonellacea:Jurassic
Aberia:Melou:1990:Aberia:Havliceki:Melou1990:Orthacea:Ordovician
Aboriginella:Koneva:1983:Aboriginella:Denudata:Koneva1983:Lingulacea:Cambrian
Abrekia:Dagys:1974:Abrekia:Sulcata:Dagys1974:Rhynchonellacea:Triassic
Absenticosta:Lazarev:1991:Absenticosta:Uldzejtuensis:Suursuren&Lazarev1991:Productacea:Mississippian
Abyssorhynchia:Zezina:1980:Hemithyris:Craneana:Dall1895:Rhynchonellacea:Recent
Abyssothyris:Thomson:1927:Terebratula:Wyvillei:Davidson1878:Terebratulacea:Tertiary-Recent
Acambona:White:1865:Acambona:Prima:White1865:Retziacea:Mississippian
Acanthalosia:Waterhouse:1986:Acanthalosia:Domina:Waterhouse1986:Strophalosiacea:Carboniferous-Permian
Acanthambonia:Cooper:1956:Acanthambonia:Minutissima:Cooper1956:Lingulacea:Ordovician
Acanthatia:Muir-Wood&Cooper:1960:Heteralosia:Nupera:Stainbrook1947:Productacea:Devonian
Acanthobasiliola:Zezina:1981:Rhynchonella:Doederleini:Davidson1886:Rhynchonellacea:Recent
Acanthocosta:Roberts:1971:Acanthocosta:Teicherti:Roberts1971:Productacea:Mississippian
Acanthocrania:Williams:1943:Crania:Spiculata:Rowley1908:Craniacea:Ordovician-Permian
Acanthoglypha:Williams&Curry:1985:Streptis:Affinis:Reed1909:Porambonitacea:Ordovician
Acanthoplecta:Muir-Wood&Cooper:1960:Producta:Mesoloba:Phillips1836:Productacea:Mississippian
Acanthoproductus:Martynova:1970:Acanthoproductus:Bogdanovi:Martynova1970:Productacea:Devonian
Acanthorhynchia:Buckman:1918:Acanthothyris:Panacanthina:Buckman&Walker1889:Rhynchonellacea:Jurassic
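Each record in the genus list above appears to be eight colon-separated fields. The sketch below shows one way such a record might be split into named parts. The field names (`genus`, `author`, `year`, `type_genus`, `type_species`, `type_authority`, `superfamily`, `strat_range`) are assumptions inferred from the sample records, not labels given in the list itself, and `parse_record` is a hypothetical helper.

```python
from typing import NamedTuple


class GenusRecord(NamedTuple):
    # Field names are guesses based on the sample records, not from the source.
    genus: str
    author: str
    year: int
    type_genus: str
    type_species: str
    type_authority: str  # author and year fused, e.g. "Melou1990"
    superfamily: str
    strat_range: str


def parse_record(line: str) -> GenusRecord:
    """Split one colon-delimited record into its eight assumed fields."""
    parts = line.strip().split(":")
    if len(parts) != 8:
        raise ValueError(f"expected 8 fields, got {len(parts)}: {line!r}")
    genus, author, year, type_genus, type_species, authority, superfamily, rng = parts
    return GenusRecord(genus, author, int(year), type_genus,
                       type_species, authority, superfamily, rng)


if __name__ == "__main__":
    rec = parse_record(
        "Aberia:Melou:1990:Aberia:Havliceki:Melou1990:Orthacea:Ordovician"
    )
    print(rec.genus, rec.year, rec.superfamily, rec.strat_range)
```

Raising on a wrong field count, rather than padding or truncating, seems the safer choice here because OCR damage in a scanned list could easily add or drop a colon.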

Disturbance, 133, 134 — Geosyncline, 9

INDEX

Acadian (strata), 69
— disturbance, 133, 134
— geosyncline, 9
— plain, 7
— revolution, 125
Acrothele dbaria, 84
— coriacea, 88
— matthewi, 88
Acrothyra signata, 84
Acrotreta bisecta, 90
Adair Cove, 118
Agnostus Cove, 102
formation, 15, 56, 76-77, 78, 79, 83, 99, 100, 102
Agnostus gibbus, 74
— nathorsti, 74
Brögger, 73
confluens, 73
— pisiformis, 77, 89, 102, 132
affinis, 89
rugulosus, 89
validus, 89
zone, 56, 77, 78, 83, 89
Albert formation, 15, 106-108, 112, pl. 9
— shales, 106, 127
Alcock, F. B., 26, 100, 114
algae, 24
algal limestone, 112, 125, 133, pl. 5
Ami, 17
andesite, 50, 117
— flows, 115, 133
— tuff, 49
Annapolis formation, 121
Annularia latifolia, 119
anticline, 51, 52, 103, 107, 115, 116, 126, 127

Bailiella viola, 88
Balls Lake, 115
formation, 14, 113-114, 116, 127, 133, pl. 9
basins, 54, 55, 59, 60, 61, 62, 64, 91-104, 126, 132
Bassler, R. S., 86
Bastin, E. S., 105
batholith, 106
Bay of Fundy, 7, 8, 18, 23, 113, 115, 117, 118
beaches, 9, 52
Belgium, 90
Bell, Walter A., 18, 107, 110, 114, 117, 118, 119, 133
Bellerophon trilobate, 105
Bergeronia articephala, 86
— elegans, 86
— radegasti, 86
Beyrichona, 67
— beds, 67, 87, 93
— fauna, 86, 98
— sandstone, 66, 85, 86
— zone, 56, 66, 94
biotite, 29
— gneiss, 27, 29, 31, 32, pl. 9
— norite, 34
Black limestone, 70, 87, 88, 94, 99
— River, 16, 114, 115, 116
— sandstone, 65, 66, 93, 94, 96
— Shale Brook, 78, 99
formation, 15, 56, 77-80, 83
block-faulting, 19, 126, 134
Bloomsbury anticline, 109, 115, 127
— group, 6
— Mountain, 116, 127
— Ridge, 63, 127
Boar Head formation, 15, 108-109, 133, pl.