University of Groningen Opensesame Mathot, Sebastiaan; Schreij, Daniel

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

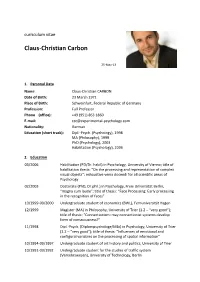

Claus-Christian Carbon

curriculum vitae Claus-Christian Carbon 25-May-13 1. Personal Data Name: Claus-Christian CARBON Date of Birth: 23 March 1971 Place of Birth: Schweinfurt, Federal Republic of Germany Profession: Full Professor Phone (office): +49 (951) 863 1860 E-mail: [email protected] Nationality: German Education (short track): Dipl.-Psych. (Psychology), 1998 MA (Philosophy), 1999 PhD (Psychology), 2003 Habilitation (Psychology), 2006 2. Education 03/2006 Habilitation (PD/Dr. habil) in Psychology, University of Vienna; title of habilitation thesis: “On the processing and representation of complex visual objects”; exhaustive venia docendi for all scientific areas of Psychology 02/2003 Doctorate (PhD, Dr.phil.) in Psychology, Freie Universität Berlin, “magna cum laude”; title of thesis: “Face Processing: Early processing in the recognition of faces” 10/1999-09/2000 Undergraduate student of economics (BWL), Fernuniversität Hagen 12/1999 Magister (MA) in Philosophy, University of Trier (1.2 – “very good”); title of thesis: “Connectionism: may connectionist systems develop form of consciousness?” 11/1998 Dipl.-Psych. (Diplompsychologe/MSc) in Psychology, University of Trier (1.2 – “very good”); title of thesis: “Influences of emotional and configural variables on the processing of spatial information” 10/1994-09/1997 Undergraduate student of art history and politics, University of Trier 10/1991-03/1992 Undergraduate student for the studies of traffic system (Verkehrswesen), University of Technology, Berlin Claus-Christian Carbon curriculum vitae - 2/21 - 07/1990-12/1990 Training and qualification for a national paramedic (Bavarian Red Cross) 06/1990 Abitur (GCSE) with focus on mathematics and physics, Dominikus Zimmermann Gymnasium, Landsberg am Lech Summer/Spring Schools 03/2008 EOG and Eyetracking in Psychological Research: Workshop of the University of Cologne (Prof. -

Travma Sonrası Stres Bozukluğu Tedavisinde Bilişsel Ve Davranışçı Yaklaşımlar 28

Hasan Kalyoncu Üniversitesi Psikoloji Bölümü www.hku.edu.tr Havalimanı Yolu Üzeri 8.Km 27410 Şahinbey/GAZİANTEP +90 (342) 211 80 80 - [email protected] EDİTÖR Doç. Dr. Şaziye Senem BAŞGÜL YARDIMCI EDİTÖR Arş. Gör. Saadet YAPAN YAYIN KURULU Prof. Dr. Mehmet Hakan TÜRKÇAPAR Prof. Dr. Mücahit ÖZTÜRK Prof. Dr. Bengi SEMERCİ Prof. Dr. Osman Tolga ARICAK Doç. Dr. Şaziye Senem BAŞGÜL Doç. Dr. Hanna Nita SCHERLER Öğr. Gör. Mehmet DİNÇ Öğr. Gör. Ferhat Jak İÇÖZ Öğr. Gör. Mediha ÖMÜR Arş. Gör. Saadet YAPAN Arş. Gör. Mahmut YAY Arş. Gör. Feyza TOPÇU DANIŞMA KURULU Prof. Dr. Can TUNCER Doç. Dr. Zümra ÖZYEŞİL Yrd. Doç. Dr. Itır TARI CÖMERT Dr. Özge MERGEN Dr. Akif AVCU KAPAK TASARIM Uğur Servet KARALAR GRAFİK UYGULAMA Yakup BAYRAM 0342 211 80 80 psikoloji.hku.edu.tr [email protected] Havalimanı Yolu Üzeri 8. Km 27410 Şahinbey/GAZİANTEP Sonbahar ve ilkbahar sayıları olarak yılda iki kere çıkar. İÇİNDEKİLER Önsöz 4 Editörden 5 Bilimsel Bir Araştırmanın Yol Haritası 6 Psikoterapilere Varoluşçu Bir Bakış 10 Anne, Baba ve Çocuk Tarafından Algılanan Ebeveyn Kabul-Ret ve Kontrolünün Çocuğun Duygu Düzenleme Becerisi İle İlişkisi 13 Fabrika İşçilerinde Stres ve Depresyon Arasındaki İlişki 20 Travma Sonrası Stres Bozukluğu Tedavisinde Bilişsel ve Davranışçı Yaklaşımlar 28 Araştırmalar ve Olgu Değerlendirmelerinde Nöro-Psikolojik Testler ve Bilgisayar Programlarının Bütünleştirilmesi: Bir Gözden Geçirme 32 Tez Özetleri 35 ÖNSÖZ Değerli okurlarımız, Hasan Kalyoncu Üniversitesi Psikoloji Bölümü öğretim üyeleri ve öğ- rencilerinin girişimiyle yayınlanan Psikoloji Araştırmaları dergisinin yeni bir sayısıyla yeniden karşınızdayız. Yolculuğumuz dördüncü sayı- sına ulaştı. Psikoloji Araştırmaları dergisinin bu sayısında, klinik çalışmalar ve göz- den geçirme derleme yazıları yer alıyor. -

A Proposal for a Data-Driven Approach to the Influence of Music

METHODS published: 20 August 2021 doi: 10.3389/fcvm.2021.699145 A Proposal for a Data-Driven Approach to the Influence of Music on Heart Dynamics Ennio Idrobo-Ávila 1*, Humberto Loaiza-Correa 1, Flavio Muñoz-Bolaños 2, Leon van Noorden 3 and Rubiel Vargas-Cañas 4 1 Escuela de Ingeniería Eléctrica y Electrónica, PSI - Percepción y Sistemas Inteligentes, Universidad del Valle, Cali, Colombia, 2 Departamento de Ciencias Fisiológicas, CIFIEX - Ciencias Fisiológicas Experimentales, Universidad del Cauca, Popayán, Colombia, 3 Department of Art, Music, and Theatre Sciences, IPEM—Institute for Systematic Musicology, Ghent University, Ghent, Belgium, 4 Departamento de Física, SIDICO - Sistemas Dinámicos, Instrumentación y Control, Universidad del Cauca, Popayán, Colombia Electrocardiographic signals (ECG) and heart rate viability measurements (HRV) provide information in a range of specialist fields, extending to musical perception. The ECG signal records heart electrical activity, while HRV reflects the state or condition of the autonomic nervous system. HRV has been studied as a marker of diverse psychological and physical diseases including coronary heart disease, myocardial infarction, and stroke. HRV has also been used to observe the effects of medicines, the impact of exercise and the analysis of emotional responses and evaluation of effects of various quantifiable Edited by: elements of sound and music on the human body. Variations in blood pressure, levels of Xiang Xie, stress or anxiety, subjective sensations and even changes in emotions constitute multiple First Affiliated Hospital of Xinjiang aspects that may well-react or respond to musical stimuli. Although both ECG and Medical University, China HRV continue to feature extensively in research in health and perception, methodologies Reviewed by: Ming-Jie Wang, vary substantially. -

A Escolha De Software E Hardware Na Psicolinguística: Revisão E Opinião

Revista de Estudos da Linguagem, Belo Horizonte, v.25, n.3, p. 971-1010, 2017 A escolha de software e hardware na psicolinguística: revisão e opinião The choice of software and hardware in psycholinguistics: review and opinion Thiago Oliveira da Motta Sampaio Language Acquisition, Processing and Syntax Lab - LAPROS Universidade Estadual de Campinas, Campinas, São Paulo / Brasil [email protected] Resumo: Nos últimos anos, diversos softwares foram criados para auxiliar a elaboração de experimentos em ciências cognitivas. A oferta de softwares de simples utilização deveria facilitar o trabalho dos iniciantes, porém, acabou trazendo novos problemas e dúvidas. Que software usar? Qual deles é o mais adequado ao meu estudo e por quê? Através de uma revisão sobre computação, linguagem de programação e técnicas de apresentação de estímulos visuais,1 este artigo pretende fomentar a discussão a respeito (i) dos diversos tipos de softwares para estimulação, (ii) da importância de conhecer os detalhes técnicos do hardware utilizado e (iii) da compatibilização hardware-software-método como uma variável a ser controlada durante o desenvolvimento do protocolo experimental. Palavras-chave: psicolinguística; ciências cognitivas; linguagens de programação; métodos. Abstract: In the last few years, several softwares have been designed to help the development of experiments in cognitive sciences. The offer of user friendly software would help beginners in their first tests. However, it brought new problems and questions. Which software should one use? Which one is more adequate for my research and why? The present paper brings a quick and panoramic review on computer science, programming languages and on the presentation of visual stimuli.