IBM AIX Version 6.1 Differences Guide

Recommended publications

IBM iSeries Universal Connection for Electronic Support and Services

IBM iSeries Universal Connection for Electronic Support and Services. Explains the supported functions with Universal Connection. Shows how to install, tailor, and configure Universal Connection. Helps you determine communications problems. Masahiko Hamada, Michael S Alexander, Makoto Kikuchi. ibm.com/redbooks. International Technical Support Organization, SG24-6224-00. iSeries Universal Connection for Electronic Support and Services, August 2001. Take Note! Before using this information and the product it supports, be sure to read the general information in Appendix A, “Special notices” on page 209. First Edition (August 2001). This edition applies to Version 5 Release 1 of OS/400. Comments may be addressed to: IBM Corporation, International Technical Support Organization, Dept. JLU Building 107-2, 3605 Highway 52N, Rochester, Minnesota 55901-7829. When you send information to IBM, you grant IBM a non-exclusive right to use or distribute the information in any way it believes appropriate without incurring any obligation to you. © Copyright International Business Machines Corporation 2001. All rights reserved. Note to U.S. Government Users - Documentation related to restricted rights - Use, duplication or disclosure is subject to restrictions set forth in GSA ADP Schedule Contract with IBM Corp. Contents: Preface; The team that wrote this redbook; Comments welcome; Chapter 1. Extreme Support Personalized (ESP); 1.1 Introduction to ESP; 1.1.1 Customer Care Advantage; 1.1.2 ESP approach; 1.1.3 Benefits for customers; 1.2 Available applications in electronic support; 1.2.1 Electronic Customer Support (ECS) connection; 1.2.2 Service Agent; 1.2.3 Consolidated inventory collection using Management Central

Debugging with GDB

Debugging with GDB: The GNU Source-Level Debugger. HP Seventeenth Edition, for GDB, January 2007. Richard Stallman, Roland Pesch, Stan Shebs, et al. (Send bugs and comments on GDB to [email protected] with copy to [email protected]) Debugging with GDB. TEXinfo 2003-02-03.16. Copyright © 2007 Free Software Foundation, Inc. Published by the Free Software Foundation, 59 Temple Place - Suite 330, Boston, MA 02111-1307 USA. ISBN 1-882114-77-9. Permission is granted to make and distribute verbatim copies of this manual provided the copyright notice and this permission notice are preserved on all copies. Permission is granted to copy and distribute modified versions of this manual under the conditions for verbatim copying, provided also that the entire resulting derived work is distributed under the terms of a permission notice identical to this one. Permission is granted to copy and distribute translations of this manual into another language, under the above conditions for modified versions. Table of Contents: Summary of GDB; Free software; Contributors to GDB; 1 A Sample GDB Session; 1.1 Loading the Executable; 1.2 Setting Display width; 1.3 Setting Breakpoints; 1.4 Running the executable under GDB; 1.5 Stepping to the next line in the source program; 1.6 Stepping into a subroutine; 1.7 Examining the Stack; 1.8 Printing Variable Values; 1.9 Listing Source Code; 1.10 Setting Variable Values During a Session; 2 Getting In and Out of GDB

Portable Executable File Format

Chapter 11: Portable Executable File Format. In this chapter: understanding the structure of a PE file; talking in terms of RVAs; detailing the PE format; the importance of indices in the data directory; how the loader interprets a PE file. Microsoft introduced a new executable file format with Windows NT. This format is called the Portable Executable (PE) format because it is supposed to be portable across all 32-bit operating systems by Microsoft. The same PE format executable can be executed on any version of Windows NT, Windows 95, and Win32s. Also, the same format is used for executables for Windows NT running on processors other than Intel x86, such as MIPS, Alpha, and Power PC. The 32-bit DLLs and Windows NT device drivers also follow the same PE format. It is helpful to understand the PE file format because PE files are almost identical on disk and in RAM. Learning about the PE format is also helpful for understanding many operating system concepts, for example, how the operating system loader works to support dynamic linking of DLL functions, and the data structures involved in dynamic linking such as the import table, export table, and so on. The PE format is not really undocumented. The WINNT.H file has several structure definitions representing the PE format. The Microsoft Developer's Network (MSDN) CD-ROMs contain several descriptions of the PE format. However, these descriptions are in bits and pieces, and are by no means complete. In this chapter, we try to give you a comprehensive picture of the PE format.

IBM Power® Systems for SAS® Empowers Advanced Analytics Harry Seifert, Laurent Montaron, IBM Corporation

Paper 4695-2020. IBM Power® Systems for SAS® Empowers Advanced Analytics. Harry Seifert, Laurent Montaron, IBM Corporation. Abstract: Over 40+ years of partnership between IBM and SAS®, clients have been benefiting from the added value brought by IBM’s infrastructure platforms to deploy SAS analytics, and now SAS Viya’s evolution of modern analytics. IBM Power® Systems and IBM Storage empower SAS environments with infrastructure that does not make tradeoffs among performance, cost, and reliability. The unified solution stack, comprising server, storage, and services, reduces the compute time, controls costs, and maximizes resilience of the SAS environment with ultra-high bandwidth and highest availability. Introduction: We will explore how to deploy SAS on IBM Power Systems platforms and unleash the full potential of the infrastructure, to reduce deployment risk, maximize flexibility and accelerate insights. We will start by reviewing IBM and SAS’s technology relationship and the current state of SAS products on IBM Power Systems. Then we will look at some of the infrastructure options to deploy SAS 9.4 on IBM Power Systems and IBM Storage, while maximizing resiliency & throughput by leveraging best practices. Next, we will look at SAS Viya, which introduces changes to the underlying infrastructure requirements while remaining able to be deployed alongside a traditional SAS 9.4 operation. We’ll explore the various deployment modes available. Finally, we’ll look at tuning practices and reference materials available for a deeper dive in deploying SAS on IBM platforms. SAS: 40 years of partnership with IBM. IBM and SAS have been partners since the founding of SAS.

POWER® Processor-Based Systems

IBM® Power® Systems RAS: Introduction to IBM® Power® Reliability, Availability, and Serviceability for POWER9® processor-based systems using IBM PowerVM™, with updates covering the latest 4+ socket Power10 processor-based systems. IBM Systems Group. Daniel Henderson, Irving Baysah. Trademarks, Copyrights, Notices and Acknowledgements. Trademarks: IBM, the IBM logo, and ibm.com are trademarks or registered trademarks of International Business Machines Corporation in the United States, other countries, or both. These and other IBM trademarked terms are marked on their first occurrence in this information with the appropriate symbol (® or ™), indicating US registered or common law trademarks owned by IBM at the time this information was published. Such trademarks may also be registered or common law trademarks in other countries. A current list of IBM trademarks is available on the Web at http://www.ibm.com/legal/copytrade.shtml The following terms are trademarks of the International Business Machines Corporation in the United States, other countries, or both: Active Memory™, AIX®, POWER®, POWER Hypervisor™, Power Systems™, Power Systems Software™, Power®, POWER6®, POWER7®, POWER7+™, POWER8™, PowerHA®, PowerLinux™, PowerVM®, PowerVC™, Power Architecture™, System x®, System z®. Additional trademarks may be identified in the body of this document. Other company, product, or service names may be trademarks or service marks of others. Notices: The last page of this document contains copyright information, important notices, and other information. Acknowledgements: While this whitepaper has two principal authors/editors, it is the culmination of the work of a number of different subject matter experts within IBM who contributed ideas, detailed technical information, and the occasional photograph and section of description.

Raiffeisenbank Speeds Data Warehouse, Cuts Costs with Red Hat Enterprise Linux

Customer case study: Raiffeisenbank speeds data warehouse, cuts costs with Red Hat Enterprise Linux. Raiffeisenbank, a banking institution that provides a wide range of services to private and corporate clients in the Czech Republic, needed to replace the aging hardware and IBM AIX operating system that supported its data warehouse. By migrating to Red Hat Enterprise Linux running on cost-effective Hitachi servers with Intel processors, the bank has tripled system performance speed and maintained stability while cutting total cost of ownership (TCO) by 50%. Software and services: Red Hat® Enterprise Linux®. Hardware: Hitachi Unified Compute Platform for Oracle Database; Hitachi Compute Blade 2500 (CB 2500); Hitachi Virtual Storage Platform G600 (VSP G600). Headquarters: Prague, Czech Republic. Industry: financial services. 3,000 employees. 120 branches. Partner: MHM computer a.s. Benefits: achieved three times faster system performance; anticipates 50% decrease in total cost of ownership over five years; gained greater flexibility by eliminating vendor lock-in. “There are many benefits to using Red Hat and Oracle solutions together, and also from moving from IBM to Intel. We feel a combination of Red Hat and Oracle on an Intel platform is a preferred solution for any company.” Jiří Koutník, Head of System Administration, Raiffeisenbank. Aging UNIX system too slow for modern business: Raiffeisenbank a.s. provides a wide range of banking services to private and corporate clients in the Czech Republic at more than 120 branches and business client centers. The bank offers corporate and personal finance products and services related to savings, insurance, and leasing, including specialized mortgage centers and business advisors.

GSI Local Guide

UNIX Primer. GSI Local Guide. GSI Computing Center. Version 2.0. This is draft version !!! Preface: More than one year ago, we published our first version of the Unix primer, which has been used in the meantime by many people at GSI and even in the outside HEP community. Nowadays, as more and more physicists have access to a Unix computer either via an X-terminal or use their own workstation, and as the installed computing power has increased by a large factor, we have revised the first version of our Unix primer. We tried to reflect the changes in the installed hardware, like the installation of the 11 machine AIX cluster, and the installation of new software products, as the batch system for job submission, new backup and restore products and the graphics system IDL. Almost all chapters have been revised, and some have undergone substantial changes like the introduction, the section about experimental data and tape handling and the chapter about the editors, where more editors are described in detail. Although many topics are still missing or could be improved, we decided to publish the second edition of the Unix primer now in order to give a guide to the rapidly increasing Unix user community at GSI. As for the first edition, many people again have contributed to this document: Wolfgang Ahner, Eliete Bertulani, Michael Dahlinger, Matthias Feyerabend, Ingo Giese, Horst Göringer, Eva Hocks, Peter Malzacher, Udo Meyer, Kerstin Schiebel, Kay Winkler and Heiko Weber. Preface for Version 1.0: In early summer 1991 the GSI Computing Center started a Unix Pilot Project investigating the hardware and software possibilities of centrally operated Unix workstation systems.

IBM PowerVP Introduction and Technical Overview

IBM PowerVP Introduction and Technical Overview. Liviu Rosca, Stefan Stefanov. Redpaper. International Technical Support Organization. IBM PowerVP: Introduction and Technical Overview, August 2015, REDP-5112-01. Note: Before using this information and the product it supports, read the information in “Notices” on page v. Second Edition (August 2015). This edition applies to Version 1, Release 1, Modification 3 of PowerVP Standard Edition (product number 5765-SLE). This document was created or updated on August 14, 2015. © Copyright International Business Machines Corporation 2014, 2015. All rights reserved. Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents: Notices; Trademarks; IBM Redbooks promotions; Preface; Authors; Now you can become a published author, too!; Comments welcome; Stay connected to IBM Redbooks; Chapter 1. Introduction; 1.1 PowerVP overview; 1.2 PowerVP features and benefits; 1.3 PowerVP architecture; Chapter 2. Planning and installation; 2.1 Planning for PowerVP; 2.1.1 PowerVP security considerations; 2.2 PowerVP installation; 2.2.1 PowerVP installation overview; 2.2.2 PowerVP installer on Microsoft Windows systems; 2.2.3 Installing PowerVP GUI on AIX or Linux; 2.2.4 Installing PowerVP agent manually on IBM i; 2.2.5 Installing PowerVP agent on AIX and the VIOS; 2.2.6 Installing PowerVP agent on Linux; 2.3 Starting and stopping PowerVP; 2.4 Upgrading PowerVP agents; 2.5 PowerVP SSL configuration

Programming the Cell Broadband Engine Examples and Best Practices

Draft Document for Review February 15, 2008 4:59 pm. SG24-7575-00. Programming the Cell Broadband Engine: Examples and Best Practices. Practical code development and porting examples included. Make the most of SDK 3.0 debug and performance tools. Understand and apply different programming models and strategies. Abraham Arevalo, Ricardo M. Matinata, Maharaja Pandian, Eitan Peri, Kurtis Ruby, Francois Thomas, Chris Almond. ibm.com/redbooks. International Technical Support Organization. Programming the Cell Broadband Engine: Examples and Best Practices, December 2007, SG24-7575-00. Note: Before using this information and the product it supports, read the information in “Notices” on page xvii. First Edition (December 2007). This edition applies to Version 3.0 of the IBM Cell Broadband Engine SDK, and the IBM BladeCenter QS-21 platform. © Copyright International Business Machines Corporation 2007. All rights reserved. Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents: Preface; The team that wrote this book; Acknowledgements; Become a published author; Comments welcome; Notices; Trademarks; Part 1. Introduction to the Cell Broadband Engine; Chapter 1. Cell Broadband Engine Overview; 1.1 Motivation; 1.2 Scaling the three performance-limiting walls; 1.2.1 Scaling the power-limitation wall; 1.2.2 Scaling the memory-limitation wall

Redbooks Paper Bringing PHP to Your IBM iSeries

Redbooks Paper. David Larson, Bryan Logan, Tim Massaro, Heiko Mueller, Brian R. Smith. Bringing PHP to Your IBM iSeries Server. Hypertext Preprocessor (PHP) is a powerful server-side scripting language for the Apache Web server. PHP is popular for its ability to process database information and create dynamic Web pages. Server-side refers to the fact that PHP language statements, which are included directly in your HTML, are processed by the Web server. Scripting language means that PHP is not compiled. Since the result of processing PHP language statements is standard HTML, PHP-generated Web pages are quick to display and are compatible with almost all Web browsers and platforms. PHP is for the open source Apache community as Net.Data® is for the IBM® community. To “run” PHP scripts with your HTTP Server (powered by Apache), a PHP engine is required on your IBM eServer™ iSeries™ server. The PHP engine is an open source product, so this IBM Redpaper demonstrates the process to download, compile, install, and then configure PHP on your iSeries. It explains how to install version 4.3.0 and the older version 4.2.2 of PHP. The PHP engine is available both as an Apache module and a CGI-BIN. Support for PHP as a module is not yet available for OS/400®. The step-by-step implementation discussed in this Redpaper involves the CGI version of PHP running in OS/400 Portable Application Solutions Environment (PASE). This allows you to run AIX® binaries directly on an iSeries. It includes the necessary patches for the minor modifications needed to the PHP source code.

CPE 323 Introduction to Software Reverse Engineering in Embedded Computer Systems

CPE 323: Introduction to Software Reverse Engineering in Embedded Computer Systems. Aleksandar Milenković. Email: [email protected] Web: http://www.ece.uah.edu/~milenka Objective: Introduce tools and methods for software reverse engineering in embedded systems. Contents: 1 Introduction; 2 Format of Executable Files; 3 GNU Utilities; 4 Deconstructing Executable Files: An Example; 5 Working with HEX Files and MSP430Flasher Utility; 6 To Learn More. 1 Introduction: In this section we will introduce basic concepts, tools, and techniques for software reverse engineering with a special emphasis on embedded computer systems. Reverse engineering in general is a process of deconstructing man-made artifacts with a goal to reveal their designs and architecture ...

IBM Communications Server

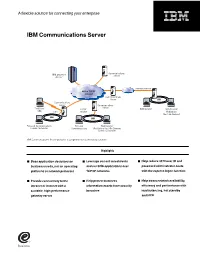

A flexible solution for connecting your enterprise: IBM Communications Server

[Figure: IBM Communications Server provides a comprehensive connectivity solution.]

Highlights
■ Base application decisions on business needs, not on operating platforms or network protocols
■ Leverage current investments and run SNA applications over TCP/IP networks
■ Help reduce 3270 user ID and password administrator costs with the express logon function
■ Provide connectivity to the intranet or Internet with a scalable, high-performance gateway server
■ Help protect business information assets from security breaches
■ Help boost network availability, efficiency and performance with load balancing, hot standby and HPR

As your business needs grow, chances are, so will your network. Explosive growth and constant change in the information management and delivery arena call for versatile technologies to meet your company’s growing needs. IBM Communications Server meets the challenge, offering a comprehensive package of enterprise networking solutions to effectively connect your employees, [...] place, while making the transition at your own pace. IBM Communications Server can connect your employees, customers and trading partners to the information and applications they need, independent of the underlying network. Designed to meet your company’s growing e-business needs, IBM Communications Server provides fast, efficient ways to extend data to Web users. [...] applications over existing SNA networks without adding a separate TCP/IP network. And you can extend SNA applications to TCP/IP users without the addition of a separate SNA network.

Reliability and flexibility: IBM Communications Server also works as a Telnet server, providing [...]