On Effective Personalized Music Retrieval by Exploring Online User Behaviors

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Excesss Karaoke Master by Artist

XS Master by ARTIST Artist Song Title Artist Song Title (hed) Planet Earth Bartender TOOTIMETOOTIMETOOTIM ? & The Mysterians 96 Tears E 10 Years Beautiful UGH! Wasteland 1999 Man United Squad Lift It High (All About 10,000 Maniacs Candy Everybody Wants Belief) More Than This 2 Chainz Bigger Than You (feat. Drake & Quavo) [clean] Trouble Me I'm Different 100 Proof Aged In Soul Somebody's Been Sleeping I'm Different (explicit) 10cc Donna 2 Chainz & Chris Brown Countdown Dreadlock Holiday 2 Chainz & Kendrick Fuckin' Problems I'm Mandy Fly Me Lamar I'm Not In Love 2 Chainz & Pharrell Feds Watching (explicit) Rubber Bullets 2 Chainz feat Drake No Lie (explicit) Things We Do For Love, 2 Chainz feat Kanye West Birthday Song (explicit) The 2 Evisa Oh La La La Wall Street Shuffle 2 Live Crew Do Wah Diddy Diddy 112 Dance With Me Me So Horny It's Over Now We Want Some Pussy Peaches & Cream 2 Pac California Love U Already Know Changes 112 feat Mase Puff Daddy Only You & Notorious B.I.G. Dear Mama 12 Gauge Dunkie Butt I Get Around 12 Stones We Are One Thugz Mansion 1910 Fruitgum Co. Simon Says Until The End Of Time 1975, The Chocolate 2 Pistols & Ray J You Know Me City, The 2 Pistols & T-Pain & Tay She Got It Dizm Girls (clean) 2 Unlimited No Limits If You're Too Shy (Let Me Know) 20 Fingers Short Dick Man If You're Too Shy (Let Me 21 Savage & Offset &Metro Ghostface Killers Know) Boomin & Travis Scott It's Not Living (If It's Not 21st Century Girls 21st Century Girls With You 2am Club Too Fucked Up To Call It's Not Living (If It's Not 2AM Club Not -

Song of the Year

General Field Page 1 of 15 Category 3 - Song Of The Year 015. AMAZING 031. AYO TECHNOLOGY Category 3 Seal, songwriter (Seal) N. Hills, Curtis Jackson, Timothy Song Of The Year 016. AMBITIONS Mosley & Justin Timberlake, A Songwriter(s) Award. A song is eligible if it was Rudy Roopchan, songwriter songwriters (50 Cent Featuring Justin first released or if it first achieved prominence (Sunchasers) Timberlake & Timbaland) during the Eligibility Year. (Artist names appear in parentheses.) Singles or Tracks only. 017. AMERICAN ANTHEM 032. BABY Angie Stone & Charles Tatum, 001. THE ACTRESS Gene Scheer, songwriter (Norah Jones) songwriters; Curtis Mayfield & K. Tiffany Petrossi, songwriter (Tiffany 018. AMNESIA Norton, songwriters (Angie Stone Petrossi) Brian Lapin, Mozella & Shelly Peiken, Featuring Betty Wright) 002. AFTER HOURS songwriters (Mozella) Dennis Bell, Julia Garrison, Kim 019. AND THE RAIN 033. BACK IN JUNE José Promis, songwriter (José Promis) Outerbridge & Victor Sanchez, Buck Aaron Thomas & Gary Wayne songwriters (Infinite Embrace Zaiontz, songwriters (Jokers Wild 034. BACK IN YOUR HEAD Featuring Casey Benjamin) Band) Sara Quin & Tegan Quin, songwriters (Tegan And Sara) 003. AFTER YOU 020. ANDUHYAUN Dick Wagner, songwriter (Wensday) Jimmy Lee Young, songwriter (Jimmy 035. BARTENDER Akon Thiam & T-Pain, songwriters 004. AGAIN & AGAIN Lee Young) (T-Pain Featuring Akon) Inara George & Greg Kurstin, 021. ANGEL songwriters (The Bird And The Bee) Chris Cartier, songwriter (Chris 036. BE GOOD OR BE GONE Fionn Regan, songwriter (Fionn 005. AIN'T NO TIME Cartier) Regan) Grace Potter, songwriter (Grace Potter 022. ANGEL & The Nocturnals) Chaka Khan & James Q. Wright, 037. BE GOOD TO ME Kara DioGuardi, Niclas Molinder & 006. -

Words You Should Know How to Spell by Jane Mallison.Pdf

WO defammasiont priveledgei Spell it rigHt—everY tiMe! arrouse hexagonnalOver saicred r 12,000 Ceilling. Beleive. Scissers. Do you have trouble of the most DS HOW DS HOW spelling everyday words? Is your spell check on overdrive? MiSo S Well, this easy-to-use dictionary is just what you need! acheevei trajectarypelled machinry Organized with speed and convenience in mind, it gives WordS! you instant access to the correct spellings of more than 12,500 words. YOUextrac t grimey readallyi Also provided are quick tips and memory tricks, such as: SHOUlD KNOW • Help yourself get the spelling of their right by thinking of the phrase “their heirlooms.” • Most words ending in a “seed” sound are spelled “-cede” or “-ceed,” but one word ends in “-sede.” You could say the rule for spelling this word supersedes the other rules. Words t No matter what you’re working on, you can be confident You Should Know that your good writing won’t be marred by bad spelling. O S Words You Should Know How to Spell takes away the guesswork and helps you make a good impression! PELL hoW to spell David Hatcher, MA has taught communication skills for three universities and more than twenty government and private-industry clients. He has An A to Z Guide to Perfect SPellinG written and cowritten several books on writing, vocabulary, proofreading, editing, and related subjects. He lives in Winston-Salem, NC. Jane Mallison, MA teaches at Trinity School in New York City. The author bou tique swaveu g narl fabulus or coauthor of several books, she worked for many years with the writing section of the SAT test and continues to work with the AP English examination. -

Eyez on Me 2PAC – Changes 2PAC - Dear Mama 2PAC - I Ain't Mad at Cha 2PAC Feat

2 UNLIMITED- No Limit 2PAC - All Eyez On Me 2PAC – Changes 2PAC - Dear Mama 2PAC - I Ain't Mad At Cha 2PAC Feat. Dr DRE & ROGER TROUTMAN - California Love 311 - Amber 311 - Beautiful Disaster 311 - Down 3 DOORS DOWN - Away From The Sun 3 DOORS DOWN – Be Like That 3 DOORS DOWN - Behind Those Eyes 3 DOORS DOWN - Dangerous Game 3 DOORS DOWN – Duck An Run 3 DOORS DOWN – Here By Me 3 DOORS DOWN - Here Without You 3 DOORS DOWN - Kryptonite 3 DOORS DOWN - Landing In London 3 DOORS DOWN – Let Me Go 3 DOORS DOWN - Live For Today 3 DOORS DOWN – Loser 3 DOORS DOWN – So I Need You 3 DOORS DOWN – The Better Life 3 DOORS DOWN – The Road I'm On 3 DOORS DOWN - When I'm Gone 4 NON BLONDES - Spaceman 4 NON BLONDES - What's Up 4 NON BLONDES - What's Up ( Acoustative Version ) 4 THE CAUSE - Ain't No Sunshine 4 THE CAUSE - Stand By Me 5 SECONDS OF SUMMER - Amnesia 5 SECONDS OF SUMMER - Don't Stop 5 SECONDS OF SUMMER – Good Girls 5 SECONDS OF SUMMER - Jet Black Heart 5 SECONDS OF SUMMER – Lie To Me 5 SECONDS OF SUMMER - She Looks So Perfect 5 SECONDS OF SUMMER - Teeth 5 SECONDS OF SUMMER - What I Like About You 5 SECONDS OF SUMMER - Youngblood 10CC - Donna 10CC - Dreadlock Holiday 10CC - I'm Mandy ( Fly Me ) 10CC - I'm Mandy Fly Me 10CC - I'm Not In Love 10CC - Life Is A Minestrone 10CC - Rubber Bullets 10CC - The Things We Do For Love 10CC - The Wall Street Shuffle 30 SECONDS TO MARS - Closer To The Edge 30 SECONDS TO MARS - From Yesterday 30 SECONDS TO MARS - Kings and Queens 30 SECONDS TO MARS - Teeth 30 SECONDS TO MARS - The Kill (Bury Me) 30 SECONDS TO MARS - Up In The Air 30 SECONDS TO MARS - Walk On Water 50 CENT - Candy Shop 50 CENT - Disco Inferno 50 CENT - In Da Club 50 CENT - Just A Lil' Bit 50 CENT - Wanksta 50 CENT Feat. -

Alternative 2020

Mediabase Charts Alternative 2020 Published (U.S.) -- Currents & Recurrents, January 2020 through December 2020

| Rank | Artist | Title |
|---|---|---|
| 1 | TWENTY ONE PILOTS | Level Of Concern |
| 2 | BILLIE EILISH | everything i wanted |
| 3 | AJR | Bang! |
| 4 | TAME IMPALA | Lost In Yesterday |
| 5 | MATT MAESON | Hallucinogenics |
| 6 | ALL TIME LOW | Monsters f/blackbear |
| 7 | ABSOFACTO | Dissolve |
| 8 | POWFU | Coffee For Your Head |
| 9 | SHAED | Trampoline |
| 10 | UNLIKELY CANDIDATES | Novocaine |
| 11 | CAGE THE ELEPHANT | Black Madonna |
| 12 | MACHINE GUN KELLY | Bloody Valentine |
| 13 | STROKES | Bad Decisions |
| 14 | MEG MYERS | Running Up That Hill |
| 15 | HEAD AND THE HEART | Honeybee |
| 16 | PANIC! AT THE DISCO | High Hopes |
| 17 | KILLERS | Caution |
| 18 | WEEZER | Hero |
| 19 | TWENTY ONE PILOTS | The Hype |
| 20 | WALLOWS | Are You Bored Yet? |
| 21 | LOVELYTHEBAND | Broken |
| 22 | DAYGLOW | Can I Call You Tonight? |
| 23 | GROUPLOVE | Deleter |
| 24 | SUB URBAN | Cradles |
| 25 | NEON TREES | Used To Like |
| 26 | CAGE THE ELEPHANT | Social Cues |
| 27 | WHITE REAPER | Might Be Right |
| 28 | BLACK KEYS | Shine A Little Light |
| 29 | LUMINEERS | Life In The City |
| 30 | LANA DEL REY | Doin' Time |
| 31 | GREEN DAY | Oh Yeah! |
| 32 | MARSHMELLO | Happier f/Bastille |
| 33 | AWOLNATION | The Best |
| 34 | LOVELYTHEBAND | Loneliness For Love |
| 35 | KENNYHOOPLA | How Will I Rest In Peace If... |
| 36 | BAKAR | Hell N Back |
| 37 | BLUE OCTOBER | Oh My My |
| 38 | KILLERS | My Own Soul's Warning |
| 39 | GLASS ANIMALS | Your Love (Deja Vu) |
| 40 | BILLIE EILISH | bad guy |
| 41 | MATT MAESON | Cringe |
| 42 | MAJOR LAZER F/MARCUS | Lay Your Head On Me |
| 43 | PEACH TREE RASCALS | Mariposa |
| 44 | IMAGINE DRAGONS | Natural |
| 45 | ASHE | Moral Of The Story f/Niall |
| 46 | DOMINIC FIKE | 3 Nights |
| 47 | I DONT KNOW HOW BUT THEY.. | |

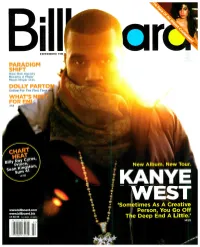

'Sometimes As a Creative

EXPERIENCE THE PARADIGM SHIFT How One Agency Became A Major Music Player >P.25 DOLLY PARTON Online For The First Time WHAT'S FOR >P.8 New Album. New Tour. KANYE WEST 'Sometimes As A Creative Person, You Go Off The Deep End A Little.' >P.22 www.billboard.com www.billboard.biz US $6.99 CAN $8.99 UK £5.50 THE YEAR MARK RONSON VERSION THE CRITICS ARE RAVING! "HAVING PRODUCED AMY WINEHOUSE AND LILY ALLEN, MARK RONSON IS ON A REAL ROLL IN 2007. FEATURING GUEST VOCALISTS LIKE ALLEN, WINEHOUSE AND ROBBIE WILLIAMS, THE WHOLE THING PLAYS LIKE THE ULTIMATE HIPSTER PARTY MIXTAPE. BEST OF ALL? 'STOP ME,' THE MOST SOULFUL RAVE SINCE GNARLS BARKLEY'S 'CRAZY.'" PEOPLE MAGAZINE "RONSON JOYOUSLY TWISTS POPULAR TUNES BY EVERYONE FROM RA TO COLDPLAY TO BRITNEY SPEARS, AND - WHAT DO YOU KNOW! - IT TURNS OUT TO BE THE MONSTER JAM OF THE SEASON! REGARDLESS OF WHO'S ON THE MIC, VERSION SUCCEEDS. GRADE A" ENTERTAINMENT WEEKLY "THE EMERGING RONSON SOUND IS MOTOWN MEETS HIP-HOP MEETS RETRO BRIT-POP. IN BRITAIN, 'STOP ME,' THE COVER OF THE SMITHS' 'STOP ME IF YOU THINK YOU'VE HEARD THIS ONE BEFORE,' IS #1 AND THE ALBUM SOARED TO #2! COULD THIS ROCK STAR DJ ACTUALLY BECOME A ROCK STAR?" NEW YORK TIMES "RONSON UNITES TWO ANTITHETICAL WORLDS - RECENT AND CLASSIC BRITPOP WITH VINTAGE AMERICAN R&B. LILY ALLEN, AMY WINEHOUSE, ROBBIE WILLIAMS COVER KAISER CHIEFS, COLDPLAY, AND THE SMITHS OVER BLARING HORNS, AND ORGANIC BEATS. SHARP ARRANGING SKILLS AND SUITABLY ANGULAR PERFORMANCES! * * * *" SPIN THE SOUNDTRACK TO YOUR SUMMER! THE . -

500 Drugstore Feat. Thom Yorke El President 499 Blink

500 DRUGSTORE FEAT. EL PRESIDENT THOM YORKE 499 BLINK 182 ADAMS SONG 498 SCREAMING TREES NEARLY LOST YOU 497 LEFTFIELD OPEN UP 496 SHIHAD BEAUTIFUL MACHINE 495 TEGAN AND SARAH WALKING WITH A GHOST 494 BOMB THE BASS BUG POWDER DUST 493 BAND OF HORSES IS THERE A GHOST 492 BLUR BEETLEBUM 491 DISPOSABLE HEROES TELEVISION THE DRUG OF THE OF... NATION 490 FOO FIGHTERS WALK 489 AIR SEXY BOY 488 IGGY POP CANDY 487 NINE INCH NAILS THE HAND THAT FEEDS 486 PEARL JAM NOT FOR YOU 485 RADIOHEAD EVERYTHING IN ITS RIGHT PLACE 484 RANCID TIME BOMB 483 RED HOT CHILI MY FRIENDS PEPPERS 482 SANTIGOLD LES ARTISTES 481 SMASHING PUMPKINS AVA ADORE 480 THE BIG PINK DOMINOS 479 THE STROKES REPTILIA 478 THE PIXIES VELOURIA 477 BON IVER SKINNY LOVE 476 ANIMAL COLLECTIVE MY GIRLS 475 FILTER HEY MAN NICE SHOT 474 BRAD 20TH CENTURY 473 INTERPOL SLOW HANDS 472 MAD SEASON SLIP AWAY 471 OASIS SOME MIGHT SAY 470 SLEIGH BELLS RILL RILL 469 THE AFGHAN WHIGS 66 468 THE FLAMING LIPS FIGHT TEST 467 ALICE IN CHAINS MAN IN THE BOX 466 FAITH NO MORE A SMALL VICTORY 465 THE THE DOGS OF LUST 464 ARCTIC MONKEYS R U MINE 463 BECK DEADWEIGHT 462 GARBAGE MILK 461 BEN FOLDS FIVE BRICK 460 NIRVANA PENNYROYAL TEA 459 SHIHAD YR HEAD IS A ROCK 458 SNEAKER PIMPS 6 UNDERGROUND 457 SOUNDGARDEN BURDEN IN MY HAND 456 EMINEM LOSE YOURSELF 455 SUPERGRASS RICHARD III 454 UNDERWORLD PUSH UPSTAIRS 453 ARCADE FIRE REBELLION (Lies) 452 RADIOHEAD BLACK STAR 451 BIG DATA DANGEROUS 450 BRAN VAN 3000 DRINKING IN LA 449 FIONA APPLE CRIMINAL 448 KINGS OF LEON USE SOMEBODY 447 PEARL JAM GETAWAY 446 BEASTIE -

Eltot 1350.Pdf

The Soldats de la Pau (Soldiers of Peace) seek recognition at the Vatican. Cruïlla does work in Barcelona, with 6,000 people (totcultura). Cirera heads into the second weekend of its Festa Popular (totcultura). Mataró start with a defeat and by "walking off" over the referee (eltotesport). The Diada arrives next week with the usual events (tot ciutat). · INTERVIEW WITH MOSSÈN CUSSÓ · JAZZ NIGHTS IN THE OPEN AIR · MORE FESTIVALS IN NEIGHBOURHOODS AND STREETS · VÍCTOR FLORES LEAVES QUADIS · NEW THEATRE SEASON. Horoscopes, cinemas, television, cultural guide, opinion columns, saints' days, pharmacies, the old photo, El Tot front pages, exhibitions, sudoku, images, classifieds, ... Offices and Editorial: Carrer d'en Xammar, 11, 08301 Mataró. Publisher: EL TOT MATARÓ S.L. Tel.: 93 796 16 42. Director: Antoni Rodríguez. Administration: Maria Carme Rodríguez. Fax: 93 755 41 06 | OFFICE: Paquita Cruz and Carme Fabrés (customer service), Joan Guillén (design) and Ma. Fernanda Marañón. www.totmataro.cat EDITORIAL STAFF: Jordi Aliberas (design), Laura Arias, Eloi Sivilla, Cugat Comas (coordination) and Xènia Sola (photography). www.portalmataro.com | LEGAL DEPOSIT: B-18.356-1981 | Printed by: iGRÀFIC | Circulation audited by the Oficina de la Justificació de la Difusió: 35,000 copies | [email protected] | Free distribution in Mataró, Argentona, Vilassar, Premià, Arenys, Caldes d'Estrac, Llavaneres, Canet. | [email protected] The editorial staff of EL TOT MATARÓ does not necessarily take responsibility for the content of the articles, opinions and commercial messages included in the magazine. [email protected] ...and in Granollers: EL TOT GRANOLLERS I VALLÈS ORIENTAL, c/ Roger de Flor, 71 3r. Tel. -

Song Title Artist Genre

Song Title Artist Genre - General The A Team Ed Sheeran Pop A-Punk Vampire Weekend Rock A-Team TV Theme Songs Oldies A-YO Lady Gaga Pop A.D.I./Horror of it All Anthrax Hard Rock & Metal A** Back Home (feat. Neon Hitch) (Clean)Gym Class Heroes Rock Abba Megamix Abba Pop ABC Jackson 5 Oldies ABC (Extended Club Mix) Jackson 5 Pop Abigail King Diamond Hard Rock & Metal Abilene Bobby Bare Slow Country Abilene George Hamilton Iv Oldies About A Girl The Academy Is... Punk Rock About A Girl Nirvana Classic Rock About the Romance Inner Circle Reggae About Us Brooke Hogan & Paul Wall Hip Hop/Rap About You Zoe Girl Christian Above All Michael W. Smith Christian Above the Clouds Amber Techno Above the Clouds Lifescapes Classical Abracadabra Steve Miller Band Classic Rock Abracadabra Sugar Ray Rock Abraham, Martin, And John Dion Oldies Abrazame Luis Miguel Latin Abriendo Puertas Gloria Estefan Latin Absolutely ( Story Of A Girl ) Nine Days Rock AC-DC Hokey Pokey Jim Bruer Clip Academy Flight Song The Transplants Rock Acapulco Nights G.B. Leighton Rock Accident's Will Happen Elvis Costello Classic Rock Accidentally In Love Counting Crows Rock Accidents Will Happen Elvis Costello Classic Rock Accordian Man Waltz Frankie Yankovic Polka Accordian Polka Lawrence Welk Polka According To You Orianthi Rock Ace of spades Motorhead Classic Rock Aces High Iron Maiden Classic Rock Achy Breaky Heart Billy Ray Cyrus Country Acid Bill Hicks Clip Acid trip Rob Zombie Hard Rock & Metal Across The Nation Union Underground Hard Rock & Metal Across The Universe Beatles -

Spinner.Com - Free Mp3s, Interviews, Music News, Live Performances, Songs and Videos 09/19/2007 09:12 AM

Gypsy Caravan on the Long and Bending Road. Posted Jun 5th 2007 11:00AM by Steve Hochman. Filed under: Around the World. Halfway through the new film 'Gypsy Caravan: When the Road Bends,' which documents a 2001 North American tour that brought together musicians from across the Euro-Asian Gypsy world (or, to use the preferred term, Roma), one of the participants makes a startling statement. A member of the band Fanfare Ciocarlia, known for its frenzied swirls of brass sounds, recalled the first time the group played outside its native Romania in the early '90s following the downfall of dictator Nicolae Ceausescu. -

Making Music Radio

MAKING MUSIC RADIO: THE RECORD INDUSTRY AND POPULAR MUSIC PRODUCTION IN THE UK. James Mark Percival. A thesis submitted for the degree of Doctor of Philosophy, Department of Film and Media Studies, University of Stirling, July 2007. J Mark Percival - Making music radio: the record industry and popular music production in the UK. For my mother, Elizabeth Ann Percival (née Murphy) (1937-2004) and my father, Stanley Thomas Percival. ABSTRACT Music radio is the most listened to form of radio, and one of the least researched by academic ethnographers. This research project addresses industry structure and agency in an investigation into the relationship between music radio and the record industry in the UK, how that relationship works to produce music radio and to shape the production of popular music. The underlying context for this research is Peterson's production of culture perspective. The research is in three parts: a model of music radio production and consumption, an ethnographic investigation focusing on music radio programmers and record industry pluggers, and an ethnographic investigation into the use of specialist music radio programming by alternative pop and rock artists in Glasgow, Scotland. The research has four main conclusions: music radio continues to be central to the record industry's promotional strategy for new commercial recordings; music radio is increasingly able to mediate the production practices of the popular music industry; that mediation is focused through the social relationship between music radio programmers and record industry pluggers; cultural practices of musicians are developed and mediated by consumption of specialist music radio, as they become part of specialist music radio. -

Corpus Antville

Epistemological Corpus of the Research: music videos referenced by the Antville community between June 2006 and June 2011 on the blog of the same name, www.videos.antville.org

| Date | Post title |
|---|---|
| 01-06-2006 | videos at multiple speeds? |
| 01-06-2006 | music videos based on cars? |
| 01-06-2006 | can anyone tell me videos with machine guns? |
| 01-06-2006 | Muse "Supermassive Black Hole" (Dir: Floria Sigismondi) |
| 01-06-2006 | Skye - "What's Wrong With Me" |
| 01-06-2006 | Madison "Radiate". Directed by Erin Levendorf |
| 01-06-2006 | PANASONIC “SHARE THE AIR” VIDEO CONTEST |
| 01-06-2006 | Number of times 'panasonic' mentioned in last post |
| 01-06-2006 | Please Panasonic |
| 01-06-2006 | Paul Oakenfold "FASTER KILL FASTER PUSSYCAT" : Dir. Jake Nava |
| 01-06-2006 | Presets "Down Down Down" : Dir. Presets + Kim Greenway |
| 01-06-2006 | Lansing-Dreiden "A Line You Can Cross" : Dir. |
| 01-06-2006 | SnowPatrol "You're All I Have" : Dir. |
| 01-06-2006 | Wolfmother "White Unicorn" : Dir. Kris Moyes? |
| 01-06-2006 | Fiona Apple - Across The Universe - Director - Paul Thomas Anderson. |
| 02-06-2006 | Ayumi Hamasaki - Real Me - Director: Ukon Kamimura |
| 02-06-2006 | They Might Be Giants - "Dallas" d. Asterisk |
| 02-06-2006 | Bersuit Vergarabat "Sencillamente" |
| 02-06-2006 | Lily Allen - LDN (epk promo) directed by Ben & Greg |
| 02-06-2006 | Jamie T 'Sheila' directed by Nima Nourizadeh |
| 02-06-2006 | Farben Lehre ''Terrorystan'', Director: Marek Gluziński |
| 02-06-2006 | Chris And The Other Girls - Lullaby (director: Christian Pitschl, camera: Federico Salvalaio) |
| 02-06-2006 | Megan Mullins ''Ain't What It Used To Be'' |
| 02-06-2006 | Mr. |