Structures, Learning and Ergosystems: Chapters 1-4, 6

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Fma-Special-Edition-Balisong.Pdf

Publisher: Steven K. Dowd. Contributing Writers: Leslie Buck; Stacey K. Sawa, a.k.a. ZENGHOST; Chuck Gollnick.
Contents: From the Publishers Desk; History; A Blade is Born; Introduction to the Balisong; Modern Custom Balisong Knives; Balisong Master Nilo Limpin; Balisong Information Centers.
Filipino Martial Arts Digest is published and distributed by: FMAdigest, 1297 Eider Circle, Fallon, Nevada 89406. Visit us on the World Wide Web: www.fmadigest.com
The FMAdigest is published quarterly. Each issue features practitioners of martial arts and other internal arts of the Philippines. Other features include historical, theoretical and technical articles; reflections, Filipino martial arts, healing arts and other related subjects. The ideas and opinions expressed in this digest are those of the authors or instructors being interviewed and are not necessarily the views of the publisher or editor. We solicit comments and/or suggestions. Articles are also welcome. The authors and publisher of this digest are not responsible for any injury, which may result from following the instructions contained in the digest. Before embarking on any of the physical activities described in the digest, the reader should consult his or her physician for advice regarding their individual suitability for performing such activity.
From the Publishers Desk: Kumusta. I personally have been intrigued with the Balisong ever since first visiting the Philippines in the early 70’s. I have seen some Masters of the balisong do very amazing things with the balisong. And I have a small collection that I obtained through the years while being in the Philippines. In this Special Edition on the Balisong, Chuck Gollnick, Stacey K. …

April Newsletter 2013.Cdr

OKCA 38th Annual KNIFE SHOW • April 13-14 • Lane Events Center EXHIBIT HALL • Eugene, Oregon. April 2013. Our international membership is happily involved with “Anything that goes ‘cut’!”
YOU ARE INVITED TO THE OKCA 38th ANNUAL KNIFE SHOW & SALE, April 13 - 14, Lane Events Center & Fairgrounds, Eugene, Oregon. In the super large EXHIBIT HALL. Now 360 Tables!
WELCOME to the Oregon Knife Collectors Association Special Show Knewslettter. On Saturday, April 13, and Sunday, April 14, we want to welcome you and your friends and family to the famous and spectacular OREGON KNIFE SHOW & SALE. Now the Largest organizational Knife Show East & West of the Mississippi River. The OREGON KNIFE SHOW happens just once a year, at the Lane Events Center …
… have a Balisong/Butterfly knife Tournament, Blade Forging, Flint Knapping, quality Kitchen Cutlery seminar, Martial Arts, Scrimshaw, Self Defense, Sharpening Knives, Wood Carving and a special seminar on “What do you do with that kitchen knife you have.” And don't miss the FREE knife identification and appraisal by Tommy Clark from Marion, VA (Table N01) - Mark Zalesky from Knoxville TN (Table N02) - Mike Silvey on military knives is from Pollock Pines CA (Table J14) and Sheldon …
… Auction Saturday only. Just like eBay but real and live. Anyone can enter to bid in the Silent Auction. See the display cases at the Club table to make a bid on some extra special knives. Along the side walls, we will have twenty four MUSEUM QUALITY KNIFE AND CUTLERY COLLECTIONS ON DISPLAY for your enjoyment and education, in addition to our hundreds of tables of hand-made, factory and antique knives for sale.

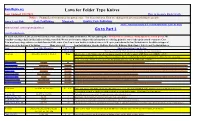

Laws for Folder Type Knives Go to Part 1

KnifeRights.org Laws for Folder Type Knives Last Updated 1/12/2021 How to measure blade length. Notice: Finding Local Ordinances has gotten easier. Try these four sites. They are adding local government listings frequently. Amer. Legal Pub., Code Publishing, Municode, Quality Code Publishing. AKTI American Knife & Tool Institute Knife Laws by State. Admins E-Mail: [email protected] https://handgunlaw.us In many states Knife Laws are not well defined. Some states say very little about knives. We have put together information on carrying a folding type knife in your pocket. We consider carrying a knife in this fashion as being concealed. We are not attorneys and post this information as a starting point for you to take up the search even more. Case Law may have a huge influence on knife laws in all the states. Case Law is even harder to find references to. It is up to you to know the law. Definitions for the different types of knives are at the bottom of the listing. Many states still ban Switchblades, Gravity, Ballistic, Butterfly, Balisong, Dirk, Gimlet, Stiletto and Toothpick Knives. State Law Title/Chapt/Sec Legal Yes/No Short description from the law. Folder/Length Wording edited to fit. Click on state or city name for more information. Montana 45-8-316, 45-8-317, 45-8-3 None Effective Oct. 1, 2017 Knife concealed no longer considered a deadly weapon per MT Statute as per HB251 (2017). Local governments may not enact or enforce an ordinance, rule, or regulation that restricts or prohibits the ownership, use, possession or sale of any type of knife that is not specifically prohibited by state law.

A Guide to Switchblades, Dirks and Daggers Second Edition December, 2015

A Guide to Switchblades, Dirks and Daggers. Second Edition, December, 2015. How to tell if a knife is “illegal.” An analysis of current California knife laws. By: This article is available online at: http://bit.ly/knifeguide
I. Introduction
California has a variety of criminal laws designed to restrict the possession of knives. This guide has two goals:
• Explain the current California knife laws using plain language.
• Help individuals identify whether a knife is or is not “illegal.”
This information is presented as a brief synopsis of the law and not as legal advice. Use of the guide does not create a lawyer/client relationship. Laws are interpreted differently by enforcement officers, prosecuting attorneys, and judges. Dmitry Stadlin suggests that you consult legal counsel for guidance.
II. Table of Contents
I. Introduction
II. Table of Contents
III. Table of Authorities
IV. About the Author
A. Qualifications to Write On This Subject
B. Contact Information
V. About the Second Edition
A. Impact …

OKCA 29th Annual • April 17-18

OKCA 29th Annual KNIFE SHOW • April 17-18 • Lane County Fairgrounds & Convention Center • Eugene, Oregon. April 2004. Our international membership is happily involved with “Anything that goes ‘cut’!”
YOU ARE INVITED TO THE OKCA 29th ANNUAL KNIFE SHOW & SALE. In the freshly refurbished EXHIBIT HALL. Now 470 Tables!
You Could Win... a new Brand Name knife or other valuable prize, just for filling out a door prize coupon. Do it now so you don't forget! You can also... buy tickets in our Saturday (only) RAFFLE for chances to WIN even more fabulous knife prizes. Stop at the OKCA table before 5:00 p.m. Saturday. Tickets are only $1 each, or 6 for $5.
Free Identification & Appraisal: Ask for Bernard Levine, author of Levine's Guide to Knives and Their Values, at table N-01.
WELCOME to the Oregon Knife Collectors Association Special Show Knewslettter. On Saturday, April 17 and Sunday, April 18, we want to welcome you and your friends and family to the famous and spectacular OREGON KNIFE SHOW & SALE. Now the Largest Knife Show in the World! The OREGON KNIFE SHOW happens just once a year, at the Lane County Fairgrounds & …
At the Show, don't miss the special live demonstrations Saturday and Sunday. This year we have Martial Arts, Scrimshaw, Engraving, Knife Sharpening, Blade Grinding Competition, Knife Performance Testing and Flint Knapping. New this year: big screen live TV close-ups of the craftsmen at work. And don't miss the FREE knife identification and appraisal by renowned knife author BERNARD LEVINE (Table N-01).
… your name to be posted near the prize showcases (if you miss the posting, we will MAIL your prize). Along the side walls, we will have more than a score of MUSEUM QUALITY KNIFE AND SWORD COLLECTIONS ON DISPLAY for your enjoyment, in addition to our hundreds of tables of hand-made, factory, and antique knives …

The Lure of the Balisong Some Weapons Experts Say the Balisong Or Butterfly Knife Opens Faster Than a Switchblade

Lye’s law. The Lure of the Balisong. Some weapons experts say the balisong or butterfly knife opens faster than a switchblade. It certainly makes a great impression when twirled in the hand and opening and closing the blade with each resolution. But is it practical, or legal? By William Lye
The balisong is a beautifully made knife, especially those produced by the well-known US knife maker, Benchmade. However, in most states and territories of Australia, the balisong is a prohibited weapon. Most states (except WA and ACT) refer to it as the butterfly knife (presumably …
… and thus portrays a bad image. In many states of the USA it is illegal to own, possess or carry a balisong except in the state of Oregon (the home of Benchmade). In some parts of Europe and Asia, the balisong is legally and readily available for purchase. The balisong is most likely banned …
… those mishaps! However, the balisong is probably the last knife most would choose for self-defence. Its use relies too much on fine motor skills. Its fancy and sometimes aerial artistic motion might leave some people in awe of the balisong, but I would certainly be more worried facing a competent knifer with a kitchen knife than a person with a balisong capable of doing only fancy twirls! In a stressful situation, the last thing one would be thinking about is how to flail the balisong. It is indeed very tricky to deploy properly. In Australia, there is no good reason for anyone to own or carry a balisong other than to train in one’s martial arts style.
Balisongs: prohibited weapons in Australia.

2 0 1 8 Catalog

LifeSharp is more than a lifetime guarantee. It’s a creed to live by. It’s the ever-evolving pursuit of excellence. A never-ending journey to master your craft, hone your skills and soak up some wisdom along the way. At Benchmade, every knife we make is a learning experience. We strive to make each knife better than the knife that came before it, as we chase the elusive perfection. And, once that knife passes from our hands to yours, we’ll ensure that your Benchmade stays in pristine condition for many generations to come. Our word is our bond and we’ll hold up our end keeping your knife sharp, but, it’s up to you to keep your LifeSharp.
2018 CATALOG
HOW TO CHOOSE YOUR BENCHMADE KNIFE
ACTIVITY. Outdoor, tactical, every day use; there are many uses for a knife, and some knives are better for certain activities than others.
BLADE STYLE. While the cutting edge does the work, the edge is applied to the cutting surface in different ways depending on the shape of the blade. Blade shapes with larger radiuses may be optimal for hunting, while blade shapes with hard angles, like the tanto, may be better for tactical applications.
HOW WILL YOU USE YOUR KNIFE? TACTICAL: Designed for hard, immediate use, these high-strength knives feature robust mechanisms for situations where …
KEY KNIFE FEATURES. AXIS®: Exclusive to Benchmade, the AXIS® lock is truly ambidextrous and extremely strong.
SHAPES. Clip-point: Blade contains a sharp beak, typically forward of the … Sheepsfoot: The spine of the blade ‘rolls’ into the tip, creating a …

Semantic Specificity of Perception Verbs in Maniq

Semantic specificity of perception verbs in Maniq. © Ewelina Wnuk 2016. Printed and bound by Ipskamp Drukkers. Cover photo: A Maniq campsite, Satun province, Thailand, September 2011. Photograph by Krittanon Thotsagool.
Semantic specificity of perception verbs in Maniq. Doctoral thesis to obtain the degree of doctor from Radboud Universiteit Nijmegen, on the authority of the Rector Magnificus, prof. dr. J.H.J.M. van Krieken, according to the decision of the Council of Deans, to be defended in public on Friday, 16 September 2016, at 10:30 precisely by Ewelina Wnuk, born on 28 July 1984 in Leżajsk, Poland.
Supervisors: Prof. dr. A. Majid, Prof. dr. S.C. Levinson. Co-supervisor: Dr. N. Burenhult (Lund University, Sweden). Manuscript committee: Prof. dr. P.C. Muysken, Prof. dr. N. Evans (Australian National University, Canberra, Australia), Dr. N. Kruspe (Lund University, Sweden).
The research reported in this thesis was supported by the Max-Planck-Gesellschaft zur Förderung der Wissenschaften, München, Germany. For my parents, Zofia and Stanisław.
Contents
Acknowledgments
Abbreviations
1 General introduction
1.1 Aim and scope
1.2 Theoretical background to verbal semantic specificity

Through a Mind Darkly. An Empirically-Informed Philosophical Perspective on Systematic Knowledge Acquisition and Cognitive Limitations

Through a mind darkly. An empirically-informed philosophical perspective on systematic knowledge acquisition and cognitive limitations. Helen De Cruz. © Copyright by Helen De Cruz, 2011. All rights reserved.
PhD-dissertation of University of Groningen. Title: Through a mind darkly. An empirically-informed philosophical perspective on systematic knowledge acquisition and cognitive limitations. Author: H. L. De Cruz. ISBN 978-90-367-5182-7 (ISBN print version: 978-90-367-5181-0). Publisher: University of Groningen, The Netherlands. Printed by: Reproduct, Ghent, Belgium.
Cover illustration: The externalized thinker. This image is based on August Rodin’s bronze sculpture, The Thinker (Le Penseur, 1880). It illustrates one of the main theses of this dissertation: cognition is not a solitary and internalized process, but a collective, distributed and externalized activity.
RIJKSUNIVERSITEIT GRONINGEN. Through a mind darkly. An empirically-informed philosophical perspective on systematic knowledge acquisition and cognitive limitations. Doctoral thesis to obtain the doctorate in Philosophy at the Rijksuniversiteit Groningen, on the authority of the Rector Magnificus, dr. E. Sterken, to be defended in public on Thursday, 27 October 2011, at 16:15 by Helen Lucretia De Cruz, born on 1 September 1978 in Ghent.
Supervisor: Prof. dr. mr. I. E. Douven. Assessment committee: Prof. dr. M. Muntersbjorn, Prof. dr. J. Peijnenburg, Prof. dr. L. Horsten.
CONTENTS
List of figures
List of tables
List of papers
Acknowledgments
Preface
1 Introduction
1.1 Two puzzles on scientific knowledge
1.1.1 Naturalism and knowledge acquisition
1.1.2 Puzzle 1: The boundedness of human reasoning

Phonological, Semantic and Root Activation in Spoken Word Recognition in Arabic: Evidence from Eye Movements

Phonological, Semantic and Root Activation in Spoken Word Recognition in Arabic: Evidence from Eye Movements, by Abdulrahman Alamri. Thesis submitted to the Faculty of Graduate and Postdoctoral Studies in partial fulfillment of the requirements for the Ph.D. degree in Linguistics. Thesis supervisor: Tania S. Zamuner. Department of Linguistics, Faculty of Arts, University of Ottawa. © Abdulrahman Alamri, Ottawa, Canada, 2017.
Abstract: Three eyetracking experiments were conducted to explore the effects of phonological, semantic and root activation in spoken word recognition (SWR) in Saudi Arabian Arabic. Arabic roots involve both phonological and semantic information; therefore, a series of three studies were conducted to isolate the effect of the root independently from phonological and semantic effects. Each experiment consisted of a series of trials. On each trial, participants were presented with a display with four images: a target, a competitor, and two unrelated images. Participants were asked to click on the target image. Participants' proportional fixations to the four areas of interest and their reaction times (RT) were automatically recorded and analyzed. The assumption is that eye movements to the different types of images and RTs reflect degrees of lexical activation. Experiment 1 served as a foundation study to explore the nature of phonological, semantic and root activation. Experiment 2A and 2B aimed to explore the effect of the Arabic root as a function of semantic transparency and phonological onset similarity. Growth Curve Analyses (GCA; Mirman, 2014) were used to analyze differences in target and competitor fixations across conditions. Results of these experiments highlight the importance of phonological, semantic and root effects in SWR in Arabic.

University of Groningen Through a Mind Darkly De Cruz, Helen Lucretia

University of Groningen. Through a mind darkly. De Cruz, Helen Lucretia.
IMPORTANT NOTE: You are advised to consult the publisher's version (publisher's PDF) if you wish to cite from it. Please check the document version below.
Document Version: Publisher's PDF, also known as Version of record. Publication date: 2011. Link to publication in University of Groningen/UMCG research database.
Citation for published version (APA): De Cruz, H. L. (2011). Through a mind darkly: an empirically-informed philosophical perspective on systematic knowledge acquisition and cognitive limitations. [s.n.].
Copyright: Other than for strictly personal use, it is not permitted to download or to forward/distribute the text or part of it without the consent of the author(s) and/or copyright holder(s), unless the work is under an open content license (like Creative Commons). The publication may also be distributed here under the terms of Article 25fa of the Dutch Copyright Act, indicated by the “Taverne” license. More information can be found on the University of Groningen website: https://www.rug.nl/library/open-access/self-archiving-pure/taverne-amendment.
Take-down policy: If you believe that this document breaches copyright please contact us providing details, and we will remove access to the work immediately and investigate your claim.
Downloaded from the University of Groningen/UMCG research database (Pure): http://www.rug.nl/research/portal. For technical reasons the number of authors shown on this cover page is limited to 10 maximum. Download date: 07-10-2021
Through a mind darkly. An empirically-informed philosophical perspective on systematic knowledge acquisition and cognitive limitations. Helen De Cruz. © Copyright by Helen De Cruz, 2011.

Knives and the Second Amendment

Knives and the Second Amendment. By David B. Kopel,1 Clayton E. Cramer2 & Joseph Edward Olson3. This article is a working draft that will be published in 2013 in the University of Michigan Journal of Law Reform.
Abstract: This Article is the first scholarly analysis of knives and the Second Amendment. Knives are clearly among the “arms” which are protected by the Second Amendment. Under the Supreme Court’s standard in District of Columbia v. Heller, knives are Second Amendment “arms” because they are “typically possessed by law-abiding citizens for lawful purposes,” including self-defense. Bans of knives which open in a convenient way (bans on switchblades, gravity knives, and butterfly knives) are unconstitutional. Likewise unconstitutional are bans on folding knives which, after being opened, have a safety lock to prevent inadvertent closure. Prohibitions on the carrying of knives in general, or of particular knives, are unconstitutional. There is no knife which is more dangerous than a …
1 Adjunct Professor of Advanced Constitutional Law, Denver University, Sturm College of Law. Research Director, Independence Institute, Denver, Colorado. Associate Policy Analyst, Cato Institute, Washington, D.C. Kopel is the author of 15 books and over 80 scholarly journal articles, including the first law school textbook on the Second Amendment: NICHOLAS J. JOHNSON, DAVID B. KOPEL, GEORGE A. MOCSARY, & MICHAEL P. O’SHEA, FIREARMS LAW AND THE SECOND AMENDMENT: REGULATION, RIGHTS, AND POLICY (Aspen Publishers, 2012). www.davekopel.org.
2 Adjunct History Faculty, College of Western Idaho. Cramer is the author of CONCEALED WEAPON LAWS OF THE EARLY REPUBLIC: DUELING, SOUTHERN VIOLENCE, AND MORAL REFORM (1999) (cited by Justice Breyer in McDonald v. …