Cost-Configurable Cloud Storage System Architecture Designs

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Vimal Daga Chief Technical Officer (CTO) – Linuxworld Informatics Pvt Ltd Professional Experience & Certifications

Vimal Daga Chief Technical Officer (CTO) – LinuxWorld Informatics Pvt Ltd Professional Experience & Certifications: I Professional Experience During this period, has been engaged with various corporate clients in different domains and has been involved in imparting corporate training programs and consultancy for various technologies, covering the following: A. Sr. Machine Learning / Deep Learning / Data Scientist / NLP Consultant and Researcher. Expertise in the fields of Artificial Intelligence, Deep Learning, and Computer Vision, with the ability to solve problems such as Face Detection, Face Recognition, and Object Detection using Deep Neural Networks (CNN, DNN, RNN, Convolution Networks etc.) and Optical Character Detection and Recognition (OCD & OCR). Worked with tools such as TensorFlow, Caffe/Caffe2, Keras, Theano, PyTorch etc. Built prototypes related to deep learning problems in the field of computer vision. Publications at top international conferences/journals in fields related to computer vision / deep learning / machine learning / AI. Experience with tools and frameworks like Microsoft Azure ML, Chat Bot Framework/LUIS, IBM Watson / Conversation Service, Google TensorFlow / Python for Machine Learning (e.g. scikit-learn), and open source ML libraries and tools like Apache Spark. Worked extensively on Data Science, Big Data, data structures, statistics, and algorithms like Regression, Classification etc. Working knowledge of Supervised / Unsupervised learning (Decision Trees, Logistic Regression, SVMs, GBM, etc.). Expertise in Sentiment Analysis, Entity Extraction, Natural Language Understanding (NLU), and Intent Recognition. Strong understanding of text pre-processing and normalization techniques, such as tokenization, POS tagging, and parsing, and how they work at a basic level, and of NLP toolkits such as NLTK, Gensim, SpaCy, Apache UIMA etc. Hands-on experience with datasets including text, images, and other logs or clickstreams.

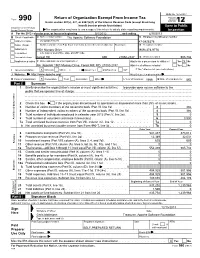

Return of Organization Exempt from Income

OMB No. 1545-0047 Return of Organization Exempt From Income Tax Form 990 Under section 501(c), 527, or 4947(a)(1) of the Internal Revenue Code (except black lung benefit trust or private foundation) Open to Public Department of the Treasury Internal Revenue Service The organization may have to use a copy of this return to satisfy state reporting requirements. Inspection A For the 2011 calendar year, or tax year beginning 5/1/2011 , and ending 4/30/2012 B Check if applicable: C Name of organization The Apache Software Foundation D Employer identification number Address change Doing Business As 47-0825376 Name change Number and street (or P.O. box if mail is not delivered to street address) Room/suite E Telephone number Initial return 1901 Munsey Drive (909) 374-9776 Terminated City or town, state or country, and ZIP + 4 Amended return Forest Hill MD 21050-2747 G Gross receipts $ 554,439 Application pending F Name and address of principal officer: H(a) Is this a group return for affiliates? Yes X No Jim Jagielski 1901 Munsey Drive, Forest Hill, MD 21050-2747 H(b) Are all affiliates included? Yes No I Tax-exempt status: X 501(c)(3) 501(c) ( ) (insert no.) 4947(a)(1) or 527 If "No," attach a list. (see instructions) J Website: http://www.apache.org/ H(c) Group exemption number K Form of organization: X Corporation Trust Association Other L Year of formation: 1999 M State of legal domicile: MD Part I Summary 1 Briefly describe the organization's mission or most significant activities: to provide open source software to the public that we sponsor free of charge 2 Check this box if the organization discontinued its operations or disposed of more than 25% of its net assets. -

Trafficcontrol Documentation

trafficcontrol Documentation Release 3 jvd Jun 19, 2018 Contents 1 CDN Basics 1.1 CDN Basics 2 Traffic Control Overview 2.1 Traffic Control Overview 3 Administrator’s Guide 3.1 Administrator’s Guide 4 Developer’s Guide 4.1 Developer’s Guide 5 APIs 5.1 APIs 6 FAQ 6.1 FAQ 7 Indices and Tables 7.1 Glossary The vast majority of today’s Internet traffic is media files being sent from a single source to many thousands or even millions of destinations. Content Delivery Networks make that one-to-many distribution possible in an economical way. Traffic Control is an Open Source implementation of a Content Delivery Network. The following documentation sections are available: CHAPTER 1 CDN Basics A review of the basic functionality of a Content Delivery Network. 1.1 CDN Basics Traffic Control is a CDN control plane; see the topics below to familiarize yourself with the basic concepts of a CDN. 1.1.1 Content Delivery Networks The vast majority of today’s Internet traffic is media files (often video or audio) being sent from a single source (the Content Provider) to many thousands or even millions of destinations (the Content Consumers). Content Delivery Networks are the technology that makes that one-to-many distribution possible in an economical way. A Content Delivery Network (CDN) is a distributed system of servers for delivering content over HTTP.

Apache Ambari Operations (May 17, 2018)

Hortonworks Data Platform Apache Ambari Operations (May 17, 2018) docs.cloudera.com Hortonworks Data Platform May 17, 2018 Hortonworks Data Platform: Apache Ambari Operations Copyright © 2012-2018 Hortonworks, Inc. Some rights reserved. The Hortonworks Data Platform, powered by Apache Hadoop, is a massively scalable and 100% open source platform for storing, processing and analyzing large volumes of data. It is designed to deal with data from many sources and formats in a very quick, easy and cost-effective manner. The Hortonworks Data Platform consists of the essential set of Apache Hadoop projects including MapReduce, Hadoop Distributed File System (HDFS), HCatalog, Pig, Hive, HBase, ZooKeeper and Ambari. Hortonworks is the major contributor of code and patches to many of these projects. These projects have been integrated and tested as part of the Hortonworks Data Platform release process and installation and configuration tools have also been included. Unlike other providers of platforms built using Apache Hadoop, Hortonworks contributes 100% of our code back to the Apache Software Foundation. The Hortonworks Data Platform is Apache-licensed and completely open source. We sell only expert technical support, training and partner-enablement services. All of our technology is, and will remain free and open source. Please visit the Hortonworks Data Platform page for more information on Hortonworks technology. For more information on Hortonworks services, please visit either the Support or Training page. Feel free to Contact Us directly to discuss your specific needs. Except where otherwise noted, this document is licensed under Creative Commons Attribution ShareAlike 4.0 License. http://creativecommons.org/licenses/by-sa/4.0/legalcode ii Hortonworks Data Platform May 17, 2018 Table of Contents 1. -

Annual Report

ANNUAL REPORT FY2017 [1 May 2016 – 30 April 2017] THE APACHE® SOFTWARE FOUNDATION (ASF) Open. Innovation. Community. Are you powered by Apache? The answer is most likely “yes”. Apache projects serve as the backbone for some of the world's most visible and widely used applications in Artificial Intelligence and Deep Learning, Big Data, Build Management, Cloud Computing, Content Management, DevOps, IoT and Edge Computing, Mobile, Servers, and Web Frameworks, among other categories. Dependency on Apache projects for critical applications cannot go underestimated, from the 2.6- terabyte, Pulitzer Prize-winning Panama Papers investigation to system-wide information management at the US Federal Aviation Administration to capturing 500B events each day at Netflix to enabling real-time financial services at Lloyds Banking Group to simplifying mobile application development across Android/Blackberry/iOS/Ubuntu/Windows/Windows Phone/OS X platforms to processing requests at Facebook’s 300-petabyte data warehouse to powering clouds for Apple, Disney, Huawei, Tata, and countless others. Every day, more programmers, solutions architects, individual users, educators, researchers, corporations, government agencies, and enthusiasts around the world are choosing Apache software for development tools, libraries, frameworks, visualizers, end-user productivity solutions, and more. Advancing the ASF’s mission of providing software for the public good, momentum over the past fiscal year includes: 35M page views per week across apache.org; Web requests received from every Internet-connected country on the planet; Apache OpenOffice exceeded 200M downloads; Apache Groovy downloaded 12M times during the first 4 months of 2017; Nearly 300 new code contributors and 300-400 new people filing issues each month Bringing value has been a driving force behind Apache’s diverse projects. -

Deflect Documentation Release 1.4.0

Deflect Documentation Release 1.4.0 Jul 02, 2019 What is Deflect 1 About Deflect 1.1 Context 1.2 Rationale 1.3 Who is Deflect for? 1.4 Where to start 1.5 Setup 1.6 Our approach 1.6.1 Design 1.6.2 What protection we offer 1.6.3 How Deflect works 1.7 Details and limitations 1.7.1 Cached components 1.7.2 Cookies 1.7.3 Is Deflect working? 1.7.4 SSL 1.7.5 DNS 1.7.6 Deflect configuration 2 Deflect features 2.1 eQpress hosting 2.1.1 eQPress FAQ 2.1.2 What is eQpress 2.2 Control panel 2.2.1 Sidebar 2.2.2 Website settings 2.2.3 Report a DDoS attack 2.2.4 Challenging requests

IPS Signature Release Note V7.16.71

SOPHOS IPS Signature Update Release Notes Version : 7.16.71 Release Date : 30th January 2020 IPS Signature Update Release Information Upgrade Applicable on IPS Signature Release Version 7.16.70 Sophos Appliance Models XG-550, XG-750, XG-650 Upgrade Information Upgrade type: Automatic Compatibility Annotations: None Introduction The Release Note document for IPS Signature Database Version 7.16.71 includes support for the new signatures. The following sections describe the release in detail. New IPS Signatures The Sophos Intrusion Prevention System shields the network from known attacks by matching the network traffic against the signatures in the IPS Signature Database. These signatures are developed to significantly increase detection performance and reduce the false alarms. Report false positives at [email protected], along with the application details. January 2020 Page 2 of 97 IPS Signature Update This IPS Release includes Nine Hundred and Sixty Four(964) signatures to address Seven Hundred and Forty(740) vulnerabilities. New signatures are added for the following vulnerabilities: Name CVE–ID Category Severity BROWSER-CHROME Google Chrome Browser CVE-2008- Browsers 2 CVE-2008-5750 Remote 5750 Parameter Injection BROWSER-CHROME Google Chrome CVE-2019- FileReader CVE-2019- Browsers 2 5786 5786 Use After Free (Published Exploit) BROWSER-CHROME Google Chrome CVE-2019- Browsers 1 FileReader CVE-2019- 5786 5786 Use After Free BROWSER-FIREFOX Mozilla Firefox CSS CVE-2006- Browsers 2 Letter-Spacing Heap 1730 Overflow BROWSER-FIREFOX Mozilla -

Return of Organization Exempt from Income

OMB No. 1545-0047 Return of Organization Exempt From Income Tax Form 990 Under section 501(c), 527, or 4947(a)(1) of the Internal Revenue Code (except black lung benefit trust or private foundation) Open to Public Department of the Treasury Internal Revenue Service The organization may have to use a copy of this return to satisfy state reporting requirements. Inspection A For the 2012 calendar year, or tax year beginning 5/1/2012 , and ending 4/30/2013 B Check if applicable: C Name of organization The Apache Software Foundation D Employer identification number Address change Doing Business As 47-0825376 Name change Number and street (or P.O. box if mail is not delivered to street address) Room/suite E Telephone number Initial return 1901 Munsey Drive (909) 374-9776 Terminated City, town or post office, state, and ZIP code Amended return Forest Hill MD 21050-2747 G Gross receipts $ 905,732 Application pending F Name and address of principal officer: H(a) Is this a group return for affiliates? Yes X No Jim Jagielski 1901 Munsey Drive, Forest Hill, MD 21050-2747 H(b) Are all affiliates included? Yes No I Tax-exempt status: X 501(c)(3) 501(c) ( ) (insert no.) 4947(a)(1) or 527 If "No," attach a list. (see instructions) J Website: http://www.apache.org/ H(c) Group exemption number K Form of organization: X Corporation Trust Association Other L Year of formation: 1999 M State of legal domicile: MD Part I Summary 1 Briefly describe the organization's mission or most significant activities: to provide open source software to the public that we sponsor free of charge 2 Check this box if the organization discontinued its operations or disposed of more than 25% of its net assets. -

IPS Signature Release Note V7.16.70

SOPHOS IPS Signature Update Release Notes Version : 7.16.70 Release Date : 28th January 2020 IPS Signature Update Release Information Upgrade Applicable on IPS Signature Release Version 7.16.69 Sophos Appliance Models XG-550, XG-750, XG-650 Upgrade Information Upgrade type: Automatic Compatibility Annotations: None Introduction The Release Note document for IPS Signature Database Version 7.16.70 includes support for the new signatures. The following sections describe the release in detail. New IPS Signatures The Sophos Intrusion Prevention System shields the network from known attacks by matching the network traffic against the signatures in the IPS Signature Database. These signatures are developed to significantly increase detection performance and reduce the false alarms. Report false positives at [email protected], along with the application details. January 2020 Page 2 of 51 IPS Signature Update This IPS Release includes Five Hundred and Seven(507) signatures to address Three Hundred and Sixty Four(364) vulnerabilities. New signatures are added for the following vulnerabilities: Name CVE–ID Category Severity BROWSER-FIREFOX Mozilla Firefox CVE- 2010-3765 CVE-2010- Browsers 4 document.write And 3765 DOM Insertions Memory Corruption BROWSER-FIREFOX (Published Exploit) Mozilla Firefox CVE-2010- Browsers 4 document.write And 3765 DOM Insertions Memory Corruption BROWSER-IE Microsoft Internet Explorer CVE-2008- ActiveX Navigate Browsers 4 4258 Handling Code Execution BROWSER-IE Microsoft Internet Explorer CVE- CVE-2012- 2012-4969 Browsers -

Performance Evaluation of the Apache Traffic Server and Varnish Reverse

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by NORA - Norwegian Open Research Archives UNIVERSITY OF OSLO Department of Informatics Performance Evaluation of the Apache Traffic Server and Varnish Reverse Proxies Shahab Bakhtiyari Network and System Administration University of Oslo May 23, 2012 Contents 1 Introduction 1.1 Why Cache? 1.2 Motivation 1.3 Problem statement 1.4 Thesis Outline 2 Background and Related Work 2.1 Web servers 2.1.1 Static web resources 2.1.2 Dynamic web resources 2.2 Cache servers 2.2.1 Client side proxy 2.2.2 Organization and ISP proxy caches 2.2.3 Server ISP or CDN reverse proxy caches 2.2.4 Server side reverse proxy cache 2.2.5 Distributed Caches and ICP 2.3 Cache replacement algorithms 2.3.1 Replacement strategies 2.4 HTTP 2.4.1 HTTP Message Structure 2.5 Caching software 2.5.1 Apache Traffic Server 2.5.2 Varnish 2.5.3 Others 2.6 Challenges 2.6.1 Realistic Workloads 2.6.2 Lack of recent works 2.6.3 Tools specifically designed for cache benchmarking 3 Model and Methodology 3.1 Approach 3.2 Test environment 3.3 Web Polygraph

Low Latency for Cloud Data Management

Low Latency for Cloud Data Management Dissertation with the aim of achieving a doctoral degree at the Faculty of Mathematics, Informatics, and Natural Sciences Submitted at the University of Hamburg by Felix Gessert, 2018 Day of oral defense: December 18th, 2018 The following evaluators recommend the admission of the dissertation: Prof. Dr. Norbert Ritter Prof. Dr. Stefan Deßloch Prof. Dr. Mathias Fischer There are only two hard things in Computer Science: cache invalidation, naming things, and off-by-one errors. – Anonymous ii iii Acknowledgments This dissertation would not have been possible without the support and hard work of numerous other people. First and foremost, I would like to thank my advisor Prof. Norbert Ritter for his help and mentoring that enabled this research. Not only has he always given me the freedom and patience to execute my ideas in different directions, but he has formed my perception that academic research should eventually be practically applicable. Therefore, he is one of the key persons that enabled building a startup from this research. I also deeply enjoyed our joint workshops, talks, tutorials, and conference presentations with the personal development these opportunities gave rise to. I am convinced that without his mentoring and pragmatic attitude neither my research nor entrepreneurial efforts would have worked out this well. I would also like to express my gratitude to my co-advisor Prof. Stefan Deßloch. His insightful questions and feedback on different encounters helped me improve the overall quality of this work. My sincerest thanks also go to my co-founders Florian Bücklers, Hannes Kuhlmann, and Malte Lauenroth. -

Topic: Transmission of Values and Culture in Open Source

Topic: Transmission of Values and Culture in Open Source Research Question: To what extent can open source values and culture be effectively transmitted to new projects? Supervisor: Ing. Aleš Kubíček, Ph.D. Supervisor: Shane Curcuru Student: Sharan Foga Date: 10th January 2019 Table of Contents: Introduction; History and Background; Culture and Apache; Meritocracy; Theory; Methodology; Culture; Assessing “The Apache Way”; Data Source